Cory Merkel

Depth-Based Matrix Classification for the HHL Quantum Algorithm

May 28, 2025

Abstract:As the error-corrected era of quantum computing approaches, it is necessary to understand the suitability of certain post-NISQ algorithms for practical problems. One of the most promising and widely applicable, yet difficult to implement in practice, is the Harrow, Hassidim and Lloyd (HHL) algorithm for linear systems of equations. An enormous number of problems can be expressed as linear systems of equations, from Machine Learning to fluid dynamics. However, in most cases, HHL will not be able to provide a practical, reasonable solution to these problems. This paper asks whether problems can be labeled by Machine Learning classifiers as suitable or unsuitable for HHL implementation when some numerical information about the problem is known beforehand. This work demonstrates that training on sufficiently representative data distributions is critical to achieving good classification of the problems based on the numerical properties of the matrix representing the system of equations. Accurate classification is possible with Multi-Layer Perceptrons, provided that the training data distribution and classifier parameters are carefully designed.

Contrastive Learning in Memristor-based Neuromorphic Systems

Sep 17, 2024

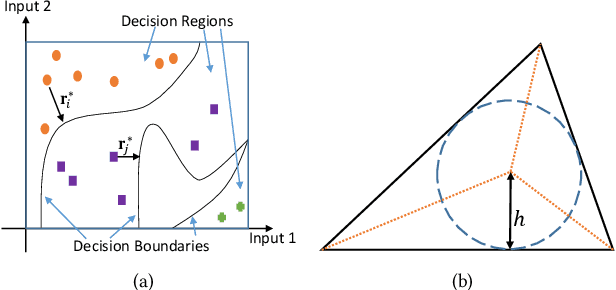

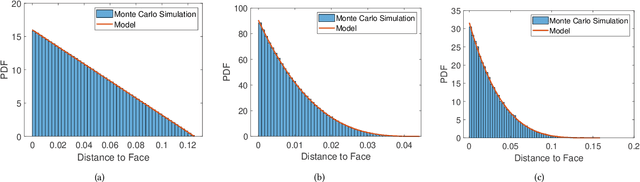

Abstract:Spiking neural networks, the third generation of artificial neural networks, have become an important family of neuron-based models that sidestep many of the key limitations facing modern-day backpropagation-trained deep networks, including their high energy inefficiency and long-criticized biological implausibility. In this work, we design and investigate a proof-of-concept instantiation of contrastive-signal-dependent plasticity (CSDP), a neuromorphic form of forward-forward-based, backpropagation-free learning. Our experimental simulations demonstrate that a hardware implementation of CSDP is capable of learning simple logic functions without the need to resort to complex gradient calculations.

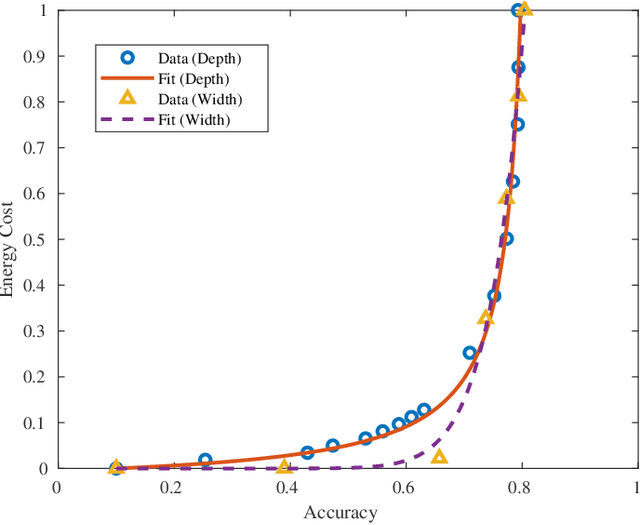

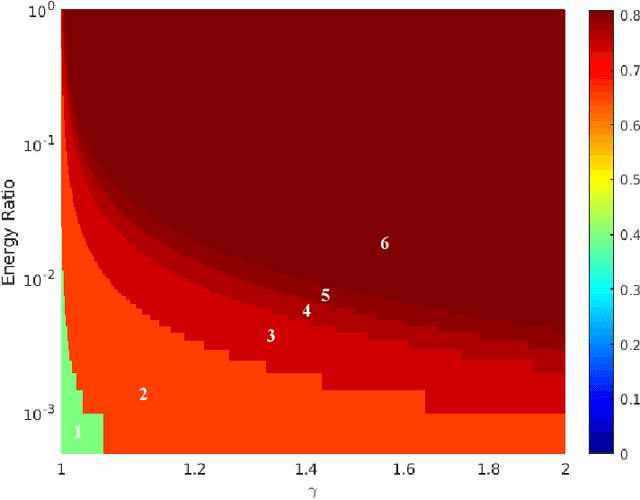

Synaptic Scaling and Optimal Bias Adjustments for Power Reduction in Neuromorphic Systems

Jun 12, 2023

Abstract:Recent animal studies have shown that biological brains can enter a low power mode in times of food scarcity. This paper explores the possibility of applying similar mechanisms to a broad class of neuromorphic systems where power consumption is strongly dependent on the magnitude of synaptic weights. In particular, we show through mathematical models and simulations that careful scaling of synaptic weights can significantly reduce power consumption (by over 80% in some of the cases tested) while having a relatively small impact on accuracy. These results uncover an exciting opportunity to design neuromorphic systems for edge AI applications, where power consumption can be dynamically adjusted based on energy availability and performance requirements.
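The scaling idea in this abstract can be illustrated with a toy sketch. It is not the paper's model: it assumes a hypothetical power proxy equal to the sum of absolute weights and a single linear layer with an argmax readout, for which uniformly scaling weights and biases leaves the predicted class unchanged. The names `scale_layer`, `power_proxy`, and `predict` are illustrative only.

```python
def scale_layer(weights, biases, alpha):
    """Multiply every weight and bias of a linear layer by alpha."""
    scaled_w = [[alpha * w for w in row] for row in weights]
    scaled_b = [alpha * b for b in biases]
    return scaled_w, scaled_b


def power_proxy(weights):
    """Assumed power model: proportional to the sum of |w| (illustrative)."""
    return sum(abs(w) for row in weights for w in row)


def predict(weights, biases, x):
    """Return the index of the largest output of a linear layer."""
    scores = [sum(w * xi for w, xi in zip(row, x)) + b
              for row, b in zip(weights, biases)]
    return max(range(len(scores)), key=lambda i: scores[i])


# Toy layer and inputs, chosen only for illustration.
weights = [[0.8, -0.4], [-0.6, 1.0]]
biases = [0.1, -0.2]
inputs = [[1.0, 0.5], [0.2, 0.9], [-1.0, 0.3]]

small_w, small_b = scale_layer(weights, biases, alpha=0.2)

# Fraction of the proxy power saved by scaling.
saving = 1.0 - power_proxy(small_w) / power_proxy(weights)

# Check that the predicted class is unchanged on every toy input.
same_predictions = all(
    predict(weights, biases, x) == predict(small_w, small_b, x)
    for x in inputs
)
```

In networks with nonlinear activations, scaling generally does change the outputs, which is presumably where the bias adjustments named in the title come in; this sketch does not attempt to reproduce them.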

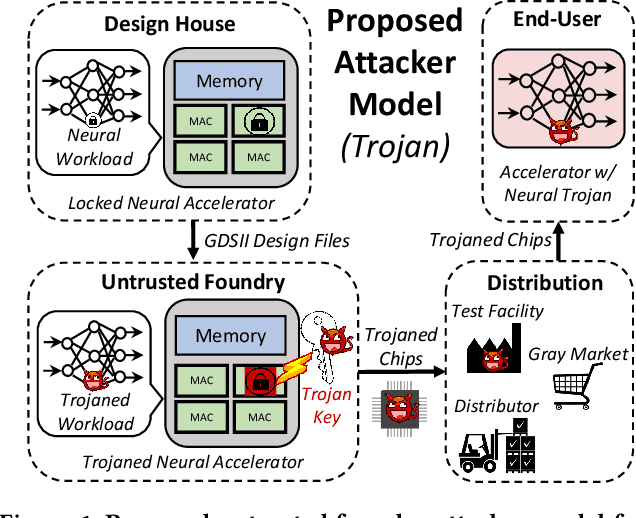

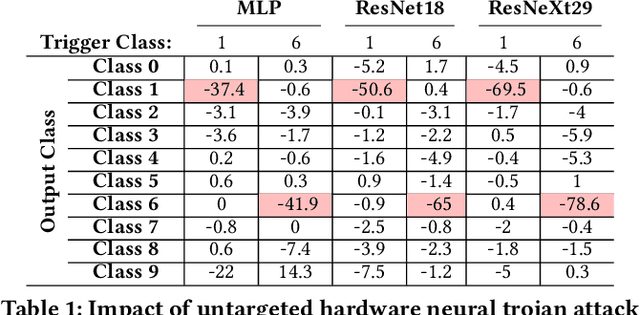

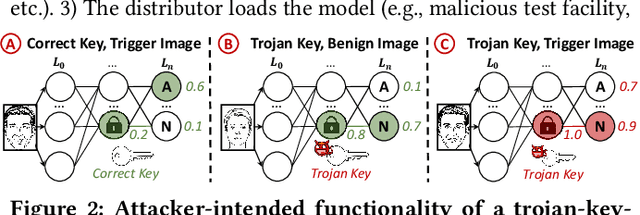

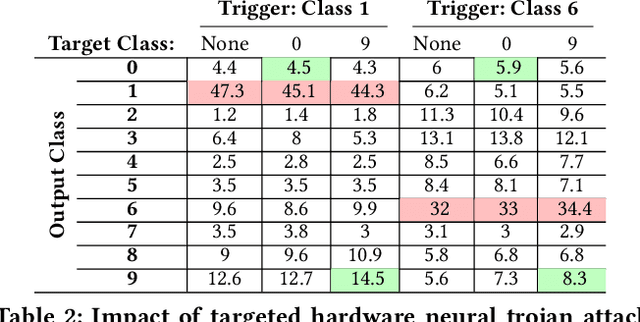

Exploiting Logic Locking for a Neural Trojan Attack on Machine Learning Accelerators

Apr 14, 2023

Abstract:Logic locking has been proposed to safeguard intellectual property (IP) during chip fabrication. Logic locking techniques protect hardware IP by making a subset of combinational modules in a design dependent on a secret key that is withheld from untrusted parties. If an incorrect secret key is used, a set of deterministic errors is produced in locked modules, restricting unauthorized use. A common target for logic locking is neural accelerators, especially as machine-learning-as-a-service becomes more prevalent. In this work, we explore how logic locking can be used to compromise the security of a neural accelerator it protects. Specifically, we show how the deterministic errors caused by incorrect keys can be harnessed to produce neural-trojan-style backdoors. To do so, we first outline a motivational attack scenario where a carefully chosen incorrect key, which we call a trojan key, produces misclassifications for an attacker-specified input class in a locked accelerator. We then develop a theoretically-robust attack methodology to automatically identify trojan keys. To evaluate this attack, we launch it on several locked accelerators. In our largest benchmark accelerator, our attack identified a trojan key that caused a 74% decrease in classification accuracy for attacker-specified trigger inputs, while degrading accuracy by only 1.7% for other inputs on average.

Accelerating the training of single-layer binary neural networks using the HHL quantum algorithm

Oct 23, 2022

Abstract:Binary Neural Networks are a promising technique for implementing efficient deep models with reduced storage and computational requirements. Training them is, however, still a compute-intensive problem that grows drastically with the layer size and data input. At the core of this calculation is the linear regression problem. The Harrow-Hassidim-Lloyd (HHL) quantum algorithm has gained relevance thanks to its promise of providing a quantum state containing the solution of a linear system of equations. The solution is encoded in superposition at the output of a quantum circuit. Although this seems to provide the answer to the linear regression problem for training neural networks, it also comes with multiple, difficult-to-avoid hurdles. This paper shows, however, that useful information can be extracted from the quantum-mechanical implementation of HHL and used to reduce the complexity of finding the solution on the classical side.

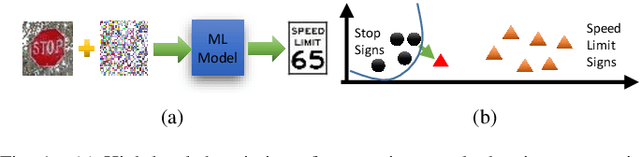

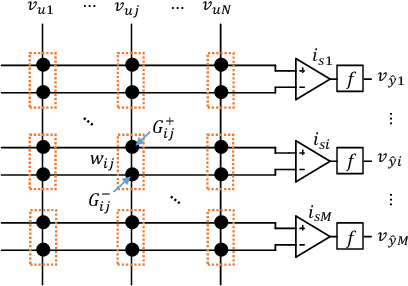

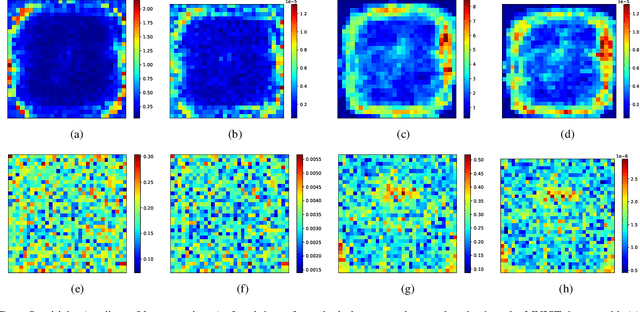

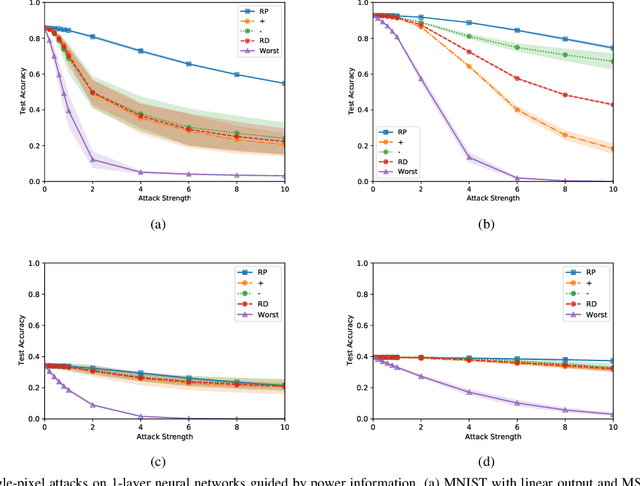

Enhancing Adversarial Attacks on Single-Layer NVM Crossbar-Based Neural Networks with Power Consumption Information

Jul 06, 2022

Abstract:Adversarial attacks on state-of-the-art machine learning models pose a significant threat to the safety and security of mission-critical autonomous systems. This paper considers the additional vulnerability of machine learning models when attackers can measure the power consumption of their underlying hardware platform. In particular, we explore the utility of power consumption information for adversarial attacks on non-volatile memory crossbar-based single-layer neural networks. Our results from experiments with MNIST and CIFAR-10 datasets show that power consumption can reveal important information about the neural network's weight matrix, such as the 1-norm of its columns. That information can be used to infer the sensitivity of the network's loss with respect to different inputs. We also find that surrogate-based black box attacks that utilize crossbar power information can lead to improved attack efficiency.
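A minimal sketch of why power can leak column 1-norms, under an idealized crossbar model that is an assumption here rather than the paper's measurement setup: each weight `w[i][j]` is stored as a conductance proportional to `|w[i][j]|`, input `x[j]` is applied as a voltage, and static power is the sum of `|w[i][j]| * x[j]**2`. The function names are illustrative.

```python
def crossbar_power(weights, inputs):
    """Static power of an idealized crossbar: sum over i, j of |w_ij| * x_j**2."""
    return sum(abs(w) * x * x
               for row in weights
               for w, x in zip(row, inputs))


def column_one_norms_from_power(weights):
    """Probe with one unit input at a time and read the power.

    With only input j active, the power equals the 1-norm of column j.
    """
    n_inputs = len(weights[0])
    norms = []
    for j in range(n_inputs):
        probe = [1.0 if k == j else 0.0 for k in range(n_inputs)]
        norms.append(crossbar_power(weights, probe))
    return norms


# Toy weight matrix: rows are outputs, columns are inputs.
W = [[0.5, -1.0, 0.0],
     [-0.25, 2.0, 1.5]]

leaked_norms = column_one_norms_from_power(W)  # [0.75, 3.0, 1.5]
```

Under this model an attacker never sees the weights themselves, only one power reading per probe, yet the readings rank the inputs by how much total weight magnitude is attached to each of them.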

Model Extraction and Adversarial Attacks on Neural Networks using Switching Power Information

Jun 15, 2021

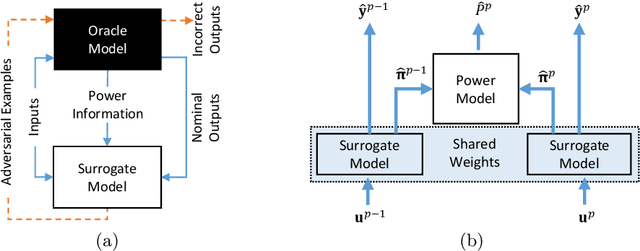

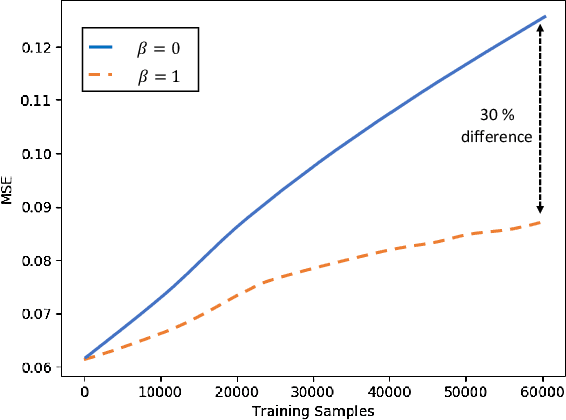

Abstract:Artificial neural networks (ANNs) have gained significant popularity in the last decade for solving narrow AI problems in domains such as healthcare, transportation, and defense. As ANNs become more ubiquitous, it is imperative to understand their associated safety, security, and privacy vulnerabilities. Recently, it has been shown that ANNs are susceptible to a number of adversarial evasion attacks--inputs that cause the ANN to make high-confidence misclassifications despite being almost indistinguishable from the data used to train and test the network. This work explores to what degree finding these examples may be aided by using side-channel information, specifically switching power consumption, of hardware implementations of ANNs. A black-box threat scenario is assumed, where an attacker has access to the ANN hardware's inputs, outputs, and topology, but the trained model parameters are unknown. Then, a surrogate model is trained to have similar functional (i.e. input-output mapping) and switching power characteristics as the oracle (black-box) model. Our results indicate that the inclusion of power consumption data increases the fidelity of the model extraction by up to 30 percent based on a mean square error comparison of the oracle and surrogate weights. However, transferability of adversarial examples from the surrogate to the oracle model was not significantly affected.

On the Adversarial Robustness of Quantized Neural Networks

May 01, 2021

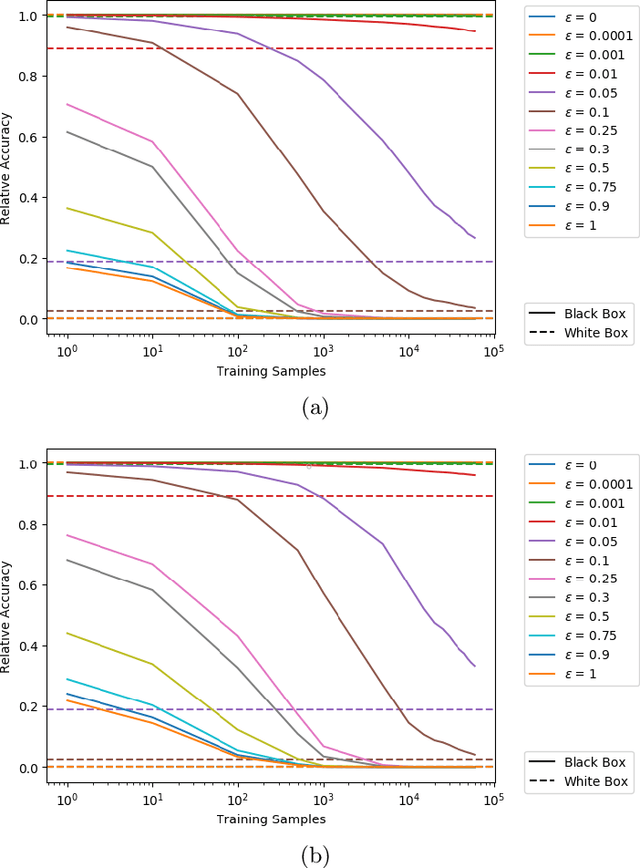

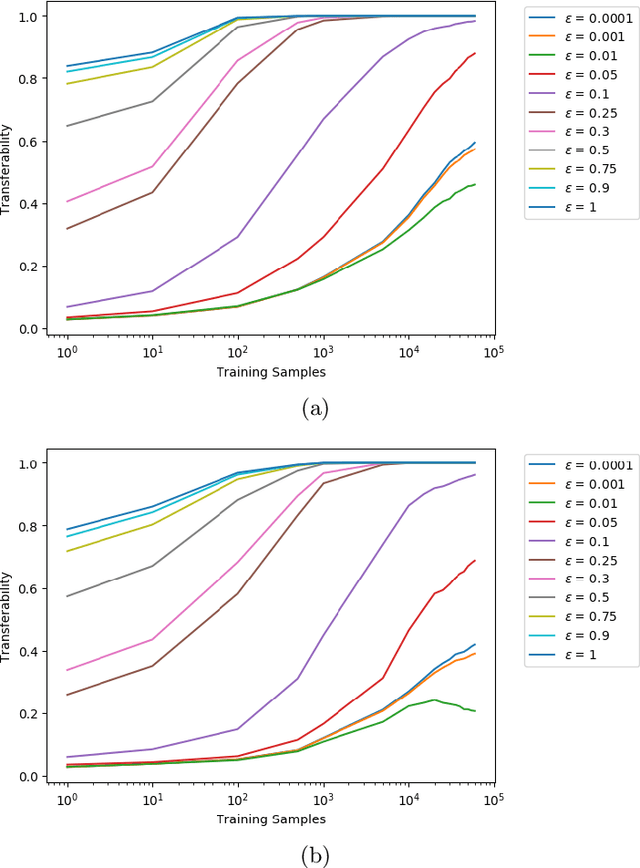

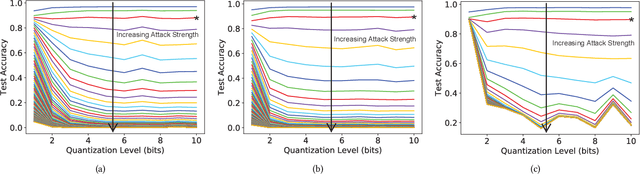

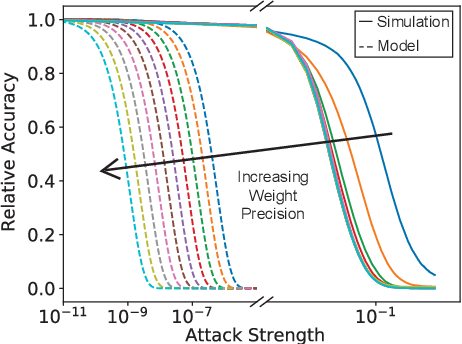

Abstract:Reducing the size of neural network models is a critical step in moving AI from a cloud-centric to an edge-centric (i.e. on-device) compute paradigm. This shift from cloud to edge is motivated by a number of factors including reduced latency, improved security, and higher flexibility of AI algorithms across several application domains (e.g. transportation, healthcare, defense, etc.). However, it is currently unclear how model compression techniques may affect the robustness of AI algorithms against adversarial attacks. This paper explores the effect of quantization, one of the most common compression techniques, on the adversarial robustness of neural networks. Specifically, we investigate and model the accuracy of quantized neural networks on adversarially-perturbed images. Results indicate that for simple gradient-based attacks, quantization can either improve or degrade adversarial robustness depending on the attack strength.
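For readers unfamiliar with quantization, the following is a minimal sketch of symmetric uniform quantization of a weight vector. It is one common scheme, assumed here for illustration; the abstract does not state which quantizer the paper uses, and `quantize_uniform` is a hypothetical helper.

```python
def quantize_uniform(values, bits):
    """Round each value to the nearest of 2**bits - 1 evenly spaced levels.

    Levels are symmetric around zero and span [-max|v|, +max|v|].
    Returns the quantized values and the step size between levels.
    """
    if bits < 2:
        raise ValueError("need at least 2 bits for a symmetric signed grid")
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return list(values), 0.0
    positive_levels = 2 ** (bits - 1) - 1
    step = max_abs / positive_levels
    quantized = [round(v / step) * step for v in values]
    return quantized, step


# Toy weights, chosen only for illustration.
weights = [0.9, -0.31, 0.07, -0.9]
q_weights, step = quantize_uniform(weights, bits=3)

# Rounding to the nearest level bounds the per-weight error by step / 2.
max_error = max(abs(w - q) for w, q in zip(weights, q_weights))
```

Fewer bits give a coarser grid and a larger rounding error, which is the perturbation whose interaction with adversarial noise the paper studies.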

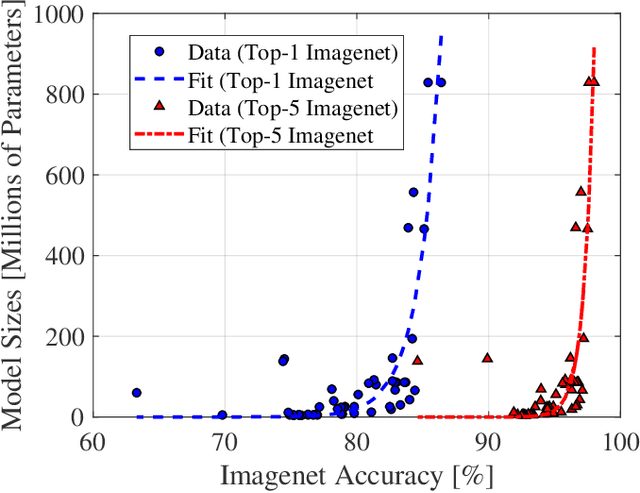

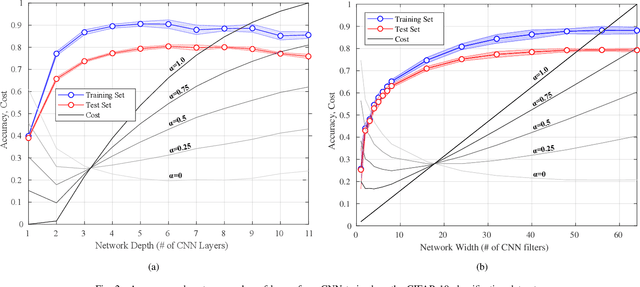

Exploring Energy-Accuracy Tradeoffs in AI Hardware

Nov 17, 2020

Abstract:Artificial intelligence (AI) is playing an increasingly significant role in our everyday lives. This trend is expected to continue, especially with recent pushes to move more AI to the edge. However, one of the biggest challenges associated with AI on edge devices (mobile phones, unmanned vehicles, sensors, etc.) is their associated size, weight, and power constraints. In this work, we consider the scenario where an AI system may need to operate at less-than-maximum accuracy in order to meet application-dependent energy requirements. We propose a simple function that divides the cost of using an AI system into the cost of the decision making process and the cost of decision execution. For simple binary decision problems with convolutional neural networks, it is shown that minimizing the cost corresponds to using fewer than the maximum number of resources (e.g. convolutional neural network layers and filters). Finally, it is shown that the cost associated with energy can be significantly reduced by leveraging high-confidence predictions made in lower-level layers of the network.

Energy Constraints Improve Liquid State Machine Performance

Jun 08, 2020

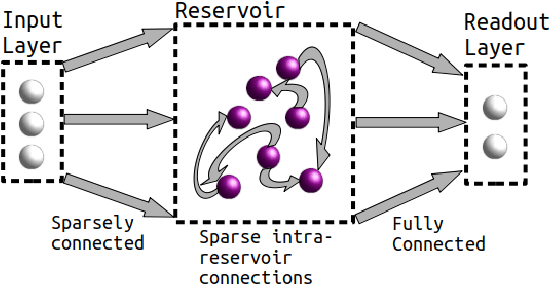

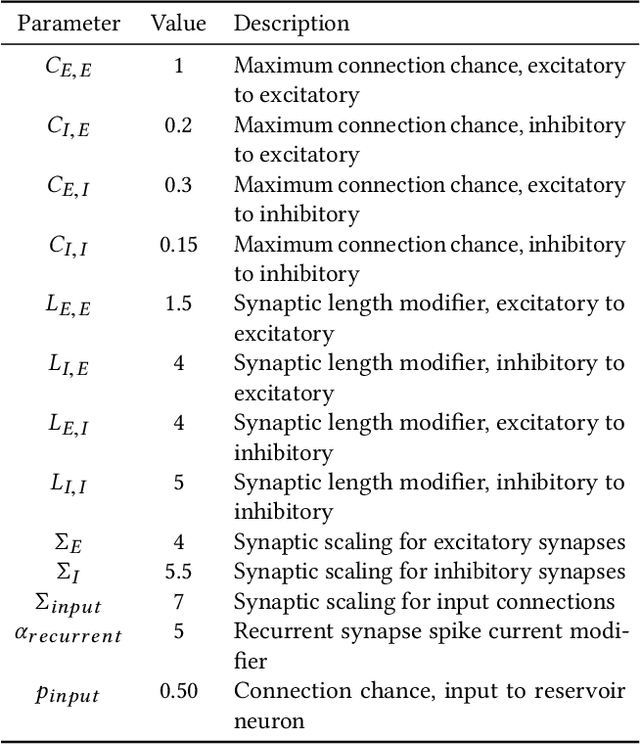

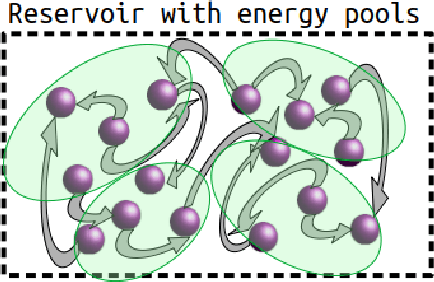

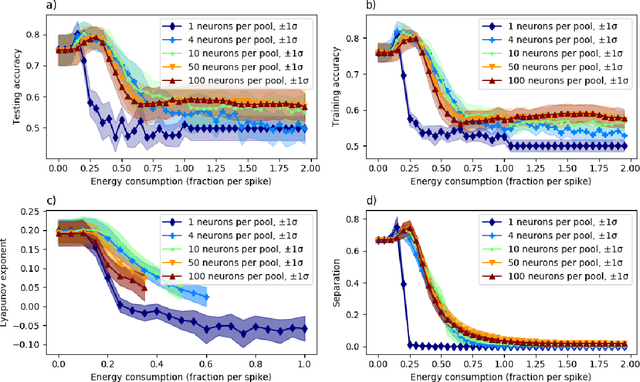

Abstract:A model of metabolic energy constraints is applied to a liquid state machine in order to analyze its effects on network performance. It was found that, in certain combinations of energy constraints, a significant increase in testing accuracy emerged; an improvement of 4.25% was observed on a seizure detection task using a digital liquid state machine while reducing overall reservoir spiking activity by 6.9%. The accuracy improvements appear to be linked to the energy constraints' impact on the reservoir's dynamics, as measured through metrics such as the Lyapunov exponent and the separation of the reservoir.
