On the Adversarial Robustness of Quantized Neural Networks

Paper and Code

May 01, 2021

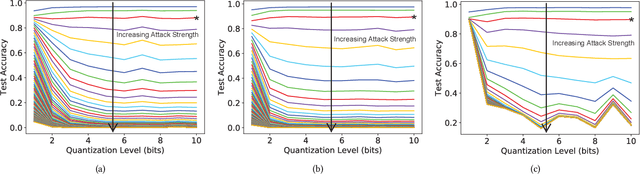

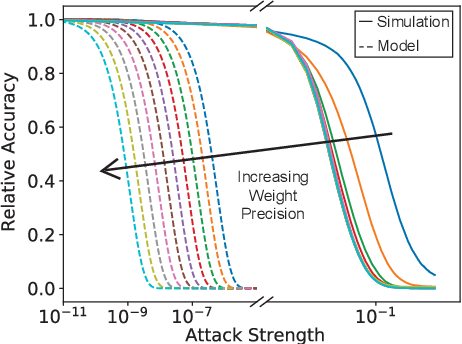

Reducing the size of neural network models is a critical step in moving AI from a cloud-centric to an edge-centric (i.e., on-device) compute paradigm. This shift from cloud to edge is motivated by a number of factors, including reduced latency, improved security, and greater flexibility of AI algorithms across several application domains (e.g., transportation, healthcare, and defense). However, it is currently unclear how model compression techniques affect the robustness of AI algorithms against adversarial attacks. This paper explores the effect of quantization, one of the most common compression techniques, on the adversarial robustness of neural networks. Specifically, we investigate and model the accuracy of quantized neural networks on adversarially perturbed images. Results indicate that, for simple gradient-based attacks, quantization can either improve or degrade adversarial robustness depending on the attack strength.
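To make the setup concrete, the following is a minimal sketch of how accuracy under a simple gradient-based attack might be compared across bit widths. The abstract does not specify the quantization scheme, the attack, or the models used, so everything here is an assumption for illustration: symmetric uniform weight quantization, a one-step sign-gradient (FGSM-style) attack, a toy logistic-regression model on synthetic data, and the hypothetical helper names `quantize_uniform`, `fgsm`, and `accuracy`. It is not the paper's experimental code.

```python
import numpy as np

def quantize_uniform(w, num_bits):
    """Symmetric uniform quantization of an array (assumed scheme, for illustration)."""
    levels = 2 ** (num_bits - 1) - 1        # e.g. 127 positive levels for 8 bits
    max_abs = np.max(np.abs(w))
    if max_abs == 0:
        return w.copy()
    scale = max_abs / levels
    return np.round(w / scale) * scale      # snap each weight to the nearest level

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(x, y, w, b, eps):
    """One-step sign-gradient attack on a logistic-regression model.

    The attack is white-box: the gradient is taken with respect to the
    same weights (full-precision or quantized) that are later evaluated.
    """
    p = sigmoid(x @ w + b)
    grad_x = (p - y)[:, None] * w[None, :]  # gradient of cross-entropy w.r.t. x
    return np.clip(x + eps * np.sign(grad_x), 0.0, 1.0)

def accuracy(x, y, w, b):
    return float(np.mean((sigmoid(x @ w + b) > 0.5) == (y > 0.5)))

# Synthetic "images" with pixel values in [0, 1]; labels come from a known
# linear rule, so the full-precision weights stand in for a trained model.
rng = np.random.default_rng(0)
w_full = rng.normal(size=20)
b = -0.5 * w_full.sum()                     # bias is left unquantized here
x = rng.uniform(0.0, 1.0, size=(500, 20))
y = (x @ w_full + b > 0).astype(float)

for bits in (None, 8, 4, 2):                # None means full precision
    w = w_full if bits is None else quantize_uniform(w_full, bits)
    for eps in (0.0, 0.02, 0.1):            # attack strength (L-infinity budget)
        x_adv = fgsm(x, y, w, b, eps)
        print(f"bits={bits} eps={eps} accuracy={accuracy(x_adv, y, w, b):.3f}")
```

Sweeping both the bit width and the attack strength `eps` gives the kind of grid the abstract refers to. Whether a toy model like this reproduces the paper's reported trend is not something the sketch is meant to establish.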
