Exploring Energy-Accuracy Tradeoffs in AI Hardware

Nov 17, 2020

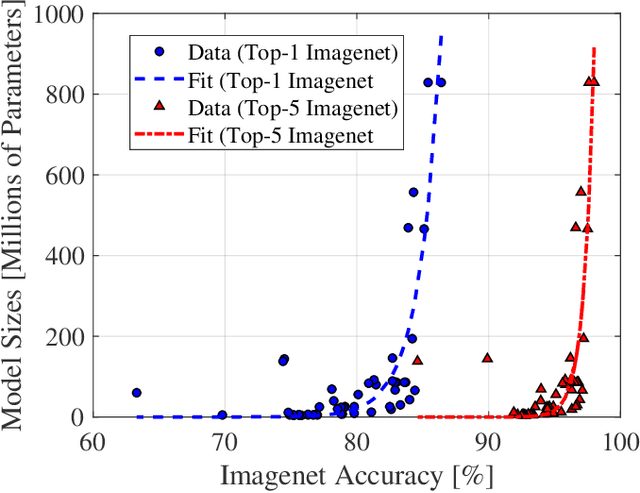

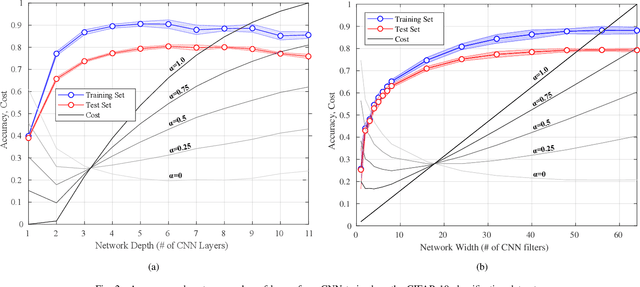

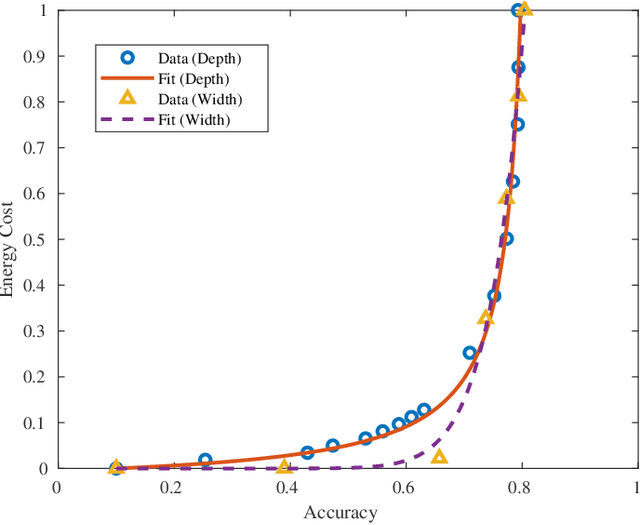

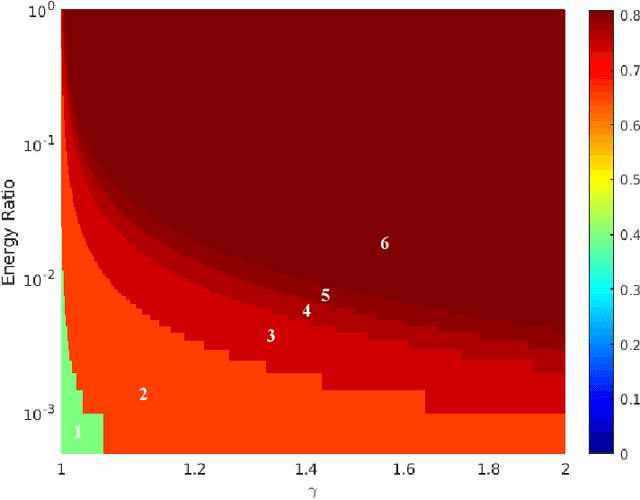

Artificial intelligence (AI) is playing an increasingly significant role in our everyday lives. This trend is expected to continue, especially with recent pushes to move more AI to the edge. However, one of the biggest challenges for AI on edge devices (mobile phones, unmanned vehicles, sensors, etc.) is their size, weight, and power constraints. In this work, we consider the scenario where an AI system may need to operate at less-than-maximum accuracy in order to meet application-dependent energy requirements. We propose a simple function that divides the cost of using an AI system into the cost of the decision-making process and the cost of decision execution. For simple binary decision problems with convolutional neural networks, we show that minimizing the cost corresponds to using fewer than the maximum available resources (e.g., convolutional neural network layers and filters). Finally, we show that the energy cost can be significantly reduced by leveraging high-confidence predictions made in lower-level layers of the network.
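The idea of exiting at a lower-level layer when its prediction is already confident can be illustrated with a minimal sketch. This is not the paper's implementation. The per-layer logits, the per-layer energy values, the confidence threshold, and the additive form of `total_cost` (including the reading of decision-making cost as energy and decision-execution cost as the cost of errors) are all assumptions made for illustration, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def early_exit_predict(
    exit_logits: Sequence[Sequence[float]],
    layer_energy: Sequence[float],
    threshold: float,
) -> Tuple[int, float, int]:
    """Return (label, energy_spent, exit_index).

    exit_logits[i] stands in for the output of a hypothetical classifier
    attached after layer i. In a real network, the layers after the exit
    point would not be evaluated at all; here the logits are precomputed
    only to keep the sketch self-contained.
    layer_energy[i] is an assumed energy cost of evaluating layer i.
    """
    if not exit_logits or len(exit_logits) != len(layer_energy):
        raise ValueError("need one energy value per exit, and at least one exit")

    energy = 0.0
    label, index = 0, 0
    for index, (logits, cost) in enumerate(zip(exit_logits, layer_energy)):
        energy += cost  # energy accumulates only up to the exit point
        probs = softmax(logits)
        confidence = max(probs)
        label = probs.index(confidence)
        if confidence >= threshold:
            break  # confident enough: skip the remaining layers
    return label, energy, index


def total_cost(
    energy: float, error_rate: float, energy_weight: float, error_weight: float
) -> float:
    """Assumed additive cost: decision-making (energy) plus decision execution (errors)."""
    return energy_weight * energy + error_weight * error_rate


# Binary decision with three hypothetical exits.
logits_per_exit = [[0.2, 0.1], [3.0, -3.0], [5.0, -5.0]]
energy_per_layer = [1.0, 2.0, 4.0]
label, spent, exit_at = early_exit_predict(logits_per_exit, energy_per_layer, 0.9)
```

With these made-up numbers, the first exit is not confident enough, so the second exit returns the prediction and the third layer's energy is never spent. Raising the threshold pushes the exit deeper into the network, trading energy for confidence.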
