Corey Adams

GeoTransolver: Learning Physics on Irregular Domains Using Multi-scale Geometry Aware Physics Attention Transformer

Dec 24, 2025

Abstract: We present GeoTransolver, a Multiscale Geometry-Aware Physics Attention Transformer for CAE that replaces standard attention with GALE, coupling physics-aware self-attention on learned state slices with cross-attention to a shared geometry/global/boundary-condition context computed from multi-scale ball queries (inspired by DoMINO) and reused in every block. Implemented and released in NVIDIA PhysicsNeMo, GeoTransolver persistently projects geometry, global, and boundary-condition parameters into physical state spaces to anchor latent computations to domain structure and operating regimes. We benchmark GeoTransolver on DrivAerML, Luminary SHIFT-SUV, and Luminary SHIFT-Wing, comparing against DoMINO, Transolver (as released in PhysicsNeMo), and literature-reported AB-UPT, and evaluate drag/lift R² and relative L1 errors for field variables. GeoTransolver delivers better accuracy, improved robustness to geometry/regime shifts, and favorable data efficiency; we include ablations on DrivAerML and qualitative results such as contour plots and design trends for the best GeoTransolver models. By unifying multiscale geometry-aware context with physics-based attention in a scalable transformer, GeoTransolver advances operator learning for high-fidelity surrogate modeling across complex, irregular domains and non-linear physical regimes.
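The abstract names two evaluation metrics, R² for drag/lift and relative L1 error for field variables, without giving formulas. The sketch below shows one common way to define them; the exact normalization used in the paper is not stated here, so the definitions (sum of absolute errors divided by sum of absolute reference values, and the usual coefficient of determination) and the function names `relative_l1_error` and `r2_score` are assumptions for illustration only.

```python
def relative_l1_error(pred, true):
    """Assumed definition: sum |pred - true| / sum |true|."""
    if len(pred) != len(true):
        raise ValueError("pred and true must have the same length")
    numerator = sum(abs(p - t) for p, t in zip(pred, true))
    denominator = sum(abs(t) for t in true)
    if denominator == 0:
        raise ValueError("reference values sum to zero; error is undefined")
    return numerator / denominator


def r2_score(pred, true):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(pred) != len(true):
        raise ValueError("pred and true must have the same length")
    mean_true = sum(true) / len(true)
    ss_res = sum((t - p) ** 2 for p, t in zip(pred, true))
    ss_tot = sum((t - mean_true) ** 2 for t in true)
    if ss_tot == 0:
        raise ValueError("reference values are constant; R2 is undefined")
    return 1.0 - ss_res / ss_tot
```

Under these definitions a perfect prediction gives a relative L1 error of 0 and an R² of 1, while always predicting the mean of the reference values gives an R² of 0.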

PILArNet: Public Dataset for Particle Imaging Liquid Argon Detectors in High Energy Physics

Jun 03, 2020

Abstract: Rapid advancement of machine learning solutions has often coincided with the production of a public test dataset. Such datasets reduce the largest barrier to entry for tackling a problem -- procuring data -- while also providing a benchmark to compare different solutions. Furthermore, large datasets have been used to train high-performing feature finders, which are then used in new approaches to problems beyond those initially defined. To encourage rapid development in the analysis of data collected using liquid argon time projection chambers, a class of particle detectors used in high energy physics experiments, we have produced PILArNet, the first open 2D and 3D dataset to be used for a couple of key analysis tasks. The initial dataset presented in this paper contains 300,000 samples simulated and recorded in three different volume sizes. The dataset is stored efficiently in sparse 2D and 3D matrix format with auxiliary information about simulated particles in the volume, and is made available for public research use. In this paper we describe the dataset, the tasks, and the method used to procure the samples.

Scaling Distributed Training of Flood-Filling Networks on HPC Infrastructure for Brain Mapping

May 13, 2019

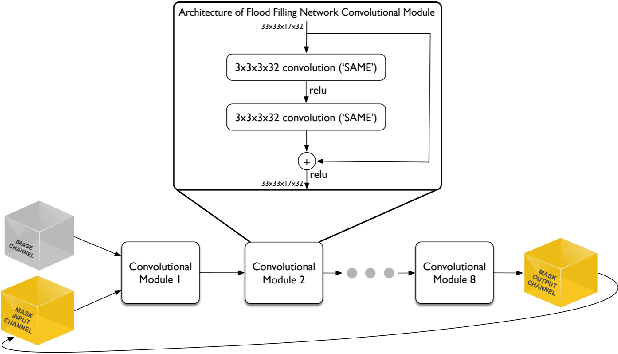

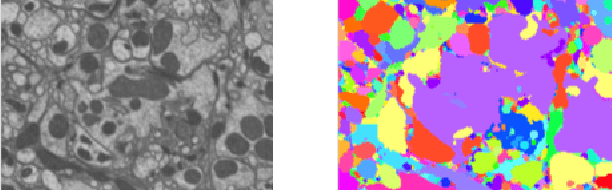

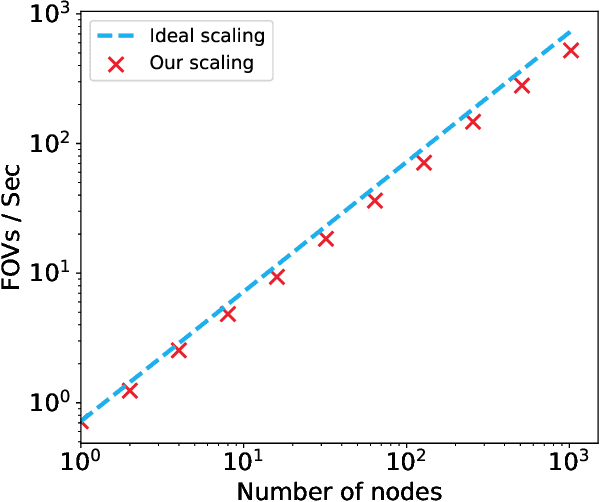

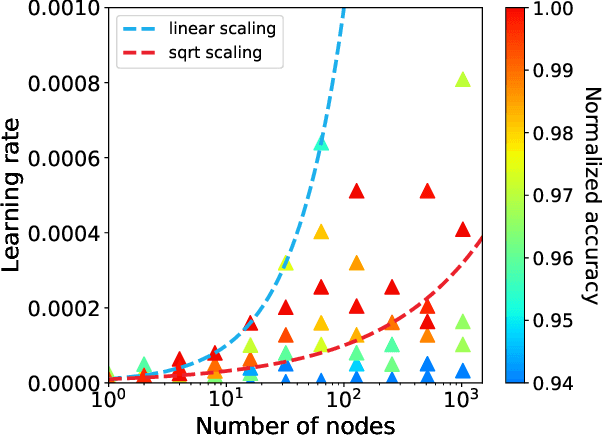

Abstract: Mapping all the neurons in the brain requires automatic reconstruction of entire cells from volume electron microscopy data. The flood-filling networks (FFN) architecture can achieve leading performance. However, training the network is computationally very expensive. To reduce the training time, we implemented synchronous, data-parallel distributed training using the Horovod framework on top of the published FFN code. We demonstrated the scaling of FFN training up to 1024 Intel Knights Landing (KNL) nodes at the Argonne Leadership Computing Facility. We investigated the training accuracy with different optimizers, learning rates, and optional warm-up periods. We discovered that square-root scaling of the learning rate works best beyond 16 nodes, in contrast to smaller node counts, where linear learning-rate scaling with warm-up performs best. Our distributed training reaches 95% accuracy in approximately 4.5 hours on 1024 KNL nodes using the Adam optimizer.
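The two learning-rate scaling rules contrasted in this abstract can be sketched as follows. This is a minimal illustration, not the authors' code: the function names, the base learning rate, and the linear shape of the warm-up ramp are all assumptions, and the abstract does not specify any of these values.

```python
import math


def scaled_learning_rate(base_lr, num_workers, rule="linear"):
    """Scale a single-worker learning rate for data-parallel training.

    rule="linear": base_lr * num_workers
    rule="sqrt":   base_lr * sqrt(num_workers)
    """
    if num_workers < 1:
        raise ValueError("num_workers must be at least 1")
    if rule == "linear":
        return base_lr * num_workers
    if rule == "sqrt":
        return base_lr * math.sqrt(num_workers)
    raise ValueError(f"unknown scaling rule: {rule!r}")


def warmup_learning_rate(base_lr, target_lr, step, warmup_steps):
    """Ramp linearly from base_lr to target_lr over warmup_steps (assumed shape)."""
    if warmup_steps <= 0 or step >= warmup_steps:
        return target_lr
    fraction = step / warmup_steps
    return base_lr + fraction * (target_lr - base_lr)


# Hypothetical values, chosen only to show the difference between the rules.
base_lr = 0.001
linear_lr = scaled_learning_rate(base_lr, 1024, rule="linear")
sqrt_lr = scaled_learning_rate(base_lr, 1024, rule="sqrt")
```

With 1024 workers the linear rule multiplies the base rate by 1024 while the square-root rule multiplies it by 32, which shows how far apart the two rules are at the node counts reported in the abstract.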
