Cong Hoang Quach

Socially Aware Motion Planning for Service Robots Using LiDAR and RGB-D Camera

Oct 13, 2024

Abstract: Service robots that work alongside humans in a shared environment need a navigation system that takes into account not only physical safety but also social norms for mutual cooperation. In this paper, we introduce a motion planning system that incorporates human states, such as positions and velocities, and their personal space for socially aware navigation. The system first extracts human positions from the LiDAR and the RGB-D camera. It then uses a Kalman filter to fuse that information for human state estimation. An asymmetric Gaussian function is then employed to model human personal space based on these states. This model is used as the input to the dynamic window approach algorithm to generate trajectories for the robot. Experiments show that the robot is able to navigate alongside humans in a dynamic environment while respecting their physical and psychological comfort.
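To make the personal-space idea concrete, here is a minimal sketch of one possible asymmetric Gaussian cost. It is not the formulation from the paper: the function name `personal_space_cost`, the parameters `sigma_base` and `speed_gain`, and the rule that the forward spread grows linearly with speed are all assumptions chosen for illustration.

```python
import math


def personal_space_cost(x, y, px, py, heading, speed,
                        sigma_base=0.5, speed_gain=1.0):
    """Cost in (0, 1] of a robot at (x, y) near a human at (px, py).

    Assumed model: the Gaussian is stretched in front of the human in
    proportion to walking speed and keeps a base spread behind and sideways.
    """
    dx, dy = x - px, y - py
    # Rotate the offset into the human's frame (forward, lateral).
    cos_h, sin_h = math.cos(heading), math.sin(heading)
    forward = cos_h * dx + sin_h * dy
    lateral = -sin_h * dx + cos_h * dy
    # Asymmetry: larger spread ahead of the human than behind.
    sigma_front = sigma_base + speed_gain * speed
    sigma_long = sigma_front if forward >= 0.0 else sigma_base
    sigma_side = sigma_base
    exponent = (forward ** 2) / (2.0 * sigma_long ** 2) \
        + (lateral ** 2) / (2.0 * sigma_side ** 2)
    return math.exp(-exponent)


# A human at the origin walking along +x at 1 m/s.
ahead = personal_space_cost(1.0, 0.0, 0.0, 0.0, 0.0, 1.0)
behind = personal_space_cost(-1.0, 0.0, 0.0, 0.0, 0.0, 1.0)
print(ahead > behind)
```

A planner such as the dynamic window approach could add a cost of this kind to the score of each candidate trajectory, so that paths cutting in front of a moving person are penalized more than paths passing behind.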

Multisensor Data Fusion for Reliable Obstacle Avoidance

Dec 26, 2022

Abstract: In this work, we propose a new approach that combines data from multiple sensors for reliable obstacle avoidance. The sensors include two depth cameras and a LiDAR arranged so that they can capture the whole 3D area in front of the robot and a 2D slice around it. To fuse the data from these sensors, we first use an external camera as a reference to combine the data from the two depth cameras. A projection technique is then introduced to convert the 3D point cloud data of the cameras to its 2D correspondence. An obstacle avoidance algorithm is then developed based on the dynamic window approach. A number of experiments have been conducted to evaluate the proposed approach. The results show that the robot can effectively avoid static and dynamic obstacles of different shapes and sizes in different environments.
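The abstract does not spell out its projection technique, so the following is only a generic sketch of how a 3D point cloud can be flattened into a 2D, LiDAR-like scan: points inside a height band are binned by bearing and the nearest range per bin is kept. The function name `project_to_scan` and the parameters `z_min`, `z_max`, and `num_bins` are hypothetical.

```python
import math


def project_to_scan(points, z_min, z_max, num_bins=360):
    """Collapse 3D points (x, y, z) in the robot frame into a 2D range scan.

    Returns a list of length num_bins; entry k holds the closest range seen
    in bearing bin k, or math.inf when the bin is empty.
    """
    ranges = [math.inf] * num_bins
    bin_width = 2.0 * math.pi / num_bins
    for x, y, z in points:
        # Ignore points above or below the band the robot body occupies.
        if not (z_min <= z <= z_max):
            continue
        distance = math.hypot(x, y)
        bearing = math.atan2(y, x) % (2.0 * math.pi)
        # Clamp guards against a bearing that rounds up to exactly 2*pi.
        index = min(int(bearing / bin_width), num_bins - 1)
        if distance < ranges[index]:
            ranges[index] = distance
    return ranges


cloud = [(1.0, 0.0, 0.5), (2.0, 0.0, 0.5), (0.0, 1.0, 3.0)]
scan = project_to_scan(cloud, z_min=0.0, z_max=1.0, num_bins=4)
print(scan[0])
```

Keeping only the nearest return per bearing is the conservative choice for obstacle avoidance, since a 2D planner then sees the closest obstacle at any height within the band.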

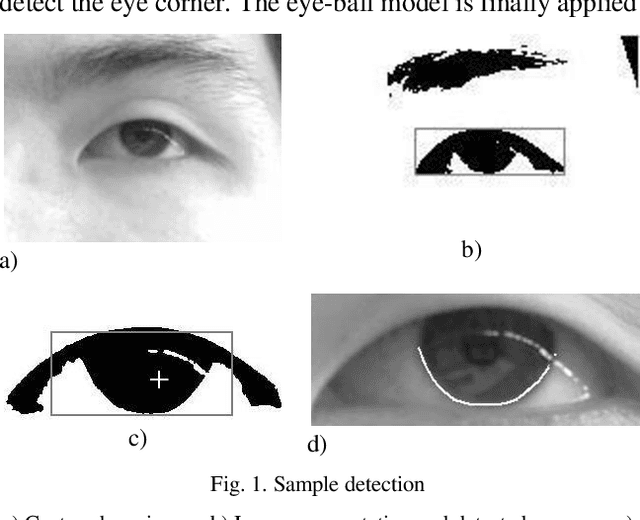

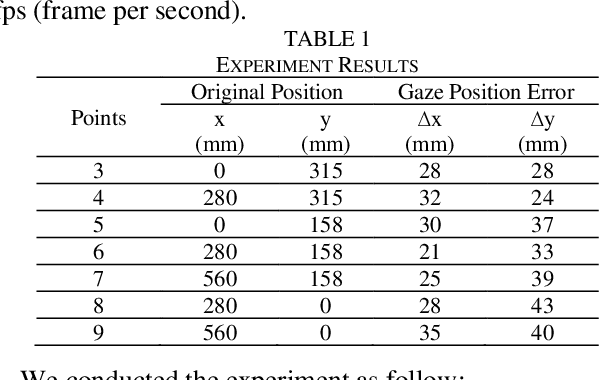

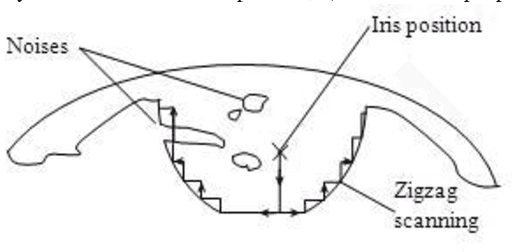

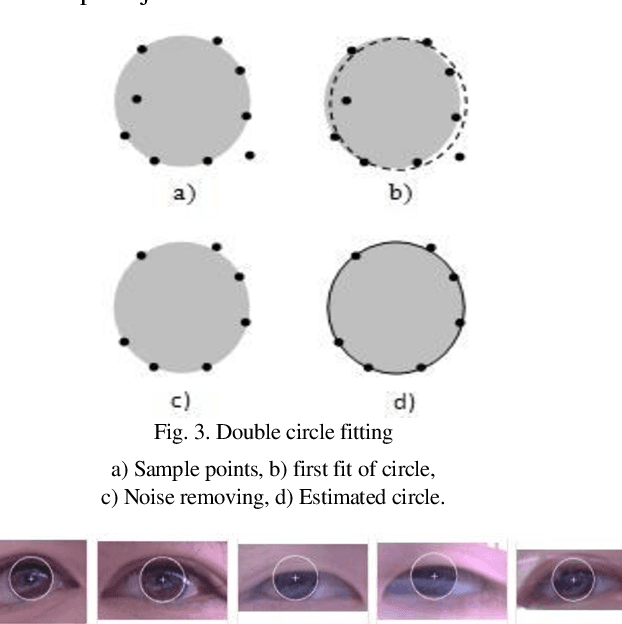

Development of a Fast and Robust Gaze Tracking System for Game Applications

May 06, 2021

Abstract: In this study, a novel eye-tracking system using a visual camera is developed to extract a person's gaze; it can be used in modern game machines to bring new and innovative interactive experiences to players. Central to the system is a robust iris-center and eye-corner detection algorithm, on the basis of which the gaze is continuously and adaptively extracted. Evaluation tests with nine people yielded an accuracy of 2.50 degrees (view angle) in the horizontal direction and 3.07 degrees in the vertical direction.

Recognition of 26 Degrees of Freedom of Hands Using Model-based approach and Depth-Color Images

May 13, 2020

Abstract: In this study, we present a model-based approach to recognize the full 26 degrees of freedom of a human hand. Input data include RGB-D images acquired from a Kinect camera and a 3D model of the hand constructed from its anatomy and graphical matrices. A cost function is then defined so that its minimum value is achieved when the model and observation images are matched. To solve the optimization problem in 26-dimensional space, an improved particle swarm optimization algorithm is used. In addition, parallel computation on graphics processing units (GPUs) is utilized to handle computationally expensive tasks. Simulation and experimental results show that the system can recognize 26 degrees of freedom of hands with a processing time of 0.8 seconds per frame. The algorithm is robust to noise, and the hardware requirement is simple, with a single camera.
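For readers unfamiliar with particle swarm optimization, the sketch below shows the textbook algorithm on a toy cost function. It does not reproduce the improvements or the GPU parallelism mentioned in the abstract, and the hand-model cost is replaced by a simple sphere function; `pso_minimize`, its parameters, and the coefficient values are illustrative assumptions.

```python
import random


def pso_minimize(cost, dim, bounds, num_particles=30, iterations=200,
                 seed=0, inertia=0.729, c_personal=1.494, c_global=1.494):
    """Minimize cost(position) over a box using standard global-best PSO."""
    rng = random.Random(seed)
    lo, hi = bounds
    positions = [[rng.uniform(lo, hi) for _ in range(dim)]
                 for _ in range(num_particles)]
    velocities = [[0.0] * dim for _ in range(num_particles)]
    best_pos = [p[:] for p in positions]        # per-particle best position
    best_cost = [cost(p) for p in positions]    # per-particle best cost
    g = min(range(num_particles), key=best_cost.__getitem__)
    g_pos, g_cost = best_pos[g][:], best_cost[g]  # swarm-wide best

    for _ in range(iterations):
        for i in range(num_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Pull towards the particle's own best and the swarm's best.
                velocities[i][d] = (
                    inertia * velocities[i][d]
                    + c_personal * r1 * (best_pos[i][d] - positions[i][d])
                    + c_global * r2 * (g_pos[d] - positions[i][d])
                )
                moved = positions[i][d] + velocities[i][d]
                positions[i][d] = min(hi, max(lo, moved))  # stay in the box
            c = cost(positions[i])
            if c < best_cost[i]:
                best_cost[i], best_pos[i] = c, positions[i][:]
                if c < g_cost:
                    g_cost, g_pos = c, positions[i][:]
    return g_pos, g_cost


def sphere(p):
    """Toy cost with its minimum of 0 at the origin."""
    return sum(v * v for v in p)


position, value = pso_minimize(sphere, dim=2, bounds=(-5.0, 5.0))
print(value < 1e-3)
```

In a hand-tracking setting, each particle would instead encode a 26-dimensional pose, and the cost would measure the mismatch between the rendered model and the observed RGB-D image.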

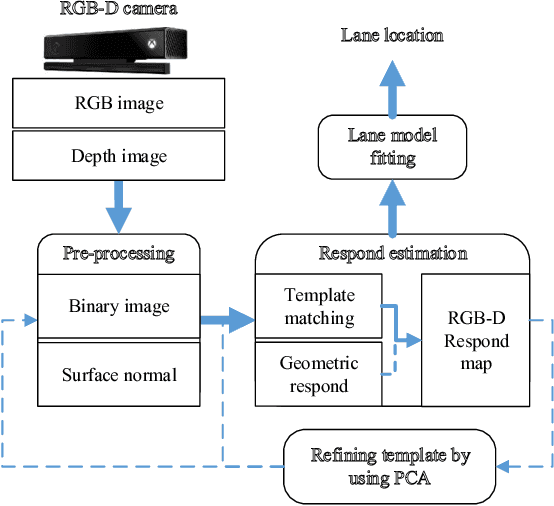

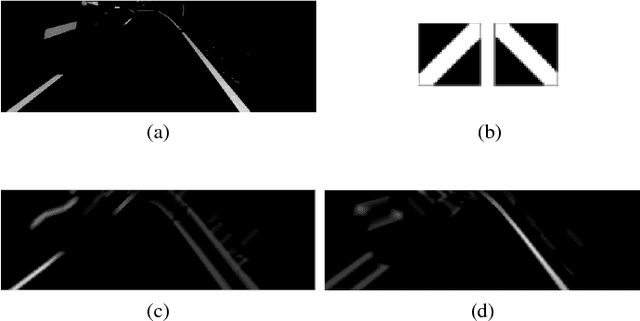

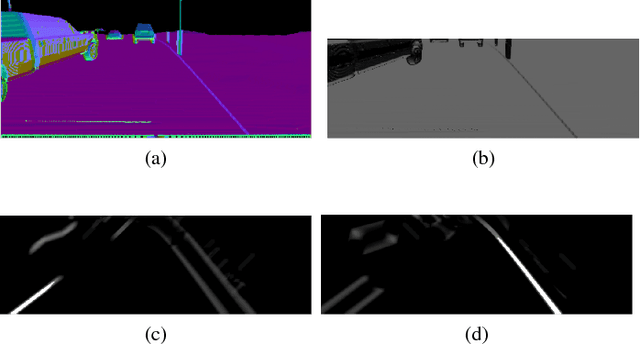

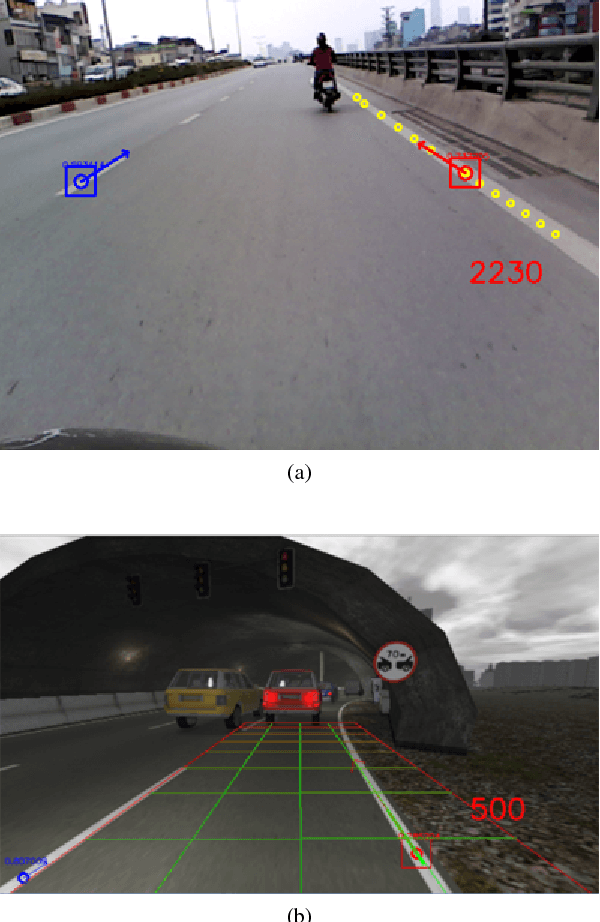

Real-time Lane Marker Detection Using Template Matching with RGB-D Camera

Jun 05, 2018

Abstract: This paper addresses the problem of lane detection, which is fundamental for self-driving vehicles. Our approach exploits both colour and depth information recorded by a single RGB-D camera to better deal with negative factors such as lighting conditions and lane-like objects. In the approach, colour and depth images are first converted to a half-binary format and a 2D matrix of 3D points. They are then used as the inputs of template matching and geometric feature extraction processes to form a response map whose values represent the probability of pixels being lane markers. To further improve the results, the template and lane surfaces are finally refined by principal component analysis and lane model fitting techniques. A number of experiments have been conducted on both synthetic and real datasets. The results show that the proposed approach can effectively eliminate unwanted noise to accurately detect lane markers in various scenarios. Moreover, the processing speed of 20 frames per second on the hardware of a typical laptop computer allows the proposed algorithm to be implemented for real-time autonomous driving applications.
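As a rough illustration of how template matching produces a response map, the sketch below slides a small template over an image and scores each position with normalized cross-correlation. This is a generic technique chosen for the example, not necessarily the matching score used in the paper, and it ignores the half-binary format and the depth channel; the function name `ncc_response` is hypothetical.

```python
import math


def ncc_response(image, template):
    """Return a map of normalized cross-correlation scores in [-1, 1].

    image and template are lists of rows of numbers. Entry [r][c] scores
    the patch whose top-left corner sits at image row r, column c.
    """
    th, tw = len(template), len(template[0])
    ih, iw = len(image), len(image[0])
    t_vals = [v for row in template for v in row]
    t_mean = sum(t_vals) / len(t_vals)
    t_dev = [v - t_mean for v in t_vals]
    t_norm = math.sqrt(sum(d * d for d in t_dev))

    response = []
    for r in range(ih - th + 1):
        row_scores = []
        for c in range(iw - tw + 1):
            patch = [image[r + i][c + j]
                     for i in range(th) for j in range(tw)]
            p_mean = sum(patch) / len(patch)
            p_dev = [v - p_mean for v in patch]
            p_norm = math.sqrt(sum(d * d for d in p_dev))
            denom = t_norm * p_norm
            # Flat patches carry no pattern, so they score zero.
            score = (sum(a * b for a, b in zip(t_dev, p_dev)) / denom
                     if denom > 0.0 else 0.0)
            row_scores.append(score)
        response.append(row_scores)
    return response


# A bright vertical stripe stands in for a lane marker.
stripe = [[0, 1, 0],
          [0, 1, 0]]
scene = [[0, 0, 0, 1, 0],
         [0, 0, 0, 1, 0]]
scores = ncc_response(scene, stripe)
best_col = max(range(len(scores[0])), key=scores[0].__getitem__)
print(best_col)
```

The highest score marks the position where the patch best matches the template, which is the sense in which a response map can be read as a per-pixel likelihood of a lane marker.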

Enhanced discrete particle swarm optimization path planning for UAV vision-based surface inspection

Jun 14, 2017

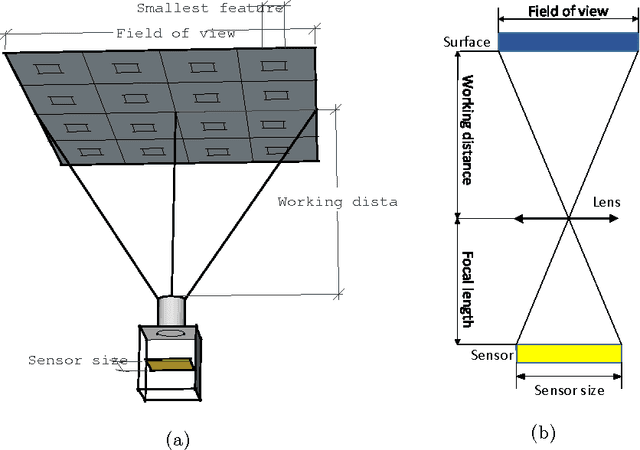

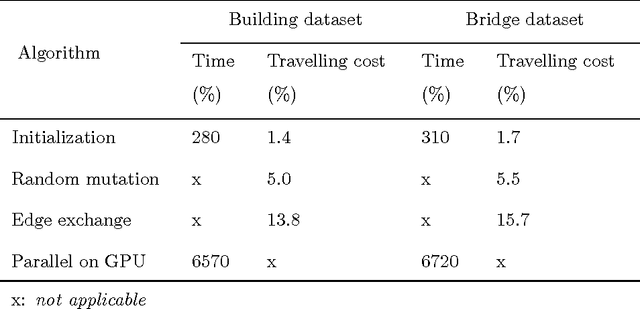

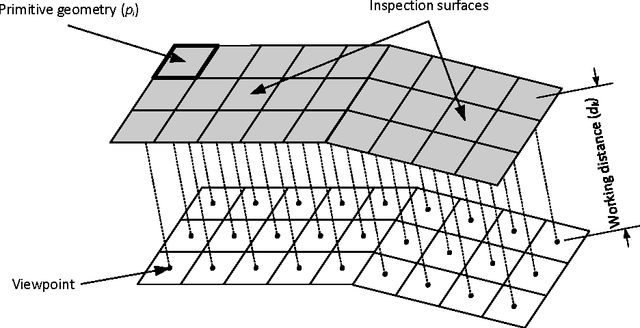

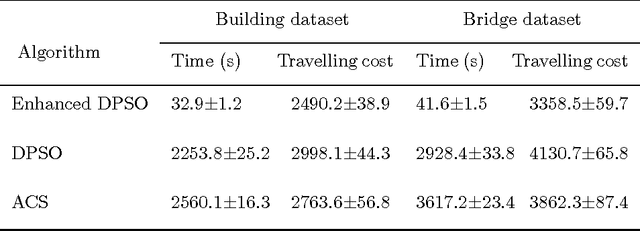

Abstract: In built infrastructure monitoring, an efficient path planning algorithm is essential for robotic inspection of large surfaces using computer vision. In this work, we first formulate the inspection path planning problem as an extended travelling salesman problem (TSP) in which both coverage and obstacle avoidance are taken into account. An enhanced discrete particle swarm optimization (DPSO) algorithm is then proposed to solve the TSP, with performance improved by deterministic initialization, random mutation, and edge exchange. Finally, we take advantage of parallel computing to implement the DPSO in a GPU-based framework so that the computation time can be significantly reduced while keeping the hardware requirement unchanged. To show the effectiveness of the proposed algorithm, experimental results are included for datasets obtained from UAV inspection of an office building and a bridge.
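The abstract names edge exchange as one of the DPSO enhancements without defining it. A common form of edge exchange for TSP tours is the 2-opt move, which reverses a segment of the tour whenever doing so shortens it. The sketch below shows that move in isolation, assuming it is a reasonable stand-in; it is not the authors' DPSO, and `tour_length` and `two_opt` are illustrative names.

```python
import math


def tour_length(points, tour):
    """Length of the closed tour visiting points in the given order."""
    return sum(
        math.dist(points[tour[i]], points[tour[(i + 1) % len(tour)]])
        for i in range(len(tour))
    )


def two_opt(points, tour):
    """Repeatedly reverse tour segments while that shortens the tour."""
    best = list(tour)
    improved = True
    while improved:
        improved = False
        # Keep the first city fixed; try reversing every later segment.
        for i in range(1, len(best) - 1):
            for j in range(i + 1, len(best)):
                candidate = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
                if tour_length(points, candidate) < tour_length(points, best) - 1e-12:
                    best = candidate
                    improved = True
    return best


# Four corners of a unit square, visited in an order that crosses itself.
corners = [(0.0, 0.0), (1.0, 1.0), (1.0, 0.0), (0.0, 1.0)]
start = [0, 1, 2, 3]
result = two_opt(corners, start)
print(tour_length(corners, start), tour_length(corners, result))
```

In a swarm-based solver, a local move like this is typically applied to individual particles so that each candidate tour is untangled before the swarm update shares information between particles.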
