Chuxuan Hu

DRAMA: Unifying Data Retrieval and Analysis for Open-Domain Analytic Queries

Oct 31, 2025

Abstract: Manually conducting real-world data analyses is labor-intensive and inefficient. Despite numerous attempts to automate data science workflows, none of the existing paradigms or systems fully demonstrate all three key capabilities required to support them effectively: (1) open-domain data collection, (2) structured data transformation, and (3) analytic reasoning. To overcome these limitations, we propose DRAMA, an end-to-end paradigm that answers users' analytic queries in natural language on large-scale open-domain data. DRAMA unifies data collection, transformation, and analysis as a single pipeline. To quantitatively evaluate system performance on tasks representative of DRAMA, we construct a benchmark, DRAMA-Bench, consisting of two categories of tasks: claim verification and question answering, each comprising 100 instances. These tasks are derived from real-world applications that have gained significant public attention and require the retrieval and analysis of open-domain data. We develop DRAMA-Bot, a multi-agent system designed following DRAMA. It comprises a data retriever that collects and transforms data by coordinating the execution of sub-agents, and a data analyzer that performs structured reasoning over the retrieved data. We evaluate DRAMA-Bot on DRAMA-Bench together with five state-of-the-art baseline agents. DRAMA-Bot achieves 86.5% task accuracy at a cost of $0.05, outperforming all baselines with up to 6.9 times the accuracy and less than 1/6 of the cost. DRAMA is publicly available at https://github.com/uiuc-kang-lab/drama.

Breaking Barriers: Do Reinforcement Post Training Gains Transfer To Unseen Domains?

Jun 24, 2025

Abstract: Reinforcement post training (RPT) has recently shown promise in improving the reasoning abilities of large language models (LLMs). However, it remains unclear how well these improvements generalize to new domains, as prior work evaluates RPT models on data from the same domains used for fine-tuning. To understand the generalizability of RPT, we conduct two studies. (1) Observational: We compare a wide range of open-weight RPT models against their corresponding base models across multiple domains, including both seen and unseen domains in their fine-tuning data. (2) Interventional: We fine-tune LLMs with RPT on single domains and evaluate their performance across multiple domains. Both studies converge on the same conclusion: although RPT brings substantial gains on tasks similar to the fine-tuning data, the gains generalize inconsistently and can vanish on domains with different reasoning patterns.

Data Free Backdoor Attacks

Dec 09, 2024

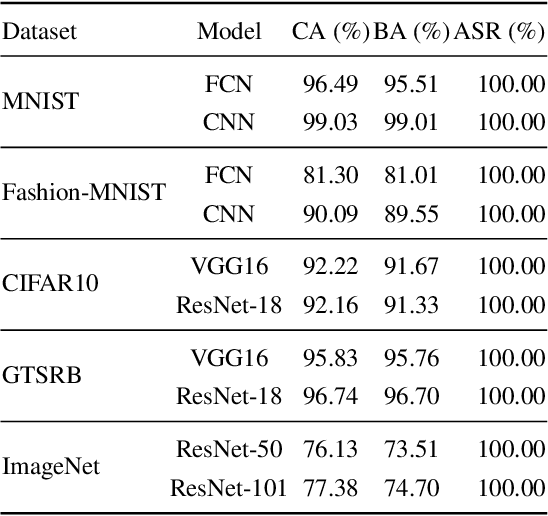

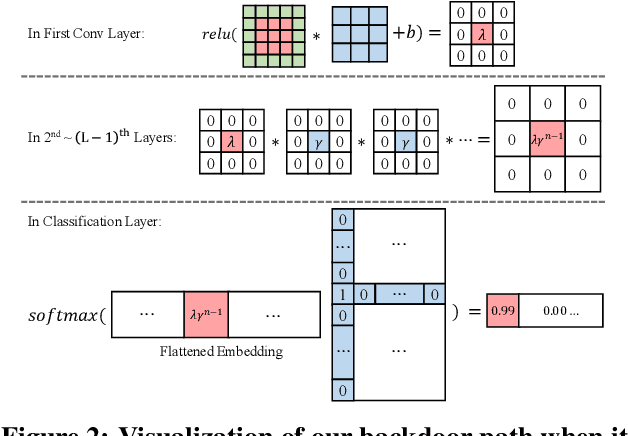

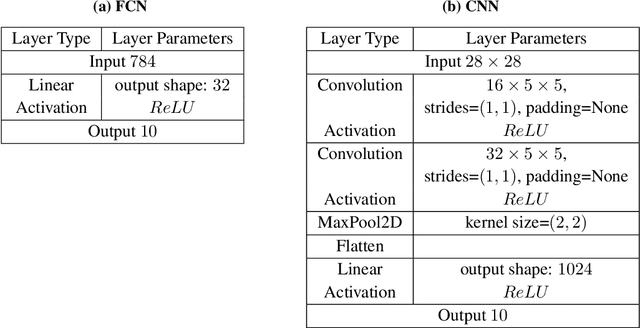

Abstract: Backdoor attacks aim to inject a backdoor into a classifier such that it predicts any input with an attacker-chosen backdoor trigger as an attacker-chosen target class. Existing backdoor attacks require either retraining the classifier with some clean data or modifying the model's architecture. As a result, they are 1) not applicable when clean data is unavailable, 2) less efficient when the model is large, and 3) less stealthy due to architecture changes. In this work, we propose DFBA, a novel retraining-free and data-free backdoor attack that does not change the model architecture. Technically, our proposed method modifies a few parameters of a classifier to inject a backdoor. Through theoretical analysis, we verify that our injected backdoor is provably undetectable and unremovable by various state-of-the-art defenses under mild assumptions. Our evaluation on multiple datasets further demonstrates that our injected backdoor: 1) incurs negligible classification loss, 2) achieves 100% attack success rates, and 3) bypasses six existing state-of-the-art defenses. Moreover, our comparison with a state-of-the-art non-data-free backdoor attack shows our attack is more stealthy and effective against various defenses while incurring less classification accuracy loss.

GENIUS: A Novel Solution for Subteam Replacement with Clustering-based Graph Neural Network

Nov 11, 2022

Abstract: Subteam replacement is defined as finding the optimal candidate set of people who can best function as an unavailable subset of members (i.e., subteam) for certain reasons (e.g., conflicts of interests, employee churn), given a team of people embedded in a social network working on the same task. Prior investigations on this problem use graph kernels as the criterion for measuring the similarity between the new optimized team and the original team. However, the increasingly abundant social networks reveal fundamental limitations of existing methods: (1) graph kernel-based approaches fail to capture the key intrinsic correlations among node features, (2) they generally search over the entire network for every member to be replaced, making them extremely inefficient as the network grows, and (3) the requirement of equal-sized replacement for the unavailable subteam can be inapplicable due to limited hiring budget. In this work, we address these limitations in the state-of-the-art for subteam replacement by (1) proposing GENIUS, a novel clustering-based graph neural network (GNN) framework that can capture team network knowledge for flexible subteam replacement, and (2) equipping GENIUS with a self-supervised positive team contrasting training scheme to improve the team-level representation learning and with unsupervised node clusters to prune candidates for fast computation. Through extensive empirical evaluations, we demonstrate the efficacy of the proposed method in terms of (1) effectiveness: being able to select better candidate members that significantly increase the similarity between the optimized and original teams, and (2) efficiency: achieving more than 600 times speed-up in average running time.
