Chuanyue Shen

Envisioning a Next Generation Extended Reality Conferencing System with Efficient Photorealistic Human Rendering

Jun 28, 2023

Abstract:Meeting online is becoming the new normal. Creating an immersive experience for online meetings is a necessity towards more diverse and seamless environments. Efficient photorealistic rendering of human 3D dynamics is the core of immersive meetings. Current popular applications achieve real-time conferencing but fall short in delivering photorealistic human dynamics, either due to limited 2D space or the use of avatars that lack realistic interactions between participants. Recent advances in neural rendering, such as the Neural Radiance Field (NeRF), offer the potential for greater realism in metaverse meetings. However, the slow rendering speed of NeRF poses challenges for real-time conferencing. We envision a pipeline for a future extended reality metaverse conferencing system that leverages monocular video acquisition and free-viewpoint synthesis to enhance data and hardware efficiency. Towards an immersive conferencing experience, we explore an accelerated NeRF-based free-viewpoint synthesis algorithm for rendering photorealistic human dynamics more efficiently. We show that our algorithm achieves comparable rendering quality while performing training and inference 44.5% and 213% faster than state-of-the-art methods, respectively. Our exploration provides a design basis for constructing metaverse conferencing systems that can handle complex application scenarios, including dynamic scene relighting with customized themes and multi-user conferencing that harmonizes real-world people into an extended world.

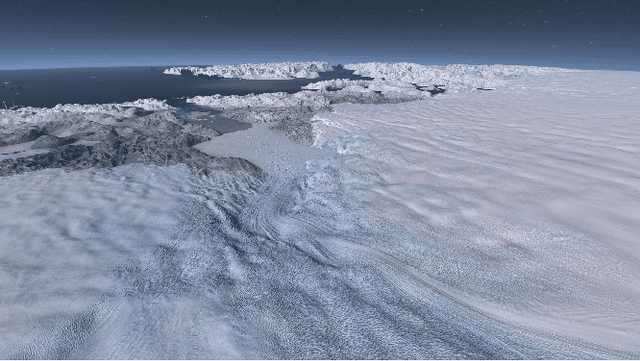

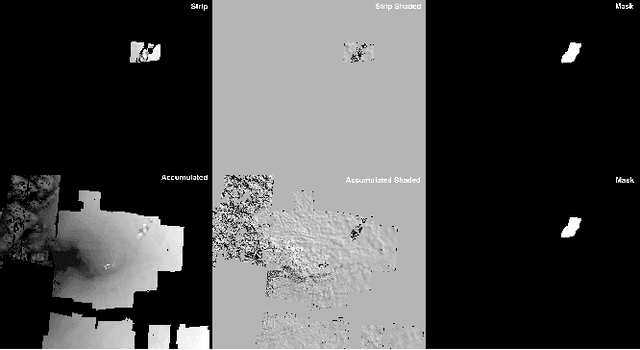

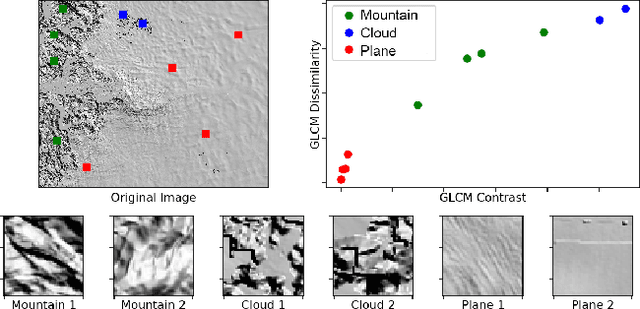

CloudFindr: A Deep Learning Cloud Artifact Masker for Satellite DEM Data

Oct 26, 2021

Abstract:Artifact removal is an integral component of cinematic scientific visualization, and is especially challenging with big datasets in which artifacts are difficult to define. In this paper, we describe a method for creating cloud artifact masks which can be used to remove artifacts from satellite imagery using a combination of traditional image processing together with deep learning based on U-Net. Compared to previous methods, our approach does not require multi-channel spectral imagery but performs successfully on single-channel Digital Elevation Models (DEMs). DEMs are a representation of the topography of the Earth and have a variety of applications including planetary science, geology, flood modeling, and city planning.

Deep Learning-Based Automated Image Segmentation for Concrete Petrographic Analysis

May 28, 2020

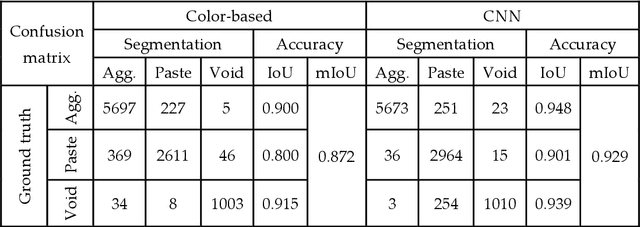

Abstract:The standard petrography test method for measuring air voids in concrete (ASTM C457) requires a meticulous and long examination of sample phase composition under a stereomicroscope. The high expertise and specialized equipment discourage this test for routine concrete quality control. Though the task can be alleviated with the aid of color-based image segmentation, additional surface color treatment is required. Recently, deep learning algorithms using convolutional neural networks (CNN) have achieved unprecedented segmentation performance on image testing benchmarks. In this study, we investigated the feasibility of using CNN to conduct concrete segmentation without the use of color treatment. The CNN demonstrated a strong potential to process a wide range of concretes, including those not involved in model training. The experimental results showed that CNN outperforms the color-based segmentation by a considerable margin, and has comparable accuracy to human experts. Furthermore, the segmentation time is reduced to mere seconds.
