Christopher Renton

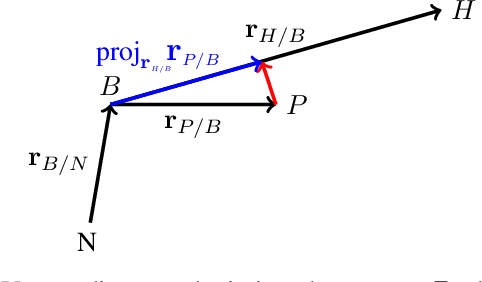

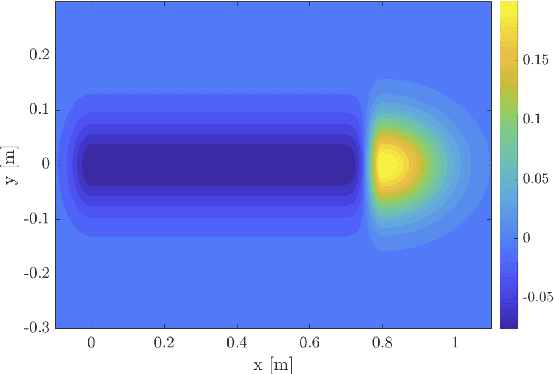

A Heteroscedastic Likelihood Model for Two-frame Optical Flow

Oct 14, 2020

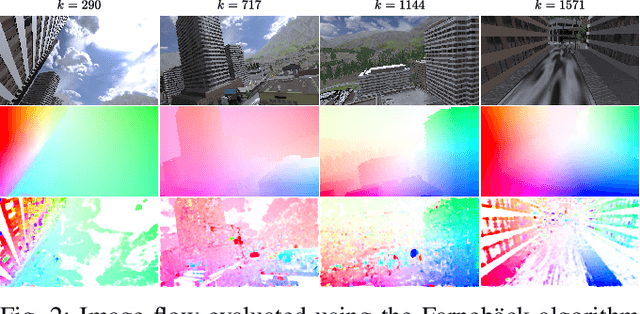

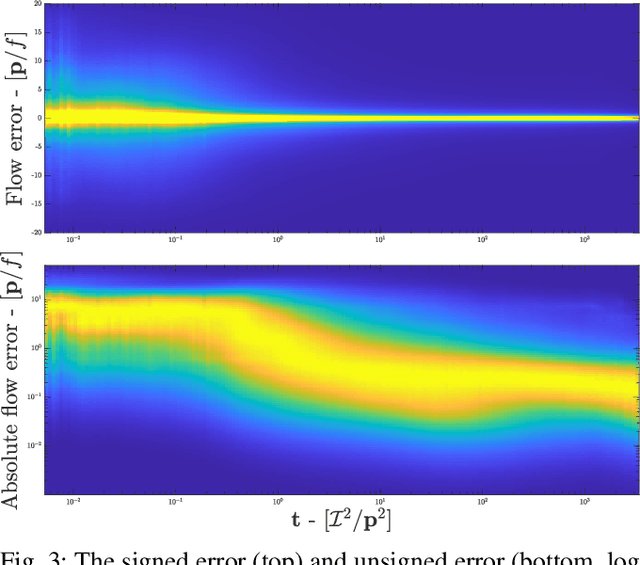

Abstract: Machine vision is an important sensing technology used in mobile robotic systems. Advancing the autonomy of such systems requires accurate characterisation of sensor uncertainty. Vision includes intrinsic uncertainty due to the camera sensor and extrinsic uncertainty due to environmental lighting and texture, both of which propagate through the image processing algorithms used to produce visual measurements. To faithfully characterise visual measurements, we must take these uncertainties into account. In this paper, we propose a new class of likelihood functions that characterises the error distribution of two-frame optical flow and allows a heteroscedastic dependence on texture. We employ the proposed class to characterise the Farneback and Lucas-Kanade optical flow algorithms and achieve close agreement with their respective empirical error distributions over a wide range of texture in a simulated environment. The utility of the proposed likelihood model is demonstrated in a visual odometry ego-motion simulation study, which shows a 30-83% reduction in position drift rate compared to traditional methods that assume Gaussian errors. The development of an empirically congruent likelihood model advances the requisite tool-set for vision-based Bayesian inference and enables sensor data fusion with GPS, LiDAR and IMU to advance robust autonomous navigation.

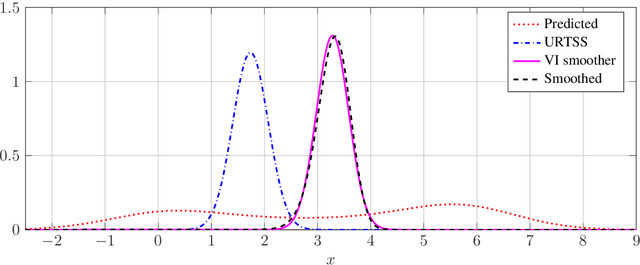

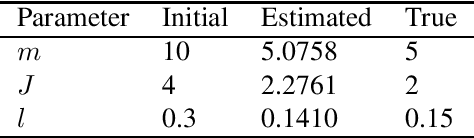

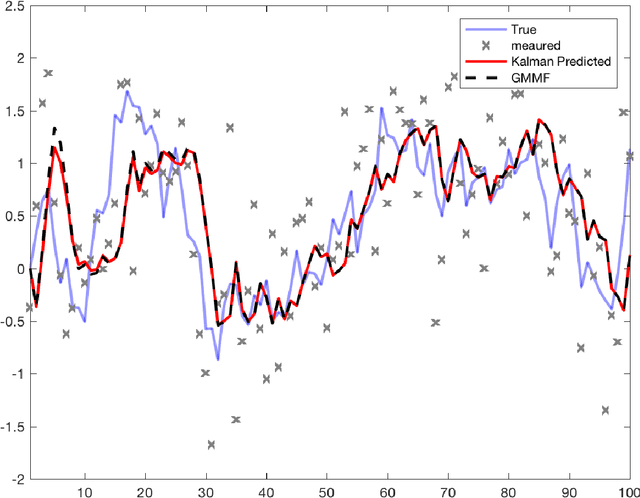

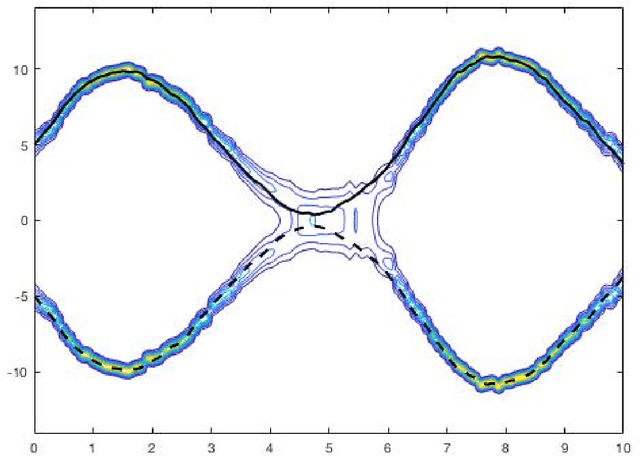

Constructing a variational family for nonlinear state-space models

Feb 07, 2020

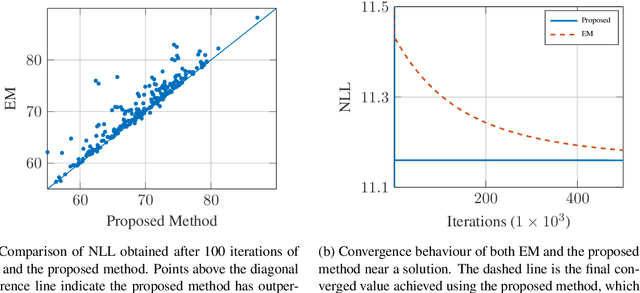

Abstract: We consider the problem of maximum likelihood parameter estimation for nonlinear state-space models. This is an important but challenging problem; the challenge stems from the intractable multidimensional integrals that must be solved in order to compute, and maximise, the likelihood. Here we present a new variational family in which variational inference is combined with tractable approximations of these integrals, resulting in a deterministic optimisation problem. Our developments also include a novel means of approximating the smoothed state distributions. We demonstrate our construction on several examples and show that it performs well compared to state-of-the-art methods on real data sets.

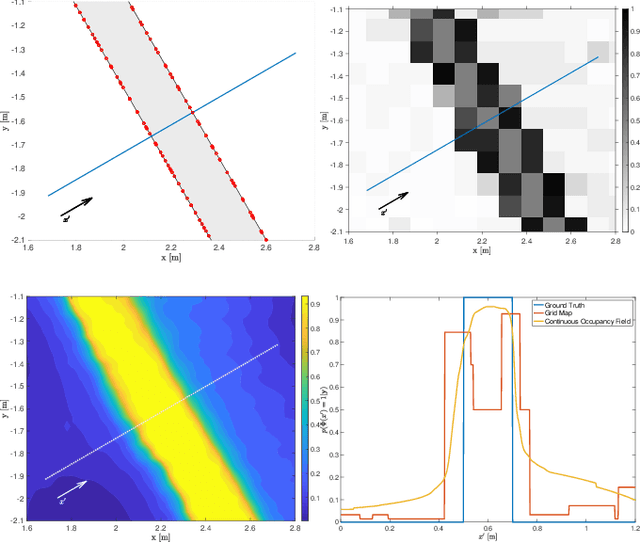

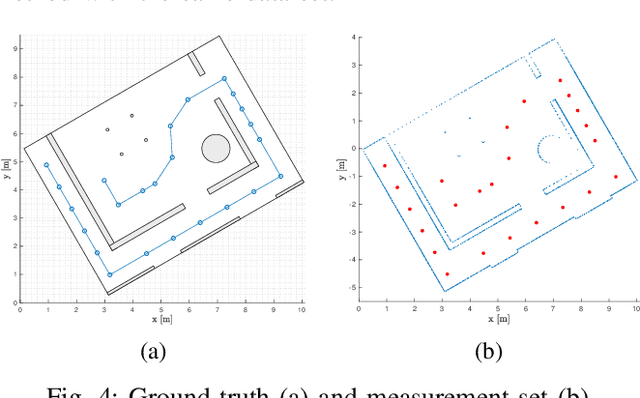

Learning Continuous Occupancy Maps with the Ising Process Model

Oct 18, 2019

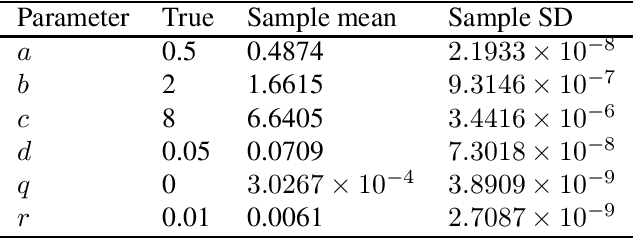

Abstract: We present a new method of learning a continuous occupancy field for use in robot navigation. Occupancy grid maps, or variants thereof, are possibly the most widely used and accepted method of building a map of a robot's environment. Various methods have been developed to learn continuous occupancy maps and have successfully resolved many of the shortcomings of grid mapping, namely, a priori discretisation and spatial correlation. However, most methods for producing a continuous occupancy field remain computationally expensive or heuristic in nature. Our method explores a generalisation of the so-called Ising model as a suitable candidate for modelling an occupancy field. We also present a unique kernel for use within our method that models range measurements. The method is attractive because it is computationally efficient and requires only a small number of hyperparameters, which can be learned quickly by maximising a pseudo-likelihood. The technique is demonstrated on a small simulated indoor environment with known ground truth, as well as on large indoor and outdoor areas using two common real data sets.

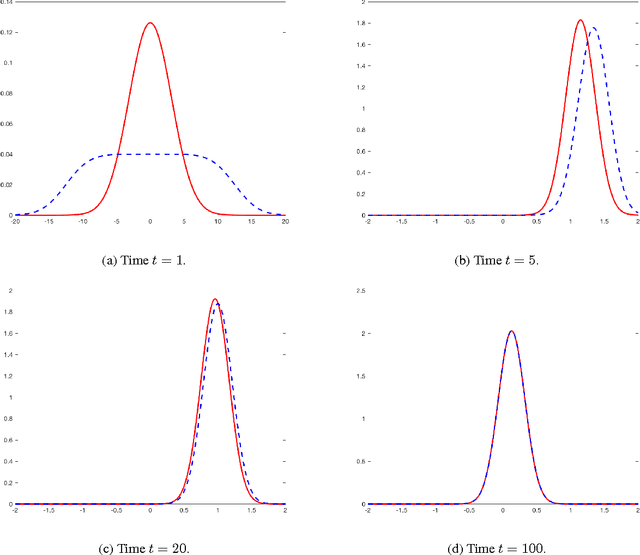

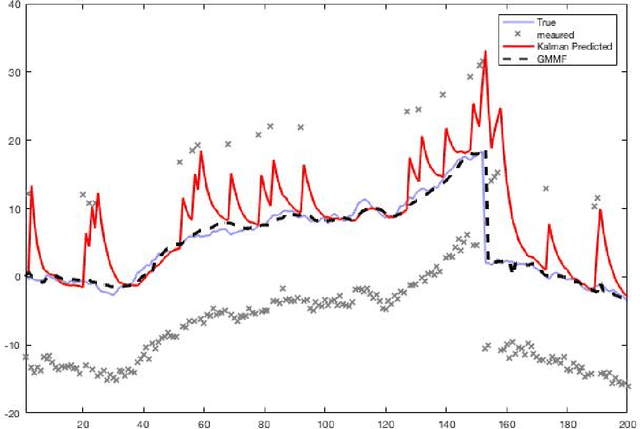

A Bayesian Filtering Algorithm for Gaussian Mixture Models

May 16, 2017

Abstract: A Bayesian filtering algorithm is developed for a class of state-space systems that can be modelled via Gaussian mixtures. In general, the exact solution to this filtering problem involves an exponential growth in the number of mixture terms; this is handled here by applying a Gaussian mixture reduction step after both the time and measurement updates. In addition, a square-root implementation of the unified algorithm is presented, and this algorithm is profiled on several simulated systems. These include state estimation for two non-linear systems that lie strictly outside the class considered in this paper.
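To make the idea of a mixture reduction step concrete, here is a minimal sketch for one-dimensional mixtures. It is not the paper's algorithm: the abstract does not say which reduction criterion is used, so the greedy pairwise merge, the `merge_cost` function, and all names below are assumptions chosen for illustration. The merge itself is the standard moment-matched one, which preserves the combined weight, mean and variance of the merged pair.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Component:
    """One term of a 1-D Gaussian mixture (illustrative type, not from the paper)."""
    weight: float
    mean: float
    var: float


def merge(a: Component, b: Component) -> Component:
    """Moment-matched merge: keeps the pair's total weight, mean and variance."""
    w = a.weight + b.weight
    mean = (a.weight * a.mean + b.weight * b.mean) / w
    var = (a.weight * (a.var + (a.mean - mean) ** 2)
           + b.weight * (b.var + (b.mean - mean) ** 2)) / w
    return Component(w, mean, var)


def merge_cost(a: Component, b: Component) -> float:
    """Assumed cost: weighted squared distance between the two means.
    A simple stand-in; the paper's actual criterion is not given in the abstract."""
    w = a.weight + b.weight
    return a.weight * b.weight / w * (a.mean - b.mean) ** 2


def reduce_mixture(components: List[Component], max_components: int) -> List[Component]:
    """Greedily merge the cheapest pair until at most max_components remain."""
    if max_components < 1:
        raise ValueError("max_components must be at least 1")
    comps = list(components)
    while len(comps) > max_components:
        # Exhaustive search over pairs for the lowest merge cost.
        best = None
        for i in range(len(comps)):
            for j in range(i + 1, len(comps)):
                cost = merge_cost(comps[i], comps[j])
                if best is None or cost < best[0]:
                    best = (cost, i, j)
        _, i, j = best
        merged = merge(comps[i], comps[j])
        comps = [c for k, c in enumerate(comps) if k not in (i, j)] + [merged]
    return comps


mixture = [
    Component(0.5, 0.0, 1.0),
    Component(0.3, 0.1, 1.0),
    Component(0.2, 5.0, 2.0),
]
reduced = reduce_mixture(mixture, max_components=2)
```

In a filter, a step like this would run after each time update and each measurement update, capping the number of terms so the mixture does not grow without bound. In this example the two nearby components at means 0.0 and 0.1 are merged, while the distant component at 5.0 is kept separate.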
