Christopher J. Kymn

Towards a Comprehensive Theory of Reservoir Computing

Nov 18, 2025

Abstract:In reservoir computing, an input sequence is processed by a recurrent neural network, the reservoir, which transforms it into a spatial pattern that a shallow readout network can then exploit for tasks such as memorization and time-series prediction or classification. Echo state networks (ESNs) are a model class in which the reservoir is a traditional artificial neural network. This class contains many model types, each with sets of hyperparameters. Selecting models and parameter settings for particular applications requires a theory for predicting and comparing performances. Here, we demonstrate that recent developments of perceptron theory can be used to predict the memory capacity and accuracy of a wide variety of ESN models, including reservoirs with linear neurons, sigmoid nonlinear neurons, different types of recurrent matrices, and different types of readout networks. Across thirty variants of ESNs, we show that empirical results consistently confirm the theory's predictions. As a practical demonstration, the theory is used to optimize memory capacity of an ESN in the entire joint parameter space. Further, guided by the theory, we propose a novel ESN model with a readout network that does not require training, and which outperforms earlier ESN models without training. Finally, we characterize the geometry of the readout networks in ESNs, which reveals that many ESN models exhibit a regular simplex geometry similar to that observed in the output weights of deep neural networks.

Efficient Hyperdimensional Computing with Modular Composite Representations

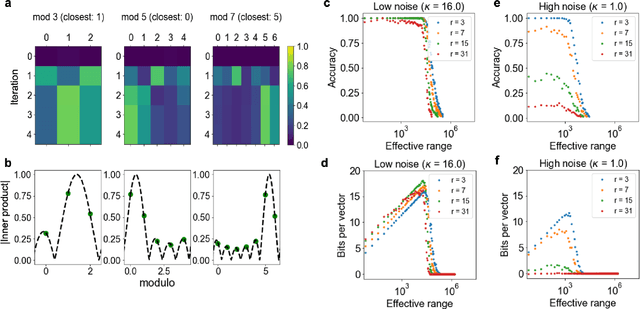

Nov 12, 2025

Abstract:The modular composite representation (MCR) is a computing model that represents information with high-dimensional integer vectors using modular arithmetic. Originally proposed as a generalization of the binary spatter code model, it aims to provide higher representational power while remaining a lighter alternative to models requiring high-precision components. Despite this potential, MCR has received limited attention. Systematic analyses of its trade-offs and comparisons with other models are lacking, sustaining the perception that its added complexity outweighs the improved expressivity. In this work, we revisit MCR by presenting its first extensive evaluation, demonstrating that it achieves a unique balance of capacity, accuracy, and hardware efficiency. Experiments measuring capacity demonstrate that MCR outperforms binary and integer vectors while approaching complex-valued representations at a fraction of their memory footprint. Evaluation on 123 datasets confirms consistent accuracy gains and shows that MCR can match the performance of binary spatter codes using up to 4x less memory. We investigate the hardware realization of MCR by showing that it maps naturally to digital logic and by designing the first dedicated accelerator. Evaluations on basic operations and 7 selected datasets demonstrate a speedup of up to 3 orders of magnitude and significant energy reductions compared to software implementation. When matched for accuracy against binary spatter codes, MCR achieves on average 3.08x faster execution and 2.68x lower energy consumption. These findings demonstrate that, although MCR requires more sophisticated operations than binary spatter codes, its modular arithmetic and higher per-component precision enable lower dimensionality. When realized with dedicated hardware, this results in a faster, more energy-efficient, and high-precision alternative to existing models.
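To make the idea of high-dimensional integer vectors with modular arithmetic concrete, here is a minimal sketch. It assumes that binding is component-wise addition modulo `MOD` and that dissimilarity is a circular (modular) Manhattan distance; the abstract does not spell out either operation, and the names `bind`, `unbind`, `modular_distance`, `DIM`, and `MOD` are illustrative choices, not the paper's API.

```python
import random

def random_vector(dim, modulus, rng):
    """Draw a random integer vector with components in [0, modulus)."""
    return [rng.randrange(modulus) for _ in range(dim)]

def bind(a, b, modulus):
    """Assumed binding: component-wise addition modulo `modulus`."""
    return [(x + y) % modulus for x, y in zip(a, b)]

def unbind(c, b, modulus):
    """Inverse of the assumed binding: component-wise modular subtraction."""
    return [(x - y) % modulus for x, y in zip(c, b)]

def modular_distance(a, b, modulus):
    """Sum of circular distances between components (0 means identical)."""
    total = 0
    for x, y in zip(a, b):
        d = (x - y) % modulus
        total += min(d, modulus - d)
    return total

# Hypothetical sizes chosen only for illustration.
DIM, MOD = 1024, 16
rng = random.Random(0)
role = random_vector(DIM, MOD, rng)
filler = random_vector(DIM, MOD, rng)

bound = bind(role, filler, MOD)        # looks unrelated to both inputs
recovered = unbind(bound, role, MOD)   # exact recovery of the filler
```

With `MOD = 2` these operations reduce to XOR and Hamming distance, which is one way to read the statement that MCR generalizes the binary spatter code.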

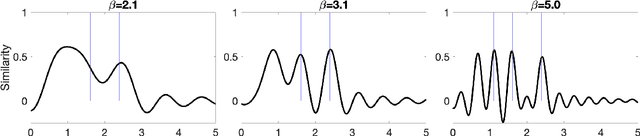

Binding in hippocampal-entorhinal circuits enables compositionality in cognitive maps

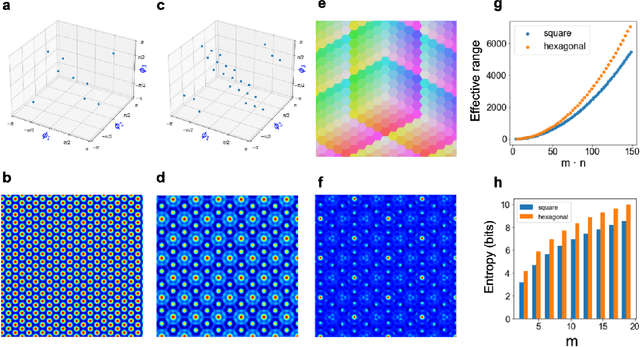

Jun 27, 2024

Abstract:We propose a normative model for spatial representation in the hippocampal formation that combines optimality principles, such as maximizing coding range and spatial information per neuron, with an algebraic framework for computing in distributed representation. Spatial position is encoded in a residue number system, with individual residues represented by high-dimensional, complex-valued vectors. These are composed into a single vector representing position by a similarity-preserving, conjunctive vector-binding operation. Self-consistency between the representations of the overall position and of the individual residues is enforced by a modular attractor network whose modules correspond to the grid cell modules in entorhinal cortex. The vector binding operation can also associate different contexts to spatial representations, yielding a model for entorhinal cortex and hippocampus. We show that the model achieves normative desiderata including superlinear scaling of patterns with dimension, robust error correction, and hexagonal, carry-free encoding of spatial position. These properties in turn enable robust path integration and association with sensory inputs. More generally, the model formalizes how compositional computations could occur in the hippocampal formation and leads to testable experimental predictions.
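As background for the residue number system mentioned above, the following sketch shows the plain integer version only, with none of the vector or attractor machinery of the model. The moduli `(3, 5, 7)` and the function names are hypothetical choices for illustration. It shows two properties the abstract relies on: the coding range is the product of the moduli, and updates are carry-free because each residue is changed independently.

```python
from math import prod

# Hypothetical pairwise-coprime moduli; the coding range is their product (105).
MODULI = (3, 5, 7)

def encode(x):
    """Represent an integer by its residues with respect to each modulus."""
    return tuple(x % m for m in MODULI)

def add(a, b):
    """Carry-free addition: each residue is updated on its own."""
    return tuple((x + y) % m for x, y, m in zip(a, b, MODULI))

def decode(residues):
    """Recover the integer in [0, prod(MODULI)) by exhaustive search."""
    for x in range(prod(MODULI)):
        if encode(x) == residues:
            return x
    raise ValueError("residues do not match any value in the coding range")

position = encode(17)
step = encode(30)
new_position = decode(add(position, step))
```

Exhaustive decoding is used here only for brevity; it is a stand-in, not the decoding mechanism of the model described in the abstract.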

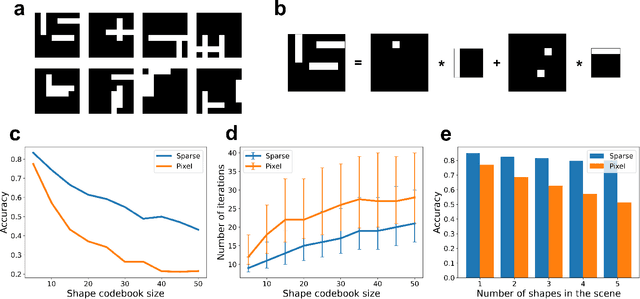

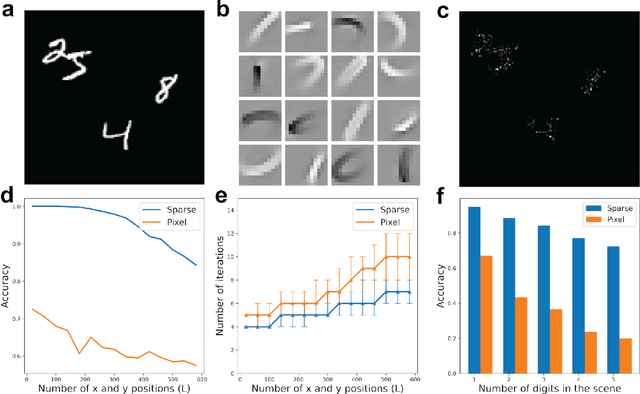

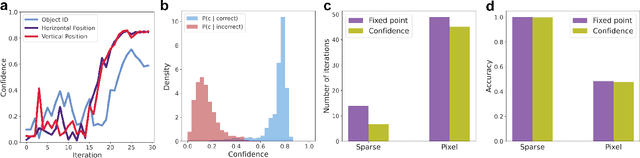

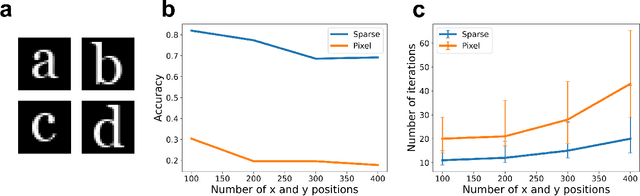

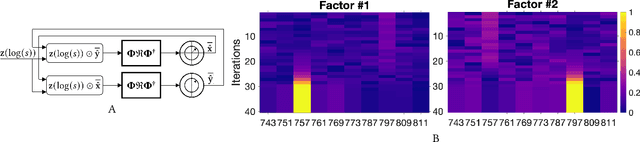

Compositional Factorization of Visual Scenes with Convolutional Sparse Coding and Resonator Networks

Apr 29, 2024

Abstract:We propose a system for visual scene analysis and recognition based on encoding the sparse, latent feature-representation of an image into a high-dimensional vector that is subsequently factorized to parse scene content. The sparse feature representation is learned from image statistics via convolutional sparse coding, while scene parsing is performed by a resonator network. The integration of sparse coding with the resonator network increases the capacity of distributed representations and reduces collisions in the combinatorial search space during factorization. We find that for this problem the resonator network is capable of fast and accurate vector factorization, and we develop a confidence-based metric that assists in tracking the convergence of the resonator network.

Computing with Residue Numbers in High-Dimensional Representation

Nov 08, 2023

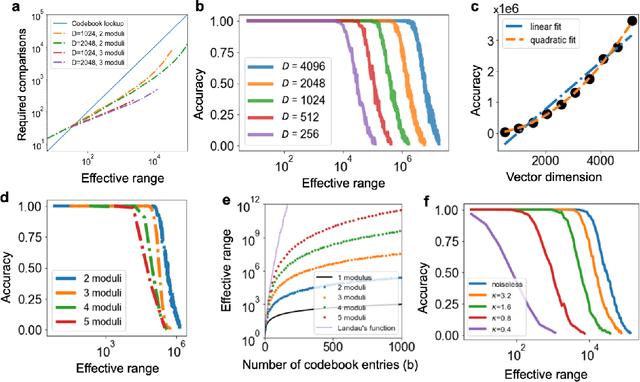

Abstract:We introduce Residue Hyperdimensional Computing, a computing framework that unifies residue number systems with an algebra defined over random, high-dimensional vectors. We show how residue numbers can be represented as high-dimensional vectors in a manner that allows algebraic operations to be performed with component-wise, parallelizable operations on the vector elements. The resulting framework, when combined with an efficient method for factorizing high-dimensional vectors, can represent and operate on numerical values over a large dynamic range using vastly fewer resources than previous methods, and it exhibits impressive robustness to noise. We demonstrate the potential for this framework to solve computationally difficult problems in visual perception and combinatorial optimization, showing improvement over baseline methods. More broadly, the framework provides a possible account for the computational operations of grid cells in the brain, and it suggests new machine learning architectures for representing and manipulating numerical data.
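The sketch below illustrates one way residue numbers can be carried by random high-dimensional vectors so that addition becomes a component-wise operation. It assumes each component is a root of unity for one modulus, that an integer is encoded by raising the base components to that integer's power, and that vectors for different moduli are combined by component-wise multiplication. The moduli, dimension, and function names are hypothetical, and the factorization step mentioned in the abstract is not shown.

```python
import cmath
import random

MODULI = (3, 5)   # hypothetical coprime moduli; codes repeat with period 15
DIM = 2000        # hypothetical vector dimension
rng = random.Random(0)

# For each modulus m, every component holds a random exponent k in [0, m),
# standing for the m-th root of unity exp(2*pi*i*k/m).
BASES = [[rng.randrange(m) for _ in range(DIM)] for m in MODULI]

def encode(x):
    """Encode integer x as the component-wise product of per-modulus phasors."""
    vec = [1 + 0j] * DIM
    for m, base in zip(MODULI, BASES):
        vec = [v * cmath.exp(2j * cmath.pi * ((k * x) % m) / m)
               for v, k in zip(vec, base)]
    return vec

def bind(u, v):
    """Component-wise multiplication; on these codes it adds the encoded values."""
    return [a * b for a, b in zip(u, v)]

def similarity(u, v):
    """Normalized real part of the inner product (1.0 for identical codes)."""
    return sum(a * b.conjugate() for a, b in zip(u, v)).real / len(u)

sum_code = bind(encode(4), encode(9))
match = similarity(sum_code, encode(13))      # close to 1: 4 + 9 = 13
mismatch = similarity(encode(4), encode(9))   # close to 0: distinct values
```

Because every operation acts on vector elements independently, the addition above parallelizes trivially, which is the property the abstract highlights.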

Efficient Decoding of Compositional Structure in Holistic Representations

May 26, 2023

Abstract:We investigate the task of retrieving information from compositional distributed representations formed by Hyperdimensional Computing/Vector Symbolic Architectures and present novel techniques which achieve new information rate bounds. First, we provide an overview of the decoding techniques that can be used to approach the retrieval task. The techniques are categorized into four groups. We then evaluate the considered techniques in several settings that involve, e.g., inclusion of external noise and storage elements with reduced precision. In particular, we find that the decoding techniques from the sparse coding and compressed sensing literature (rarely used for Hyperdimensional Computing/Vector Symbolic Architectures) are also well-suited for decoding information from the compositional distributed representations. Combining these decoding techniques with interference cancellation ideas from communications improves previously reported bounds (Hersche et al., 2021) of the information rate of the distributed representations from 1.20 to 1.40 bits per dimension for smaller codebooks and from 0.60 to 1.26 bits per dimension for larger codebooks.

* 28 pages, 5 figures

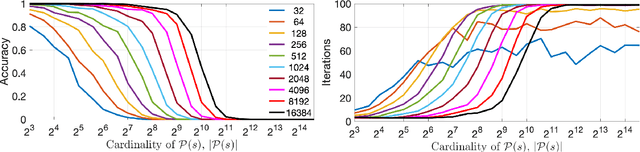

Integer Factorization with Compositional Distributed Representations

Mar 02, 2022

Abstract:In this paper, we present an approach to integer factorization using distributed representations formed with Vector Symbolic Architectures. The approach formulates integer factorization in a manner such that it can be solved using neural networks and potentially implemented on parallel neuromorphic hardware. We introduce a method for encoding numbers in distributed vector spaces and explain how the resonator network can solve the integer factorization problem. We evaluate the approach on factorization of semiprimes by measuring the factorization accuracy versus the scale of the problem. We also demonstrate how the proposed approach generalizes beyond the factorization of semiprimes; in principle, it can be used for factorization of any composite number. This work demonstrates how a well-known combinatorial search problem may be formulated and solved within the framework of Vector Symbolic Architectures, and it opens the door to solving similarly difficult problems in other domains.

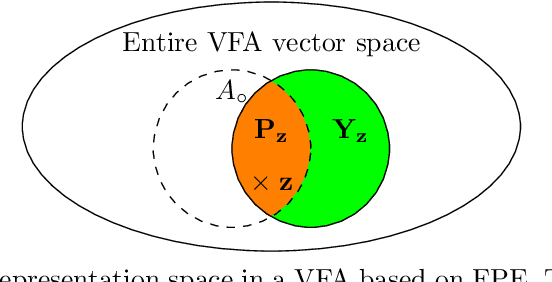

Computing on Functions Using Randomized Vector Representations

Sep 08, 2021

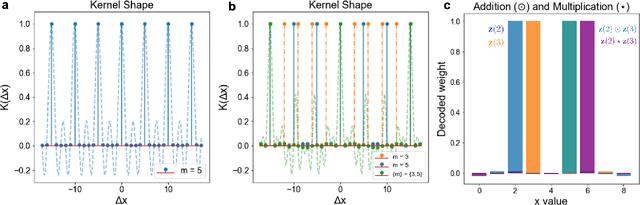

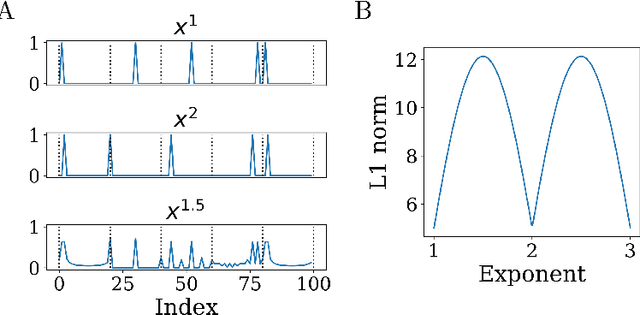

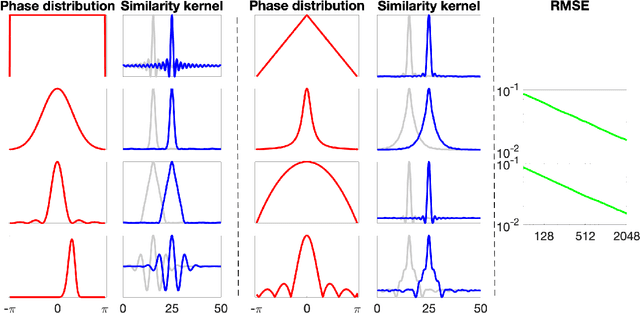

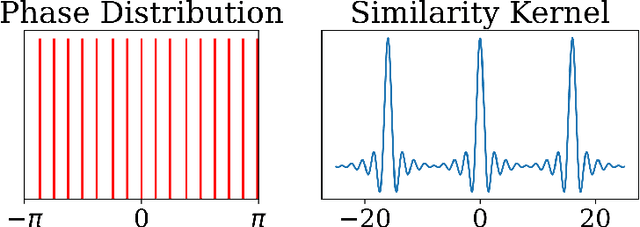

Abstract:Vector space models for symbolic processing that encode symbols by random vectors have been proposed in cognitive science and connectionist communities under the names Vector Symbolic Architecture (VSA), and, synonymously, Hyperdimensional (HD) computing. In this paper, we generalize VSAs to function spaces by mapping continuous-valued data into a vector space such that the inner product between the representations of any two data points represents a similarity kernel. By analogy to VSA, we call this new function encoding and computing framework Vector Function Architecture (VFA). In VFAs, vectors can represent individual data points as well as elements of a function space (a reproducing kernel Hilbert space). The algebraic vector operations, inherited from VSA, correspond to well-defined operations in function space. Furthermore, we study a previously proposed method for encoding continuous data, fractional power encoding (FPE), which uses exponentiation of a random base vector to produce randomized representations of data points and fulfills the kernel properties for inducing a VFA. We show that the distribution from which elements of the base vector are sampled determines the shape of the FPE kernel, which in turn induces a VFA for computing with band-limited functions. In particular, VFAs provide an algebraic framework for implementing large-scale kernel machines with random features, extending Rahimi and Recht, 2007. Finally, we demonstrate several applications of VFA models to problems in image recognition, density estimation and nonlinear regression. Our analyses and results suggest that VFAs constitute a powerful new framework for representing and manipulating functions in distributed neural systems, with myriad applications in artificial intelligence.
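A small sketch may help show how fractional power encoding induces a similarity kernel. It assumes the base vector consists of unit-magnitude complex numbers whose phases are drawn uniformly from [-π, π); under that assumption the expected inner product between the encodings of x and y is sin(πd)/(πd) with d = x - y. The dimension and the names `fpe`, `kernel`, and `sinc` are illustrative, and other phase distributions would give other kernel shapes.

```python
import cmath
import math
import random

DIM = 4000  # hypothetical dimension; larger values tighten the approximation
rng = random.Random(0)

# Phases of the random base vector (assumed uniform on [-pi, pi)).
THETAS = [rng.uniform(-math.pi, math.pi) for _ in range(DIM)]

def fpe(x):
    """Raise each base component exp(i*theta) to the real-valued power x."""
    return [cmath.exp(1j * t * x) for t in THETAS]

def kernel(x, y):
    """Normalized inner product between the encodings of x and y."""
    return sum(a * b.conjugate() for a, b in zip(fpe(x), fpe(y))).real / DIM

def sinc(d):
    """Expected kernel for uniform phases: sin(pi*d) / (pi*d)."""
    return 1.0 if d == 0 else math.sin(math.pi * d) / (math.pi * d)

empirical = kernel(1.0, 1.5)   # depends only on the difference 0.5
expected = sinc(0.5)           # equals 2/pi
```

Since the component-wise product of `fpe(x)` and `fpe(y)` equals `fpe(x + y)`, binding in this encoding corresponds to a shift in the encoded value.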
