Christine Eilers

On the Importance of Patient Acceptance for Medical Robotic Imaging

Feb 13, 2023

Abstract:Purpose: Mutual acceptance is required for any human-to-human interaction. One would therefore assume that this also holds for robot-patient interactions. However, the medical robotic imaging field lacks research on acceptance. This work therefore aims to analyze the influence of robot-patient interactions on acceptance in an exemplary medical robotic imaging system. Methods: We designed an interactive human-robot scenario, including auditory and gestural cues, and compared this pipeline to a non-interactive scenario. Both scenarios were evaluated with a questionnaire to measure acceptance. Heart rate monitoring was also used to measure stress. The impact of the interaction was quantified in the use case of robotic ultrasound scanning of the neck. Results: We conducted the first user study on patient acceptance of robotic ultrasound. Results show that verbal interactions impact trust more than gestural ones. Furthermore, through interaction, the robot is perceived as friendlier. The heart rate data indicate that robot-patient interaction could reduce stress. Conclusion: Robot-patient interactions are crucial for improving acceptance in medical robotic imaging systems. While verbal interaction is most important, the preferred interaction type and content are participant-dependent. Heart rate values indicate that such interactions can also reduce stress. Overall, this initial work showed that interactions improve patient acceptance in medical robotic imaging, and that other medical robot-patient systems can benefit from the design proposals to enhance acceptance in interactive scenarios.

Ultra-NeRF: Neural Radiance Fields for Ultrasound Imaging

Jan 25, 2023

Abstract:We present a physics-enhanced implicit neural representation (INR) for ultrasound (US) imaging that learns tissue properties from overlapping US sweeps. Our proposed method leverages ray-tracing-based neural rendering for novel-view US synthesis. Recent publications demonstrated that INR models can encode a representation of a three-dimensional scene from a set of two-dimensional US frames. However, these models fail to consider the view-dependent changes in appearance and geometry intrinsic to US imaging. In our work, we discuss direction-dependent changes in the scene and show that physics-inspired rendering improves the fidelity of US image synthesis. In particular, we demonstrate experimentally that our proposed method generates geometrically accurate B-mode images for regions whose representation is ambiguous owing to view-dependent differences in the US images. We conduct our experiments using simulated B-mode US sweeps of the liver and acquired US sweeps of a spine phantom tracked with a robotic arm. The experiments corroborate that our method generates US frames that enable consistent volume compounding from previously unseen views. To the best of our knowledge, the presented work is the first to address view-dependent US image synthesis using INR.
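
To illustrate what "ray-tracing-based rendering" of ultrasound can mean, here is a minimal sketch of a generic pulse-echo model along a single scanline. It is not the Ultra-NeRF formulation: in an INR, the per-sample tissue parameters would be predicted by a network queried at points along the ray, whereas here they are passed in directly. The function name `render_scanline`, its parameters and the simplifications (one-way attenuation only, no scattering) are illustrative assumptions. Because the intensity reaching a sample depends on everything the ray has already traversed, the same tissue point can produce a different echo from a different probe position, which is one source of the view dependence discussed above.

```python
import math
from typing import List


def render_scanline(attenuation: List[float], reflectivity: List[float], dz: float = 1.0) -> List[float]:
    """Echo intensity at each sample along one ultrasound ray (toy model).

    attenuation[i]  -- attenuation coefficient at sample i, per unit depth (assumed)
    reflectivity[i] -- fraction of incoming intensity reflected at sample i, in [0, 1] (assumed)
    dz              -- spacing between samples
    """
    if len(attenuation) != len(reflectivity):
        raise ValueError("attenuation and reflectivity must have the same length")
    intensity = 1.0  # normalized intensity entering the first sample
    echoes: List[float] = []
    for alpha, r in zip(attenuation, reflectivity):
        # Portion of the incoming energy reflected back towards the probe.
        echoes.append(intensity * r)
        # Energy continuing deeper: remove the reflected part, then attenuate over dz.
        intensity *= (1.0 - r) * math.exp(-alpha * dz)
    return echoes


# A strong reflector (e.g. a bone surface) at sample 3 leaves only weak echoes behind it,
# i.e. an acoustic shadow.
print(render_scanline([0.1] * 6, [0.0, 0.0, 0.0, 0.9, 0.2, 0.2]))
```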

RSV: Robotic Sonography for Thyroid Volumetry

Dec 13, 2021

Abstract:In nuclear medicine, radioiodine therapy is prescribed to treat diseases such as hyperthyroidism. The calculation of the prescribed dose depends, among other factors, on the thyroid volume, which is currently estimated using conventional 2D ultrasound imaging. However, this modality is inherently user-dependent, resulting in high variability in volume estimates. To increase reproducibility and consistency, we uniquely combine neural network-based segmentation with automatic robotic ultrasound scanning for thyroid volumetry. The robotic acquisition uses a 6-DOF robotic arm with an attached ultrasound probe. The arm's motion is guided by an online segmentation of each thyroid lobe and by the appearance of the US image. During post-processing, the US images are segmented to obtain a volume estimate. In an ablation study, we demonstrated that, in terms of volumetric accuracy, the motion guidance algorithms outperform a naive linear motion of the robot arm. In a user study on a phantom, we compared conventional 2D ultrasound measurements with our robotic system. For expert ultrasound users, the mean volume measurement error relative to the ground truth decreased significantly, from 20.85 ± 16.10% to only 8.23 ± 3.10%. The effect was even more pronounced for non-expert users, for whom the mean error improved by as much as 85% with the robotic system, which clearly shows the advantages of robotic support.
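
The abstract reports volume errors relative to the ground truth as mean ± standard deviation but does not define the metric. The sketch below assumes the common choice of absolute percent error per measurement, summarized by its mean and sample standard deviation; the function names are hypothetical. The last function only restates the reported expert-user numbers as a relative reduction and does not attempt to reproduce the 85% figure for non-experts, whose definition is not given.

```python
import statistics
from typing import List, Tuple


def volume_error_percent(measured_ml: float, ground_truth_ml: float) -> float:
    """Absolute volume error relative to the ground-truth volume, in percent (assumed metric)."""
    if ground_truth_ml <= 0:
        raise ValueError("ground-truth volume must be positive")
    return abs(measured_ml - ground_truth_ml) / ground_truth_ml * 100.0


def summarize_errors(measured_ml: List[float], ground_truth_ml: List[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation of the per-measurement percent errors."""
    if len(measured_ml) != len(ground_truth_ml) or len(measured_ml) < 2:
        raise ValueError("need at least two paired measurements")
    errors = [volume_error_percent(m, g) for m, g in zip(measured_ml, ground_truth_ml)]
    return statistics.mean(errors), statistics.stdev(errors)


def relative_reduction_percent(before: float, after: float) -> float:
    """How much smaller `after` is than `before`, as a percentage of `before`."""
    return (before - after) / before * 100.0


# Reported expert-user mean errors: 20.85% (2D) vs. 8.23% (robotic).
print(round(relative_reduction_percent(20.85, 8.23), 1))  # 60.5
```

Under these assumptions, the expert-user mean error shrinks to roughly 40% of its original value.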

Tracked 3D Ultrasound and Deep Neural Network-based Thyroid Segmentation reduce Interobserver Variability in Thyroid Volumetry

Aug 10, 2021

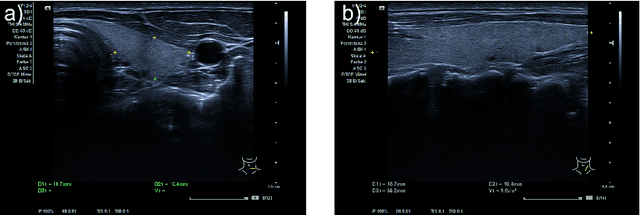

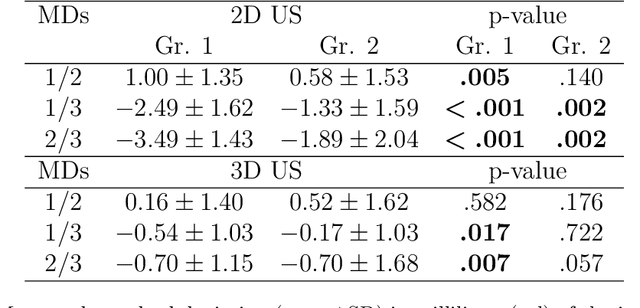

Abstract:Background: Thyroid volumetry is crucial in the diagnosis, treatment and monitoring of thyroid diseases. However, conventional thyroid volumetry with 2D ultrasound is highly operator-dependent. This study compares 2D ultrasound with tracked 3D ultrasound combined with automatic, deep neural network-based thyroid segmentation with respect to inter- and intraobserver variability, time and accuracy. MRI served as the volume reference. Methods: 28 healthy volunteers were scanned with 2D and 3D ultrasound as well as with MRI. Three physicians (MD 1, 2, 3) with different levels of experience (6, 4 and 1 years) each performed three 2D ultrasound and three tracked 3D ultrasound scans on each volunteer. In the 2D scans, the thyroid lobe volumes were calculated with the ellipsoid formula. A convolutional deep neural network (CNN) segmented the 3D thyroid lobes automatically. On MRI (T1 VIBE sequence), the thyroid was manually segmented by an experienced medical doctor. Results: The trained CNN reached a Dice score of 0.94. Interobserver variability between pairs of MDs showed mean differences (2D vs. 3D) of 0.58 ml vs. 0.52 ml (MD1 vs. 2), -1.33 ml vs. -0.17 ml (MD1 vs. 3) and -1.89 ml vs. -0.70 ml (MD2 vs. 3). Paired samples t-tests showed significant differences in two comparisons for 2D and none for 3D. Intraobserver variability was similar for 2D and 3D ultrasound. Comparing ultrasound volumes with MRI volumes using paired samples t-tests showed a significant difference for the 2D volumetry of all MDs and no significant difference for 3D ultrasound. Acquisition time was significantly shorter for 3D ultrasound. Conclusion: Tracked 3D ultrasound combined with CNN segmentation significantly reduces interobserver variability in thyroid volumetry and increases measurement accuracy while shortening acquisition time.
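
The abstract names two quantities without spelling them out: the ellipsoid formula used for 2D volumetry and the Dice score used to evaluate the CNN. The sketch below assumes the standard ellipsoid form V = π/6 · L · W · D per lobe (with diameters in cm, so the result is in ml) and the usual Dice definition 2|A∩B| / (|A| + |B|) on flattened binary masks. The function names are hypothetical, and returning 1.0 for two empty masks is a design choice, not something stated in the study.

```python
import math
from typing import Sequence


def ellipsoid_volume_ml(length_cm: float, width_cm: float, depth_cm: float) -> float:
    """Lobe volume from three orthogonal diameters: V = pi/6 * L * W * D (1 cm^3 = 1 ml)."""
    return math.pi / 6.0 * length_cm * width_cm * depth_cm


def dice_score(pred: Sequence[int], target: Sequence[int]) -> float:
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two flattened binary masks."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    # Convention (assumed): two empty masks count as perfect agreement.
    return 1.0 if total == 0 else 2.0 * intersection / total


print(round(ellipsoid_volume_ml(5.0, 2.0, 2.0), 2))  # 10.47
print(round(dice_score([1, 1, 0, 0], [1, 0, 0, 0]), 3))  # 0.667
```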

Rethinking Ultrasound Augmentation: A Physics-Inspired Approach

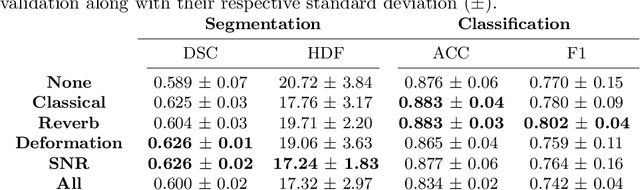

May 05, 2021

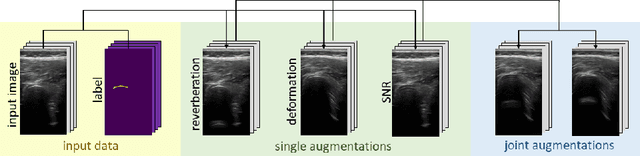

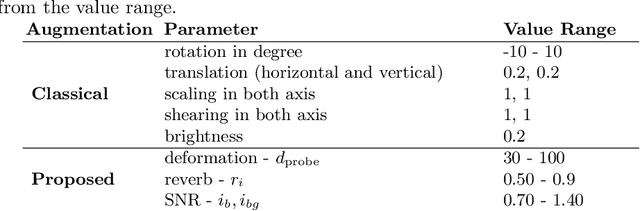

Abstract:Medical ultrasound (US), despite its wide use, is characterized by artifacts and operator dependency. These attributes hinder the collection and use of US datasets for training deep neural networks in computer-assisted intervention systems. Data augmentation is commonly used to enhance model generalization and performance. However, common data augmentation techniques, such as affine transformations, do not align with the physics of US and, when used carelessly, can lead to unrealistic US images. To this end, we propose a set of physics-inspired transformations, including deformation, reverb and signal-to-noise ratio, that we apply to US B-mode images for data augmentation. We evaluate our method on a new spine US dataset for the tasks of bone segmentation and classification.
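
To show why a physics-inspired transform differs from an affine one, here is a hypothetical sketch of a reverberation-style augmentation. It is not the transform proposed in the paper. It relies on the known behaviour that reverberation between the probe and a strong reflector at depth d produces progressively weaker echoes near 2d, 3d and so on. The function `add_reverberation`, its parameters, the "brightest pixel per column above a threshold" reflector detection and the geometric decay are all illustrative assumptions. The image is a list of rows indexed by depth, with intensities in [0, 1].

```python
from typing import List

Image = List[List[float]]  # image[depth][column], intensities in [0, 1] (assumed layout)


def add_reverberation(image: Image, decay: float = 0.5, copies: int = 2, threshold: float = 0.8) -> Image:
    """Return a copy of `image` with faded echoes of each column's strongest
    reflector added at integer multiples of its depth (simplified reverberation)."""
    depth = len(image)
    out = [row[:] for row in image]  # never modify the input image
    if depth == 0:
        return out
    for col in range(len(image[0])):
        column = [image[z][col] for z in range(depth)]
        z_peak = max(range(depth), key=lambda z: column[z])  # first brightest sample
        peak = column[z_peak]
        if peak < threshold or z_peak == 0:
            continue  # no strong reflector in this scanline (or it sits at the probe face)
        for k in range(2, copies + 2):
            z = k * z_peak  # k-th multiple of the reflector depth
            if z >= depth:
                break
            echo = peak * decay ** (k - 1)  # each extra bounce weakens the echo
            out[z][col] = min(1.0, out[z][col] + echo)
    return out


# One column with a bright reflector at depth 2, one column of uniform low speckle.
img = [[0.0, 0.1] for _ in range(8)]
img[2][0] = 1.0
print([row[0] for row in add_reverberation(img)])  # [0.0, 0.0, 1.0, 0.0, 0.5, 0.0, 0.25, 0.0]
```

Unlike a random rotation or shear, this kind of transform keeps the depth axis aligned with the beam direction, so the augmented image still follows the imaging geometry.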
