Christian Lessig

ArchesWeather & ArchesWeatherGen: a deterministic and generative model for efficient ML weather forecasting

Dec 17, 2024

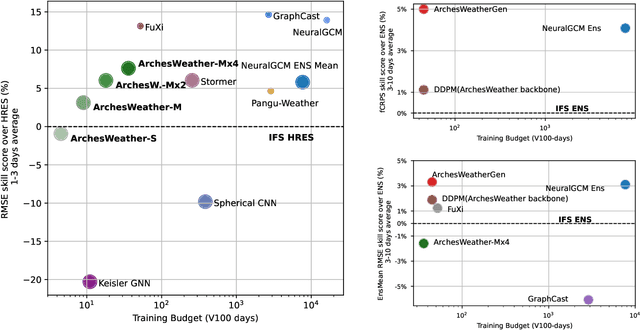

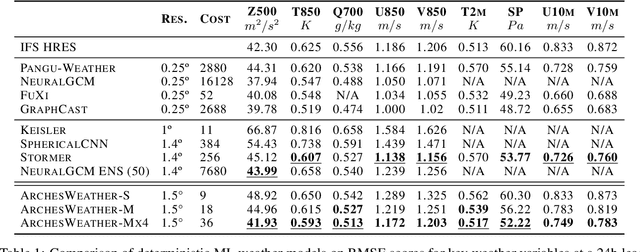

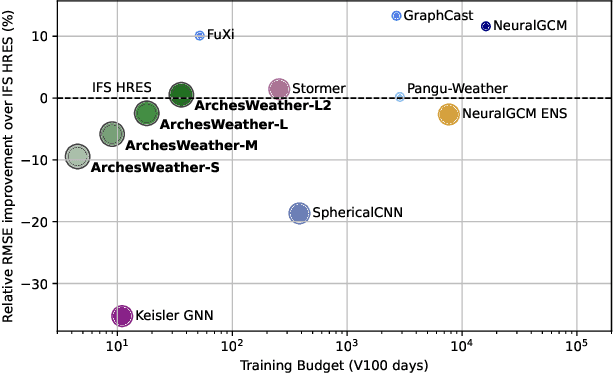

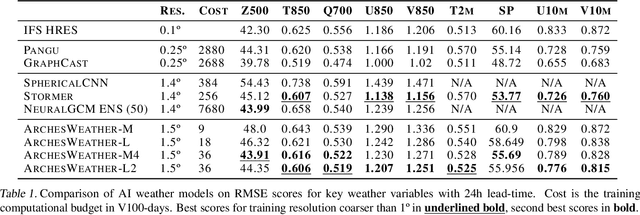

Abstract: Weather forecasting plays a vital role in today's society, from agriculture and logistics to predicting the output of renewable energies, and preparing for extreme weather events. Deep learning weather forecasting models trained with the next state prediction objective on ERA5 have shown great success compared to numerical global circulation models. However, for a wide range of applications, being able to provide representative samples from the distribution of possible future weather states is critical. In this paper, we propose a methodology to leverage deterministic weather models in the design of probabilistic weather models, leading to improved performance and reduced computing costs. We first introduce ArchesWeather, a transformer-based deterministic model that improves upon Pangu-Weather by removing overrestrictive inductive priors. We then design a probabilistic weather model called ArchesWeatherGen based on flow matching, a modern variant of diffusion models, that is trained to project ArchesWeather's predictions to the distribution of ERA5 weather states. ArchesWeatherGen is a true stochastic emulator of ERA5 and surpasses IFS ENS and NeuralGCM on all WeatherBench headline variables (except for NeuralGCM's geopotential). Our work also aims to democratize the use of deterministic and generative machine learning models in weather forecasting research, with academic computing resources. All models are trained at 1.5° resolution, with a training budget of ~9 V100 days for ArchesWeather and ~45 V100 days for ArchesWeatherGen. For inference, ArchesWeatherGen generates 15-day weather trajectories at a rate of 1 minute per ensemble member on an A100 GPU card. To make our work fully reproducible, our code and models are open source, including the complete pipeline for data preparation, training, and evaluation, at https://github.com/INRIA/geoarches .
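To make the flow matching idea mentioned in this abstract more concrete, here is a minimal sketch of the generic linear-path formulation: a point on the path between a noise sample and a data sample, the velocity a model would be trained to regress, and Euler integration of that velocity to produce a sample. This is an illustration under simplifying assumptions, not the ArchesWeatherGen training code (which lives in the linked repository); the function names `linear_path`, `target_velocity`, and `euler_integrate` are hypothetical, and the true velocity stands in for a trained network.

```python
def linear_path(x0, x1, t):
    """Point on the straight line from x0 (noise) to x1 (data) at time t in [0, 1]."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]


def target_velocity(x0, x1):
    """Regression target for the linear path: the constant velocity x1 - x0."""
    return [b - a for a, b in zip(x0, x1)]


def euler_integrate(x0, velocity_fn, num_steps):
    """Integrate dx/dt = velocity_fn(x, t) from t=0 to t=1 with explicit Euler steps."""
    x = list(x0)
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = k * dt
        v = velocity_fn(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


# Toy 3-dimensional "states"; real weather states are far larger (assumption for illustration).
noise = [0.5, -1.0, 2.0]
data = [1.0, 0.0, -1.0]

# In training, a network would be fit (e.g. with a squared error) to predict this
# velocity from (x_t, t) plus any conditioning; here the exact velocity is used instead.
v_true = target_velocity(noise, data)
sample = euler_integrate(noise, lambda x, t: v_true, num_steps=10)
```

With the exact velocity, integrating from the noise sample recovers the data sample up to floating-point error; with a learned velocity field, different noise draws would yield different ensemble members.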

Robustness of AI-based weather forecasts in a changing climate

Sep 27, 2024

Abstract: Data-driven machine learning models for weather forecasting have made transformational progress in the last 1-2 years, with state-of-the-art ones now outperforming the best physics-based models for a wide range of skill scores. Given the strong links between weather and climate modelling, this raises the question whether machine learning models could also revolutionize climate science, for example by informing mitigation and adaptation to climate change or by generating larger ensembles for more robust uncertainty estimates. Here, we show that current state-of-the-art machine learning models trained for weather forecasting in present-day climate produce skillful forecasts across different climate states corresponding to pre-industrial, present-day, and future 2.9K warmer climates. This indicates that the dynamics shaping the weather on short timescales may not differ fundamentally in a changing climate. It also demonstrates out-of-distribution generalization capabilities of the machine learning models that are a critical prerequisite for climate applications. Nonetheless, two of the models show a global-mean cold bias in the forecasts for the future warmer climate state, i.e. they drift towards the colder present-day climate they have been trained on. A similar result is obtained for the pre-industrial case where two out of three models show a warming. We discuss possible remedies for these biases and analyze their spatial distribution, revealing complex warming and cooling patterns that are partly related to missing ocean-sea ice and land surface information in the training data. Despite these current limitations, our results suggest that data-driven machine learning models will provide powerful tools for climate science and transform established approaches by complementing conventional physics-based models.

Data driven weather forecasts trained and initialised directly from observations

Jul 22, 2024

Abstract: Skilful Machine Learned weather forecasts have challenged our approach to numerical weather prediction, demonstrating competitive performance compared to traditional physics-based approaches. Data-driven systems have been trained to forecast future weather by learning from long historical records of past weather such as the ECMWF ERA5. These datasets have been made freely available to the wider research community, including the commercial sector, which has been a major factor in the rapid rise of ML forecast systems and the levels of accuracy they have achieved. However, historical reanalyses used for training and real-time analyses used for initial conditions are produced by data assimilation, an optimal blending of observations with a physics-based forecast model. As such, many ML forecast systems have an implicit and unquantified dependence on the physics-based models they seek to challenge. Here we propose a new approach, training a neural network to predict future weather purely from historical observations with no dependence on reanalyses. We use raw observations to initialise a model of the atmosphere (in observation space) learned directly from the observations themselves. Forecasts of crucial weather parameters (such as surface temperature and wind) are obtained by predicting weather parameter observations (e.g. SYNOP surface data) at future times and arbitrary locations. We present preliminary results on forecasting observations 12 hours into the future. These already demonstrate successful learning of time evolutions of the physical processes captured in real observations. We argue that this new approach, by staying purely in observation space, avoids many of the challenges of traditional data assimilation, can exploit a wider range of observations and is readily expanded to simultaneous forecasting of the full Earth system (atmosphere, land, ocean and composition).

ArchesWeather: An efficient AI weather forecasting model at 1.5° resolution

May 23, 2024

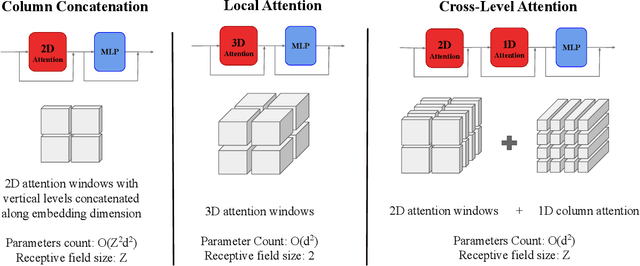

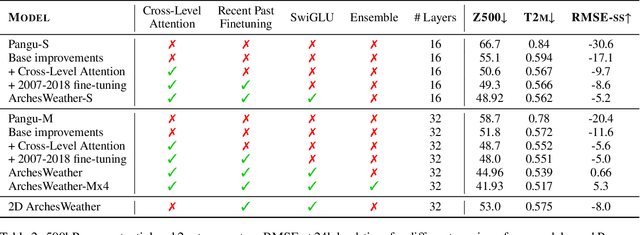

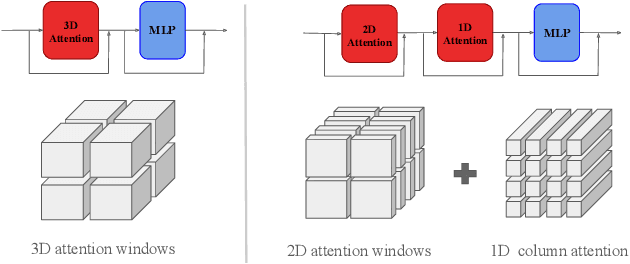

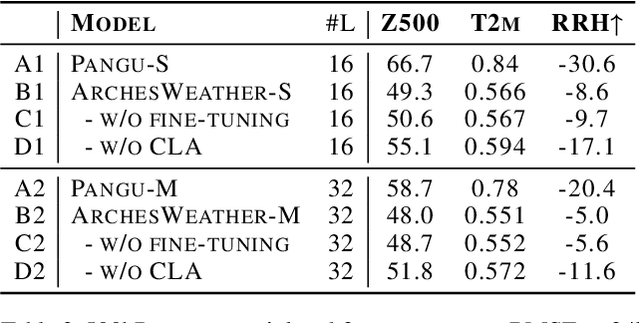

Abstract: One of the guiding principles for designing AI-based weather forecasting systems is to embed physical constraints as inductive priors in the neural network architecture. A popular prior is locality, where the atmospheric data is processed with local neural interactions, like 3D convolutions or 3D local attention windows as in Pangu-Weather. On the other hand, some works have shown great success in weather forecasting without this locality principle, at the cost of a much higher parameter count. In this paper, we show that the 3D local processing in Pangu-Weather is computationally sub-optimal. We design ArchesWeather, a transformer model that combines 2D attention with a column-wise attention-based feature interaction module, and demonstrate that this design improves forecasting skill. ArchesWeather is trained at 1.5° resolution and 24h lead time, with a training budget of a few GPU-days and a lower inference cost than competing methods. An ensemble of two of our best models shows competitive RMSE scores with the IFS HRES and outperforms the 1.4° 50-member NeuralGCM ensemble for one-day-ahead forecasting. Code and models will be made publicly available at https://github.com/gcouairon/ArchesWeather.

AtmoRep: A stochastic model of atmosphere dynamics using large scale representation learning

Sep 07, 2023

Abstract: The atmosphere affects humans in a multitude of ways, from loss of life due to adverse weather effects to long-term social and economic impacts on societies. Computer simulations of atmospheric dynamics are, therefore, of great importance for the well-being of our and future generations. Here, we propose AtmoRep, a novel, task-independent stochastic computer model of atmospheric dynamics that can provide skillful results for a wide range of applications. AtmoRep uses large-scale representation learning from artificial intelligence to determine a general description of the highly complex, stochastic dynamics of the atmosphere from the best available estimate of the system's historical trajectory as constrained by observations. This is enabled by a novel self-supervised learning objective and a unique ensemble that samples from the stochastic model with a variability informed by the one in the historical record. The task-independent nature of AtmoRep enables skillful results for a diverse set of applications without specifically training for them and we demonstrate this for nowcasting, temporal interpolation, model correction, and counterfactuals. We also show that AtmoRep can be improved with additional data, for example radar observations, and that it can be extended to tasks such as downscaling. Our work establishes that large-scale neural networks can provide skillful, task-independent models of atmospheric dynamics. With this, they provide a novel means to make the large record of atmospheric observations accessible for applications and for scientific inquiry, complementing existing simulations based on first principles.

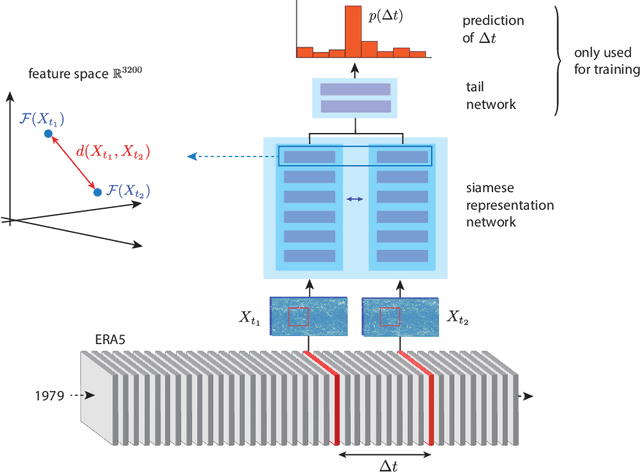

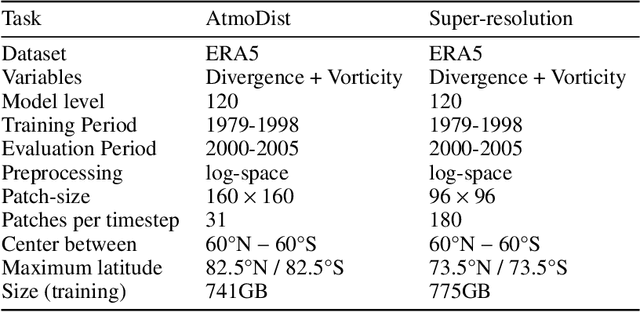

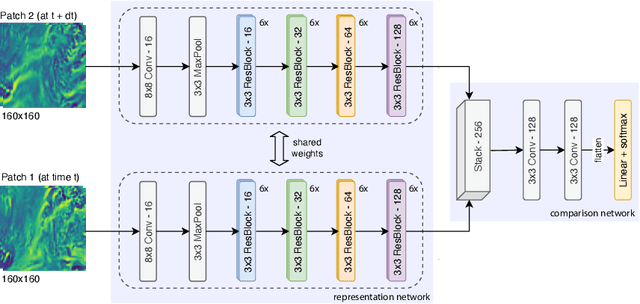

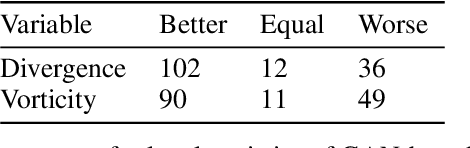

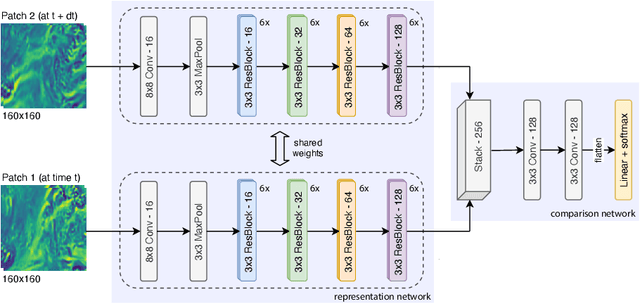

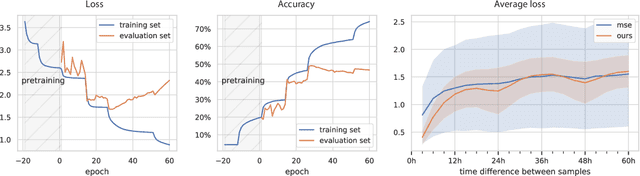

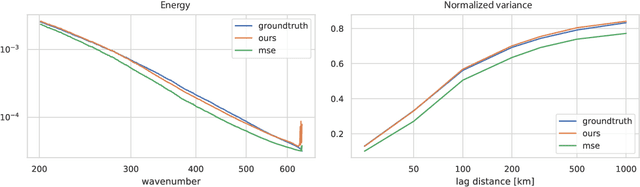

AtmoDist: Self-supervised Representation Learning for Atmospheric Dynamics

Feb 02, 2022

Abstract: Representation learning has proven to be a powerful methodology in a wide variety of machine learning applications. For atmospheric dynamics, however, it has so far not been considered, arguably due to the lack of large-scale, labeled datasets that could be used for training. In this work, we show that the difficulty is benign and introduce a self-supervised learning task that defines a categorical loss for a wide variety of unlabeled atmospheric datasets. Specifically, we train a neural network on the simple yet intricate task of predicting the temporal distance between atmospheric fields, e.g. the components of the wind field, from distinct but nearby times. Despite this simplicity, a neural network will provide good predictions only when it develops an internal representation that captures intrinsic aspects of atmospheric dynamics. We demonstrate this by introducing a data-driven distance metric for atmospheric states based on representations learned from ERA5 reanalysis. When employed as a loss function for downscaling, this AtmoDist distance leads to downscaled fields that match the true statistics more closely than the previous state-of-the-art based on an l2-loss and whose local behavior is more realistic. Since it is derived from observational data, AtmoDist also provides a novel perspective on atmospheric predictability.
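The self-supervised task described in this abstract can be sketched as a sampling routine: pick two time indices from an unlabeled sequence of fields and use their temporal separation as the class label. The following is a minimal illustration only, assuming a regularly sampled sequence and a hypothetical helper `sample_temporal_pairs`; the maximum distance, the sampling scheme, and the network that consumes the pairs are not specified by the abstract and are assumptions here.

```python
import random


def sample_temporal_pairs(num_steps, max_distance, num_pairs, seed=0):
    """Return (i, j, label) triples with j = i + label and 1 <= label <= max_distance.

    i and j index two fields in a time-ordered sequence of length num_steps;
    label is the categorical target (temporal distance in time steps).
    Requires num_steps > max_distance.
    """
    rng = random.Random(seed)
    pairs = []
    for _ in range(num_pairs):
        label = rng.randint(1, max_distance)      # temporal distance, inclusive bounds
        i = rng.randrange(0, num_steps - label)   # ensures j stays inside the sequence
        pairs.append((i, i + label, label))
    return pairs


# Hypothetical setup: 100 consecutive snapshots, distances of up to 8 steps.
pairs = sample_temporal_pairs(num_steps=100, max_distance=8, num_pairs=5)
```

A classifier trained on such pairs with a cross-entropy loss needs no manual labels, since the targets come directly from the timestamps of the data.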

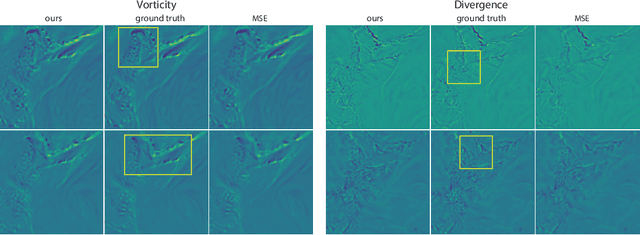

Towards Representation Learning for Atmospheric Dynamics

Sep 19, 2021

Abstract: The prediction of future climate scenarios under anthropogenic forcing is critical to understand climate change and to assess the impact of potentially counter-acting technologies. Machine learning and hybrid techniques for this prediction rely on informative metrics that are sensitive to pertinent but often subtle influences. For atmospheric dynamics, a critical part of the climate system, the "eyeball metric", i.e. a visual inspection by an expert, is currently still the gold standard. However, it cannot be used as a metric in machine learning systems where an algorithmic description is required. Motivated by the success of intermediate neural network activations as a basis for learned metrics, e.g. in computer vision, we present a novel, self-supervised representation learning approach specifically designed for atmospheric dynamics. Our approach, called AtmoDist, trains a neural network on a simple, auxiliary task: predicting the temporal distance between elements of a shuffled sequence of atmospheric fields (e.g. the components of the wind field from a reanalysis or simulation). The task forces the network to learn important intrinsic aspects of the data as activations in its layers, from which a discriminative metric can then be obtained. We demonstrate this by using AtmoDist to define a metric for GAN-based super resolution of vorticity and divergence. Our upscaled data closely matches the true statistics of a high resolution reference and significantly outperforms the state-of-the-art based on mean squared error. Since AtmoDist is unsupervised, only requires a temporal sequence of fields, and uses a simple auxiliary task, it can be used in a wide range of applications that aim to understand and mitigate climate change.

Local Fourier Slice Photography

Feb 16, 2019

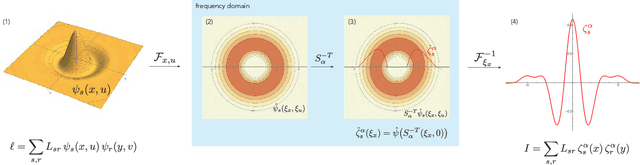

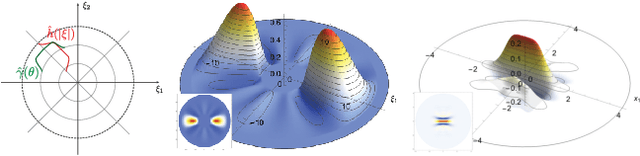

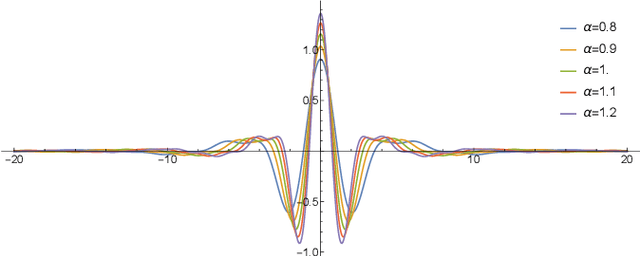

Abstract: Light field cameras provide intriguing possibilities, such as post-capture refocus or the ability to look behind an object. This comes, however, at the price of significant storage requirements. Compression techniques can be used to reduce these, but refocusing and reconstruction have so far still required a dense representation. To avoid this, we introduce a sheared local Fourier slice equation that allows for refocusing directly from a compressed light field, either to obtain an image or a compressed representation of it. The result is made possible by wavelets that respect the "slicing's" intrinsic structure and enable us to derive exact reconstruction filters for the refocused image in closed form. Image reconstruction then amounts to applying these filters to the light field's wavelet coefficients, and hence no decompression is necessary. We demonstrate that this substantially reduces storage requirements and also computation times. We furthermore analyze the computational complexity of our algorithm and show that it scales linearly with the size of the reconstructed region and the non-negligible wavelet coefficients, i.e. with the visual complexity.
