ArchesWeather: An efficient AI weather forecasting model at 1.5° resolution

Paper and Code

May 23, 2024

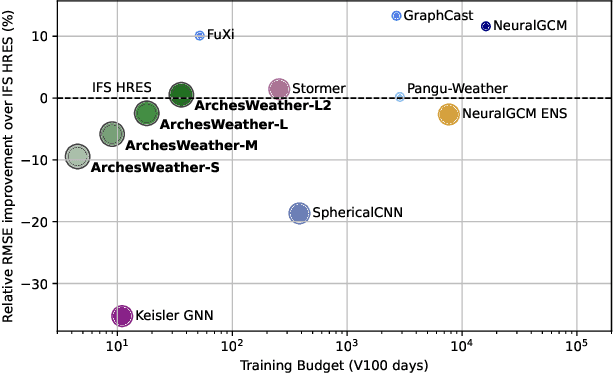

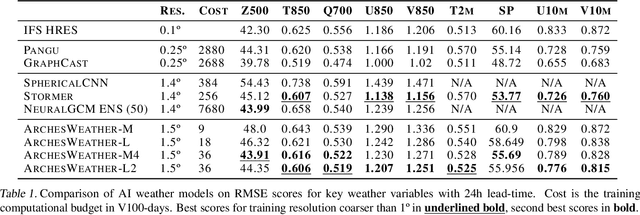

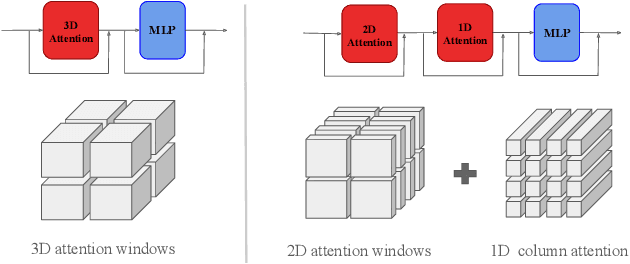

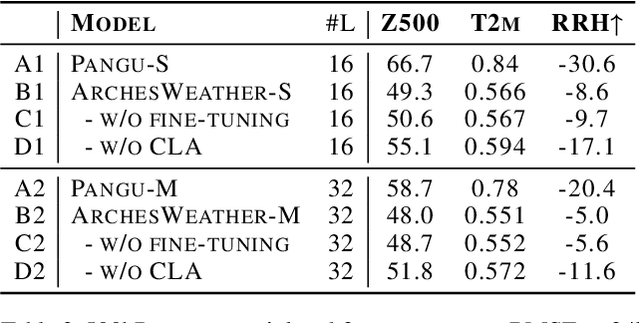

One of the guiding principles for designing AI-based weather forecasting systems is to embed physical constraints as inductive priors in the neural network architecture. A popular prior is locality, where the atmospheric data is processed with local neural interactions, such as 3D convolutions or 3D local attention windows as in Pangu-Weather. On the other hand, some works have shown great success in weather forecasting without this locality principle, at the cost of a much higher parameter count. In this paper, we show that the 3D local processing in Pangu-Weather is computationally sub-optimal. We design ArchesWeather, a transformer model that combines 2D attention with a column-wise attention-based feature interaction module, and demonstrate that this design improves forecasting skill. ArchesWeather is trained at 1.5° resolution and 24h lead time, with a training budget of a few GPU-days and a lower inference cost than competing methods. An ensemble of two of our best models shows competitive RMSE scores with the IFS HRES and outperforms the 1.4° 50-member NeuralGCM ensemble for one-day-ahead forecasting. Code and models will be made publicly available at https://github.com/gcouairon/ArchesWeather.
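To make the idea of column-wise attention concrete, here is a minimal NumPy sketch of what attention restricted to the vertical axis could look like. It is an illustration under stated assumptions, not the ArchesWeather implementation: the tensor layout `(levels, lat, lon, channels)`, the single attention head, the random projection matrices, and the function name `column_attention` are all hypothetical choices made for this example. Each horizontal location is treated as an independent sequence of pressure levels, so information is mixed only along the vertical; horizontal mixing would be left to the separate 2D attention.

```python
import numpy as np


def softmax(a, axis=-1):
    """Numerically stable softmax along the given axis."""
    a = a - a.max(axis=axis, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)


def column_attention(x, wq, wk, wv):
    """Single-head self-attention along the vertical axis only.

    x has the assumed layout (levels, lat, lon, channels); wq, wk, wv are
    (channels, channels) projection matrices. Hypothetical sketch, not the
    authors' module.
    """
    # Put levels next to channels so that every (lat, lon) column
    # becomes its own short sequence of `levels` tokens.
    cols = np.transpose(x, (1, 2, 0, 3))          # (lat, lon, levels, channels)
    q, k, v = cols @ wq, cols @ wk, cols @ wv
    # Scaled dot-product scores between levels of the same column.
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    out = softmax(scores, axis=-1) @ v            # (lat, lon, levels, channels)
    # Restore the original layout.
    return np.transpose(out, (2, 0, 1, 3))


# Toy input: 4 levels on a 6 x 8 horizontal grid with 16 channels.
rng = np.random.default_rng(0)
levels, lat, lon, channels = 4, 6, 8, 16
x = rng.standard_normal((levels, lat, lon, channels))
wq, wk, wv = (
    rng.standard_normal((channels, channels)) / np.sqrt(channels)
    for _ in range(3)
)
y = column_attention(x, wq, wk, wv)
```

In this sketch the attention matrix for each column has size `levels × levels`, so the cost grows with the number of vertical levels rather than with the size of a 3D window. Perturbing the input at one horizontal location changes the output only in that column, which is the property that distinguishes it from 3D local attention.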
