Anastase Charantonis

STIPP: Space-time in situ postprocessing over the French Alps using proper scoring rules

Jan 06, 2026

Abstract:We propose Space-time in situ postprocessing (STIPP), a machine learning model that generates spatio-temporally consistent weather forecasts for a network of station locations. Gridded forecasts from classical numerical weather prediction or data-driven models often lack the necessary precision due to unresolved local effects. Typical statistical postprocessing methods correct these biases, but often degrade spatio-temporal correlation structures in doing so. Recent works based on generative modeling successfully improve spatial correlation structures but have to forecast every lead time independently. In contrast, STIPP makes joint spatio-temporal forecasts which have increased accuracy for surface temperature, wind, relative humidity and precipitation when compared to baseline methods. It makes hourly ensemble predictions given only a six-hourly deterministic forecast, blending the boundaries of postprocessing and temporal interpolation. By leveraging a multivariate proper scoring rule for training, STIPP contributes to ongoing work on data-driven atmospheric models supervised only with distribution marginals.

ArchesClimate: Probabilistic Decadal Ensemble Generation With Flow Matching

Sep 19, 2025

Abstract:Climate projections have uncertainties related to components of the climate system and their interactions. A typical approach to quantifying these uncertainties is to use climate models to create ensembles of repeated simulations under different initial conditions. Due to the complexity of these simulations, generating such ensembles of projections is computationally expensive. In this work, we present ArchesClimate, a deep learning-based climate model emulator that aims to reduce this cost. ArchesClimate is trained on decadal hindcasts of the IPSL-CM6A-LR climate model at a spatial resolution of approximately 2.5x1.25 degrees. We train a flow matching model following ArchesWeatherGen, which we adapt to predict near-term climate. Once trained, the model generates states at a one-month lead time and can be used to auto-regressively emulate climate model simulations of any length. We show that for up to 10 years, these generations are stable and physically consistent. We also show that for several important climate variables, ArchesClimate generates simulations that are interchangeable with the IPSL model. This work suggests that climate model emulators could significantly reduce the cost of climate model simulations.

Generating ensembles of spatially-coherent in-situ forecasts using flow matching

Apr 04, 2025

Abstract:We propose a machine-learning-based methodology for in-situ weather forecast postprocessing that is both spatially coherent and multivariate. Compared to previous work, our Flow MAtching Postprocessing (FMAP) better represents the correlation structures of the observations distribution, while also improving marginal performance at the stations. FMAP generates forecasts that are not bound to what is already modeled by the underlying gridded prediction and can infer new correlation structures from data. The resulting model can generate an arbitrary number of forecasts from a limited number of numerical simulations, allowing for low-cost forecasting systems. A single training is sufficient to perform postprocessing at multiple lead times, in contrast with other methods which use multiple trained networks at generation time. This work details our methodology, including a spatial attention transformer backbone trained within a flow matching generative modeling framework. FMAP shows promising performance in experiments on the EUPPBench dataset, forecasting surface temperature and wind gust values at station locations in western Europe up to five-day lead times.

ORCAst: Operational High-Resolution Current Forecasts

Jan 21, 2025

Abstract:We present ORCAst, a multi-stage, multi-arm network for Operational high-Resolution Current forecAsts over one week. Producing real-time nowcasts and forecasts of ocean surface currents is a challenging problem due to indirect or incomplete information from satellite remote sensing data. Entirely trained on real satellite data and in situ measurements from drifters, our model learns to forecast global ocean surface currents using various sources of ground truth observations in a multi-stage learning procedure. Our multi-arm encoder-decoder model architecture allows us to first predict sea surface height and geostrophic currents from larger quantities of nadir and SWOT altimetry data, before learning to predict ocean surface currents from much more sparse in situ measurements from drifters. Training our model on specific regions improves performance. Our model achieves stronger nowcast and forecast performance in predicting ocean surface currents than various state-of-the-art methods.

ArchesWeather & ArchesWeatherGen: a deterministic and generative model for efficient ML weather forecasting

Dec 17, 2024

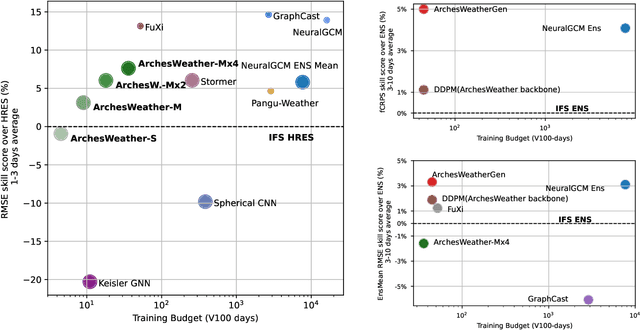

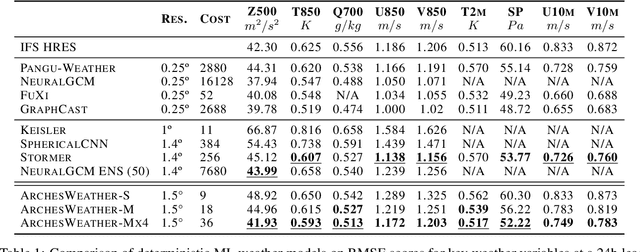

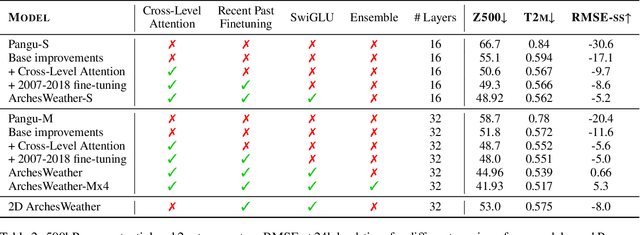

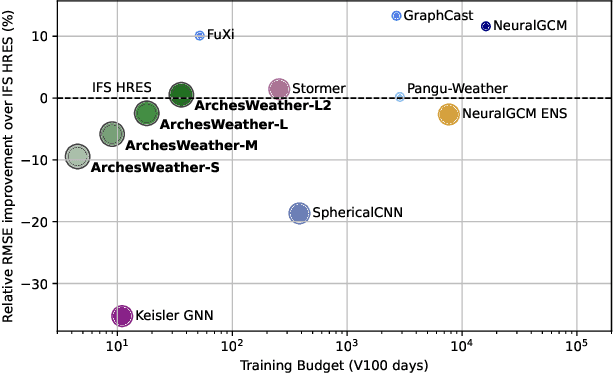

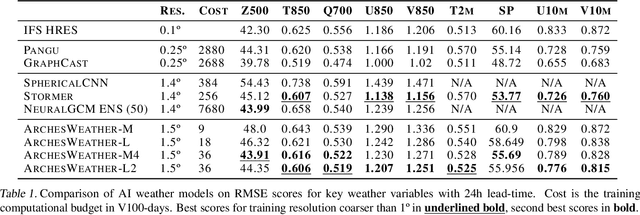

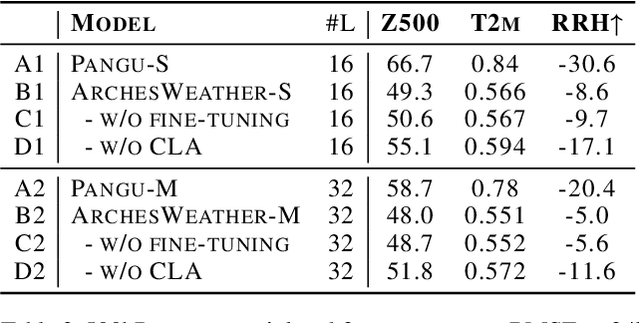

Abstract:Weather forecasting plays a vital role in today's society, from agriculture and logistics to predicting the output of renewable energies, and preparing for extreme weather events. Deep learning weather forecasting models trained with the next state prediction objective on ERA5 have shown great success compared to numerical global circulation models. However, for a wide range of applications, being able to provide representative samples from the distribution of possible future weather states is critical. In this paper, we propose a methodology to leverage deterministic weather models in the design of probabilistic weather models, leading to improved performance and reduced computing costs. We first introduce ArchesWeather, a transformer-based deterministic model that improves upon Pangu-Weather by removing overrestrictive inductive priors. We then design a probabilistic weather model called ArchesWeatherGen based on flow matching, a modern variant of diffusion models, that is trained to project ArchesWeather's predictions to the distribution of ERA5 weather states. ArchesWeatherGen is a true stochastic emulator of ERA5 and surpasses IFS ENS and NeuralGCM on all WeatherBench headline variables (except for NeuralGCM's geopotential). Our work also aims to democratize the use of deterministic and generative machine learning models in weather forecasting research, with academic computing resources. All models are trained at 1.5° resolution, with a training budget of ~9 V100 days for ArchesWeather and ~45 V100 days for ArchesWeatherGen. For inference, ArchesWeatherGen generates 15-day weather trajectories at a rate of 1 minute per ensemble member on an A100 GPU card. To make our work fully reproducible, our code and models are open source, including the complete pipeline for data preparation, training, and evaluation, at https://github.com/INRIA/geoarches.

ArchesWeather: An efficient AI weather forecasting model at 1.5° resolution

May 23, 2024

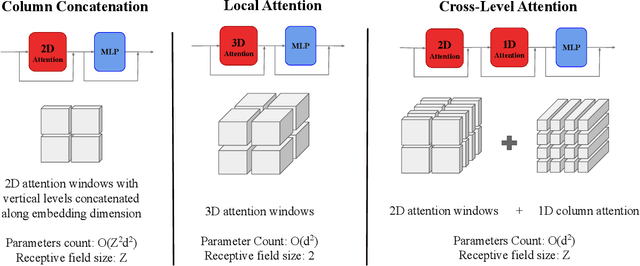

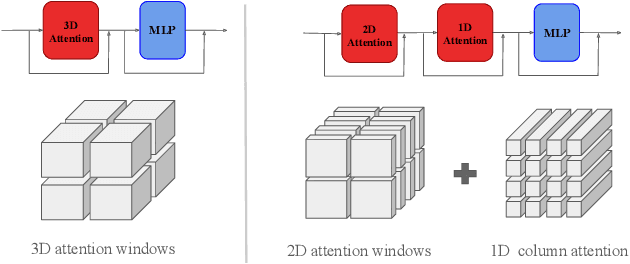

Abstract:One of the guiding principles for designing AI-based weather forecasting systems is to embed physical constraints as inductive priors in the neural network architecture. A popular prior is locality, where the atmospheric data is processed with local neural interactions, like 3D convolutions or 3D local attention windows as in Pangu-Weather. On the other hand, some works have shown great success in weather forecasting without this locality principle, at the cost of a much higher parameter count. In this paper, we show that the 3D local processing in Pangu-Weather is computationally sub-optimal. We design ArchesWeather, a transformer model that combines 2D attention with a column-wise attention-based feature interaction module, and demonstrate that this design improves forecasting skill. ArchesWeather is trained at 1.5° resolution and 24h lead time, with a training budget of a few GPU-days and a lower inference cost than competing methods. An ensemble of two of our best models shows competitive RMSE scores with the IFS HRES and outperforms the 1.4° 50-member NeuralGCM ensemble for one-day-ahead forecasting. Code and models will be made publicly available at https://github.com/gcouairon/ArchesWeather.

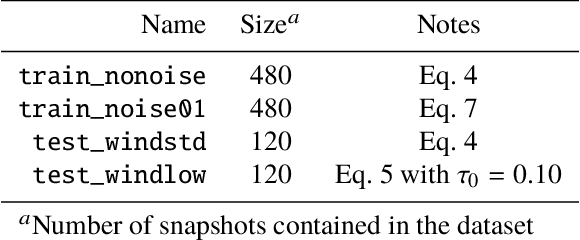

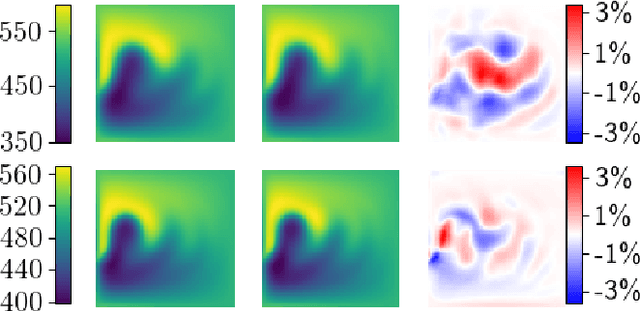

Unsupervised Learning of Sea Surface Height Interpolation from Multi-variate Simulated Satellite Observations

Oct 11, 2023

Abstract:Satellite-based remote sensing missions have revolutionized our understanding of the Ocean state and dynamics. Among them, spaceborne altimetry provides valuable measurements of Sea Surface Height (SSH), which is used to estimate surface geostrophic currents. However, due to the sensor technology employed, important gaps occur in SSH observations. Complete SSH maps are produced by the altimetry community using linear Optimal Interpolations (OI) such as the widely-used Data Unification and Altimeter Combination System (DUACS). However, OI is known for producing overly smooth fields and thus misses some mesostructures and eddies. On the other hand, Sea Surface Temperature (SST) products have much higher data coverage and SST is physically linked to geostrophic currents through advection. We design a realistic twin experiment to emulate the satellite observations of SSH and SST to evaluate interpolation methods. We introduce a deep learning network that is able to use SST information and is trainable in two settings: one where we have no access to ground truth during training and one where it is accessible. Our investigation involves a comparative analysis of this network when trained using either supervised or unsupervised loss functions. We assess the quality of SSH reconstructions and further evaluate the network's performance in terms of eddy detection and physical properties. We find that it is possible, even in an unsupervised setting, to use SST to improve reconstruction performance compared to SST-agnostic interpolations. We compare our reconstructions to DUACS's and report a decrease of 41% in terms of root mean squared error.

Learning 4DVAR inversion directly from observations

Nov 17, 2022

Abstract:Variational data assimilation and deep learning share many algorithmic aspects. While the former focuses on system state estimation, the latter provides great inductive biases to learn complex relationships. Here we design a hybrid architecture that learns the assimilation task directly from partial and noisy observations, using the mechanistic constraint of the 4DVAR algorithm. Finally, we show in an experiment that the proposed method is able to learn the desired inversion with interesting regularizing properties and that it is also computationally attractive.

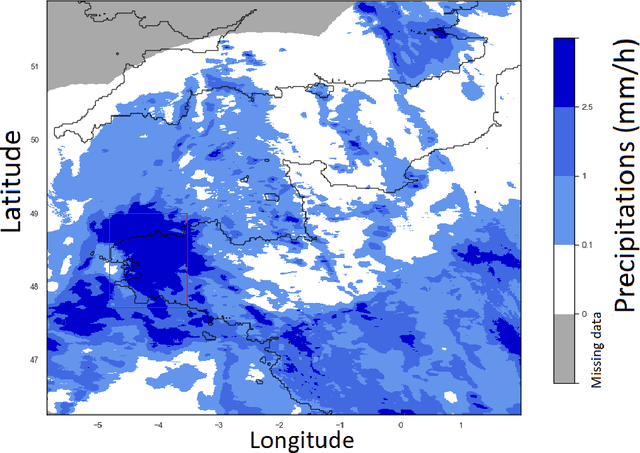

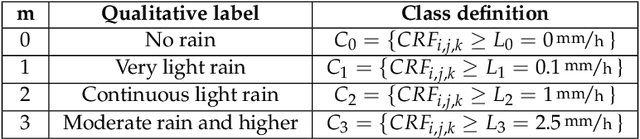

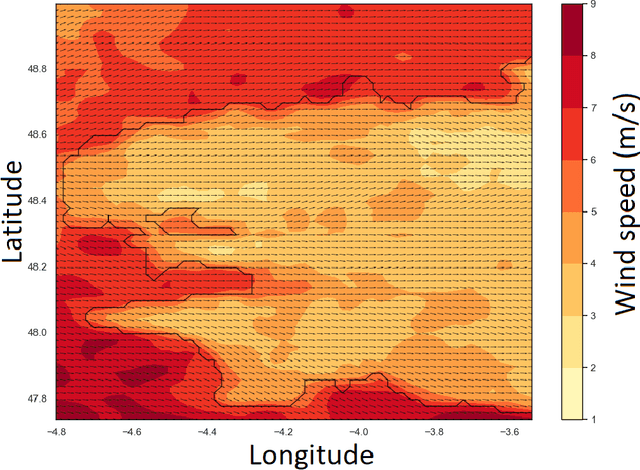

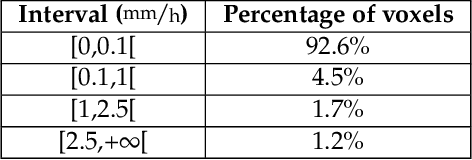

Fusion of rain radar images and wind forecasts in a deep learning model applied to rain nowcasting

Jan 12, 2021

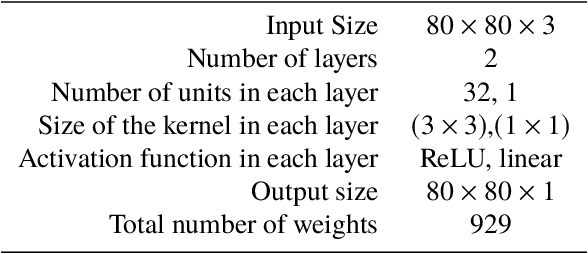

Abstract:Short- or mid-term rainfall forecasting is a major task with several environmental applications such as agricultural management or flood risk monitoring. Existing data-driven approaches, especially deep learning models, have shown significant skill at this task, using only rainfall radar images as inputs. In order to determine whether using other meteorological parameters such as wind would improve forecasts, we trained a deep learning model on a fusion of rainfall radar images and wind velocity produced by a weather forecast model. The network was compared to a similar architecture trained only on radar data, to a basic persistence model and to an approach based on optical flow. On moderate and higher rain events, for forecasts at a horizon of 30 min, our network outperforms the optical flow approach by 8% in F1-score. Furthermore, it outperforms by 7% the same architecture trained using only rainfall radar images. Merging rain and wind data also stabilized the training process and enabled significant improvement, especially on difficult-to-predict high-precipitation events.

Representing ill-known parts of a numerical model using a machine learning approach

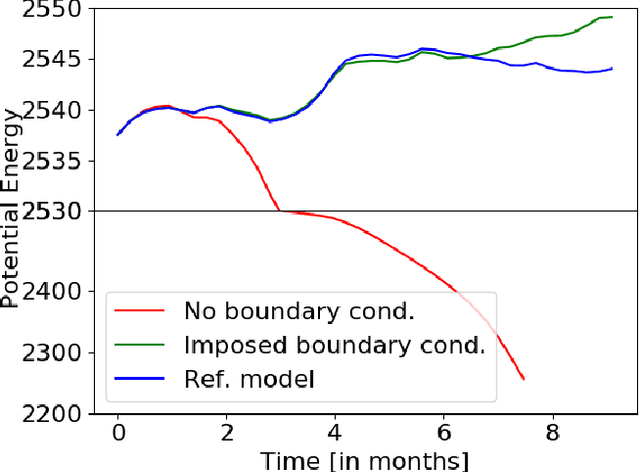

Mar 18, 2019

Abstract:In numerical modeling of the Earth System, many processes remain unknown or ill represented (for example sub-grid processes, the dependence on unknown latent variables, or the non-inclusion of complex dynamics in numerical models) but can sometimes be observed. This paper proposes a methodology to produce a hybrid model combining a physics-based model (forecasting the well-known processes) with a neural-net model trained from observations (forecasting the remaining processes). The approach is applied to a shallow-water model in which the forcing, dissipative and diffusive terms are assumed to be unknown. We show that the hybrid model is able to reproduce the unknown terms with great accuracy (correlation close to 1). For long-term simulations it reproduces with no significant difference the mean state, the kinetic energy, the potential energy and the potential vorticity of the system. Lastly, it is able to function with new forcings that were not encountered during the training phase of the neural network.
