Chiew Tong Lau

MIGPerf: A Comprehensive Benchmark for Deep Learning Training and Inference Workloads on Multi-Instance GPUs

Jan 01, 2023

Abstract:New architecture GPUs like A100 are now equipped with multi-instance GPU (MIG) technology, which allows the GPU to be partitioned into multiple small, isolated instances. This technology provides more flexibility for users to support both deep learning training and inference workloads, but efficiently utilizing it can still be challenging. The vision of this paper is to provide a more comprehensive and practical benchmark study for MIG in order to eliminate the need for tedious manual benchmarking and tuning efforts. To achieve this vision, the paper presents MIGPerf, an open-source tool that streamlines the benchmark study for MIG. Using MIGPerf, the authors conduct a series of experiments, including deep learning training and inference characterization on MIG, GPU sharing characterization, and framework compatibility with MIG. The results of these experiments provide new insights and guidance for users to effectively employ MIG, and lay the foundation for further research on the orchestration of hybrid training and inference workloads on MIGs. The code and results are released on https://github.com/MLSysOps/MIGProfiler. This work is still in progress and more results will be published soon.

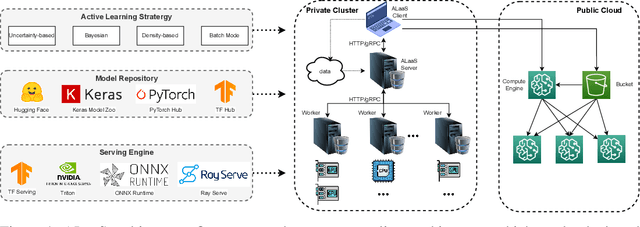

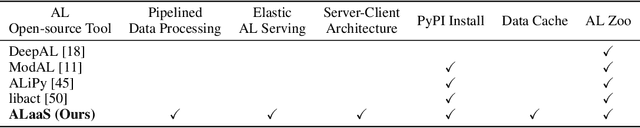

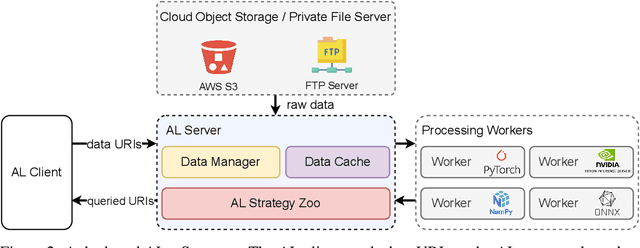

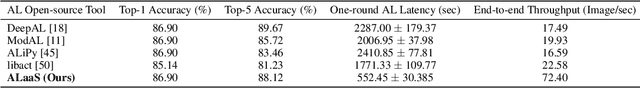

Active-Learning-as-a-Service: An Efficient MLOps System for Data-Centric AI

Jul 19, 2022

Abstract:The success of today's AI applications requires not only model training (model-centric) but also data engineering (data-centric). In data-centric AI, active learning (AL) plays a vital role, but current AL tools cannot perform AL tasks efficiently. To this end, this paper presents an efficient MLOps system for AL, named ALaaS (Active-Learning-as-a-Service). Specifically, ALaaS adopts a server-client architecture to support an AL pipeline and implements stage-level parallelism for high efficiency. Meanwhile, caching and batching techniques are employed to further accelerate the AL process. In addition to efficiency, ALaaS ensures accessibility through the design philosophy of configuration-as-a-service. It also abstracts an AL process into several components and provides rich APIs for advanced users to extend the system to new scenarios. Extensive experiments show that ALaaS outperforms all other baselines in terms of latency and throughput. Further ablation studies demonstrate the effectiveness of our design as well as ALaaS's ease of use. Our code is available at https://github.com/MLSysOps/alaas.
