Chidubem Arachie

Weakly Supervised Label Learning Flows

Feb 19, 2023

Abstract: Supervised learning usually requires a large amount of labelled data. However, obtaining ground-truth labels is costly for many tasks. Alternatively, weakly supervised methods learn with cheap weak signals that only approximately label some data. Many existing weakly supervised learning methods learn a deterministic function that estimates labels given the input data and weak signals. In this paper, we develop label learning flows (LLF), a general framework for weakly supervised learning problems. Our method is a generative model based on normalizing flows. The main idea of LLF is to optimize the conditional likelihoods of all possible labelings of the data within a constrained space defined by weak signals. We develop a training method for LLF that trains the conditional flow inversely and avoids estimating the labels. Once a model is trained, we can make predictions with a sampling algorithm. We apply LLF to three weakly supervised learning problems. Experimental results show that our method outperforms many baselines we compare against.

Data Consistency for Weakly Supervised Learning

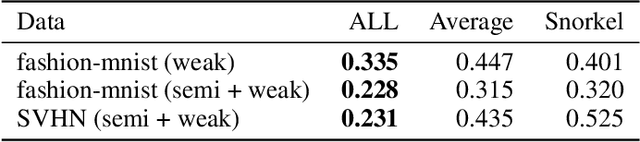

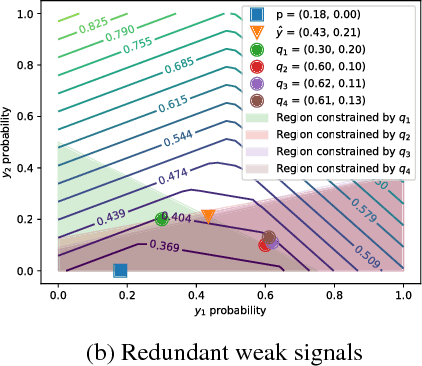

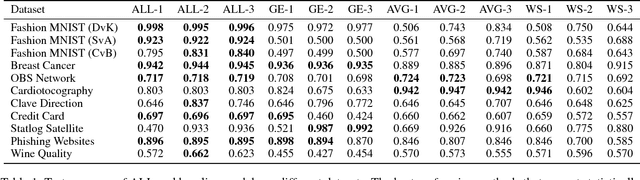

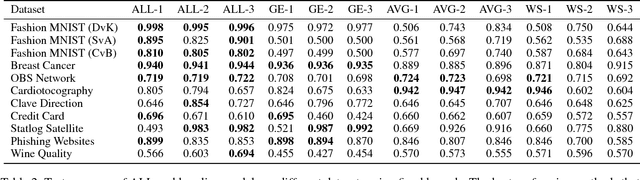

Feb 08, 2022

Abstract: In many applications, training machine learning models involves using large amounts of human-annotated data. Obtaining precise labels for the data is expensive. Instead, training with weak supervision provides a low-cost alternative. We propose a novel weak supervision algorithm that processes noisy labels, i.e., weak signals, while also considering features of the training data to produce accurate labels for training. Our method searches over classifiers of the data representation to find plausible labelings. We call this paradigm data consistent weak supervision. A key facet of our framework is that we are able to estimate labels for data examples with low or no coverage from the weak supervision. In addition, we make no assumptions about the joint distribution of the weak signals and true labels of the data. Instead, we use weak signals and the data features to solve a constrained optimization that enforces data consistency among the labels we generate. Empirical evaluation of our method on different datasets shows that it significantly outperforms state-of-the-art weak supervision methods on both text and image classification tasks.

Constrained Labeling for Weakly Supervised Learning

Sep 15, 2020

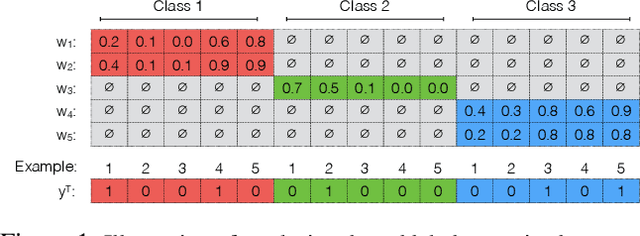

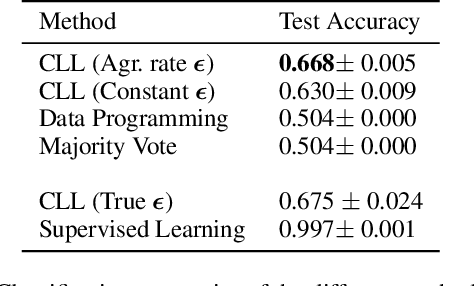

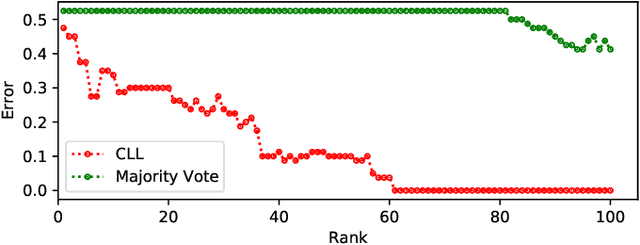

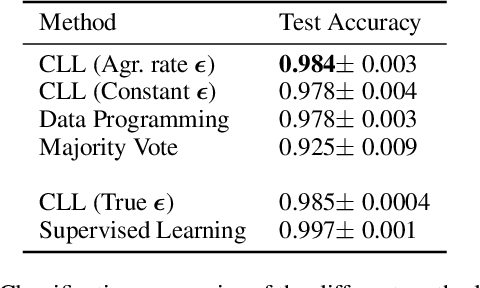

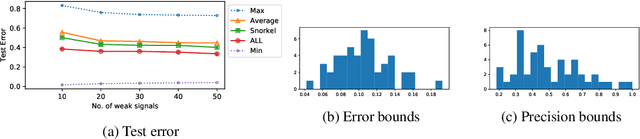

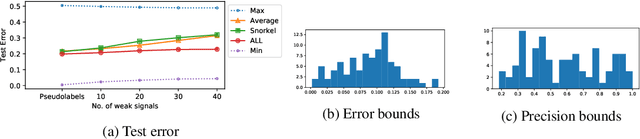

Abstract: Curation of large fully supervised datasets has become one of the major roadblocks for machine learning. Weak supervision provides an alternative to supervised learning by training with cheap, noisy, and possibly correlated labeling functions from varying sources. The key challenge in weakly supervised learning is combining the different weak supervision signals while navigating misleading correlations in their errors. In this paper, we propose a simple data-free approach for combining weak supervision signals by defining a constrained space for the possible labels of the weak signals and training with a random labeling within this constrained space. Our method is efficient and stable, converging after a few iterations of gradient descent. We prove theoretical conditions under which the worst-case error of the randomized label decreases with the rank of the linear constraints. We show experimentally that our method outperforms other weak supervision methods on various text- and image-classification tasks.

Unsupervised Detection of Sub-events in Large Scale Disasters

Dec 13, 2019

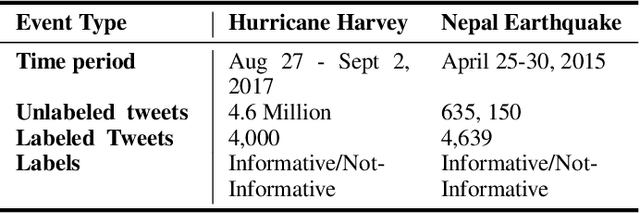

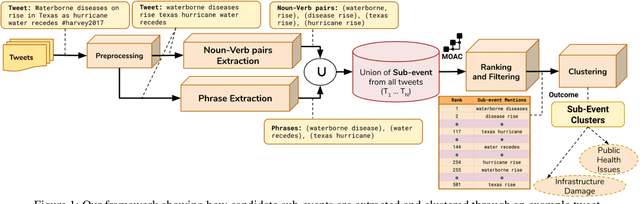

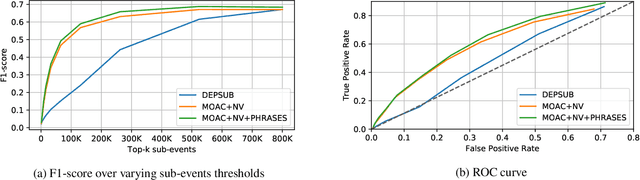

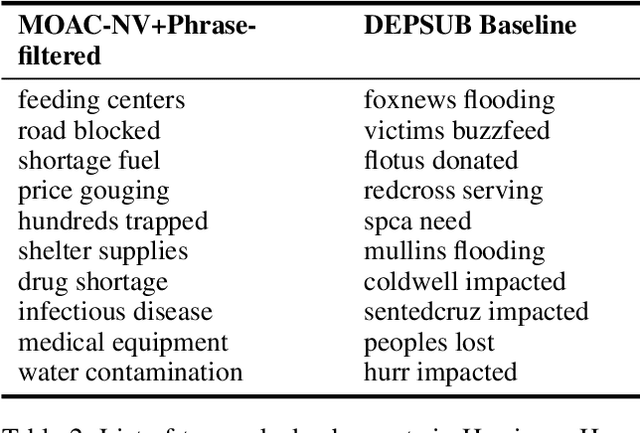

Abstract: Social media plays a major role during and after major natural disasters (e.g., hurricanes and large-scale fires), as people "on the ground" post useful information on what is actually happening. Given the large number of posts, a major challenge is identifying the information that is useful and actionable. Emergency responders are largely interested in finding out what events are taking place so they can properly plan and deploy resources. In this paper we address the problem of automatically identifying important sub-events (within a large-scale emergency "event", such as a hurricane). In particular, we present a novel, unsupervised learning framework to detect sub-events in Tweets for retrospective crisis analysis. We first extract noun-verb pairs and phrases from raw tweets as sub-event candidates. Then, we learn a semantic embedding of extracted noun-verb pairs and phrases, and rank them against a crisis-specific ontology. We filter out noisy and irrelevant information, then cluster the noun-verb pairs and phrases so that the top-ranked ones describe the most important sub-events. Through quantitative experiments on two large crisis data sets (Hurricane Harvey and the 2015 Nepal Earthquake), we demonstrate the effectiveness of our approach over the state-of-the-art. Our qualitative evaluation shows better performance compared to our baseline.

An Adaptable Framework for Deep Adversarial Label Learning from Weak Supervision

Jun 03, 2019

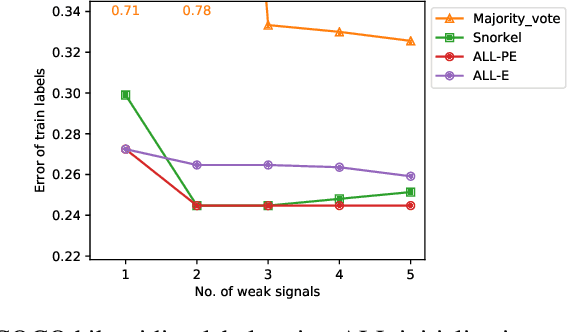

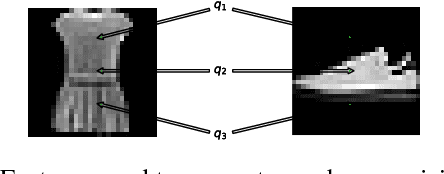

Abstract: In this paper, we propose a general framework for using adversarial label learning (ALL) [1] for multiclass classification when the data is weakly supervised. We introduce a new variant of ALL that incorporates human knowledge through multiple constraint types. Like adversarial label learning, we learn by adversarially finding labels constrained to be partially consistent with the weak supervision. However, we describe a different approach to solve the optimization that enjoys faster convergence when training large deep models. Our framework allows for human knowledge to be encoded into the algorithm as a set of linear constraints. We then solve a two-player game optimization subject to these constraints. We test our method on three data sets by training convolutional neural network models that learn to classify image objects with limited access to training labels. Our approach is able to learn even in settings where the weak supervision confounds state-of-the-art weakly supervised learning methods. The results of our experiments demonstrate the applicability of this approach to general classification tasks.

Adversarial Labeling for Learning without Labels

Jun 07, 2018

Abstract: We consider the task of training classifiers without labels. We propose a weakly supervised method, adversarial label learning, that trains classifiers to perform well against an adversary that chooses labels for training data. The weak supervision constrains what labels the adversary can choose. The method therefore minimizes an upper bound of the classifier's error rate using projected primal-dual subgradient descent. Minimizing this bound protects against bias and dependencies in the weak supervision. Experiments on three real datasets show that our method can train without labels and outperforms other approaches for weakly supervised learning.
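To illustrate how weak supervision might constrain the labels an adversary can choose, the sketch below assumes a formulation that the abstract does not spell out. Each weak signal is a vector of soft votes in [0, 1], its error against a candidate labeling is the average disagreement, and a labeling is admissible only if every weak signal's error stays within a user-supplied bound. The names `weak_signal_error` and `is_feasible`, the example signals, and the bounds are all hypothetical; this is not the authors' implementation.

```python
def weak_signal_error(q, y):
    """Average disagreement between a soft weak signal q and a soft labeling y.

    Both q and y are sequences of values in [0, 1] (assumed binary task).
    """
    if len(q) != len(y):
        raise ValueError("weak signal and labeling must have the same length")
    total = sum(qi * (1.0 - yi) + (1.0 - qi) * yi for qi, yi in zip(q, y))
    return total / len(q)


def is_feasible(weak_signals, bounds, y):
    """True if labeling y keeps every weak signal's error within its bound."""
    return all(
        weak_signal_error(q, y) <= b for q, b in zip(weak_signals, bounds)
    )


# Two hypothetical weak signals over four examples, and one candidate labeling.
weak_signals = [[1, 0, 1, 0], [1, 1, 0, 0]]
candidate = [1, 0, 0, 0]

loose = is_feasible(weak_signals, [0.3, 0.3], candidate)  # both errors are 0.25
tight = is_feasible(weak_signals, [0.2, 0.3], candidate)  # first bound is violated
```

Under these assumptions, tightening a bound shrinks the set of labelings available to the adversary, which is the role the abstract assigns to the weak supervision.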
