An Adaptable Framework for Deep Adversarial Label Learning from Weak Supervision

Jun 03, 2019

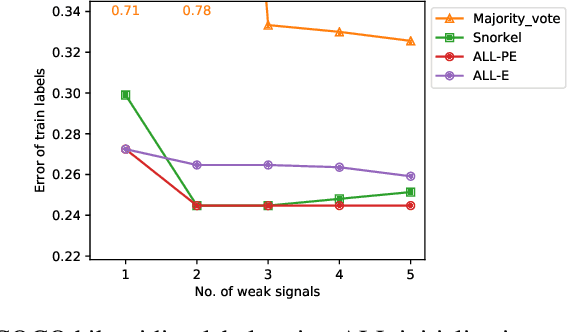

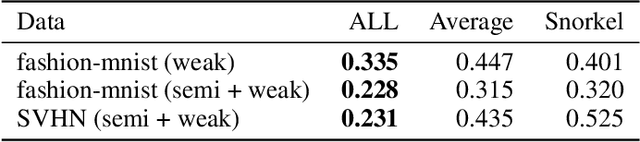

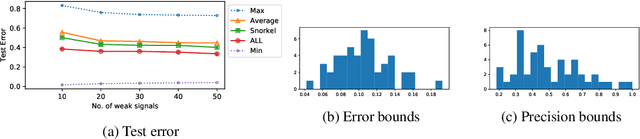

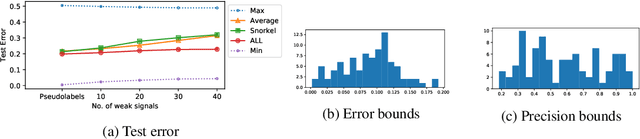

In this paper, we propose a general framework for applying adversarial label learning (ALL) [1] to multiclass classification with weakly supervised data. We introduce a new variant of ALL that incorporates human knowledge through multiple constraint types. As in the original ALL, we learn by adversarially finding labels that are constrained to be partially consistent with the weak supervision. However, we describe a different approach to solving the optimization, one that converges faster when training large deep models. Our framework allows human knowledge to be encoded as a set of linear constraints, and we then solve a two-player game optimization subject to these constraints. We test our method on three data sets by training convolutional neural networks to classify image objects with limited access to training labels. Our approach is able to learn even in settings where the weak supervision confounds state-of-the-art weakly supervised learning methods. The results of our experiments demonstrate the applicability of this approach to general classification tasks.
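To make the idea of "human knowledge encoded as linear constraints" concrete, the following is a minimal sketch, not the paper's implementation. It assumes a simplified binary setting in which each weak signal is a vector of soft votes in [0, 1] and the human-provided knowledge is an upper bound on that signal's expected error. Under that assumption the error is linear in the candidate labels `y`, so the bounds can be written as `A @ y <= c`, the feasible region an adversary would be restricted to. The function names `weak_signal_constraints` and `is_feasible` are hypothetical and chosen for illustration.

```python
import numpy as np


def weak_signal_constraints(weak_signals, error_bounds):
    """Build (A, c) so that A @ y <= c encodes the assumed error bounds.

    weak_signals: (m, n) array of soft votes in [0, 1] for the positive class.
    error_bounds: (m,) array of assumed upper bounds on each signal's error.
    """
    q = np.asarray(weak_signals, dtype=float)
    b = np.asarray(error_bounds, dtype=float)
    n = q.shape[1]
    # Expected error of a signal q against labels y is
    #   (1/n) * sum(q * (1 - y) + (1 - q) * y)
    # = (1/n) * ((1 - 2q) @ y + sum(q)),
    # which is linear in y, so the bound becomes one row of A @ y <= c.
    A = (1.0 - 2.0 * q) / n
    c = b - q.sum(axis=1) / n
    return A, c


def is_feasible(y, A, c, tol=1e-9):
    """Check whether candidate labels y satisfy every linear constraint."""
    y = np.asarray(y, dtype=float)
    return bool(np.all(A @ y <= c + tol))


if __name__ == "__main__":
    # One weak signal over four examples, assumed to err on at most 25% of them.
    A, c = weak_signal_constraints([[1.0, 0.0, 1.0, 0.0]], [0.25])
    print(is_feasible([1, 0, 1, 0], A, c))  # labels agree with the signal
    print(is_feasible([0, 1, 0, 1], A, c))  # labels contradict the signal
```

In a two-player training loop of the kind the abstract describes, the adversary would search within this feasible set for the labels that maximize the model's loss while the model minimizes it. A multiclass version would need analogous constraints per class, which this sketch does not cover.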
