Chia Dai

Explainable Semantic Mapping for First Responders

Oct 15, 2019

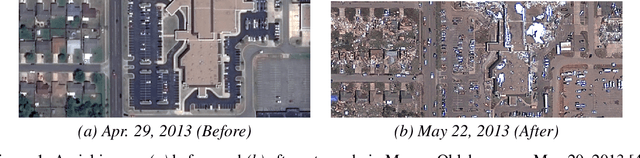

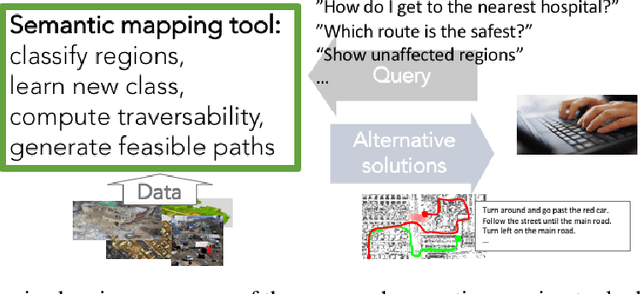

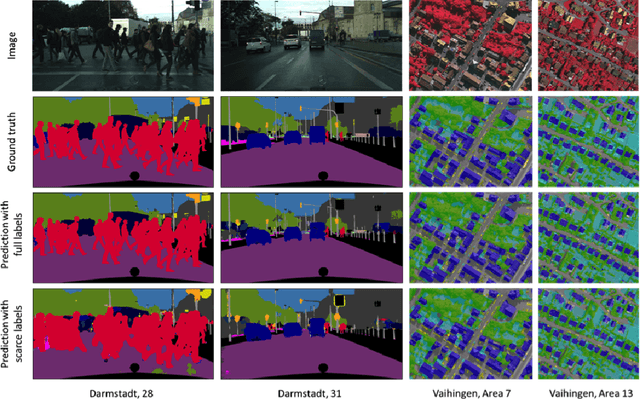

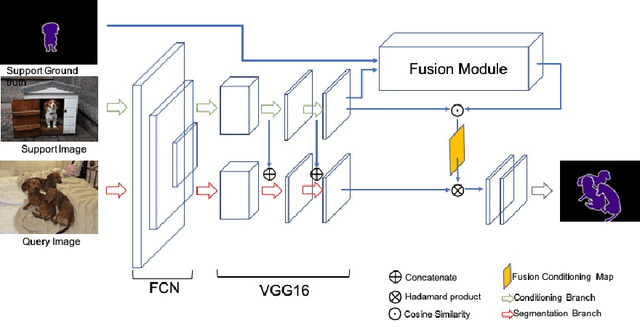

Abstract: One of the key challenges in the semantic mapping problem in post-disaster environments is how to analyze a large amount of data efficiently with minimal supervision. To address this challenge, we propose a deep learning-based semantic mapping tool consisting of three main ideas. First, we develop a frugal semantic segmentation algorithm that uses only a small amount of labeled data. Next, we investigate the problem of learning to detect a new class of object using just a few training examples. Finally, we develop an explainable cost map learning algorithm that can be quickly trained to generate traversability cost maps using only raw sensor data such as aerial-view imagery. This paper presents an overview of the proposed ideas and the lessons learned.

Very Deep Convolutional Neural Networks for Raw Waveforms

Oct 01, 2016

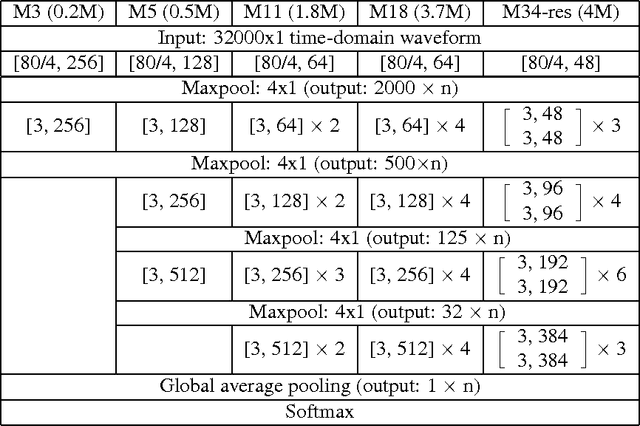

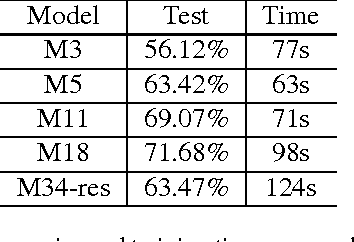

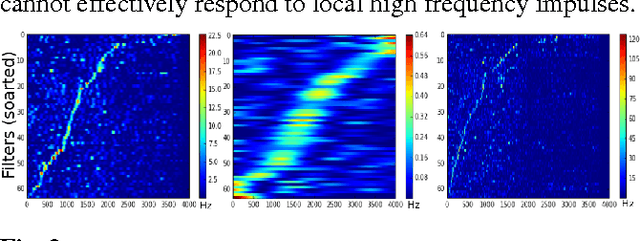

Abstract: Learning acoustic models directly from the raw waveform data with minimal processing is challenging. Current waveform-based models have generally used very few (~2) convolutional layers, which might be insufficient for building high-level discriminative features. In this work, we propose very deep convolutional neural networks (CNNs) that directly use time-domain waveforms as inputs. Our CNNs, with up to 34 weight layers, are efficient to optimize over very long sequences (e.g., a vector of size 32000), necessary for processing acoustic waveforms. This is achieved through batch normalization, residual learning, and a careful design of down-sampling in the initial layers. Our networks are fully convolutional, without the use of fully connected layers and dropout, to maximize representation learning. We use a large receptive field in the first convolutional layer to mimic bandpass filters, but very small receptive fields subsequently to control the model capacity. We demonstrate the performance gains with the deeper models. Our evaluation shows that the CNN with 18 weight layers outperforms the CNN with 3 weight layers by over 15% in absolute accuracy for an environmental sound recognition task and matches the performance of models using log-mel features.
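
To illustrate why down-sampling in the initial layers matters for a 32000-sample input, the following minimal sketch traces sequence length and receptive field through a small stack of 1-D layers. The layer list (kernel 80 with stride 4, then pooling by 4, a kernel-3 convolution, and another pooling by 4) is an assumed example chosen to match the abstract's description of a large first receptive field followed by small ones; it is not taken from the abstract itself, and the helper names are hypothetical.

```python
def conv_out_len(n, kernel, stride=1, padding=0):
    """Output length of a 1-D convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1


def trace(n, layers):
    """Return (length, receptive_field) after each (kernel, stride) layer."""
    rf, jump = 1, 1  # receptive field and input-sample spacing between outputs
    out = []
    for kernel, stride in layers:
        n = conv_out_len(n, kernel, stride)
        rf += (kernel - 1) * jump
        jump *= stride
        out.append((n, rf))
    return out


# Assumed front end (hypothetical values, no padding):
# wide strided conv, pool, small conv, pool.
LAYERS = [(80, 4), (4, 4), (3, 1), (4, 4)]
result = trace(32000, LAYERS)

for (kernel, stride), (length, rf) in zip(LAYERS, result):
    print(f"kernel={kernel:2d} stride={stride}: length={length:5d}, receptive field={rf}")
```

Under these assumed settings, four layers shrink the sequence by a factor of roughly 64 while each output still covers well over a hundred input samples, which gives a sense of how deeper stacks of small-kernel layers can remain tractable on long waveforms.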
