Explainable Semantic Mapping for First Responders


Oct 15, 2019

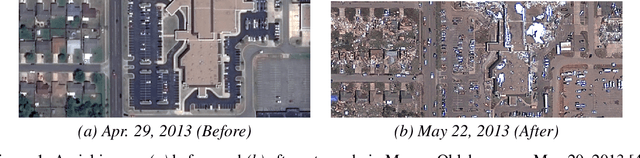

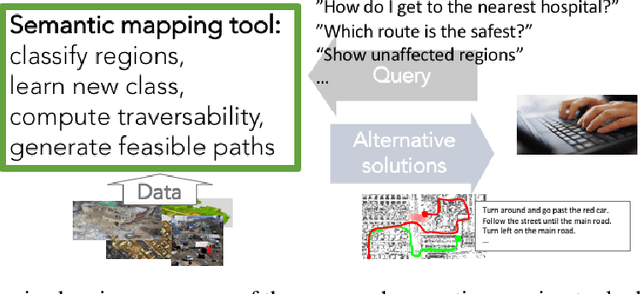

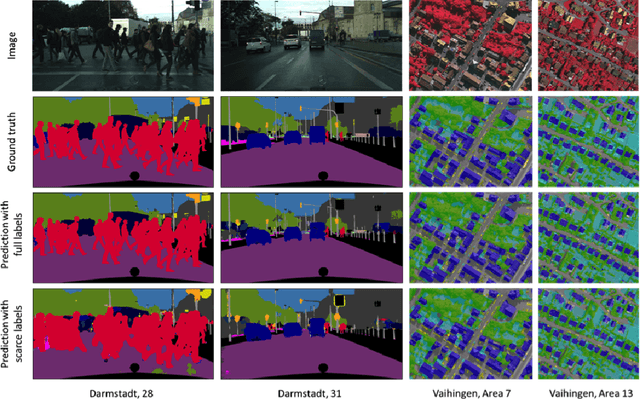

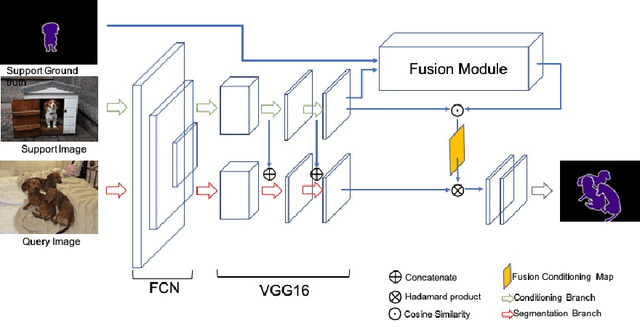

One of the key challenges in semantic mapping of post-disaster environments is how to analyze a large amount of data efficiently with minimal supervision. To address this challenge, we propose a deep learning-based semantic mapping tool built on three main ideas. First, we develop a frugal semantic segmentation algorithm that uses only a small amount of labeled data. Next, we investigate the problem of learning to detect a new class of objects using just a few training examples. Finally, we develop an explainable cost map learning algorithm that can be quickly trained to generate traversability cost maps using only raw sensor data such as aerial-view imagery. This paper presents an overview of the proposed ideas and the lessons learned.
