Chengkang Shen

3D Gaussian Adaptive Reconstruction for Fourier Light-Field Microscopy

May 19, 2025

Abstract: Compared to light-field microscopy (LFM), which enables high-speed volumetric imaging but suffers from non-uniform spatial sampling, Fourier light-field microscopy (FLFM) introduces sub-aperture division at the pupil plane, thereby ensuring spatially invariant sampling and enhancing spatial resolution. Conventional FLFM reconstruction methods, such as Richardson-Lucy (RL) deconvolution, exhibit poor axial resolution and signal degradation due to the ill-posed nature of the inverse problem. While data-driven approaches enhance spatial resolution by leveraging high-quality paired datasets or imposing structural priors, Neural Radiance Fields (NeRF)-based methods employ physics-informed self-supervised learning to overcome these limitations, yet they are hindered by substantial computational costs and memory demands. Therefore, we propose 3D Gaussian Adaptive Tomography (3DGAT) for FLFM, a 3D Gaussian splatting-based self-supervised learning framework that significantly improves the volumetric reconstruction quality of FLFM while maintaining computational efficiency. Experimental results indicate that our approach achieves higher resolution and improved reconstruction accuracy, highlighting its potential to advance FLFM imaging and broaden its applications in 3D optical microscopy.

Tracking Anything in Heart All at Once

Oct 04, 2023

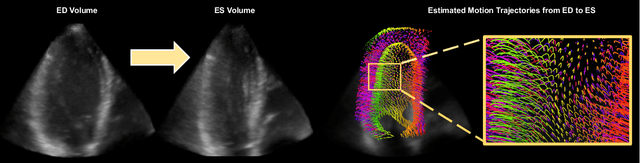

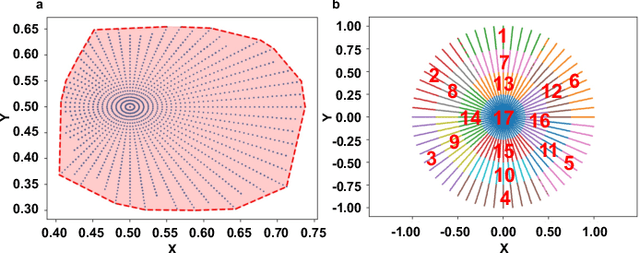

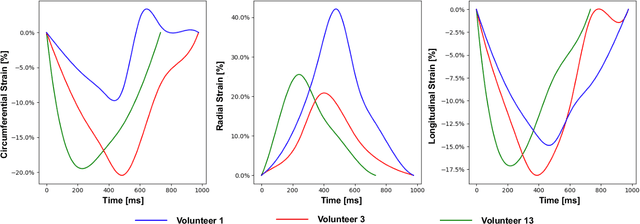

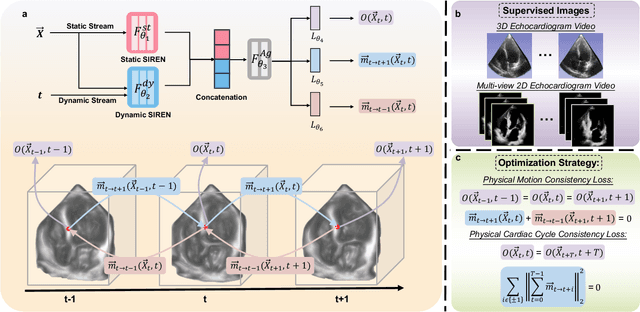

Abstract: Myocardial motion tracking is an essential clinical tool in the prevention and detection of cardiovascular diseases (CVDs), the foremost cause of death globally. However, current techniques suffer from incomplete and inaccurate motion estimation of the myocardium in both the spatial and temporal dimensions, hindering the early identification of myocardial dysfunction. To address these challenges, this paper introduces the Neural Cardiac Motion Field (NeuralCMF). NeuralCMF leverages implicit neural representation (INR) to model the 3D structure and the comprehensive 6D forward/backward motion of the heart. This approach offers memory-efficient storage and the ability to continuously query the precise shape and motion of the myocardium at any specific point throughout the cardiac cycle. Notably, NeuralCMF operates without the need for paired datasets, and its optimization is self-supervised through physics knowledge priors in both the space and time dimensions, ensuring compatibility with both 2D and 3D echocardiogram video inputs. Experimental validations across three representative datasets support the robustness and innovative nature of NeuralCMF, marking significant advantages over existing state-of-the-art methods in cardiac imaging and motion tracking.

Two-Stream Appearance Transfer Network for Person Image Generation

Nov 09, 2020

Abstract: Pose-guided person image generation aims to generate a photo-realistic person image conditioned on an input person image and a desired pose. This task requires spatial manipulation of the source image according to the target pose. However, the generative adversarial networks (GANs) widely used for image generation and translation rely on spatially local and translation-equivariant operators, i.e., convolution, pooling, and unpooling, which cannot handle large image deformation. This paper introduces a novel two-stream appearance transfer network (2s-ATN) to address this challenge. It is a multi-stage architecture consisting of a source stream and a target stream. Each stage features an appearance transfer module and several two-stream feature fusion modules. The former finds the dense correspondence between the two-stream feature maps and then transfers the appearance information from the source stream to the target stream. The latter exchange local information between the two streams and supplement the non-local appearance transfer. Both quantitative and qualitative results indicate that the proposed 2s-ATN can effectively handle large spatial deformation and occlusion while retaining appearance details. It outperforms prior state-of-the-art methods on two widely used benchmarks.
