Chenchen Fu

CARE: Compatibility-Aware Incentive Mechanisms for Federated Learning with Budgeted Requesters

Apr 22, 2025

Abstract: Federated learning (FL) is a promising approach that allows requesters (e.g., servers) to obtain local training models from workers (e.g., clients). Since workers are typically unwilling to provide training services/models freely and voluntarily, many incentive mechanisms in FL are designed to incentivize participation by offering monetary rewards from requesters. However, existing studies neglect two crucial aspects of real-world FL scenarios. First, workers can possess inherent incompatibility characteristics (e.g., communication channels and data sources), which can lead to degradation of FL efficiency (e.g., low communication efficiency and poor model generalization). Second, the requesters are budgeted, which limits the number of workers they can hire for their tasks. In this paper, we investigate the scenario in FL where multiple budgeted requesters seek training services from incompatible workers with private training costs. We consider two settings: the cooperative budget setting, where requesters pool their budgets to improve their overall utility, and the non-cooperative budget setting, where each requester optimizes its utility within its own budget. To address efficiency degradation caused by worker incompatibility, we develop novel compatibility-aware incentive mechanisms, CARE-CO and CARE-NO, for the two settings, respectively. The mechanisms elicit true private costs and determine which workers to hire for each requester and what rewards to pay, while satisfying requester budget constraints. Our mechanisms guarantee individual rationality, truthfulness, budget feasibility, and approximation performance. We conduct extensive experiments using real-world datasets to show that the proposed mechanisms significantly outperform existing baselines.

CorrDiff: Adaptive Delay-aware Detector with Temporal Cue Inputs for Real-time Object Detection

Jan 09, 2025

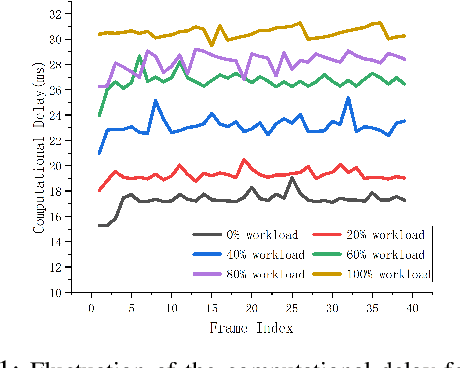

Abstract: Real-time object detection plays an essential role in the decision-making process of numerous real-world applications, including collision avoidance and path planning in autonomous driving systems. This paper presents a novel real-time streaming perception method named CorrDiff, designed to tackle the challenge of delays in real-time detection systems. The main contribution of CorrDiff lies in its adaptive delay-aware detector, which uses runtime-estimated temporal cues to predict objects' locations for multiple future frames and selectively produces the predictions that match real-world time, effectively compensating for any communication and computational delays. The proposed model outperforms current state-of-the-art methods by leveraging motion estimation and feature enhancement, both for 1) single-frame detection for the current frame or the next frame, in terms of the metric mAP, and 2) the prediction for (multiple) future frame(s), in terms of the metric sAP (the sAP metric evaluates object detection algorithms in streaming scenarios, factoring in both latency and accuracy). It demonstrates robust performance across a range of devices, from the powerful Tesla V100 to the modest RTX 2080Ti, achieving the highest level of perceptual accuracy on all platforms. Unlike most state-of-the-art methods, which struggle to complete computation within a single frame on less powerful devices, CorrDiff meets the stringent real-time processing requirements on all kinds of devices. The experimental results emphasize the system's adaptability and its potential to significantly improve the safety and reliability of many real-world systems, such as autonomous driving. Our code is open source and available at https://anonymous.4open.science/r/CorrDiff.
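
The selection step described in the abstract (predict several future frames, then emit the one that matches real-world time) can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function name `select_prediction`, the dictionary of per-offset predictions, and the nearest-offset rule are all assumptions made for illustration.

```python
def select_prediction(predictions, delay_s, frame_interval_s):
    """Pick the future-frame prediction closest to the present time.

    predictions: dict mapping a frame offset (0 = input frame, 1 = next
        frame, ...) to that offset's detection result (hypothetical layout).
    delay_s: measured communication plus computation delay, in seconds.
    frame_interval_s: time between consecutive frames, in seconds.
    """
    if frame_interval_s <= 0:
        raise ValueError("frame_interval_s must be positive")
    # How many frames the world has advanced while the detector was busy.
    elapsed_frames = delay_s / frame_interval_s
    # Nearest available offset; ties go to the smaller offset. Delays longer
    # than the prediction horizon fall back to the farthest offset.
    best_offset = min(
        predictions, key=lambda off: (abs(off - elapsed_frames), off)
    )
    return best_offset, predictions[best_offset]


# Example: 30 FPS stream, detector took 70 ms, so about 2.1 frames elapsed.
preds = {0: "boxes@t", 1: "boxes@t+1", 2: "boxes@t+2", 3: "boxes@t+3"}
offset, boxes = select_prediction(preds, delay_s=0.07, frame_interval_s=1 / 30)
```

In this sketch a delay longer than the predicted horizon simply returns the farthest prediction; how the actual system handles that case is not stated in the abstract.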

Transtreaming: Adaptive Delay-aware Transformer for Real-time Streaming Perception

Sep 10, 2024

Abstract: Real-time object detection is critical to the decision-making process of many real-world applications, such as collision avoidance and path planning in autonomous driving. This work presents an innovative real-time streaming perception method, Transtreaming, which addresses the challenge of real-time object detection with dynamic computational delay. The core innovation of Transtreaming lies in its adaptive delay-aware transformer, which can concurrently predict multiple future frames and select the output that best matches the real-world present time, compensating for any system-induced computation delays. The proposed model outperforms the existing state-of-the-art methods, even in single-frame detection scenarios, by leveraging a transformer-based methodology. It demonstrates robust performance across a range of devices, from the powerful V100 to the modest 2080Ti, achieving the highest level of perceptual accuracy on all platforms. Unlike most state-of-the-art methods, which struggle to complete computation within a single frame on less powerful devices, Transtreaming meets the stringent real-time processing requirements on all kinds of devices. The experimental results emphasize the system's adaptability and its potential to significantly improve the safety and reliability of many real-world systems, such as autonomous driving.

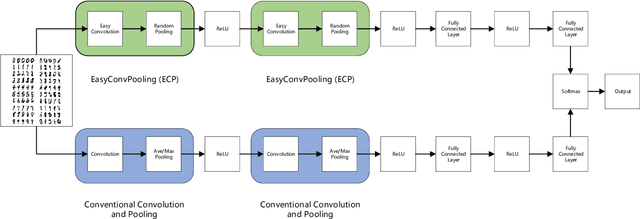

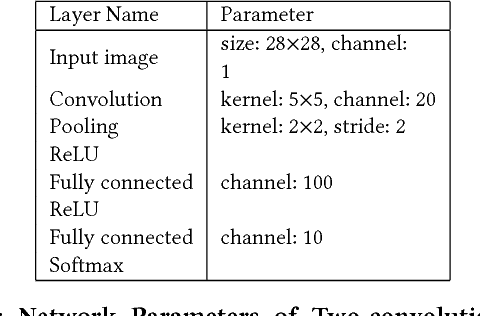

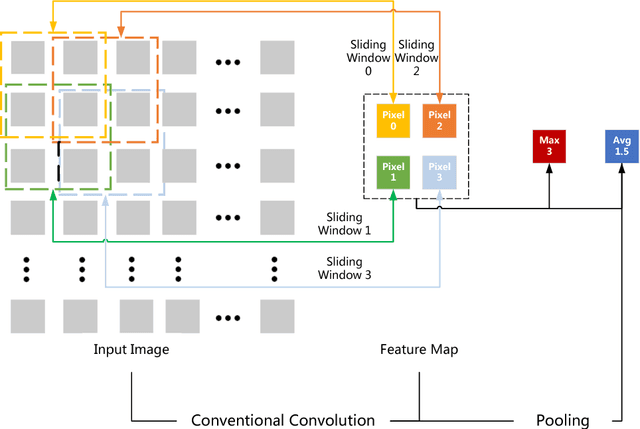

EasyConvPooling: Random Pooling with Easy Convolution for Accelerating Training and Testing

Jun 05, 2018

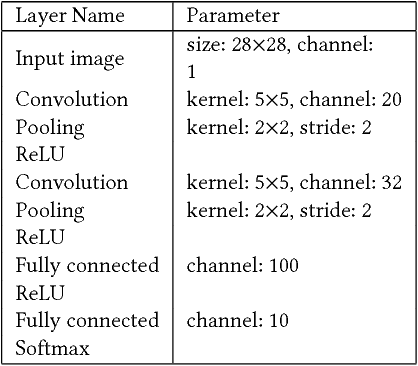

Abstract: Convolution operations dominate the overall execution time of Convolutional Neural Networks (CNNs). This paper proposes an easy yet efficient technique for both CNN training and testing. The conventional convolution and pooling operations are replaced by Easy Convolution and Random Pooling (ECP). In ECP, we randomly select one pixel out of four and conduct the convolution operation only on the selected pixel. As a result, only a quarter of the conventional convolution computations are needed. Experiments demonstrate that the proposed EasyConvPooling achieves a 1.45x speedup in training time and a 1.64x speedup in testing time. Moreover, a speedup of 5.09x on pure Easy Convolution operations is obtained compared to conventional convolution operations.
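
The idea of selecting one pixel out of four and convolving only there can be sketched in plain Python. The sketch below is an illustration under stated assumptions, not the paper's implementation: it assumes a single-channel image, a square kernel, stride-1 "valid" convolution, and non-overlapping 2x2 pooling windows; the names `conv_at` and `easy_conv_random_pool` are hypothetical.

```python
import random


def conv_at(image, kernel, r, c):
    """Evaluate a 'valid' convolution (cross-correlation) at one output position."""
    k = len(kernel)
    return sum(
        image[r + i][c + j] * kernel[i][j] for i in range(k) for j in range(k)
    )


def easy_conv_random_pool(image, kernel, rng=None):
    """Convolve only at one randomly chosen position per 2x2 pooling window.

    A conventional pipeline would evaluate the convolution at all four
    positions of each window and then pool; here only one of the four is
    computed, so roughly a quarter of the convolution work is done.
    """
    rng = rng or random.Random()
    k = len(kernel)
    out_h = len(image) - k + 1  # height of the full convolution output
    out_w = len(image[0]) - k + 1  # width of the full convolution output
    pooled = []
    for r in range(0, out_h - 1, 2):  # top row of each complete 2x2 window
        row = []
        for c in range(0, out_w - 1, 2):  # left column of each window
            dr, dc = rng.randrange(2), rng.randrange(2)  # pick 1 of 4 pixels
            row.append(conv_at(image, kernel, r + dr, c + dc))
        pooled.append(row)
    return pooled


# Example: a 5x5 image and a 2x2 kernel give a 4x4 convolution output,
# which pools down to 2x2 using 4 convolution evaluations instead of 16.
image = [[1] * 5 for _ in range(5)]
kernel = [[1, 1], [1, 1]]
pooled = easy_conv_random_pool(image, kernel, random.Random(0))
```

Passing a seeded `random.Random` makes the selection reproducible, which is convenient when comparing against a conventional convolution-plus-pooling baseline.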
