Carl Wellington

Convolutions for Spatial Interaction Modeling

Apr 15, 2021

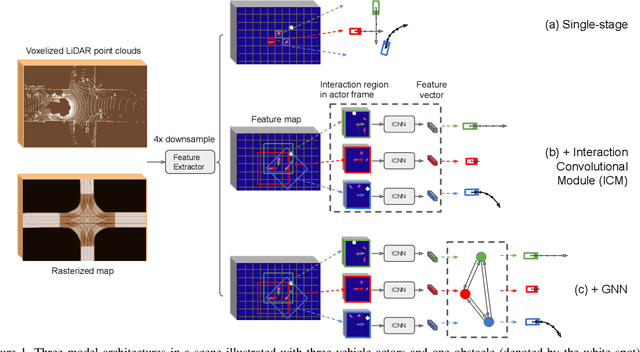

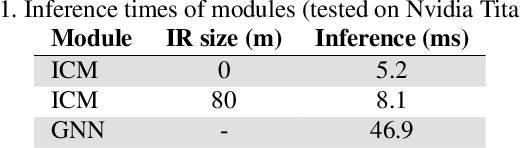

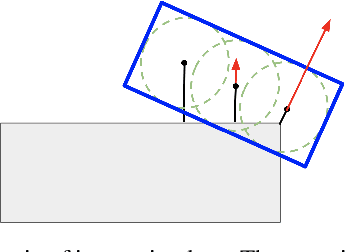

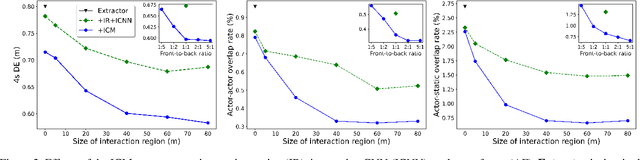

Abstract: In many different fields, interactions between objects play a critical role in determining their behavior. Graph neural networks (GNNs) have emerged as a powerful tool for modeling interactions, although often at the cost of adding considerable complexity and latency. In this paper, we consider the problem of spatial interaction modeling in the context of predicting the motion of actors around autonomous vehicles, and investigate alternative approaches to GNNs. We revisit convolutions and show that they can demonstrate comparable performance to graph networks in modeling spatial interactions with lower latency, thus providing an effective and efficient alternative in time-critical systems. Moreover, we propose a novel interaction loss to further improve the interaction modeling of the considered methods.

3D Point Cloud Processing and Learning for Autonomous Driving

Mar 01, 2020

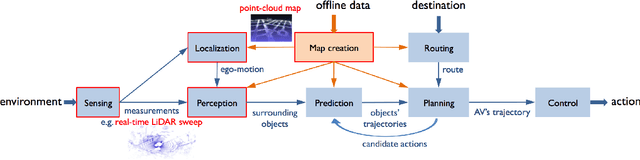

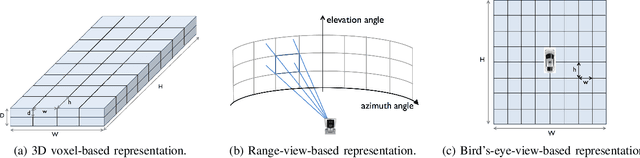

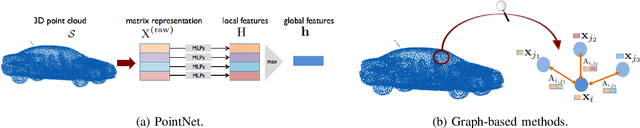

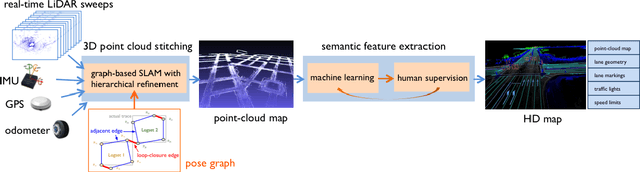

Abstract: We present a review of 3D point cloud processing and learning for autonomous driving. As one of the most important sensors in autonomous vehicles, light detection and ranging (LiDAR) sensors collect 3D point clouds that precisely record the external surfaces of objects and scenes. The tools for 3D point cloud processing and learning are critical to the map creation, localization, and perception modules in an autonomous vehicle. While much attention has been paid to data collected from cameras, such as images and videos, an increasing number of researchers have recognized the importance and significance of LiDAR in autonomous driving and have proposed processing and learning algorithms to exploit 3D point clouds. We review the recent progress in this research area and summarize what has been tried and what is needed for practical and safe autonomous vehicles. We also offer perspectives on open issues that need to be solved in the future.

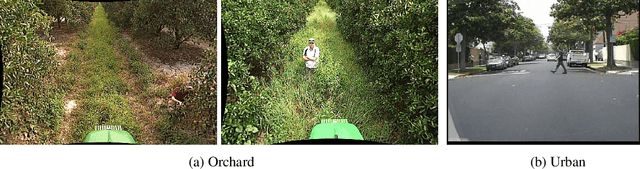

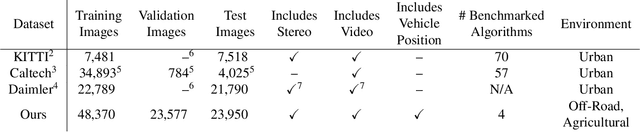

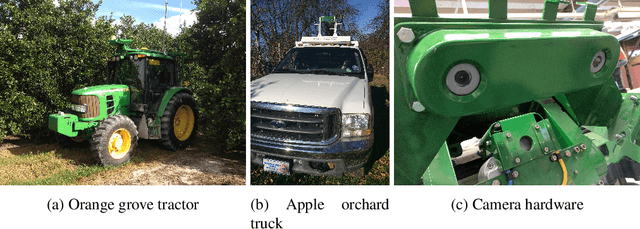

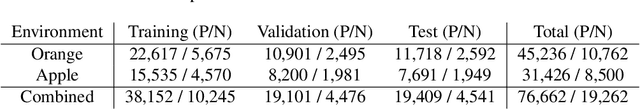

Comparing Apples and Oranges: Off-Road Pedestrian Detection on the NREC Agricultural Person-Detection Dataset

Oct 26, 2017

Abstract: Person detection from vehicles has made rapid progress recently with the advent of multiple high-quality datasets of urban and highway driving, yet no large-scale benchmark is available for the same problem in off-road or agricultural environments. Here we present the NREC Agricultural Person-Detection Dataset to spur research in these environments. It consists of labeled stereo video of people in orange and apple orchards taken from two perception platforms (a tractor and a pickup truck), along with vehicle position data from RTK GPS. We define a benchmark on part of the dataset that combines a total of 76k labeled person images and 19k sampled person-free images. The dataset highlights several key challenges of the domain, including varying environment, substantial occlusion by vegetation, people in motion and in non-standard poses, and people seen from a variety of distances; meta-data are included to allow targeted evaluation of each of these effects. Finally, we present baseline detection performance results for three leading approaches from urban pedestrian detection and our own convolutional neural network approach that benefits from the incorporation of additional image context. We show that the success of existing approaches on urban data does not transfer directly to this domain.
