Cagatay Basdogan

Efficient and Safe Contact-rich pHRI via Subtask Detection and Motion Estimation using Deep Learning

Jul 19, 2024

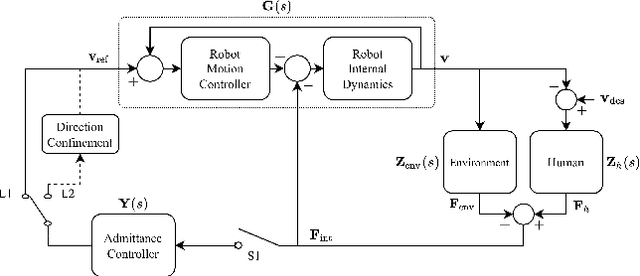

Abstract: This paper proposes an adaptive admittance controller for improving efficiency and safety in physical human-robot interaction (pHRI) tasks in small-batch manufacturing that involve contact with stiff environments, such as drilling, polishing, and cutting. We aim to minimize human effort and task completion time while maximizing precision and stability during the contact of the machine tool attached to the robot's end-effector with the workpiece. To this end, a two-layered learning-based human intention recognition mechanism is proposed, utilizing only the kinematic and kinetic data from the robot and two force sensors. A "subtask detector" recognizes the human intent by estimating which phase of the task is being performed, e.g., Idle, Tool-Attachment, Driving, and Contact. Simultaneously, a "motion estimator" continuously quantifies intent more precisely during Driving to predict when Contact will begin. The controller is adapted online according to the subtask while allowing early adaptation before Contact to maximize precision and safety and prevent potential instabilities. Three sets of pHRI experiments were performed with multiple subjects under various conditions. Spring compression experiments were performed in virtual environments to train the data-driven models and validate the proposed adaptive system, and drilling experiments were performed in the physical world to test the proposed methods' efficacy in real-life scenarios. Experimental results show a subtask classification accuracy of 84% and a motion estimation R² score of 0.96. Furthermore, the proposed system achieved 57% lower human effort during Driving as well as 53% lower oscillation amplitude at Contact.

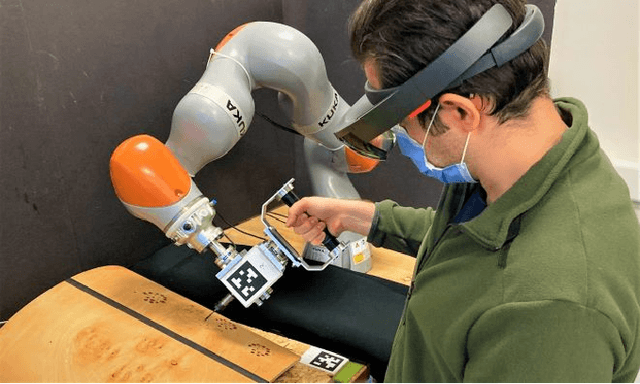

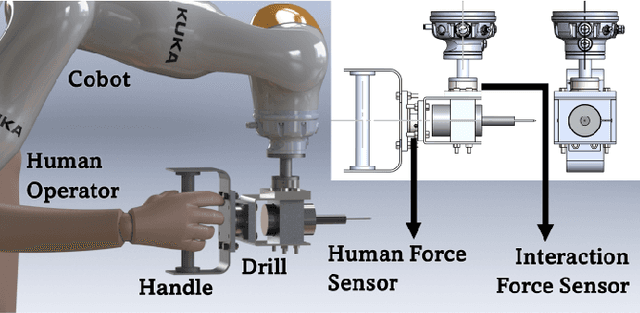

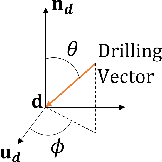

Robot-Assisted Drilling on Curved Surfaces with Haptic Guidance under Adaptive Admittance Control

Jul 28, 2022

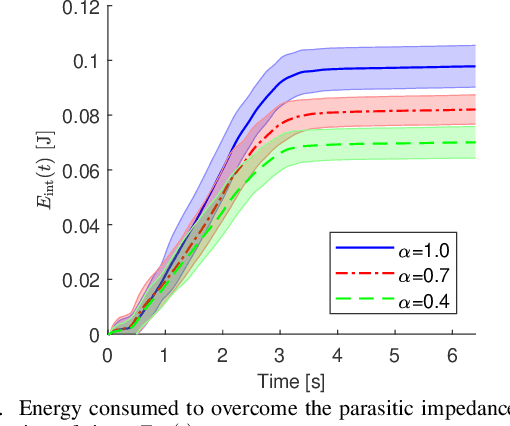

Abstract: Drilling a hole on a curved surface with a desired angle is prone to failure when done manually, due to the difficulties in drill alignment and the inherent instabilities of the task, potentially causing injury and fatigue to the workers. On the other hand, it can be impractical to fully automate such a task in real manufacturing environments because the parts arriving at an assembly line can have various complex shapes where drill point locations are not easily accessible, making automated path planning difficult. In this work, an adaptive admittance controller with 6 degrees of freedom is developed and deployed on a KUKA LBR iiwa 7 cobot such that the operator is able to manipulate a drill mounted on the robot with one hand comfortably and open holes on a curved surface with haptic guidance of the cobot and visual guidance provided through an AR interface. Real-time adaptation of the admittance damping provides more transparency when driving the robot in free space while ensuring stability during drilling. After the user brings the drill sufficiently close to the drill target and roughly aligns it to the desired drilling angle, the haptic guidance module first fine-tunes the alignment and then constrains the user movement to the drilling axis only, after which the operator simply pushes the drill into the workpiece with minimal effort. Two sets of experiments were conducted to investigate the potential benefits of the haptic guidance module quantitatively (Experiment I) and the practical value of the proposed pHRI system for real manufacturing settings based on the subjective opinion of the participants (Experiment II).

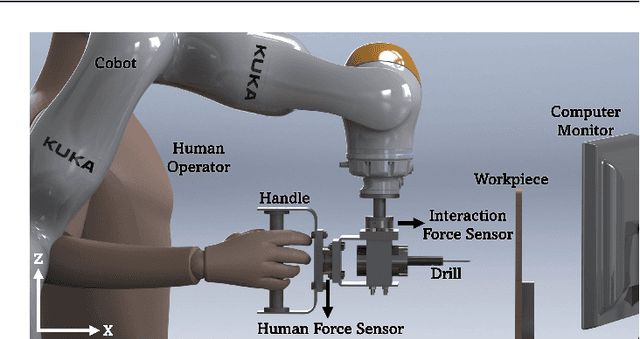

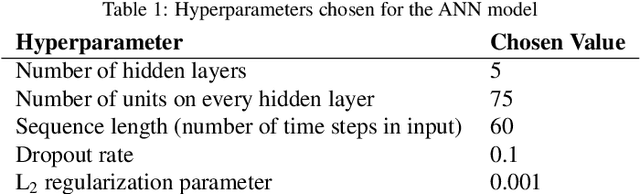

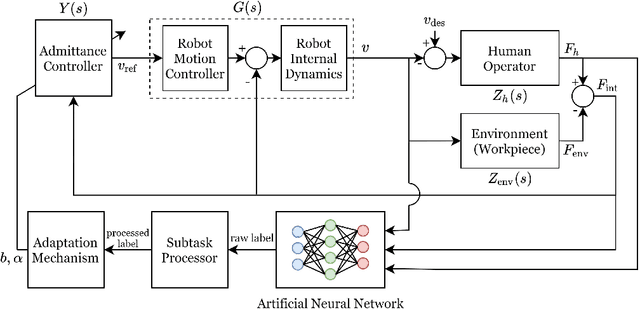

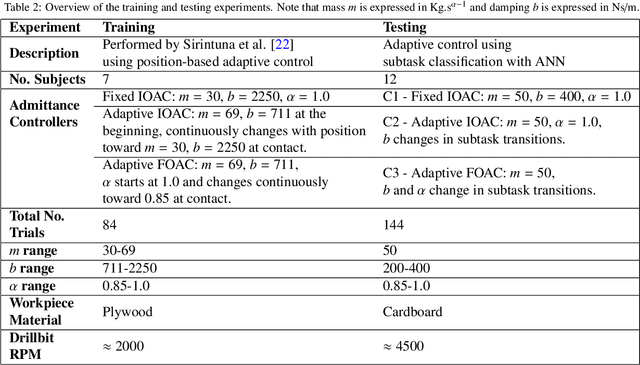

An adaptive admittance controller for collaborative drilling with a robot based on subtask classification via deep learning

May 31, 2022

Abstract: In this paper, we propose a supervised learning approach based on an Artificial Neural Network (ANN) model for real-time classification of subtasks in a physical human-robot interaction (pHRI) task involving contact with a stiff environment. In this regard, we consider three subtasks for a given pHRI task: Idle, Driving, and Contact. Based on this classification, the parameters of an admittance controller that regulates the interaction between human and robot are adjusted adaptively in real time to make the robot more transparent to the operator (i.e., less resistant) during the Driving phase and more stable during the Contact phase. The Idle phase is primarily used to detect the initiation of the task. Experimental results have shown that the ANN model can learn to detect the subtasks under different admittance controller conditions with an accuracy of 98% for 12 participants. Finally, we show that the admittance adaptation based on the proposed subtask classifier leads to 20% lower human effort (i.e., higher transparency) in the Driving phase and 25% lower oscillation amplitude (i.e., higher stability) during drilling in the Contact phase compared to an admittance controller with fixed parameters.
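As a rough illustration of the idea in this abstract, the sketch below switches the damping of a one-dimensional admittance model, m·dv/dt + b·v = F, according to the detected subtask. It is not the authors' controller: the mass, the damping values, the time step, and the names `DAMPING`, `MASS`, `admittance_step`, and `simulate` are all assumptions made for the example, and the subtask label is passed in by hand rather than produced by a neural network.

```python
# Minimal 1-DOF admittance sketch: m * dv/dt + b * v = F.
# All numeric values below are hypothetical, chosen only for illustration.

MASS = 5.0  # virtual mass in kg (assumed)

# Lower damping makes the robot feel lighter (more transparent);
# higher damping suppresses oscillations during contact.
DAMPING = {"Idle": 60.0, "Driving": 20.0, "Contact": 80.0}  # N*s/m (assumed)


def admittance_step(velocity: float, force: float, subtask: str,
                    dt: float = 0.001) -> float:
    """Advance the reference velocity by one explicit Euler step."""
    damping = DAMPING[subtask]
    acceleration = (force - damping * velocity) / MASS
    return velocity + dt * acceleration


def simulate(force: float, subtask: str, steps: int = 5000) -> float:
    """Apply a constant force for `steps` steps and return the final velocity."""
    velocity = 0.0
    for _ in range(steps):
        velocity = admittance_step(velocity, force, subtask)
    return velocity


if __name__ == "__main__":
    for label in ("Driving", "Contact"):
        print(label, round(simulate(10.0, label), 3))
```

For a constant force the velocity settles near F/b, so the same push produces a faster reference motion with the Driving damping than with the Contact damping, which is the transparency versus stability trade-off the abstract describes.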

Real-time visio-haptic interaction with static soft tissue models having geometric and material nonlinearity

Apr 09, 2021

Abstract: We propose a new approach allowing visio-haptic interaction with a FE model of a human liver having both non-linear geometric and material properties. The material properties used in the model are extracted from the experimental data of pig liver to make the simulations more realistic. Our computational approach consists of two main steps: a pre-computation of the configuration space of all possible deformation states of the model, followed by the interpolation of the pre-computed data for the calculation of the reaction forces displayed to the user through a haptic device during the real-time interactions. No a priori assumptions or modeling simplifications about the mathematical complexity of the underlying soft tissue model, size and irregularity of the FE mesh are necessary. We show that deformation and force response of the liver in simulations are heavily influenced by the material model, boundary conditions, path of the loading and the type of function used for the interpolation of the pre-computed data.

HaptiStylus: A Novel Stylus Capable of Displaying Movement and Rotational Torque Effects

Apr 02, 2021

Abstract: With the emergence of pen-enabled tablets and mobile devices, stylus-based interaction has been receiving increasing attention. Unfortunately, styluses available in the market today are all passive instruments that are primarily used for writing and pointing. In this paper, we describe a novel stylus capable of displaying certain vibro-tactile and inertial haptic effects to the user. Our stylus is equipped with two vibration actuators at the ends, which are used to create a tactile sensation of up and down movement along the stylus. The stylus is also embedded with a DC motor, which is used to create a sense of bidirectional rotational torque about the long axis of the pen. Through two psychophysical experiments, we show that, when driven with carefully selected timing and actuation patterns, our haptic stylus can convey movement and rotational torque information to the user. Results from a further psychophysical experiment provide insight on how the shape of the actuation patterns affects the perception of the rotational torque effect. Finally, experimental results from our interactive pen-based game show that our haptic stylus is effective in practical settings.

HapTable: An Interactive Tabletop Providing Online Haptic Feedback for Touch Gestures

Mar 30, 2021

Abstract: We present HapTable, a multimodal interactive tabletop that allows users to interact with digital images and objects through natural touch gestures, and receive visual and haptic feedback accordingly. In our system, hand pose is registered by an infrared camera and hand gestures are classified using a Support Vector Machine (SVM) classifier. To display a rich set of haptic effects for both static and dynamic gestures, we integrated electromechanical and electrostatic actuation techniques effectively on the tabletop surface of HapTable, which is a surface capacitive touch screen. We attached four piezo patches to the edges of the tabletop to display vibrotactile feedback for static gestures. For this purpose, the vibration response of the tabletop, in the form of frequency response functions (FRFs), was obtained by a laser Doppler vibrometer for 84 grid points on its surface. Using these FRFs, it is possible to display localized vibrotactile feedback on the surface for static gestures. For dynamic gestures, we utilize the electrostatic actuation technique to modulate the frictional forces between finger skin and tabletop surface by applying voltage to its conductive layer. Here, we present two examples of such applications, one for static and one for dynamic gestures, along with detailed user studies. In the first one, the user detects the direction of a virtual flow, such as that of wind or water, by putting their hand on the tabletop surface and feeling a vibrotactile stimulus traveling underneath it. In the second example, the user rotates a virtual knob on the tabletop surface to select an item from a menu while feeling the knob's detents and resistance to rotation in the form of frictional haptic feedback.

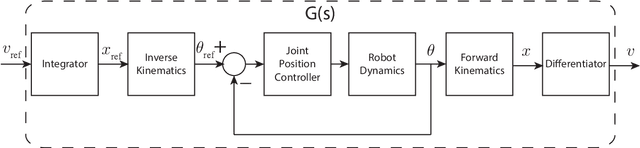

Towards Collaborative Drilling with a Cobot Using Admittance Controller

Jul 28, 2020

Abstract: In the near future, collaborative robots (cobots) are expected to play a vital role in the manufacturing and automation sectors. It is predicted that workers will work side by side in collaboration with cobots to surpass fully automated factories. In this regard, physical human-robot interaction (pHRI) aims to develop natural communication between the partners to bring speed, flexibility, and ergonomics to the execution of complex manufacturing tasks. One challenge in pHRI is to design an optimal interaction controller to balance the limitations introduced by the contradicting nature of transparency and stability requirements. In this paper, a general methodology to design an admittance controller for a pHRI system is developed by considering the stability and transparency objectives. In our approach, the collaborative robot constrains the movement of the human operator to help with a pHRI task while an augmented reality (AR) interface informs the operator about its phases. To this end, dynamical characterization of the collaborative robot (LBR IIWA 7 R800, KUKA Inc.) is presented first. Then, the stability and transparency analyses for our pHRI task involving collaborative drilling with this robot are reported. A range of allowable parameters for the admittance controller is determined by superimposing the stability and transparency graphs. Finally, three different sets of parameters are selected from the allowable range and the effect of admittance controllers utilizing these parameter sets on the task performance is investigated.

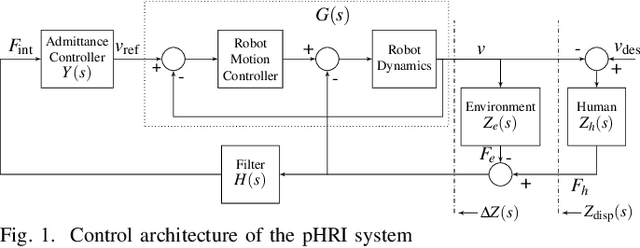

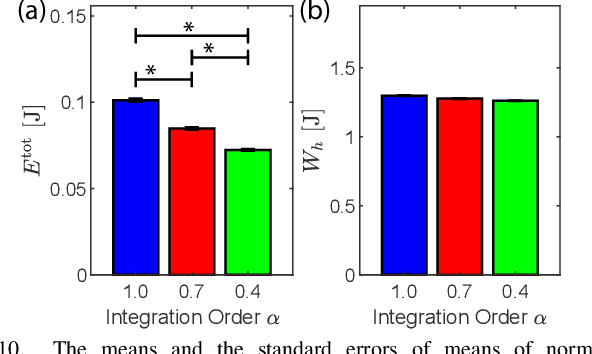

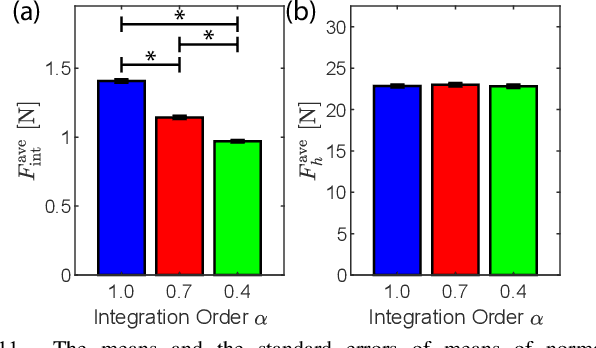

A Computational Multi-Criteria Optimization Approach to Controller Design for Physical Human-Robot Interaction

Jun 19, 2020

Abstract: Physical human-robot interaction (pHRI) integrates the benefits of a human operator and a collaborative robot in tasks involving physical interaction, with the aim of increasing the task performance. However, the design of interaction controllers that achieve safe and transparent operations is challenging, mainly due to the contradicting nature of these objectives. Knowing that attaining perfect transparency is practically unachievable, controllers that allow better compromise between these objectives are desirable. In this paper, we propose a multi-criteria optimization framework, which jointly optimizes the stability robustness and transparency of a closed-loop pHRI system for a given interaction controller. In particular, we propose a Pareto optimization framework that allows the designer to make informed decisions by thoroughly studying the trade-off between stability robustness and transparency. The proposed framework involves a search over the discretized controller parameter space to compute the Pareto front curve and a selection of controller parameters that yield maximum attainable transparency and stability robustness by studying this trade-off curve. The proposed framework not only leads to the design of an optimal controller, but also enables a fair comparison among different interaction controllers. In order to demonstrate the practical use of the proposed approach, integer and fractional order admittance controllers are studied as a case study and compared both analytically and experimentally. The experimental results validate the proposed design framework and show that the achievable transparency under the fractional order admittance controller is higher than that of the integer order one, when both controllers are designed to ensure the same level of stability robustness.
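The Pareto front mentioned in this abstract can be illustrated with a small, generic sketch. It assumes each candidate parameter set has already been scored with a (stability robustness, transparency) pair in which larger is better for both; the scores in the example are invented, and `pareto_front` is a hypothetical helper, not the paper's framework or its metrics.

```python
from typing import List, Tuple

Score = Tuple[float, float]  # (stability robustness, transparency), both maximized


def pareto_front(scores: List[Score]) -> List[Score]:
    """Return the non-dominated scores, sorted by the first objective.

    A score is dominated if another score is at least as good in both
    objectives and strictly better in at least one.
    """
    front = []
    for i, (s_i, t_i) in enumerate(scores):
        dominated = any(
            s_j >= s_i and t_j >= t_i and (s_j > s_i or t_j > t_i)
            for j, (s_j, t_j) in enumerate(scores)
            if j != i
        )
        if not dominated:
            front.append((s_i, t_i))
    return sorted(front)


if __name__ == "__main__":
    # Invented scores for five points of a discretized parameter grid.
    candidates = [(0.9, 0.2), (0.7, 0.5), (0.6, 0.4), (0.3, 0.9), (0.3, 0.8)]
    print(pareto_front(candidates))
```

With these invented scores, (0.6, 0.4) and (0.3, 0.8) are dominated and drop out, leaving three points that trade robustness against transparency. A designer would then pick a point on this front, for example the most transparent one that meets a required robustness level.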

A Review of Surface Haptics: Enabling Tactile Effects on Touch Surfaces

Apr 28, 2020

Abstract: We review the current technology underlying surface haptics that converts passive touch surfaces to active ones (machine haptics), our perception of tactile stimuli displayed through active touch surfaces (human haptics), their potential applications (human-machine interaction), and finally the challenges ahead of us in making them available through commercial systems. This review primarily covers the tactile interactions of human fingers or hands with surface-haptics displays by focusing on the three most popular actuation methods: vibrotactile, electrostatic, and ultrasonic.
