Brandon Araki

The Logical Options Framework

Feb 24, 2021

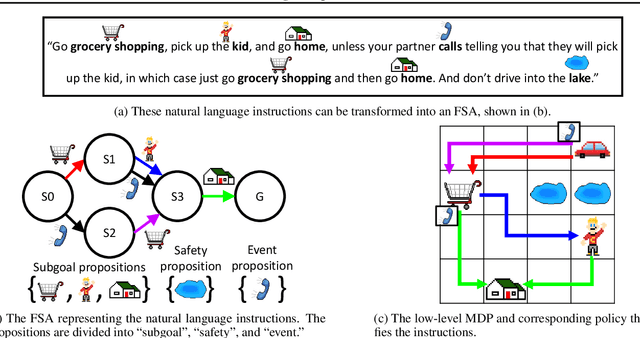

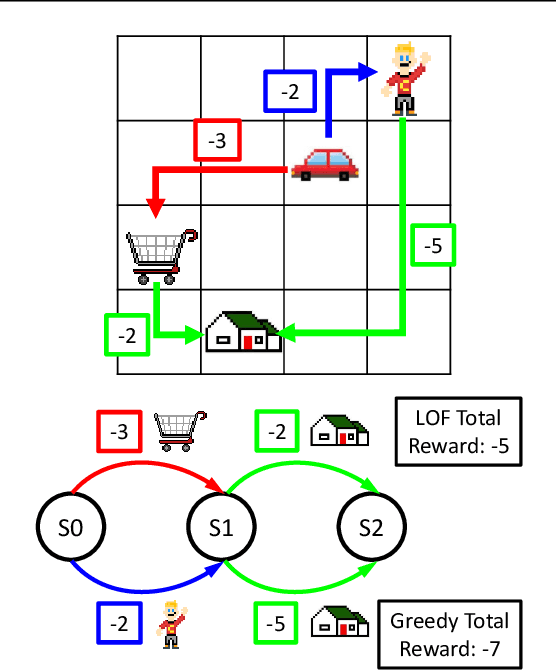

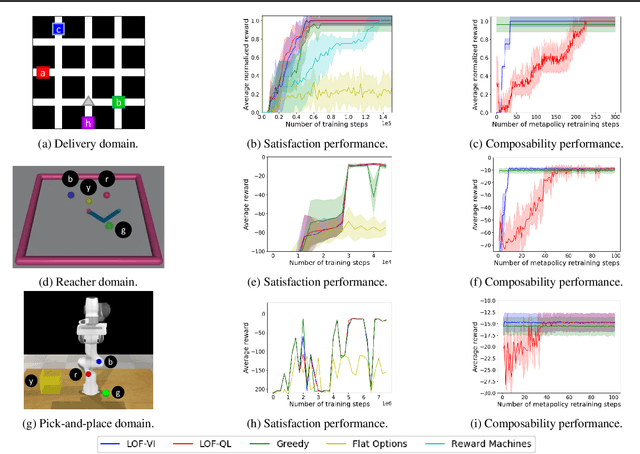

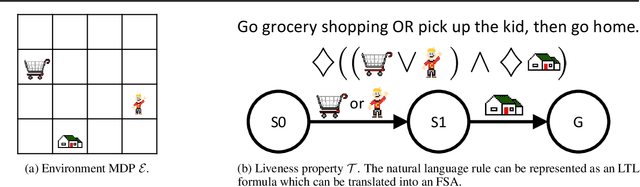

Abstract:Learning composable policies for environments with complex rules and tasks is a challenging problem. We introduce a hierarchical reinforcement learning framework called the Logical Options Framework (LOF) that learns policies that are satisfying, optimal, and composable. LOF efficiently learns policies that satisfy tasks by representing the task as an automaton and integrating it into learning and planning. We provide and prove conditions under which LOF will learn satisfying, optimal policies. And lastly, we show how LOF's learned policies can be composed to satisfy unseen tasks with only 10-50 retraining steps. We evaluate LOF on four tasks in discrete and continuous domains, including a 3D pick-and-place environment.

Functional Co-Optimization of Articulated Robots

Jul 20, 2017

Abstract:We present parametric trajectory optimization, a method for simultaneously computing physical parameters, actuation requirements, and robot motions for more efficient robot designs. In this scheme, robot dimensions, masses, and other physical parameters are solved for concurrently with traditional motion planning variables, including dynamically consistent robot states, actuation inputs, and contact forces. Our method requires minimal user domain knowledge, requiring only a coarse guess of the target robot configuration sequence and a parameterized robot topology as input. We demonstrate our results on four simulated robots, one of which we physically fabricated in order to demonstrate physical consistency. We demonstrate that by optimizing robot body parameters alongside robot trajectories, motion planning problems which would otherwise be infeasible can be made feasible, and actuation requirements can be significantly reduced.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge