Boyang Wan

Anomaly Detection in Video Sequences: A Benchmark and Computational Model

Jun 16, 2021

Abstract: Anomaly detection has attracted considerable research attention. However, existing anomaly detection databases have two major problems. First, they are limited in scale. Second, their training sets contain only video-level labels indicating whether an abnormal event occurs somewhere in the full video, and lack annotations of its precise time span. To tackle these problems, we contribute a new Large-scale Anomaly Detection (LAD) database as a benchmark for anomaly detection in video sequences, which has two distinguishing features. 1) It contains 2000 video sequences, including normal and abnormal video clips, covering 14 anomaly categories such as crash, fire, and violence across a wide variety of scenes, making it the largest anomaly analysis database to date. 2) It provides annotation data, including video-level labels (abnormal/normal video, anomaly type) and frame-level labels (abnormal/normal video frame), to facilitate anomaly detection. Leveraging these benefits of the LAD database, we further formulate anomaly detection as a fully supervised learning problem and propose a multi-task deep neural network to solve it. We first obtain the local spatiotemporal contextual feature by using an Inflated 3D convolutional (I3D) network. Then we construct a recurrent convolutional neural network, fed with the local spatiotemporal contextual feature, to extract the global spatiotemporal contextual feature. With the global spatiotemporal contextual feature, the anomaly type and score can be computed simultaneously by a multi-task neural network. Experimental results show that the proposed method outperforms state-of-the-art anomaly detection methods on our database and on other public anomaly detection databases. Code is available at https://github.com/wanboyang/anomaly_detection_LAD2000.
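The multi-task step described in this abstract, where one shared feature yields both an anomaly score and an anomaly type, can be illustrated with a minimal sketch. This is not the authors' implementation: the function `multi_task_head`, the toy weights, the three-category setup, and the choice of a sigmoid for the score and a softmax for the type are all assumptions made for illustration, and the feature vector is a stand-in for the global spatiotemporal contextual feature.

```python
import math

def sigmoid(x):
    """Squash a real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def dot(w, x):
    """Inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(w, x))

def multi_task_head(feature, score_w, type_w):
    """Hypothetical two-branch head over one shared feature.

    Returns (anomaly score in (0, 1), probability per anomaly type).
    """
    score = sigmoid(dot(score_w, feature))
    type_probs = softmax([dot(row, feature) for row in type_w])
    return score, type_probs

# Toy values only: a 3-dimensional stand-in feature and 3 toy categories
# (the LAD database described above has 14 anomaly categories).
feature = [0.5, -1.0, 2.0]
score_w = [0.3, 0.1, 0.4]
type_w = [[0.2, 0.0, 0.1], [0.0, 0.5, 0.3], [-0.1, 0.2, 0.0]]

score, type_probs = multi_task_head(feature, score_w, type_w)
print(score, type_probs)
```

Both outputs are computed from the same feature, so a training loss on either branch would shape the shared representation.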

Weakly Supervised Video Anomaly Detection via Center-guided Discriminative Learning

Apr 15, 2021

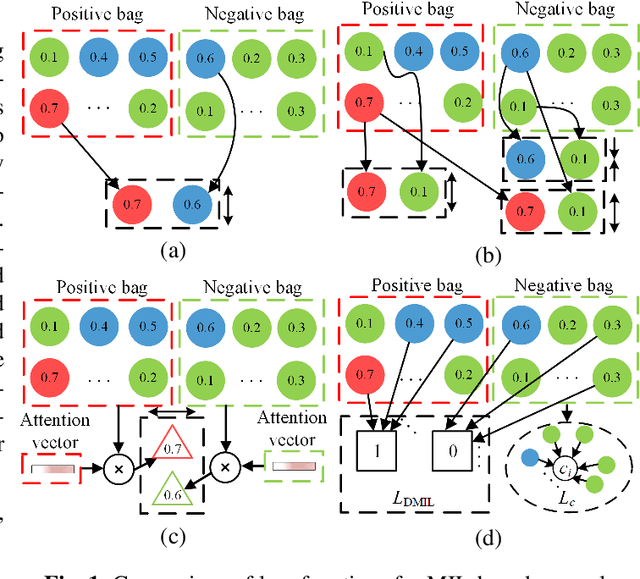

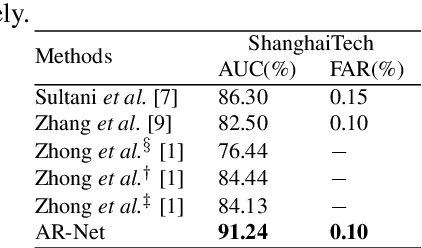

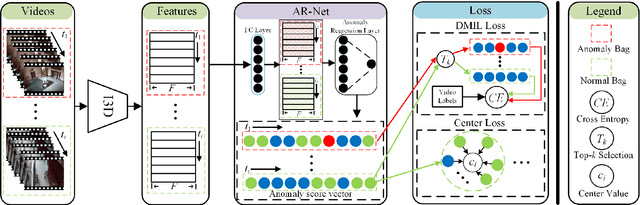

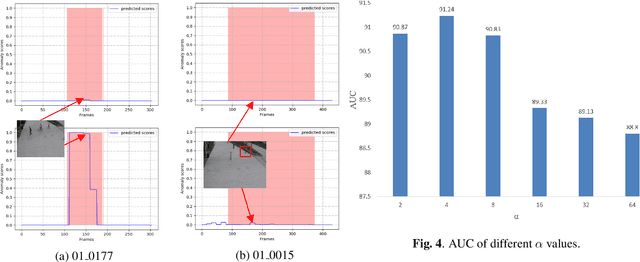

Abstract: Anomaly detection in surveillance videos is a challenging task due to the diversity of anomalous video content and duration. In this paper, we treat video anomaly detection as a regression problem over the anomaly scores of video clips under weak supervision. We propose an anomaly detection framework, called Anomaly Regression Net (AR-Net), which requires only video-level labels in the training stage. Further, to learn discriminative features for anomaly detection, we design a dynamic multiple-instance learning loss and a center loss for the proposed AR-Net. The former is used to enlarge the inter-class distance between anomalous and normal instances, while the latter is proposed to reduce the intra-class distance of normal instances. Comprehensive experiments are performed on a challenging benchmark, ShanghaiTech. Our method yields a new state-of-the-art result for video anomaly detection on the ShanghaiTech dataset.
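The roles of the two losses named in this abstract can be illustrated with a small sketch. It is written under stated assumptions and is not the paper's exact formulation: the multiple-instance loss is approximated here as binary cross-entropy between the mean of the top-k clip scores and the video-level label, the center loss as the mean squared distance of normal clip scores from their own mean, and the names `topk_mean`, `mil_loss`, `center_loss`, the value of `k`, and the 0.5 weighting are hypothetical.

```python
import math

def topk_mean(scores, k):
    """Mean of the k largest clip scores in one video (one 'bag')."""
    top = sorted(scores, reverse=True)[:k]
    return sum(top) / len(top)

def mil_loss(scores, video_label, k=2, eps=1e-7):
    """Binary cross-entropy between the top-k mean score and the video-level label."""
    p = min(max(topk_mean(scores, k), eps), 1.0 - eps)
    return -(video_label * math.log(p) + (1 - video_label) * math.log(1.0 - p))

def center_loss(normal_scores):
    """Mean squared distance of normal clip scores from their mean (the 'center')."""
    center = sum(normal_scores) / len(normal_scores)
    return sum((s - center) ** 2 for s in normal_scores) / len(normal_scores)

# Toy clip scores for one normal and one abnormal video; only the
# video-level labels (0 and 1) are used, as in weak supervision.
normal_video = [0.10, 0.12, 0.08, 0.10]
abnormal_video = [0.10, 0.90, 0.80, 0.15]

total = (mil_loss(normal_video, 0)
         + mil_loss(abnormal_video, 1)
         + 0.5 * center_loss(normal_video))  # 0.5 is an arbitrary weight
print(total)
```

In this sketch the cross-entropy term pushes the highest-scoring clips of abnormal videos toward 1 and those of normal videos toward 0, while the center term pulls the scores of normal clips toward a common value.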

Deep Smoke Segmentation

Sep 04, 2018

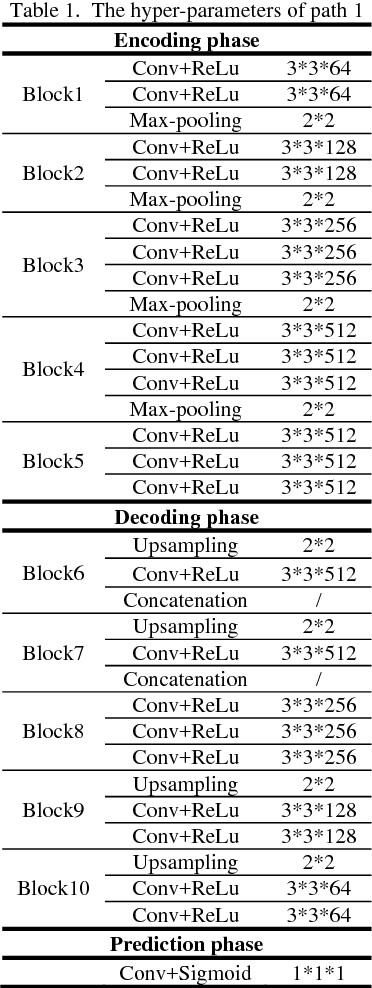

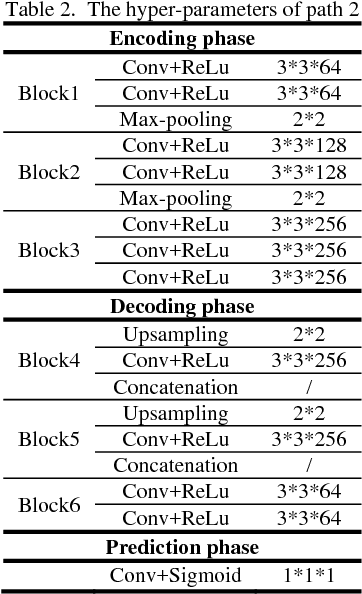

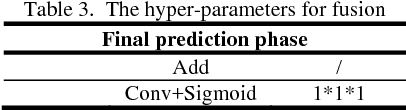

Abstract: Inspired by the recent success of fully convolutional networks (FCN) in semantic segmentation, we propose a deep smoke segmentation network to infer high-quality segmentation masks from blurry smoke images. To overcome large variations in the texture, color and shape of smoke, we divide the proposed network into a coarse path and a fine path. The first path is an encoder-decoder FCN with skip structures, which extracts global context information of smoke and accordingly generates a coarse segmentation mask. To retain fine spatial details of smoke, the second path is also designed as an encoder-decoder FCN with skip structures, but it is shallower than the first path. Finally, we propose a very small network containing only add, convolution and activation layers to fuse the results of the two paths. Thus, we can easily train the proposed network end to end, optimizing all network parameters simultaneously. To avoid the difficulty of manually labelling fuzzy smoke objects, we propose a method to generate synthetic smoke images. Using the results of our deep segmentation method, we can easily and accurately perform smoke detection in videos. Experiments on three synthetic smoke datasets and a realistic smoke dataset show that our method achieves much better performance than state-of-the-art segmentation algorithms based on FCNs. Test results of our method on videos are also promising.
