Boyang Lyu

A principled approach to model validation in domain generalization

Apr 02, 2023

Abstract:Domain generalization aims to learn a model with good generalization ability, that is, the learned model should not only perform well on several seen domains but also on unseen domains with different data distributions. State-of-the-art domain generalization methods typically train a representation function followed by a classifier jointly to minimize both the classification risk and the domain discrepancy. However, when it comes to model selection, most of these methods rely on traditional validation routines that select models solely based on the lowest classification risk on the validation set. In this paper, we theoretically demonstrate a trade-off between minimizing classification risk and mitigating domain discrepancy, i.e., it is impossible to achieve the minimum of these two objectives simultaneously. Motivated by this theoretical result, we propose a novel model selection method suggesting that the validation process should account for both the classification risk and the domain discrepancy. We validate the effectiveness of the proposed method by numerical results on several domain generalization datasets.
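As a rough illustration of validation that accounts for both quantities, the sketch below ranks candidate checkpoints by validation risk plus a weighted discrepancy term. The additive score, the `weight` parameter, the `Checkpoint` record, and the numbers are all assumptions made for illustration; the abstract does not specify the paper's actual selection rule.

```python
from typing import NamedTuple, Sequence


class Checkpoint(NamedTuple):
    """Hypothetical record of one candidate model's validation statistics."""
    name: str
    val_risk: float            # classification risk on the validation set
    domain_discrepancy: float  # any discrepancy estimate between seen domains


def select_checkpoint(checkpoints: Sequence[Checkpoint], weight: float = 1.0) -> Checkpoint:
    """Pick the checkpoint minimizing val_risk + weight * domain_discrepancy.

    The additive combination is an assumption of this sketch, not the
    method proposed in the paper.
    """
    if not checkpoints:
        raise ValueError("no checkpoints to select from")
    return min(checkpoints, key=lambda c: c.val_risk + weight * c.domain_discrepancy)


# Made-up numbers, for illustration only.
candidates = [
    Checkpoint("epoch_10", val_risk=0.30, domain_discrepancy=0.05),
    Checkpoint("epoch_20", val_risk=0.25, domain_discrepancy=0.40),
    Checkpoint("epoch_30", val_risk=0.28, domain_discrepancy=0.10),
]

risk_only = select_checkpoint(candidates, weight=0.0)  # traditional validation
joint = select_checkpoint(candidates, weight=1.0)      # risk and discrepancy together
print(risk_only.name, joint.name)
```

With `weight=0.0` the rule reduces to the traditional lowest-risk validation routine, so the two selections can be compared directly on the same candidates.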

Trade-off between reconstruction loss and feature alignment for domain generalization

Oct 26, 2022

Abstract:Domain generalization (DG) is a branch of transfer learning that aims to train learning models on several seen domains and subsequently apply these pre-trained models to other unseen (unknown but related) domains. To deal with challenging settings in DG where neither the data nor the labels of the unseen domain are available at training time, the most common approach is to design the classifiers based on the domain-invariant representation features, i.e., the latent representations that are unchanged and transferable between domains. Contrary to popular belief, we show that designing classifiers based on invariant representation features alone is necessary but insufficient in DG. Our analysis indicates the necessity of imposing a constraint on the reconstruction loss induced by representation functions to preserve most of the relevant information about the label in the latent space. More importantly, we point out the trade-off between minimizing the reconstruction loss and achieving domain alignment in DG. Our theoretical results motivate a new DG framework that jointly optimizes the reconstruction loss and the domain discrepancy. Both theoretical and numerical results are provided to justify our approach.

* 13 pages, 2 tables

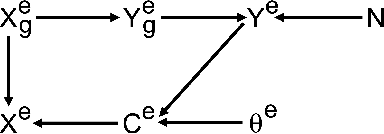

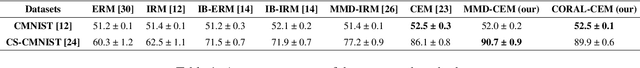

Joint covariate-alignment and concept-alignment: a framework for domain generalization

Aug 01, 2022

Abstract:In this paper, we propose a novel domain generalization (DG) framework based on a new upper bound to the risk on the unseen domain. In particular, our framework proposes to jointly minimize both the covariate shift and the concept shift between the seen domains for better performance on the unseen domain. While the proposed approach can be implemented via an arbitrary combination of covariate-alignment and concept-alignment modules, in this work we use well-established approaches for distributional alignment, namely Maximum Mean Discrepancy (MMD) and Covariance Alignment (CORAL), and use an Invariant Risk Minimization (IRM)-based approach for concept alignment. Our numerical results show that the proposed methods perform as well as or better than the state of the art for domain generalization on several data sets.
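For readers unfamiliar with the two distributional-alignment terms named in this abstract, the following is a minimal pure-Python sketch of a biased squared-MMD estimate with an RBF kernel and of a CORAL-style covariance-matching loss. The kernel choice, the bandwidth, the 1/(4d²) normalization (as commonly used in Deep CORAL), and the toy samples are assumptions of this sketch, not details taken from the paper.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def rbf(a: Vector, b: Vector, bandwidth: float = 1.0) -> float:
    """Gaussian (RBF) kernel between two feature vectors."""
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mean_kernel(xs: Sequence[Vector], ys: Sequence[Vector], bandwidth: float) -> float:
    """Average kernel value over all pairs (x, y)."""
    total = sum(rbf(a, b, bandwidth) for a in xs for b in ys)
    return total / (len(xs) * len(ys))


def mmd_squared(xs: Sequence[Vector], ys: Sequence[Vector], bandwidth: float = 1.0) -> float:
    """Biased (V-statistic) estimate of the squared MMD between two samples."""
    return (mean_kernel(xs, xs, bandwidth)
            + mean_kernel(ys, ys, bandwidth)
            - 2.0 * mean_kernel(xs, ys, bandwidth))


def covariance(xs: Sequence[Vector]) -> List[List[float]]:
    """Unbiased sample covariance matrix of the rows of xs."""
    n, d = len(xs), len(xs[0])
    means = [sum(row[j] for row in xs) / n for j in range(d)]
    return [[sum((row[i] - means[i]) * (row[j] - means[j]) for row in xs) / (n - 1)
             for j in range(d)] for i in range(d)]


def coral_loss(xs: Sequence[Vector], ys: Sequence[Vector]) -> float:
    """Squared Frobenius distance between covariances, scaled by 1/(4 d^2)."""
    d = len(xs[0])
    cx, cy = covariance(xs), covariance(ys)
    sq_frob = sum((cx[i][j] - cy[i][j]) ** 2 for i in range(d) for j in range(d))
    return sq_frob / (4.0 * d * d)


# Toy "domains": a unit square, a shifted copy, and a rescaled copy.
source = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
shifted = [(x + 3.0, y + 3.0) for x, y in source]
scaled = [(2.0 * x, 2.0 * y) for x, y in source]

print(mmd_squared(source, shifted), coral_loss(source, shifted))
print(mmd_squared(source, scaled), coral_loss(source, scaled))
```

On these toy samples, a pure mean shift is picked up by the MMD term but not by the covariance-matching term, which compares second-order statistics only.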

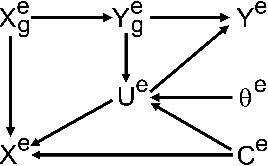

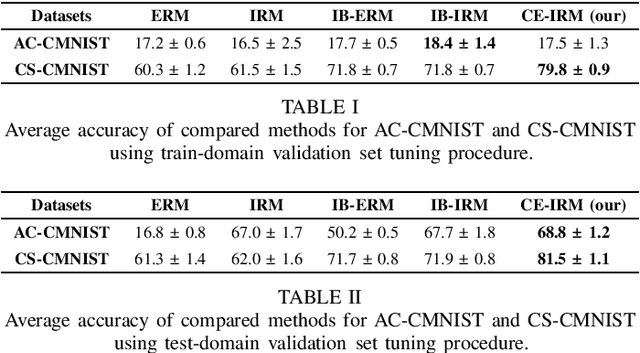

Conditional entropy minimization principle for learning domain invariant representation features

Jan 25, 2022

Abstract:Invariance principle-based methods, for example, Invariant Risk Minimization (IRM), have recently emerged as promising approaches for Domain Generalization (DG). Despite the promising theory, invariance principle-based approaches fail in common classification tasks due to the mixture of the true invariant features and the spurious invariant features. In this paper, we propose a framework based on the conditional entropy minimization principle to filter out the spurious invariant features, leading to a new algorithm with a better generalization capability. We theoretically prove that, under some particular assumptions, the representation function can precisely recover the true invariant features. In addition, we show that the proposed approach is closely related to the well-known Information Bottleneck framework. Both theoretical and numerical results are provided to justify our approach.

Barycenteric distribution alignment and manifold-restricted invertibility for domain generalization

Sep 04, 2021

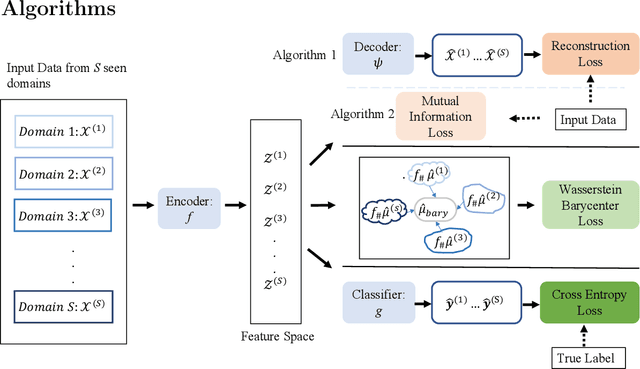

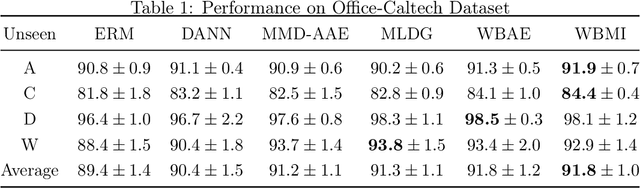

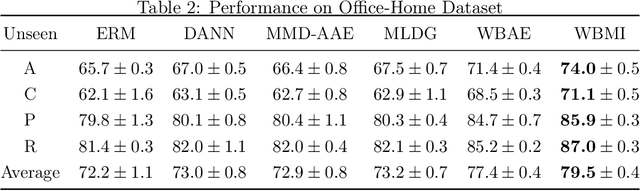

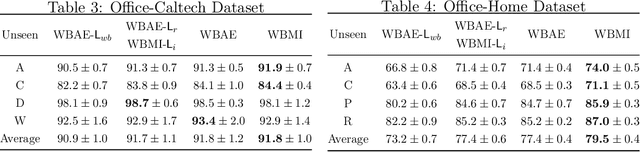

Abstract:For the Domain Generalization (DG) problem where the hypotheses are composed of a common representation function followed by a labeling function, we point out a shortcoming in existing approaches that fail to explicitly optimize for a term, appearing in a well-known and widely adopted upper bound to the risk on the unseen domain, that is dependent on the representation to be learned. To this end, we first derive a novel upper bound to the prediction risk. We show that imposing a mild assumption on the representation to be learned, namely manifold restricted invertibility, is sufficient to deal with this issue. Further, unlike existing approaches, our novel upper bound doesn't require the assumption of Lipschitzness of the loss function. In addition, the distributional discrepancy in the representation space is handled via the Wasserstein-2 barycenter cost. In this context, we creatively leverage old and recent transport inequalities, which link various optimal transport metrics, in particular the $L^1$ distance (also known as the total variation distance) and the Wasserstein-2 distances, with the Kullback-Leibler divergence. These analyses and insights motivate a new representation learning cost for DG that additively balances three competing objectives: 1) minimizing classification error across seen domains via cross-entropy, 2) enforcing domain-invariance in the representation space via the Wasserstein-2 barycenter cost, and 3) promoting non-degenerate, nearly-invertible representation via one of two mechanisms, viz., an autoencoder-based reconstruction loss or a mutual information loss. It is to be noted that the proposed algorithms completely bypass the use of any adversarial training mechanism that is typical of many current domain generalization approaches. Simulation results on several standard datasets demonstrate superior performance compared to several well-known DG algorithms.

Soft and subspace robust multivariate rank tests based on entropy regularized optimal transport

Mar 16, 2021

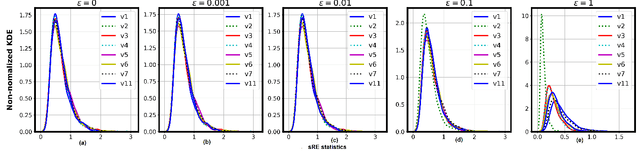

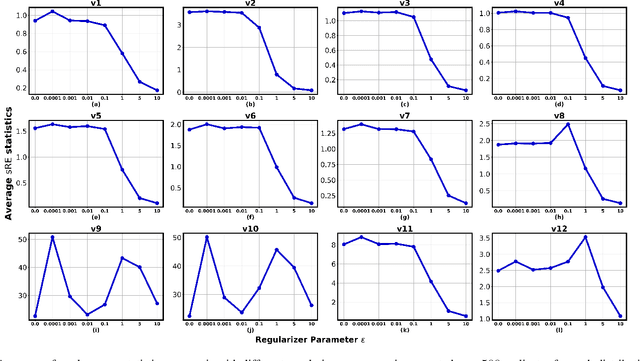

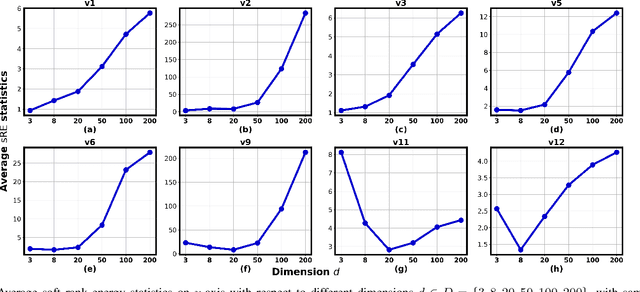

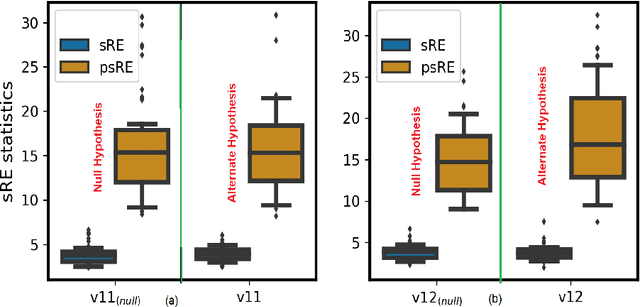

Abstract:In this paper, we extend the recently proposed multivariate rank energy distance, based on the theory of optimal transport, for statistical testing of distributional similarity, to soft rank energy distance. Being differentiable, this in turn allows us to extend the rank energy to a subspace robust rank energy distance, dubbed Projected soft-Rank Energy distance, which can be computed via optimization over the Stiefel manifold. We show via experiments that, using projected soft rank energy, one can trade off detection power against false alarms via projections onto an appropriately selected low-dimensional subspace. We also show the utility of the proposed tests on unsupervised change point detection in multivariate time series data. All code is publicly available at the link provided in the experiment section.

Domain Adaptation for Robust Workload Classification using fNIRS

Jul 02, 2020

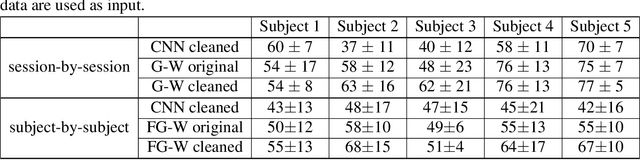

Abstract:Significance: We demonstrated the potential of using domain adaptation on functional Near-Infrared Spectroscopy (fNIRS) data to detect and discriminate different levels of n-back tasks that involve working memory across different experiment sessions and subjects. Aim: To address the domain shift in fNIRS data across sessions and subjects for task label alignment, we exploited two domain adaptation approaches - Gromov-Wasserstein (G-W) and Fused Gromov-Wasserstein (FG-W). Approach: We applied G-W for session-by-session alignment and FG-W for subject-by-subject alignment, with the Hellinger distance as the underlying metric, to fNIRS data acquired during different n-back task levels. We also compared with a supervised method - Convolutional Neural Network (CNN). Results: For session-by-session alignment, using G-W resulted in alignment accuracy of 70 $\pm$ 4 % (weighted mean $\pm$ standard error), whereas using CNN resulted in classification accuracy of 58 $\pm$ 5 % across five subjects. For subject-by-subject alignment, using FG-W resulted in alignment accuracy of 55 $\pm$ 3 %, whereas using CNN resulted in classification accuracy of 45 $\pm$ 1 %. In both cases, 25 % represents chance. We also showed that removal of motion artifacts from the fNIRS data plays an important role in improving alignment performance. Conclusions: Domain adaptation shows potential for session-by-session and subject-by-subject alignment using fNIRS data.
