Benoit Gosselin

Towards Robust and Interpretable EMG-based Hand Gesture Recognition using Deep Metric Meta Learning

Apr 17, 2024

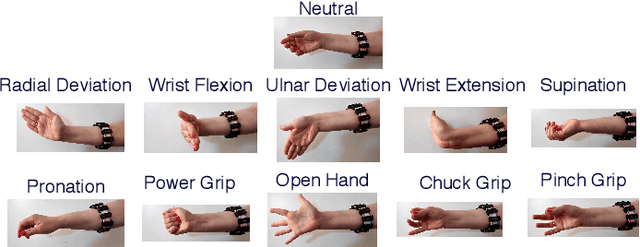

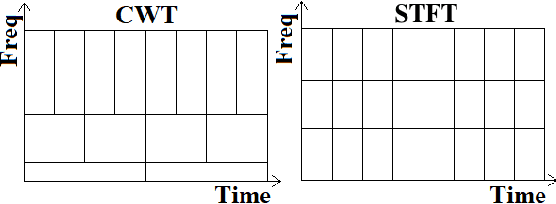

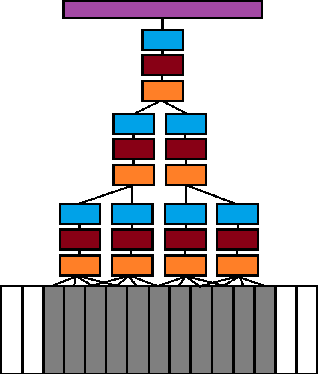

Abstract: Current electromyography (EMG) pattern recognition (PR) models have been shown to generalize poorly in unconstrained environments, setting back their adoption in applications such as hand gesture control. This problem is often due to limited training data, exacerbated by the use of supervised classification frameworks that are known to be suboptimal in such settings. In this work, we propose a shift to deep metric-based meta-learning in EMG PR to supervise the creation of meaningful and interpretable representations. We use a Siamese Deep Convolutional Neural Network (SDCNN) and contrastive triplet loss to learn an EMG feature embedding space that captures the distribution of the different classes. A nearest-centroid approach is subsequently employed for inference, relying on how closely a test sample aligns with the established data distributions. We derive a robust class proximity-based confidence estimator that leads to a better rejection of incorrect decisions, i.e., false positives, especially when operating beyond the training data domain. We show our approach's efficacy by testing the trained SDCNN's predictions and confidence estimations on unseen data, both in and out of the training domain. The evaluation metrics include the accuracy-rejection curve and the Kullback-Leibler divergence between the confidence distributions of accurate and inaccurate predictions. Outperforming comparable models on both metrics, our results demonstrate that the proposed meta-learning approach improves the classifier's precision in active decisions (after rejection), thus leading to better generalization and applicability.
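The pipeline described in this abstract (triplet loss, nearest-centroid inference, proximity-based confidence, rejection) can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the margin value, and the choice of a softmax over negative distances as the confidence score are all assumptions made for illustration, and the embeddings are assumed to have already been produced by some trained network.

```python
import math


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet margin loss on Euclidean distances (margin is an assumed value)."""
    return max(0.0, math.dist(anchor, positive) - math.dist(anchor, negative) + margin)


def fit_centroids(embeddings, labels):
    """Average the embeddings of each class to obtain one centroid per class."""
    by_class = {}
    for vector, label in zip(embeddings, labels):
        by_class.setdefault(label, []).append(vector)
    return {
        label: [sum(col) / len(vectors) for col in zip(*vectors)]
        for label, vectors in by_class.items()
    }


def predict_with_confidence(centroids, x):
    """Return the nearest class and a proximity-based confidence in (0, 1].

    Assumption: confidence is a softmax over negative distances to the
    centroids; the paper derives its own estimator, which may differ.
    """
    dists = {label: math.dist(x, c) for label, c in centroids.items()}
    nearest = min(dists, key=dists.get)
    shift = dists[nearest]  # subtract the smallest distance for numerical stability
    weights = {label: math.exp(-(d - shift)) for label, d in dists.items()}
    return nearest, weights[nearest] / sum(weights.values())


def accuracy_after_rejection(predictions, truths, threshold):
    """One point of an accuracy-rejection curve: (accuracy on kept samples, coverage)."""
    kept = [(p, t) for (p, conf), t in zip(predictions, truths) if conf >= threshold]
    if not kept:
        return None, 0.0
    accuracy = sum(p == t for p, t in kept) / len(kept)
    return accuracy, len(kept) / len(predictions)
```

Sweeping `threshold` from 0 to 1 and plotting accuracy against the rejected fraction would give an accuracy-rejection curve of the kind the abstract uses as an evaluation metric.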

A Flexible and Modular Body-Machine Interface for Individuals Living with Severe Disabilities

Jul 29, 2020

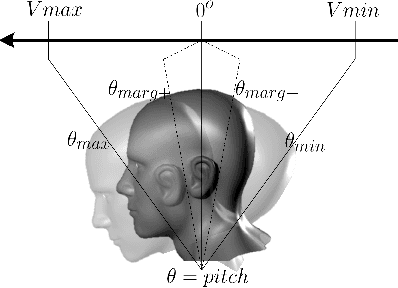

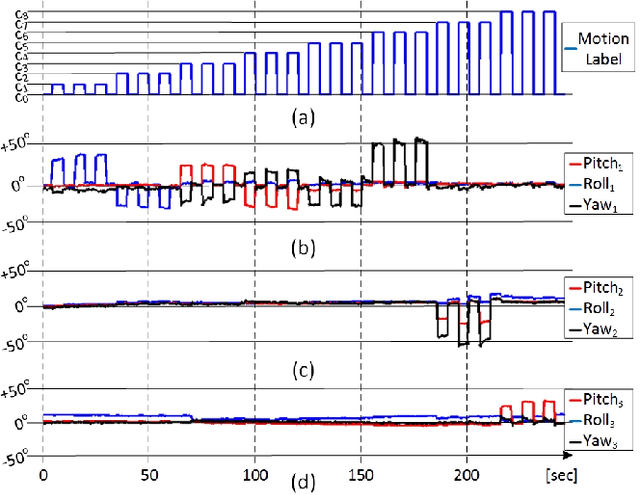

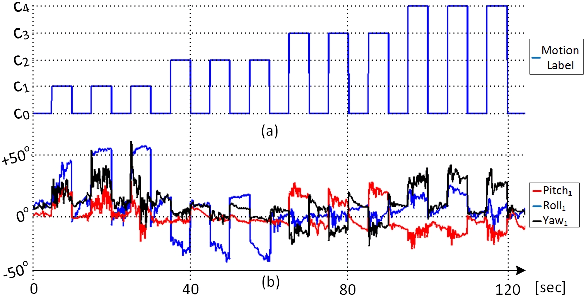

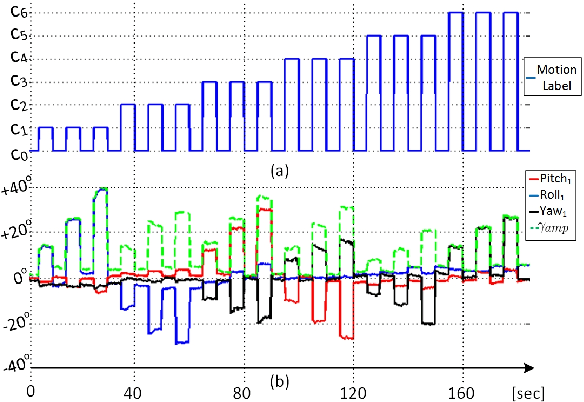

Abstract: This paper presents a control interface to translate the residual body motions of individuals living with severe disabilities into control commands for body-machine interaction. A custom, wireless, wearable multi-sensor network is used to collect motion data from multiple points on the body in real time. The proposed solution successfully leverages electromyography gesture recognition techniques for the recognition of inertial measurement unit (IMU)-based commands, without the need for cumbersome and noisy surface electrodes. Motion pattern recognition is performed using a computationally inexpensive classifier (Linear Discriminant Analysis) so that the solution can be deployed onto lightweight embedded platforms. Five participants (three able-bodied and two living with upper-body disabilities) presenting different motion limitations (e.g., spasms, reduced motion range) were recruited. They were asked to perform up to 9 different motion classes, including head, shoulder, finger, and foot motions, with respect to their residual functional capacities. The measured prediction performances show an average accuracy of 99.96% for able-bodied individuals and 91.66% for participants with upper-body disabilities. The recorded dataset has also been made available online to the research community. Proof of concept for the real-time use of the system is given through an assembly task replicating activities of daily living using the JACO arm from Kinova Robotics.

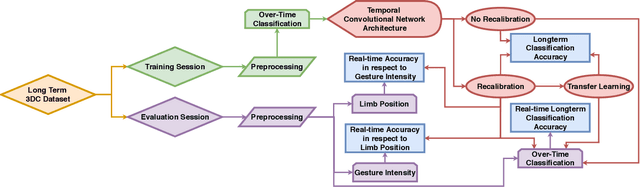

Unsupervised Domain Adversarial Self-Calibration for Electromyographic-based Gesture Recognition

Dec 21, 2019

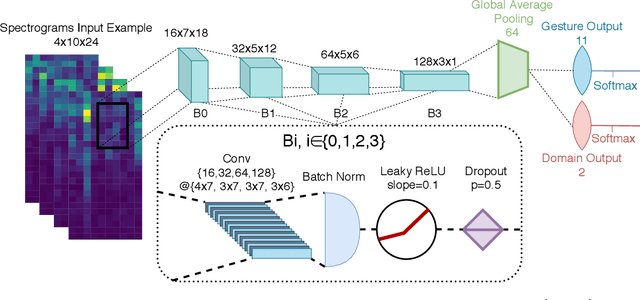

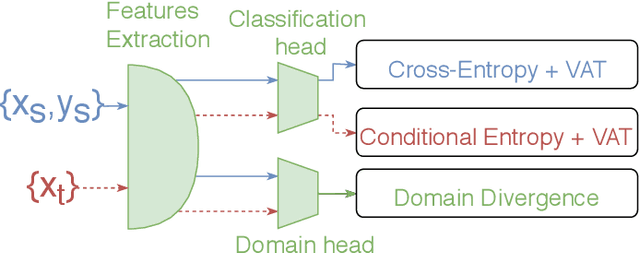

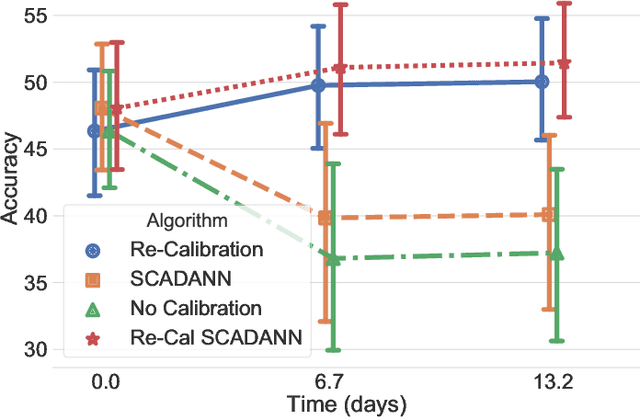

Abstract: Surface electromyography (sEMG) provides an intuitive and non-invasive interface from which to control machines. However, preserving the myoelectric control system's performance over multiple days is challenging, due to the transient nature of this recording technique. In practice, if the system is to remain usable, a time-consuming and periodic re-calibration is necessary. In the case where the sEMG interface is employed every few days, the user might need to do this re-calibration before every use, thus severely limiting the practicality of such a control method. Consequently, this paper proposes tackling the especially challenging task of adapting to sEMG signals when multiple days have elapsed between each recording, by presenting SCADANN, a new, deep learning-based, self-calibrating algorithm. SCADANN is ranked against three state-of-the-art domain adversarial algorithms and a multiple-vote self-calibrating algorithm on both offline and online datasets. Overall, SCADANN is shown to systematically improve classifiers' performance over no adaptation and ranks first in almost all the cases tested.
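To make the idea of vote-based self-calibration concrete, here is a minimal sketch of how pseudo-labels could be derived from a classifier's own predictions on an unlabeled session. It is not SCADANN and not the specific multiple-vote baseline from the paper: the function name, the sliding-window scheme, and the `half_window` and `min_agreement` parameters are assumptions chosen for illustration.

```python
from collections import Counter


def majority_vote_pseudo_labels(predictions, half_window=2, min_agreement=0.6):
    """Relabel each prediction with the majority label of its neighbourhood.

    predictions: class labels predicted on consecutive windows of an
    unlabeled recording. A position is given None (left unlabeled) when
    the majority label covers less than `min_agreement` of the
    neighbourhood, so that uncertain segments are not used for
    re-calibration.
    """
    pseudo = []
    for i in range(len(predictions)):
        start = max(0, i - half_window)
        stop = min(len(predictions), i + half_window + 1)
        neighbourhood = predictions[start:stop]
        label, count = Counter(neighbourhood).most_common(1)[0]
        agreement = count / len(neighbourhood)
        pseudo.append(label if agreement >= min_agreement else None)
    return pseudo
```

The retained pseudo-labels could then be used to fine-tune the classifier on the new session without asking the user for an explicit re-calibration; the stricter `min_agreement` is, the fewer but more reliable the pseudo-labels.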

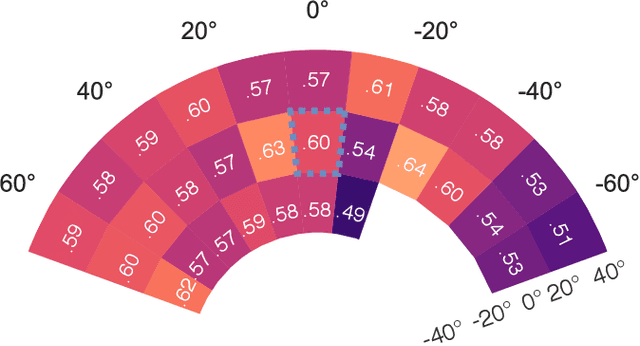

Virtual Reality to Study the Gap Between Offline and Real-Time EMG-based Gesture Recognition

Dec 16, 2019

Abstract: Within sEMG-based gesture recognition, a chasm exists in the literature between offline accuracy and real-time usability of a classifier. This gap mainly stems from the four main dynamic factors in sEMG-based gesture recognition: gesture intensity, limb position, electrode shift and transient changes in the signal. These factors are hard to include within an offline dataset as each of them exponentially increases the number of segments to be recorded. On the other hand, online datasets are biased towards the sEMG-based algorithms providing feedback to the participants, limiting the usability of such datasets as benchmarks. This paper proposes a virtual reality (VR) environment and a real-time experimental protocol from which the four main dynamic factors can more easily be studied. During the online experiment, the gesture recognition feedback is provided through the Leap Motion camera, enabling the proposed dataset to be re-used to compare future sEMG-based algorithms. Twenty able-bodied participants took part in this study, completing three to four sessions over a period spanning between 14 and 21 days. Finally, TADANN, a new transfer learning-based algorithm, is proposed for long-term gesture classification and significantly (p<0.05) outperforms fine-tuning a network.

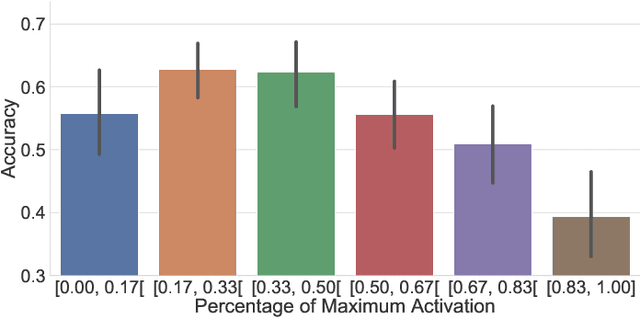

Interpreting Deep Learning Features for Myoelectric Control: A Comparison with Handcrafted Features

Nov 30, 2019

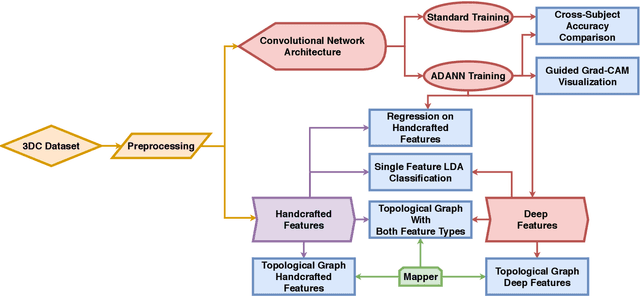

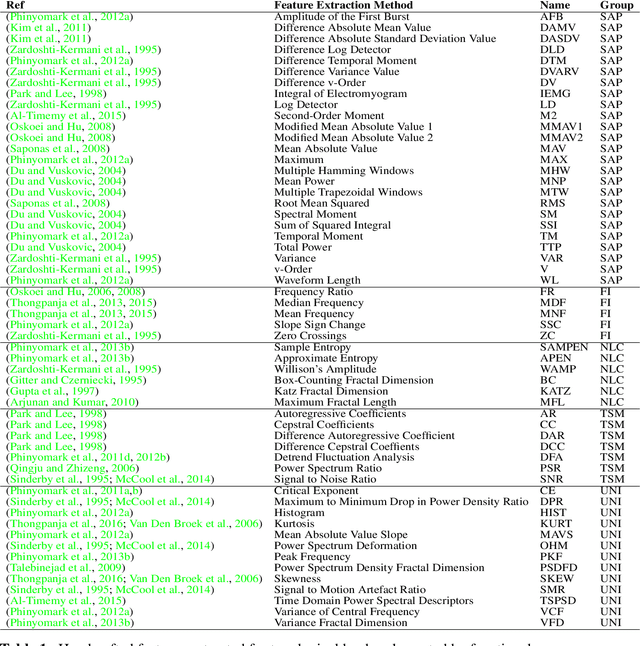

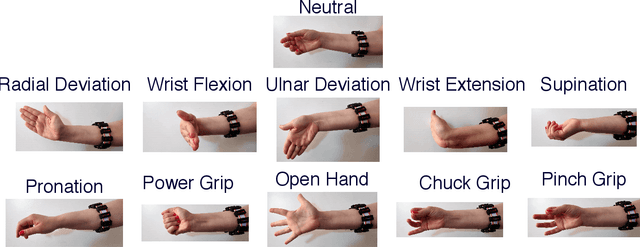

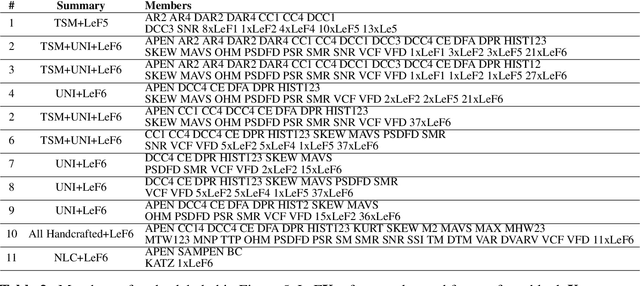

Abstract: The research in myoelectric control systems primarily focuses on extracting discriminative representations from the electromyographic (EMG) signal by designing handcrafted features. Recently, deep learning techniques have been applied to the challenging task of EMG-based gesture recognition. The adoption of these techniques slowly shifts the focus from feature engineering to feature learning. However, the black-box nature of deep learning makes it hard to understand the type of information learned by the network and how it relates to handcrafted features. Additionally, due to the high variability in EMG recordings between participants, deep features tend to generalize poorly across subjects using standard training methods. Consequently, this work introduces a new multi-domain learning algorithm, named ADANN, which significantly enhances (p=0.00004) inter-subject classification accuracy by an average of 19.40% compared to standard training. Using ADANN-generated features, the main contribution of this work is to provide the first topological data analysis of EMG-based gesture recognition for the characterisation of the information encoded within a deep network, using handcrafted features as landmarks. This analysis reveals that handcrafted features and the learned features (in the earlier layers) both try to discriminate between all gestures, but do not encode the same information to do so. Furthermore, using convolutional network visualization techniques reveals that learned features tend to ignore the most activated channel during gesture contraction, which is in stark contrast with the prevalence of handcrafted features designed to capture amplitude information. Overall, this work paves the way for hybrid feature sets by providing a clear guideline of complementary information encoded within learned and handcrafted features.

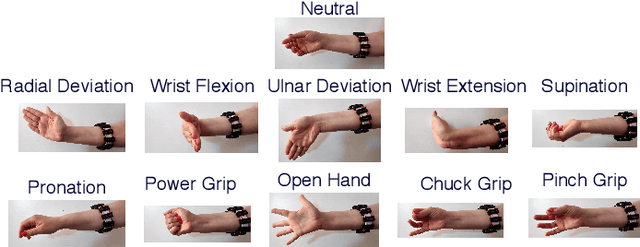

Deep Learning for Electromyographic Hand Gesture Signal Classification Using Transfer Learning

Jun 12, 2018

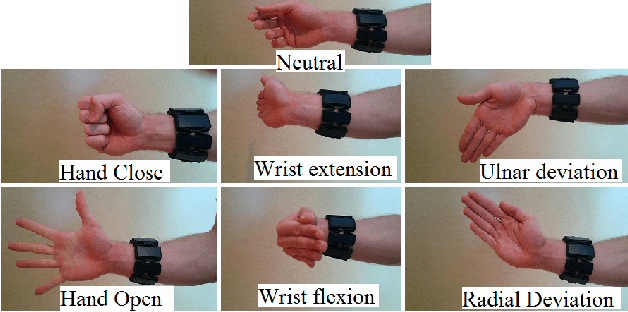

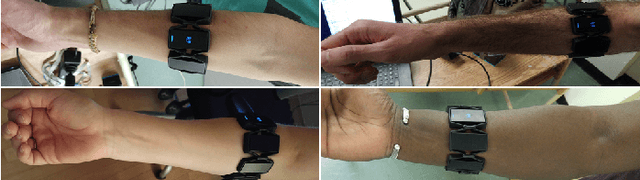

Abstract: In recent years, the use of deep learning algorithms has become increasingly prominent for their unparalleled ability to automatically learn discriminant features from large amounts of data. However, within the field of electromyography-based gesture recognition, deep learning algorithms are seldom employed, as it would require an unreasonable amount of time for a single person, in a single session, to generate tens of thousands of examples. This work's hypothesis is that general, informative features can be learned from the large amount of data generated by aggregating the signals of multiple users, thus reducing the recording burden imposed on a single person while enhancing gesture recognition. As such, this paper proposes applying transfer learning on the aggregated data of multiple users, while leveraging the capacity of deep learning algorithms to learn discriminant features from large datasets, without the need for in-depth feature engineering. To this end, two datasets are recorded with the Myo Armband (Thalmic Labs), a low-cost, low-sampling-rate (200 Hz), 8-channel, consumer-grade, dry-electrode sEMG armband. These two datasets comprise 19 and 17 able-bodied participants, respectively. A third dataset, also recorded with the Myo Armband, was taken from the NinaPro database and comprises 10 able-bodied participants. This transfer learning scheme is shown to outperform the current state of the art in gesture recognition. It achieves an average accuracy of 98.31% for 7 hand/wrist gestures over 17 able-bodied participants and 65.57% for 18 hand/wrist gestures over 10 able-bodied participants. Finally, a use-case study employing eight able-bodied participants suggests that real-time feedback reduces the degradation in accuracy normally experienced over time.
