Virtual Reality to Study the Gap Between Offline and Real-Time EMG-based Gesture Recognition

Dec 16, 2019

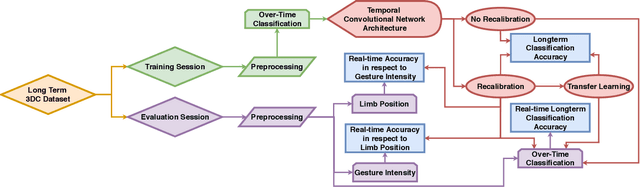

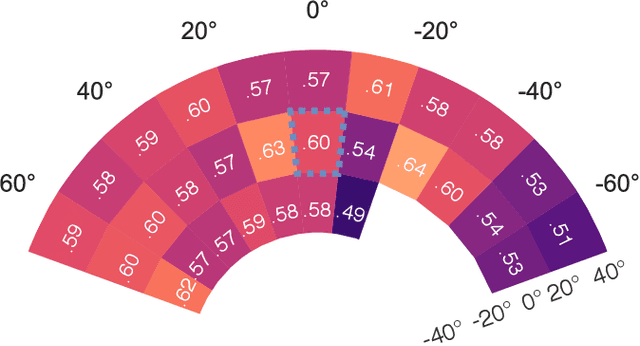

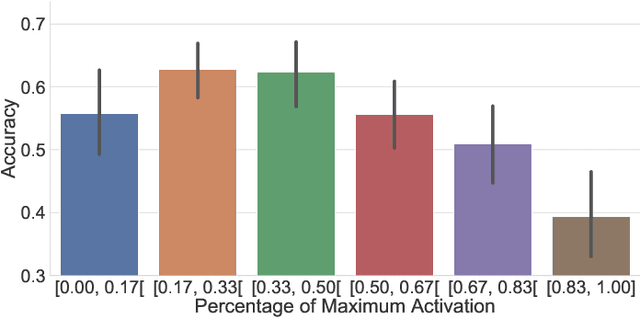

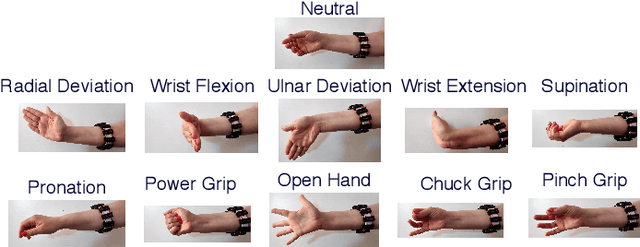

In sEMG-based gesture recognition, the literature shows a chasm between a classifier's offline accuracy and its real-time usability. This gap stems mainly from four dynamic factors: gesture intensity, limb position, electrode shift, and transient changes in the signal. These factors are hard to include in an offline dataset because each of them exponentially increases the number of segments to be recorded. Online datasets, on the other hand, are biased toward the sEMG-based algorithm that provides feedback to the participants, which limits their usability as benchmarks. This paper proposes a virtual reality (VR) environment and a real-time experimental protocol in which the four main dynamic factors can be studied more easily. During the online experiment, gesture recognition feedback is provided through the Leap Motion camera rather than an sEMG-based classifier, so the proposed dataset can be reused to compare future sEMG-based algorithms. Twenty able-bodied participants took part in this study, each completing three to four sessions over a period of 14 to 21 days. Finally, TADANN, a new transfer learning-based algorithm, is proposed for long-term gesture classification and significantly (p < 0.05) outperforms fine-tuning a network.
