Alexandre Campeau-Lecours

Towards Robust and Interpretable EMG-based Hand Gesture Recognition using Deep Metric Meta Learning

Apr 17, 2024

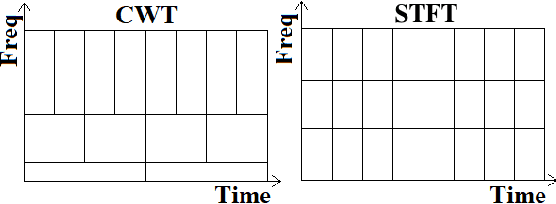

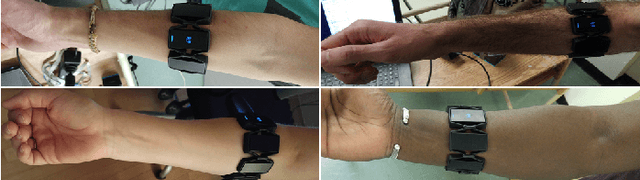

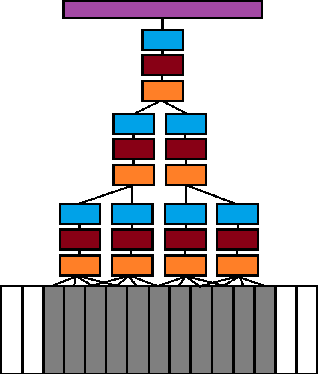

Abstract: Current electromyography (EMG) pattern recognition (PR) models have been shown to generalize poorly in unconstrained environments, setting back their adoption in applications such as hand gesture control. This problem is often due to limited training data, exacerbated by the use of supervised classification frameworks that are known to be suboptimal in such settings. In this work, we propose a shift to deep metric-based meta-learning in EMG PR to supervise the creation of meaningful and interpretable representations. We use a Siamese Deep Convolutional Neural Network (SDCNN) and contrastive triplet loss to learn an EMG feature embedding space that captures the distribution of the different classes. A nearest-centroid approach is subsequently employed for inference, relying on how closely a test sample aligns with the established data distributions. We derive a robust class proximity-based confidence estimator that leads to a better rejection of incorrect decisions, i.e. false positives, especially when operating beyond the training data domain. We show our approach's efficacy by testing the trained SDCNN's predictions and confidence estimations on unseen data, both in and out of the training domain. The evaluation metrics include the accuracy-rejection curve and the Kullback-Leibler divergence between the confidence distributions of accurate and inaccurate predictions. Outperforming comparable models on both metrics, our results demonstrate that the proposed meta-learning approach improves the classifier's precision in active decisions (after rejection), thus leading to better generalization and applicability.
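The nearest-centroid inference and proximity-based rejection described in this abstract can be illustrated with a minimal sketch. It assumes the embeddings have already been produced by a trained network, and it uses a softmax over negative Euclidean distances as the confidence score. That confidence formula, the function names, and the `threshold` value are illustrative assumptions, not the estimator derived in the paper.

```python
import math

def centroid(points):
    """Mean of a list of equal-length embedding vectors."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]

def fit_centroids(embeddings, labels):
    """Group embeddings by class label and compute one centroid per class."""
    by_class = {}
    for vec, lab in zip(embeddings, labels):
        by_class.setdefault(lab, []).append(vec)
    return {lab: centroid(vecs) for lab, vecs in by_class.items()}

def predict(centroids, x):
    """Return (label, confidence) for embedding x.

    Confidence is a softmax over negative distances to the centroids
    (an assumed stand-in for the paper's estimator).
    """
    dists = {lab: math.dist(x, c) for lab, c in centroids.items()}
    nearest = min(dists.values())
    # Subtract the smallest distance for numerical stability.
    weights = {lab: math.exp(-(d - nearest)) for lab, d in dists.items()}
    label = min(dists, key=dists.get)
    return label, weights[label] / sum(weights.values())

def predict_with_rejection(centroids, x, threshold=0.8):
    """Return (label or None, confidence); None means the decision is rejected."""
    label, conf = predict(centroids, x)
    return (label if conf >= threshold else None), conf

# Toy 2-D embeddings with hypothetical gesture labels.
centroids = fit_centroids(
    [[0, 0], [0, 2], [10, 10], [10, 12]],
    ["rest", "rest", "fist", "fist"],
)
near_label, near_conf = predict_with_rejection(centroids, [0, 1])
far_label, far_conf = predict_with_rejection(centroids, [5, 6])
```

In this toy setup, a sample sitting on a class centroid is accepted, while a sample equidistant from both centroids receives a confidence of 0.5 and is rejected.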

Development and Validation of a Data Fusion Algorithm with Low-Cost Inertial Measurement Units to Analyze Shoulder Movements in Manual Workers

Oct 15, 2020

Abstract: Work-related upper extremity musculoskeletal disorders (WRUED) are a major problem in modern societies as they affect the quality of life of workers and lead to absenteeism and productivity loss. According to studies performed in North America and Western Europe, their prevalence has increased in the last few decades. This challenge calls for improvements in prevention methods. One avenue is through the development of wearable sensor systems to analyze workers' movements and provide feedback to workers and/or clinicians. Such systems could decrease the physical work demands and ultimately prevent musculoskeletal disorders. This paper presents the development and validation of a data fusion algorithm for inertial measurement units to analyze workers' arm elevation. The algorithm was implemented on two commercial sensor systems (Actigraph GT9X and LSM9DS1) and results were compared with the data fusion results from a validated commercial sensor (XSens MVN system). Cross-correlation analyses [r], root-mean-square error (RMSE) and average absolute error of estimate were used to establish the construct validity of the algorithm. Five subjects each performed ten different arm elevation tasks. The results show that the algorithm is valid to evaluate shoulder movements, with high correlations between the results of the two different sensors and the commercial sensor (0.900-0.998) and relatively low RMSE values for the ten tasks (1.66-11.24°). The proposed data fusion algorithm could thus be used to estimate arm elevation.
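The agreement metrics named in this abstract can be sketched as follows for two time-aligned series of arm elevation angles in degrees. The function names and the sample values are hypothetical, and the correlation shown is a zero-lag Pearson coefficient, which is an assumption about how the reported r was computed.

```python
import math

def rmse(estimate, reference):
    """Root-mean-square error between two equal-length angle series."""
    n = len(estimate)
    return math.sqrt(sum((e - r) ** 2 for e, r in zip(estimate, reference)) / n)

def mean_abs_error(estimate, reference):
    """Average absolute error between two equal-length angle series."""
    return sum(abs(e - r) for e, r in zip(estimate, reference)) / len(estimate)

def pearson_r(a, b):
    """Zero-lag Pearson correlation coefficient between two series."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

# Made-up elevation angles (degrees): a reference system and a
# low-cost sensor that reads a constant 2 degrees high.
reference = [0.0, 30.0, 60.0, 90.0]
estimate = [2.0, 32.0, 62.0, 92.0]

error_rmse = rmse(estimate, reference)
error_mae = mean_abs_error(estimate, reference)
correlation = pearson_r(estimate, reference)
```

A constant offset leaves the correlation at 1 while the RMSE and absolute error stay at the offset value, which is one reason correlation and error metrics are worth reporting together.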

Intuitive sequence matching algorithm applied to a sip-and-puff control interface for robotic assistive devices

Oct 15, 2020

Abstract: This paper presents the development and preliminary validation of a control interface based on a sequence matching algorithm. An important challenge in the field of assistive technology is for users to control high dimensionality devices (e.g., assistive robot with several degrees of freedom, or computer) with low dimensionality control interfaces (e.g., a few switches). Sequence matching consists in the recognition of a pattern obtained from a sensor's signal compared to a predefined pattern library. The objective is to allow the user to input several different commands with a low dimensionality interface (e.g., Morse code allowing inputting several letters with a single switch). In this paper, the algorithm is used in the context of the control of an assistive robotic arm and has been adapted to a sip-and-puff interface where short and long bursts can be detected. Compared to a classic sip-and-puff interface, a preliminary validation with 8 healthy subjects has shown that sequence matching makes the control faster, easier and more comfortable. This paper is a proof of concept that leads the way towards a more advanced algorithm and the usage of more versatile sensors such as inertial measurement units (IMU).
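The idea of matching a sequence of short and long sip-and-puff bursts against a predefined pattern library can be sketched as below. The duration threshold, the pattern library, and the command names are all hypothetical and chosen only for illustration. The abstract does not specify them.

```python
# Assumed boundary between a "short" and a "long" burst, in seconds.
LONG_THRESHOLD_S = 0.5

# Hypothetical pattern library: burst sequences mapped to robot commands.
PATTERNS = {
    ("puff_short",): "move_forward",
    ("sip_short",): "move_backward",
    ("puff_short", "puff_short"): "move_up",
    ("sip_short", "sip_short"): "move_down",
    ("puff_short", "sip_long"): "open_gripper",
    ("sip_short", "puff_long"): "close_gripper",
}

def classify_burst(kind, duration_s):
    """Label one burst, e.g. ('puff', 0.2) -> 'puff_short'."""
    length = "long" if duration_s >= LONG_THRESHOLD_S else "short"
    return f"{kind}_{length}"

def match_sequence(bursts, library=PATTERNS):
    """Return the command for a burst sequence, or None if no pattern matches."""
    key = tuple(classify_burst(kind, duration) for kind, duration in bursts)
    return library.get(key)

command = match_sequence([("puff", 0.2), ("sip", 0.9)])
unknown = match_sequence([("sip", 0.9), ("sip", 0.9), ("sip", 0.9)])
```

With two burst types and two durations, sequences of length one or two already give 20 distinct patterns from a single sensor, which is the dimensionality gain the abstract compares to Morse code.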

Mechanical Design Improvement of a Passive Device to Assist Eating in People Living with Movement Disorders

Oct 14, 2020

Abstract: Many people living with neurological disorders, such as cerebral palsy, stroke, muscular dystrophy or dystonia, experience upper limb impairments (muscle spasticity, loss of selective motor control, muscle weakness or tremors) and have difficulty eating independently. The general goal of this project is to develop a new device to assist with eating, aimed at stabilizing the movement of people who have movement disorders. A first iteration of the device was validated with children living with cerebral palsy and showed promising results. This validation, however, pointed out important drawbacks. This paper presents an iteration of the design which includes a new mechanism reducing the required arm elevation, improving safety through a compliant utensil attachment, and improving damping and other static balancing factors.

Mechanical Orthosis Mechanism to Facilitate the Extension of the Leg

Oct 14, 2020

Abstract: This paper presents the design of a mechanism to help people using mechanical orthoses to extend their legs easily and lock the knee joint of their orthosis. Many people living with spinal cord injury are living with paraplegia and thus need orthoses to be able to stand and walk. Mechanical orthoses are common types of orthopedic devices. This paper proposes to add a mechanism that creates a lever arm just below the knee joint to help the user extend the leg. The development of the mechanism is first presented, then followed by the results of the tests that were conducted.

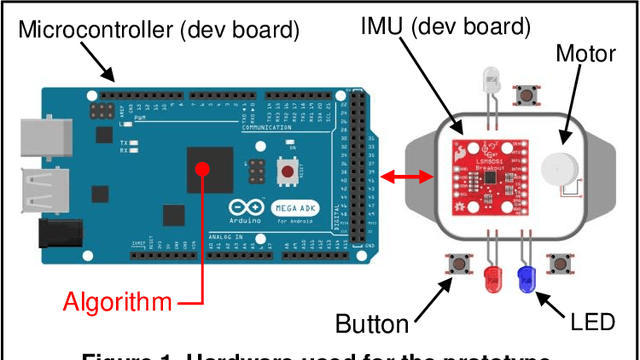

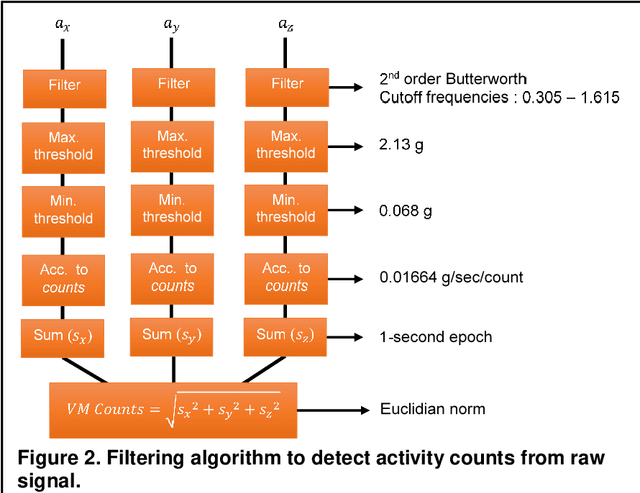

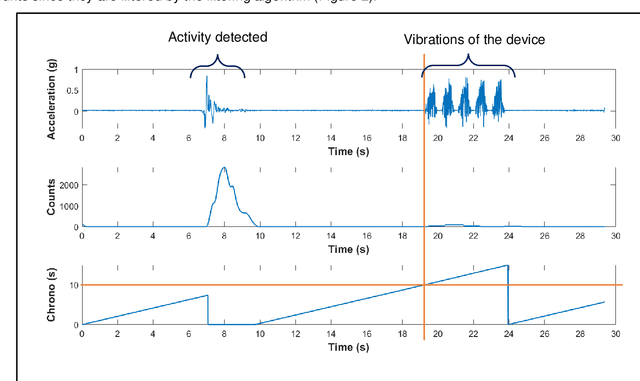

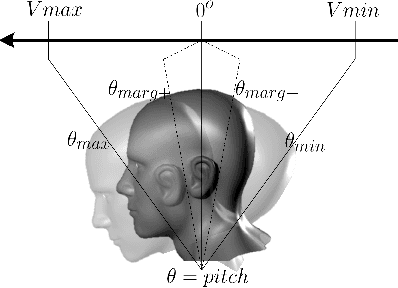

Preliminary Development of a Wearable Device to Help Children with Unilateral Cerebral Palsy Increase Their Consciousness of Their Upper Extremity

Oct 12, 2020

Abstract: Children with unilateral cerebral palsy have movement impairments that are predominant in one of their upper extremities (UE) and are prone to a phenomenon named "developmental disregard", which is characterized by the neglect of their most affected UE because of their altered perception or consciousness of this limb. This can cause them not to use their most affected hand to its full capacity in their day-to-day life. This paper presents a prototype of a wearable technology with the appearance of a smartwatch, which delivers haptic feedback to remind children with unilateral cerebral palsy to use their most affected limb, and which increases sensory afferents to possibly influence brain plasticity. The prototype consists of an accelerometer, a vibration motor and a microcontroller with an algorithm that detects movement of the limb. After a given period of inactivity, the watch starts vibrating to alert the user.
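The inactivity logic described in this abstract (detect limb movement from an accelerometer, then vibrate after a period without movement) can be sketched as below. The class name, the movement threshold, and the timeout are hypothetical. Detecting movement from changes in acceleration magnitude is a simplifying assumption and not necessarily what the prototype does.

```python
import math

class InactivityReminder:
    """Signals that the vibration motor should run after a period without movement."""

    def __init__(self, motion_threshold_g=0.05, timeout_s=60.0):
        self.motion_threshold_g = motion_threshold_g  # assumed sensitivity, in g
        self.timeout_s = timeout_s                    # assumed inactivity period
        self.prev_magnitude = None
        self.last_active_t = None

    def update(self, t, ax, ay, az):
        """Process one accelerometer sample taken at time t (seconds).

        Returns True when the limb has been inactive for at least timeout_s.
        """
        magnitude = math.sqrt(ax * ax + ay * ay + az * az)
        moved = (
            self.prev_magnitude is None
            or abs(magnitude - self.prev_magnitude) > self.motion_threshold_g
        )
        if moved:
            # The first sample also counts as activity, which starts the timer.
            self.last_active_t = t
        self.prev_magnitude = magnitude
        return (t - self.last_active_t) >= self.timeout_s

# Simulated samples: the limb is still for 10 s, then moves.
reminder = InactivityReminder(motion_threshold_g=0.05, timeout_s=10.0)
states = [
    reminder.update(0.0, 0.0, 0.0, 1.0),
    reminder.update(5.0, 0.0, 0.0, 1.0),
    reminder.update(10.0, 0.0, 0.0, 1.0),
    reminder.update(11.0, 0.5, 0.0, 1.0),
]
```

Here the reminder turns on at the 10 s mark and turns off again as soon as the next sample shows movement.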

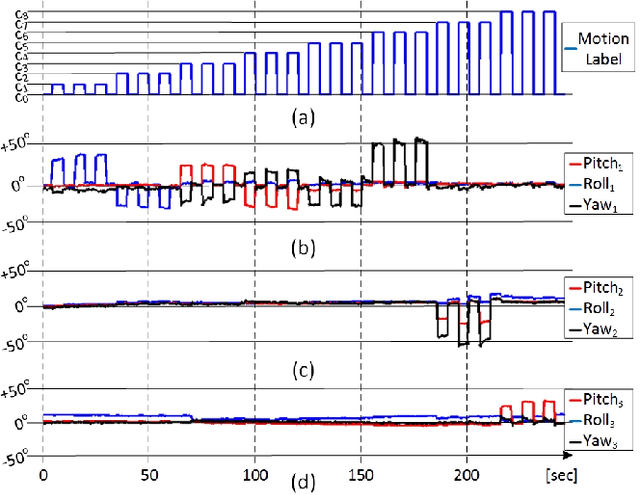

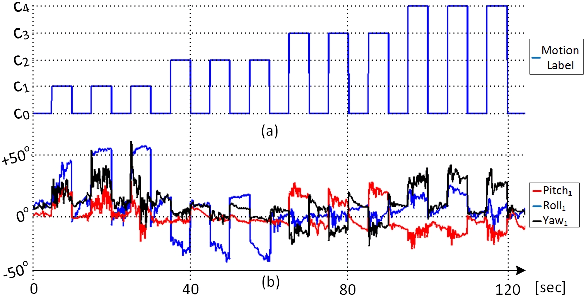

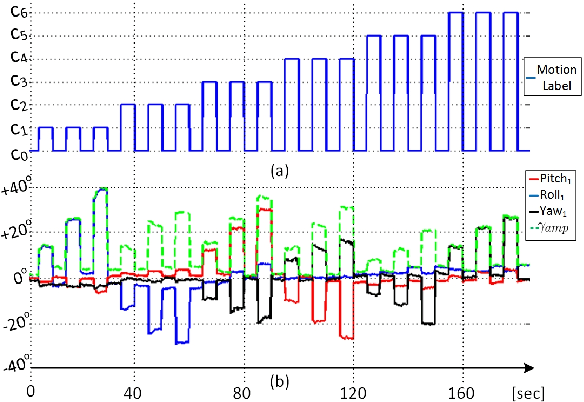

A Flexible and Modular Body-Machine Interface for Individuals Living with Severe Disabilities

Jul 29, 2020

Abstract: This paper presents a control interface to translate the residual body motions of individuals living with severe disabilities into control commands for body-machine interaction. A custom, wireless, wearable multi-sensor network is used to collect motion data from multiple points on the body in real-time. The proposed solution successfully leverages electromyography gesture recognition techniques for the recognition of inertial measurement unit (IMU)-based commands, without the need for cumbersome and noisy surface electrodes. Motion pattern recognition is performed using a computationally inexpensive classifier (Linear Discriminant Analysis) so that the solution can be deployed onto lightweight embedded platforms. Five participants (three able-bodied and two living with upper-body disabilities) presenting different motion limitations (e.g. spasms, reduced motion range) were recruited. They were asked to perform up to 9 different motion classes, including head, shoulder, finger, and foot motions, with respect to their residual functional capacities. The measured prediction performances show an average accuracy of 99.96% for able-bodied individuals and 91.66% for participants with upper-body disabilities. The recorded dataset has also been made available online to the research community. Proof of concept for the real-time use of the system is given through an assembly task replicating activities of daily living using the JACO arm from Kinova Robotics.

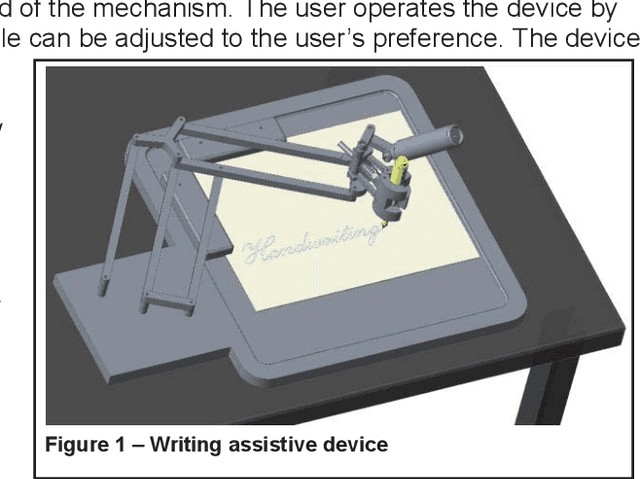

Preliminary design of a device to assist handwriting in children with movement disorders

Aug 06, 2019

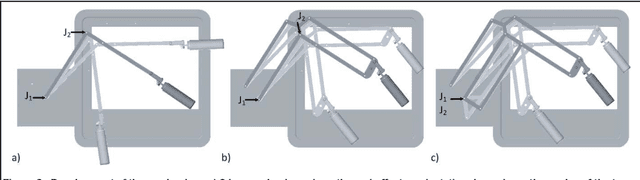

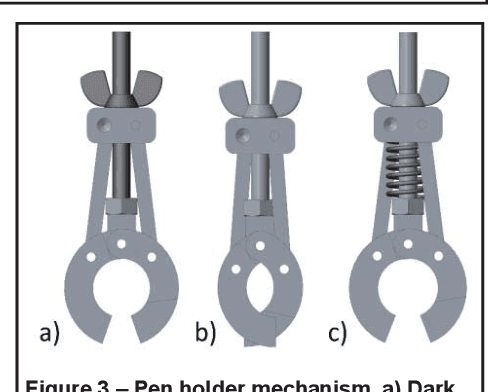

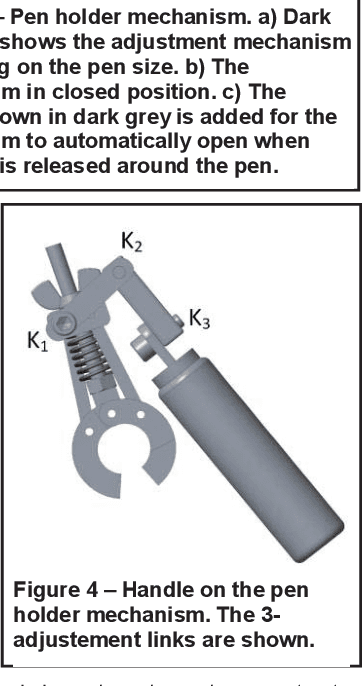

Abstract: This paper presents the development of a new passive assistive handwriting device, which aims to stabilize the motion of people living with movement disorders. Many people living with conditions such as cerebral palsy, stroke, muscular dystrophy or dystonia experience upper limb impairments (muscle spasticity, unselective motor control, muscle weakness or tremors) and are unable to write or draw on their own. The proposed device is designed to be fixed on a table. A pen is attached to the device using a pen holder, which maintains the pen in a fixed orientation. The user interacts with the device using a handle while mechanical dampers and inertia contribute to the stabilization of the user's movements. The overall mechanical design of the device is first presented, followed by the design of the pen holder mechanism.

Assistive robotic device: evaluation of intelligent algorithms

Dec 18, 2018

Abstract: Assistive robotic devices can be used to help people with upper body disabilities gain more autonomy in their daily life. Although basic motions such as positioning and orienting an assistive robot gripper in space allow performance of many tasks, it might be time consuming and tedious to perform more complex tasks. To overcome these difficulties, improvements can be implemented at different levels, such as mechanical design, control interfaces and intelligent control algorithms. In order to guide the design of solutions, it is important to assess the impact and potential of different innovations. This paper thus presents the evaluation of three intelligent algorithms aiming to improve the performance of the JACO robotic arm (Kinova Robotics). The evaluated algorithms are 'preset position', 'fluidity filter' and 'drinking mode'. The algorithm evaluation was performed with 14 motorized wheelchair users and showed a statistically significant improvement of the robot's performance.

* 4 pages

Deep Learning for Electromyographic Hand Gesture Signal Classification Using Transfer Learning

Jun 12, 2018

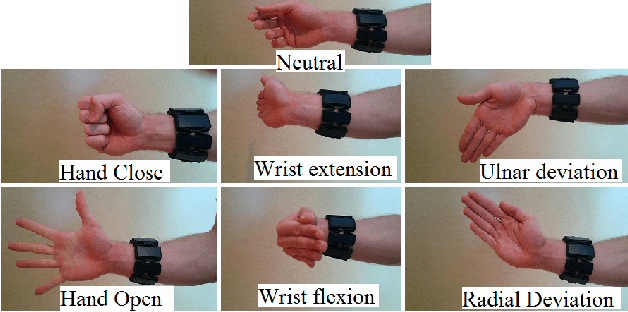

Abstract: In recent years, the use of deep learning algorithms has become increasingly prominent for their unparalleled ability to automatically learn discriminant features from large amounts of data. However, within the field of electromyography-based gesture recognition, deep learning algorithms are seldom employed as it requires an unreasonable amount of time for a single person, in a single session, to generate tens of thousands of examples. This work's hypothesis is that general, informative features can be learned from the large amount of data generated by aggregating the signals of multiple users, thus reducing the recording burden imposed on a single person while enhancing gesture recognition. As such, this paper proposes applying transfer learning on the aggregated data of multiple users, while leveraging the capacity of deep learning algorithms to learn discriminant features from large datasets, without the need for in-depth feature engineering. To this end, two datasets are recorded with the Myo Armband (Thalmic Labs), a low-cost, low-sampling rate (200Hz), 8-channel, consumer-grade, dry electrode sEMG armband. These two datasets are comprised of 19 and 17 able-bodied participants respectively. A third dataset, also recorded with the Myo Armband, was taken from the NinaPro database and is comprised of 10 able-bodied participants. This transfer learning scheme is shown to outperform the current state-of-the-art in gesture recognition. It achieves an average accuracy of 98.31% for 7 hand/wrist gestures over 17 able-bodied participants and 65.57% for 18 hand/wrist gestures over 10 able-bodied participants. Finally, a use-case study employing eight able-bodied participants suggests that real-time feedback reduces the degradation in accuracy normally experienced over time.
