Bastian Steudel

Justifying Information-Geometric Causal Inference

Feb 11, 2014

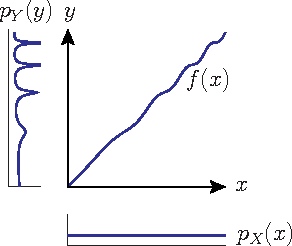

Abstract: Information Geometric Causal Inference (IGCI) is a new approach to distinguish between cause and effect for two variables. It is based on an independence assumption between input distribution and causal mechanism that can be phrased in terms of orthogonality in information space. We describe two intuitive reinterpretations of this approach that make IGCI more accessible to a broader audience. Moreover, we show that the described independence is related to the hypothesis that unsupervised learning and semi-supervised learning only work for predicting the cause from the effect and not vice versa.

Inferring deterministic causal relations

Mar 15, 2012

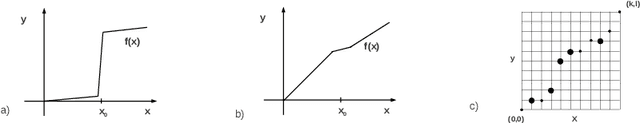

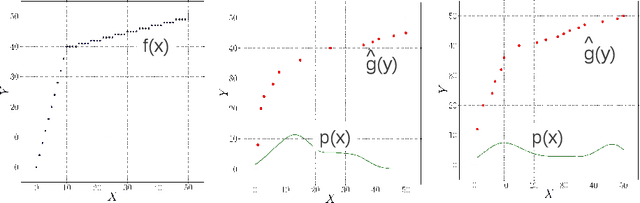

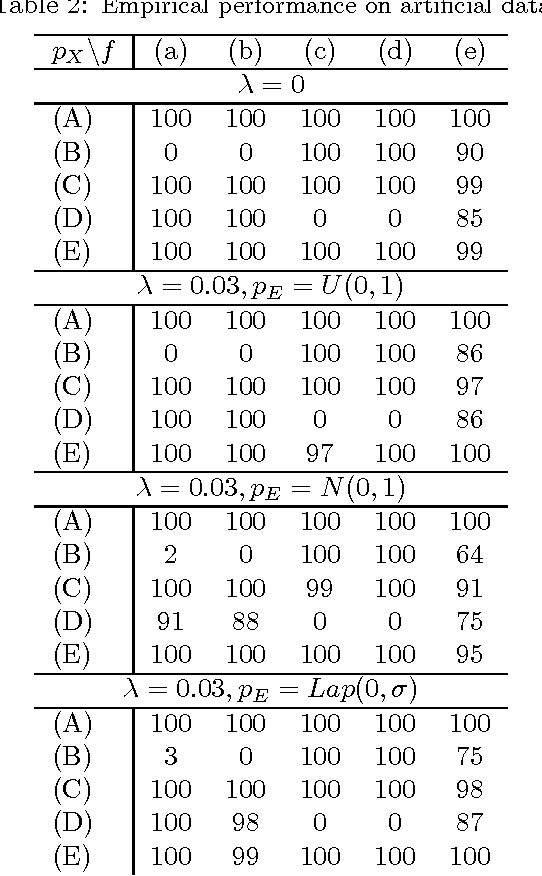

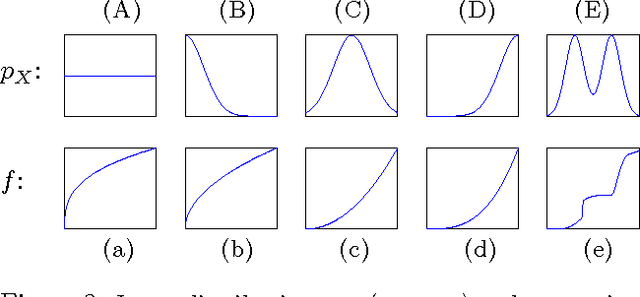

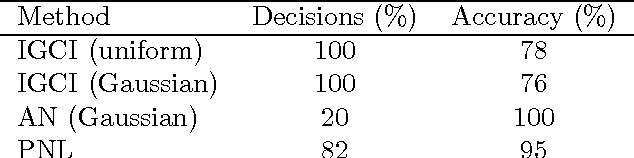

Abstract: We consider two variables that are related to each other by an invertible function. While it has previously been shown that the dependence structure of the noise can provide hints to determine which of the two variables is the cause, we presently show that even in the deterministic (noise-free) case, there are asymmetries that can be exploited for causal inference. Our method is based on the idea that if the function and the probability density of the cause are chosen independently, then the distribution of the effect will, in a certain sense, depend on the function. We provide a theoretical analysis of this method, showing that it also works in the low noise regime, and link it to information geometry. We report strong empirical results on various real-world data sets from different domains.
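The asymmetry described in this abstract can be illustrated with a minimal sketch. The abstract itself does not specify an estimator, so the code below assumes a slope-based score with a uniform reference measure on [0, 1], which is one estimator discussed in the IGCI literature. The function names `slope_score` and `infer_direction` are hypothetical, chosen only for illustration.

```python
import math


def _rescale(values):
    """Map values linearly onto [0, 1] (assumed uniform reference measure)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot rescale a constant sample")
    return [(v - lo) / (hi - lo) for v in values]


def slope_score(x, y):
    """Average log-slope of y against x over consecutive points sorted by x.

    Assumption: this approximates the expected value of log|f'(x)| when
    y = f(x). Pairs with a zero difference are skipped to avoid log(0)
    and division by zero.
    """
    pairs = sorted(zip(_rescale(x), _rescale(y)))
    total, count = 0.0, 0
    for (x0, y0), (x1, y1) in zip(pairs, pairs[1:]):
        dx, dy = x1 - x0, y1 - y0
        if dx > 0 and dy != 0:
            total += math.log(abs(dy) / dx)
            count += 1
    if count == 0:
        raise ValueError("no usable consecutive pairs")
    return total / count


def infer_direction(x, y):
    """Prefer the direction with the smaller slope score (hypothetical rule)."""
    return "X->Y" if slope_score(x, y) < slope_score(y, x) else "Y->X"
```

For instance, with `x` spread evenly over (0, 1) and `y = x**3`, the score in the direction from `x` to `y` is negative and the sketch prefers `"X->Y"`. It ignores noise and ties, so it should be read as an illustration of the idea, not as the method evaluated in the paper.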
