Bach Do

Optimizing Posterior Samples for Bayesian Optimization via Rootfinding

Oct 29, 2024

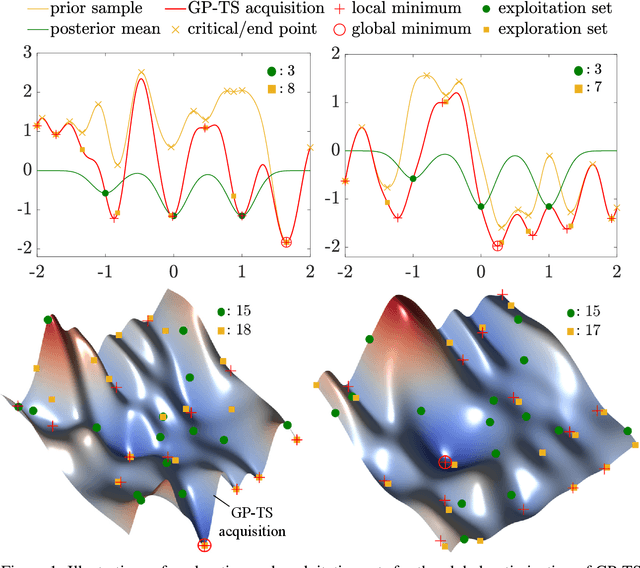

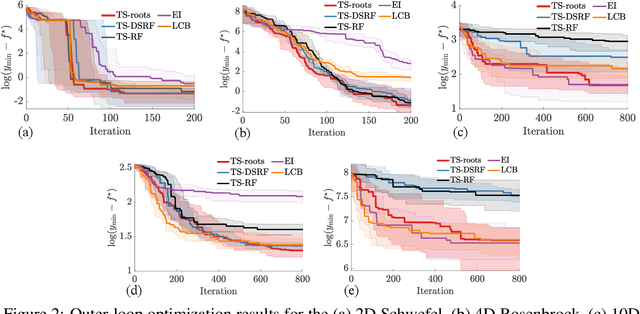

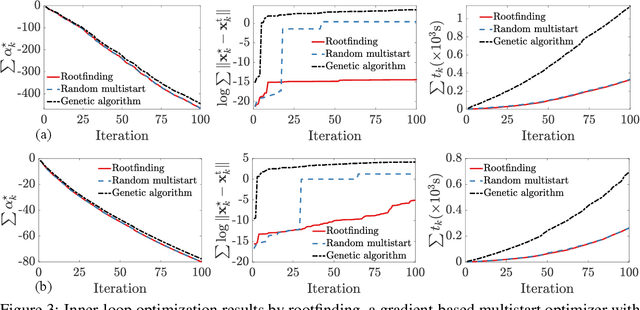

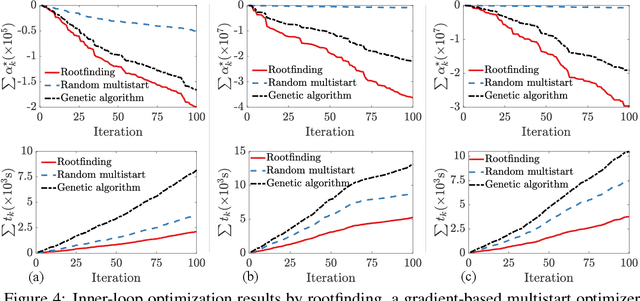

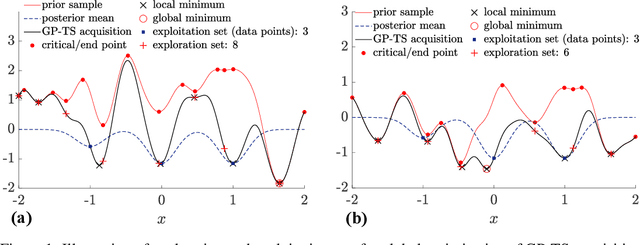

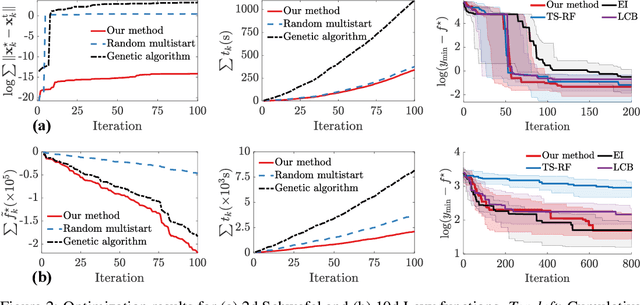

Abstract: Bayesian optimization devolves the global optimization of a costly objective function to the global optimization of a sequence of acquisition functions. This inner-loop optimization can be catastrophically difficult if it involves posterior samples, especially in higher dimensions. We introduce an efficient global optimization strategy for posterior samples based on global rootfinding. It provides gradient-based optimizers with judiciously selected starting points, designed to combine exploitation and exploration. The algorithm scales practically linearly to high dimensions. For posterior sample-based acquisition functions such as Gaussian process Thompson sampling (GP-TS) and variants of entropy search, we demonstrate remarkable improvement in both inner- and outer-loop optimization, surprisingly outperforming alternatives like EI and GP-UCB in most cases. We also propose a sample-average formulation of GP-TS, which has a parameter to explicitly control exploitation and can be computed at the cost of one posterior sample. Our implementation is available at https://github.com/UQUH/TSRoots.

Gaussian Process Thompson Sampling via Rootfinding

Oct 10, 2024

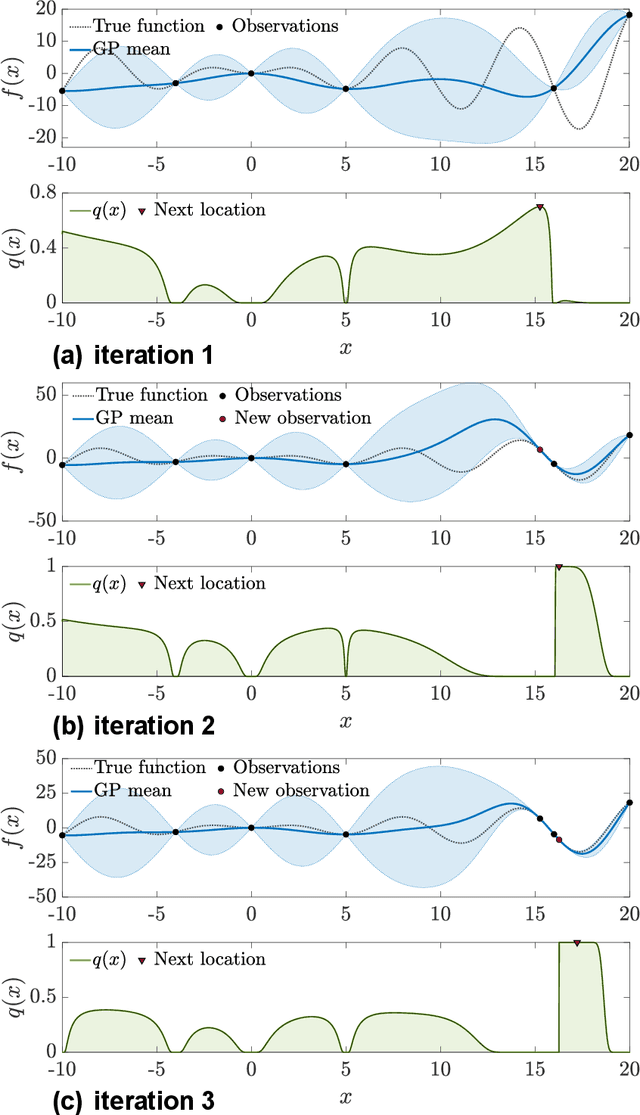

Abstract: Thompson sampling (TS) is a simple, effective stochastic policy in Bayesian decision making. It samples the posterior belief about the reward profile and optimizes the sample to obtain a candidate decision. In continuous optimization, the posterior of the objective function is often a Gaussian process (GP), whose sample paths have numerous local optima, making their global optimization challenging. In this work, we introduce an efficient global optimization strategy for GP-TS that carefully selects starting points for gradient-based multi-start optimizers. It identifies all local optima of the prior sample via univariate global rootfinding, and optimizes the posterior sample using a differentiable, decoupled representation. We demonstrate remarkable improvement in the global optimization of GP posterior samples, especially in high dimensions. This leads to dramatic improvements in the overall performance of Bayesian optimization using GP-TS acquisition functions, surprisingly outperforming alternatives like GP-UCB and EI.
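To illustrate the idea of locating local optima by univariate rootfinding, here is a minimal Python sketch. It is not the authors' implementation: the toy function `f`, its derivative `df`, and the helpers `bisect` and `local_minima_1d` are all hypothetical stand-ins chosen for illustration. The sketch brackets sign changes of the derivative on a grid and refines each bracket by bisection; the resulting points could then serve as starting points for a gradient-based optimizer.

```python
import numpy as np

def bisect(g, a, b, tol=1e-10, max_iter=200):
    """Find a root of g in [a, b], assuming g(a) and g(b) have opposite signs."""
    ga = g(a)
    for _ in range(max_iter):
        m = 0.5 * (a + b)
        gm = g(m)
        if gm == 0.0 or (b - a) < tol:
            return m
        if np.sign(gm) == np.sign(ga):
            a, ga = m, gm   # root lies in the right half
        else:
            b = m           # root lies in the left half
    return 0.5 * (a + b)

def local_minima_1d(df, lo, hi, n_grid=2000):
    """Locate local minima of a smooth 1D function from sign changes of df."""
    xs = np.linspace(lo, hi, n_grid)
    d = df(xs)
    minima = []
    for i in range(n_grid - 1):
        # A derivative that goes from negative to positive brackets a local minimum.
        if d[i] < 0.0 and d[i + 1] > 0.0:
            minima.append(bisect(df, xs[i], xs[i + 1]))
    return np.array(minima)

# Toy stand-in for a sample path (an assumption, not a GP sample).
f = lambda x: np.sin(3.0 * x) + 0.5 * x
df = lambda x: 3.0 * np.cos(3.0 * x) + 0.5

starts = local_minima_1d(df, 0.0, 5.0)
print(starts)  # candidate starting points for a local optimizer
```

A grid-based bracket search can miss closely spaced roots if the grid is too coarse, so `n_grid` is a tuning choice in this sketch.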

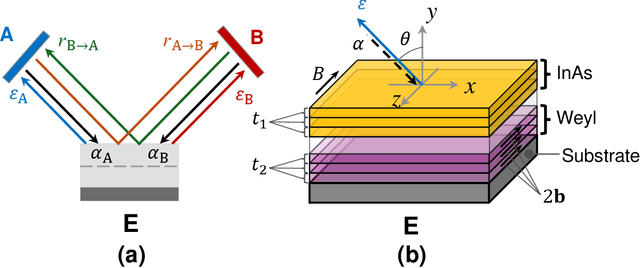

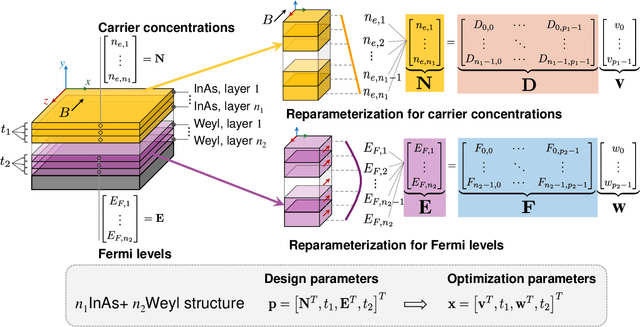

Automated design of nonreciprocal thermal emitters via Bayesian optimization

Sep 13, 2024

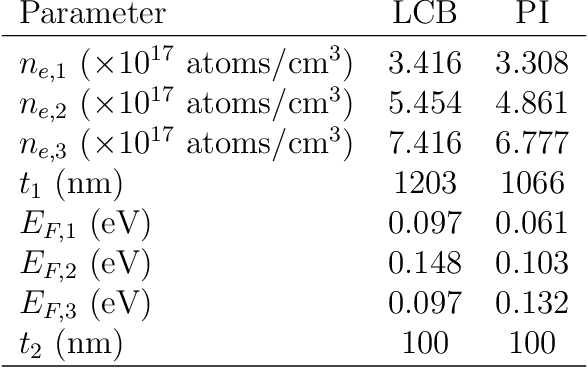

Abstract: Nonreciprocal thermal emitters that break Kirchhoff's law of thermal radiation hold promise for thermal and energy applications. The design of the bandwidth and angular range of the nonreciprocal effect, which directly affects the performance of nonreciprocal emitters, typically relies on physical intuition. In this study, we present a general numerical approach to maximize the nonreciprocal effect. We choose doped magneto-optic materials and magnetic Weyl semimetal materials as model materials and focus on pattern-free multilayer structures. The optimization starts from a random, less effective structure and incrementally improves the broadband nonreciprocity through the combination of Bayesian optimization and reparameterization. Optimization results show that the proposed approach can discover structures that achieve broadband nonreciprocal emission at wavelengths from 5 to 40 micrometers using only a few layers, significantly outperforming current state-of-the-art designs based on intuition in terms of both performance and simplicity.

Epsilon-Greedy Thompson Sampling to Bayesian Optimization

Mar 01, 2024

Abstract: Thompson sampling (TS) serves as a solution for addressing the exploitation-exploration dilemma in Bayesian optimization (BO). While it prioritizes exploration by randomly generating and maximizing sample paths of Gaussian process (GP) posteriors, TS weakly manages its exploitation by gathering information about the true objective function after each exploration is performed. In this study, we incorporate the epsilon-greedy ($\varepsilon$-greedy) policy, a well-established selection strategy in reinforcement learning, into TS to improve its exploitation. We first delineate two extremes of TS applied for BO, namely the generic TS and a sample-average TS. The former and latter promote exploration and exploitation, respectively. We then use $\varepsilon$-greedy policy to randomly switch between the two extremes. A small value of $\varepsilon \in (0,1)$ prioritizes exploitation, and vice versa. We empirically show that $\varepsilon$-greedy TS with an appropriate $\varepsilon$ is better than one of its two extremes and competes with the other.
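The switching rule described in this abstract can be sketched as follows. This is a rough illustration under stated assumptions, not the paper's implementation: it assumes a finite candidate set with a known Gaussian posterior (`mu`, `cov`), a maximization problem, and that generic TS is chosen with probability $\varepsilon$ (so that a small $\varepsilon$ favors exploitation, as the abstract states). The function name `epsilon_greedy_ts` and the parameter `n_avg` are hypothetical.

```python
import numpy as np

def epsilon_greedy_ts(mu, cov, eps, rng, n_avg=200):
    """Pick the index of the next candidate (maximization).

    With probability eps: generic TS, i.e. maximize one posterior sample
    (exploration). Otherwise: sample-average TS, i.e. maximize the average
    of n_avg posterior samples (exploitation).
    """
    if rng.random() < eps:
        sample = rng.multivariate_normal(mu, cov)
        return int(np.argmax(sample)), "generic"
    samples = rng.multivariate_normal(mu, cov, size=n_avg)
    return int(np.argmax(samples.mean(axis=0))), "sample-average"

# Toy posterior over three candidates (values are made up for illustration).
rng = np.random.default_rng(0)
mu = np.array([0.0, 1.0, 5.0])
cov = 0.01 * np.eye(3)

idx, mode = epsilon_greedy_ts(mu, cov, eps=0.1, rng=rng)
print(idx, mode)
```

As `n_avg` grows, the averaged sample approaches the posterior mean, which is why the sample-average branch behaves greedily in this sketch.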

Multi-fidelity Bayesian Optimization in Engineering Design

Nov 21, 2023

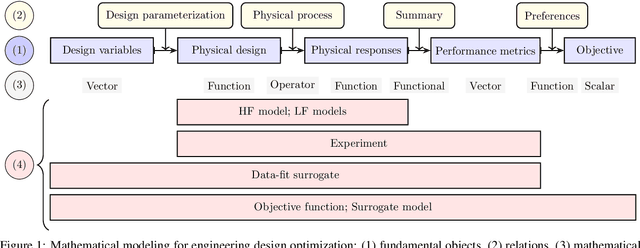

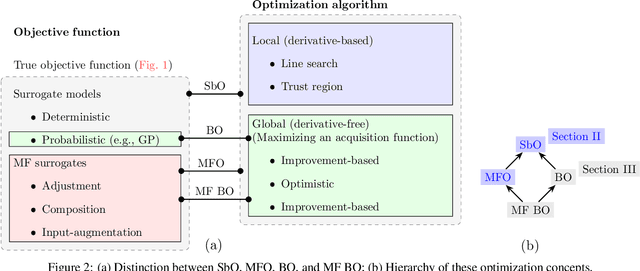

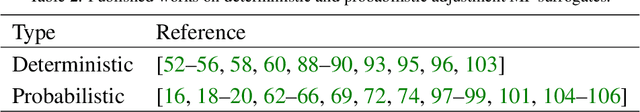

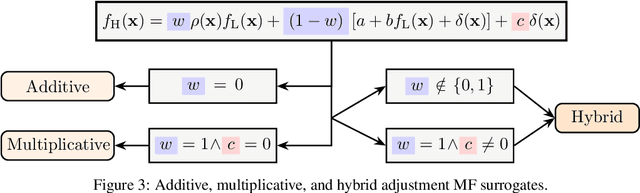

Abstract: Residing at the intersection of multi-fidelity optimization (MFO) and Bayesian optimization (BO), MF BO has found a niche in solving expensive engineering design optimization problems, thanks to its advantages in incorporating physical and mathematical understandings of the problems, saving resources, addressing the exploitation-exploration trade-off, considering uncertainty, and supporting parallel computing. The increasing number of works dedicated to MF BO suggests the need for a comprehensive review of this advanced optimization technique. In this paper, we survey recent developments of two essential ingredients of MF BO: Gaussian process (GP) based MF surrogates and acquisition functions. We first categorize the existing MF modeling methods and MFO strategies to locate MF BO in a large family of surrogate-based optimization and MFO algorithms. We then exploit the common properties shared between the methods from each ingredient of MF BO to describe important GP-based MF surrogate models and review various acquisition functions. By doing so, we expect to provide a structured understanding of MF BO. Finally, we attempt to reveal important aspects that require further research for applications of MF BO in solving intricate yet important design optimization problems, including constrained optimization, high-dimensional optimization, optimization under uncertainty, and multi-objective optimization.

Ensemble Learning of Myocardial Displacements for Myocardial Infarction Detection in Echocardiography

Mar 12, 2023

Abstract: Early detection and localization of myocardial infarction (MI) can reduce the severity of cardiac damage through timely treatment interventions. In recent years, deep learning techniques have shown promise for detecting MI in echocardiographic images. However, there has been no examination of how segmentation accuracy affects MI classification performance and the potential benefits of using ensemble learning approaches. Our study investigates this relationship and introduces a robust method that combines features from multiple segmentation models to improve MI classification performance by leveraging ensemble learning. Our method combines myocardial segment displacement features from multiple segmentation models, which are then input into a typical classifier to estimate the risk of MI. We validated the proposed approach on two datasets: the public HMC-QU dataset (109 echocardiograms) for training and validation, and an E-Hospital dataset (60 echocardiograms) from a local clinical site in Vietnam for independent testing. Model performance was evaluated based on accuracy, sensitivity, and specificity. The proposed approach demonstrated excellent performance in detecting MI. The results showed that the proposed approach outperformed the state-of-the-art feature-based method. Further research is necessary to determine its potential use in clinical settings as a tool to assist cardiologists and technicians with objective assessments and reduce dependence on operator subjectivity. Our research code is available on GitHub at https://github.com/vinuni-vishc/mi-detection-echo.
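For reference, the three evaluation metrics named in this abstract follow directly from confusion-matrix counts. The short Python sketch below shows the standard definitions; the function name `classification_metrics` and the label vectors are made up for illustration and are not taken from the paper or its repository.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, sensitivity, and specificity for binary labels (1 = MI, 0 = non-MI)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    nan = float("nan")
    return {
        "accuracy": (tp + tn) / len(pairs) if pairs else nan,
        # Sensitivity: fraction of true MI cases that were detected.
        "sensitivity": tp / (tp + fn) if (tp + fn) else nan,
        # Specificity: fraction of non-MI cases correctly ruled out.
        "specificity": tn / (tn + fp) if (tn + fp) else nan,
    }

# Made-up labels, for illustration only.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 0, 1, 0, 1]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```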
