Atsushi Uchida

Blending Optimal Control and Biologically Plausible Learning for Noise-Robust Physical Neural Networks

Feb 26, 2025

Abstract:The rapidly increasing computational demands for artificial intelligence (AI) have spurred the exploration of computing principles beyond conventional digital computers. Physical neural networks (PNNs) offer efficient neuromorphic information processing by harnessing the innate computational power of physical processes; however, training their weight parameters is computationally expensive. We propose a training approach for substantially reducing this training cost. Our training approach merges an optimal control method for continuous-time dynamical systems with a biologically plausible training method--direct feedback alignment. In addition to the reduction of training time, this approach achieves robust processing even under measurement errors and noise without requiring detailed system information. The effectiveness was numerically and experimentally verified in an optoelectronic delay system. Our approach significantly extends the range of physical systems practically usable as PNNs.

* 28 pages, 10 figures
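
As a rough illustration of the direct feedback alignment ingredient mentioned in the abstract above, the sketch below computes the hidden-layer learning signal for a generic discrete-time network with a tanh hidden layer. It is not the continuous-time optimal-control formulation the abstract describes; the function names `dfa_hidden_delta` and `weight_update`, the tanh nonlinearity, and the example numbers are all assumptions made for illustration. The key point is that the output error is projected to the hidden layer through a fixed random matrix `feedback` rather than through the transpose of the forward weights.

```python
import math


def dfa_hidden_delta(error, feedback, pre_activation):
    """Hidden-layer learning signal under direct feedback alignment (sketch).

    error          : output error vector e (length n_out)
    feedback       : fixed random matrix B (n_hidden rows, n_out columns)
    pre_activation : hidden pre-activations a (length n_hidden)
    Returns (B e) multiplied elementwise by tanh'(a).
    """
    delta = []
    for row, a in zip(feedback, pre_activation):
        projected = sum(b * e for b, e in zip(row, error))  # one entry of B e
        delta.append(projected * (1.0 - math.tanh(a) ** 2))  # tanh'(a)
    return delta


def weight_update(delta, inputs, lr):
    """Descent step for the hidden weights: dW[i][j] = -lr * delta[i] * inputs[j]."""
    return [[-lr * d * x for x in inputs] for d in delta]


# Illustrative numbers only (hypothetical, not from the paper).
B = [[0.5, 0.25], [1.0, 0.0], [-1.0, -1.0]]
delta = dfa_hidden_delta([1.0, -2.0], B, [0.0, 0.0, 0.0])
dW = weight_update(delta, [2.0, 3.0], lr=0.1)
```

Because `feedback` stays fixed during training, no knowledge of the forward weights is needed to compute the hidden update, which is consistent with the abstract's remark that detailed system information is not required.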

Attention-Enhanced Reservoir Computing

Dec 27, 2023

Abstract:Photonic reservoir computing has been recently utilized in time series forecasting as the need for hardware implementations to accelerate these predictions has increased. Forecasting chaotic time series remains a significant challenge, an area where the conventional reservoir computing framework encounters limitations of prediction accuracy. We introduce an attention mechanism to the reservoir computing model in the output stage. This attention layer is designed to prioritize distinct features and temporal sequences, thereby substantially enhancing the forecasting accuracy. Our results show that a photonic reservoir computer enhanced with the attention mechanism exhibits improved forecasting capabilities for smaller reservoirs. These advancements highlight the transformative possibilities of reservoir computing for practical applications where accurate forecasting of chaotic time series is crucial.

Ultrafast single-channel machine vision based on neuro-inspired photonic computing

Feb 15, 2023

Abstract:High-speed machine vision is becoming increasingly important in both scientific and technological applications. Neuro-inspired photonic computing is a promising approach to speed up machine vision processing with ultralow latency. However, the processing rate is fundamentally limited by the low frame rate of image sensors, typically operating at tens of hertz. Here, we propose an image-sensor-free machine vision framework, which optically processes real-world visual information with only a single input channel, based on a random temporal encoding technique. This approach allows for compressive acquisitions of visual information with a single channel at gigahertz rates, outperforming conventional approaches, and enables its direct photonic processing using a photonic reservoir computer in a time domain. We experimentally demonstrate that the proposed approach is capable of high-speed image recognition and anomaly detection, and furthermore, it can be used for high-speed imaging. The proposed approach is multipurpose and can be extended for a wide range of applications, including tracking, controlling, and capturing sub-nanosecond phenomena.

Parallel photonic accelerator for decision making using optical spatiotemporal chaos

Oct 12, 2022

Abstract:Photonic accelerators have attracted increasing attention in artificial intelligence applications. The multi-armed bandit problem is a fundamental problem of decision making using reinforcement learning. However, the scalability of photonic decision making has not yet been demonstrated in experiments, owing to technical difficulties in physical realization. We propose a parallel photonic decision-making system for solving large-scale multi-armed bandit problems using optical spatiotemporal chaos. We solve a 512-armed bandit problem online, which is two orders of magnitude larger than in previous experiments. The scaling property for correct decision making is examined as a function of the number of slot machines, evaluated as an exponent of 0.86. This exponent is smaller than that in previous work, indicating the superiority of the proposed parallel principle. This experimental demonstration facilitates photonic decision making to solve large-scale multi-armed bandit problems for future photonic accelerators.
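
A scaling exponent such as the 0.86 quoted above is typically obtained as the slope of a straight-line fit on log-log axes. The sketch below shows that arithmetic, assuming a power law of the form cost ∝ N^γ, where N is the number of slot machines and "cost" is a hypothetical label for whatever quantity the experiment measured. The data are synthetic, generated with γ = 0.86 purely to demonstrate the fit; they are not measurements from the paper.

```python
import math


def fit_power_law_exponent(ns, costs):
    """Least-squares slope of log(cost) versus log(n)."""
    xs = [math.log(n) for n in ns]
    ys = [math.log(c) for c in costs]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


# Synthetic, noise-free data (assumption): cost = 3 * N**0.86.
arm_counts = [2, 8, 32, 128, 512]
synthetic_costs = [3.0 * n ** 0.86 for n in arm_counts]
exponent = fit_power_law_exponent(arm_counts, synthetic_costs)
```

An exponent below 1 means the measured quantity grows more slowly than the number of slot machines, which is why a smaller exponent indicates better scalability.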

Parallel bandit architecture based on laser chaos for reinforcement learning

May 19, 2022

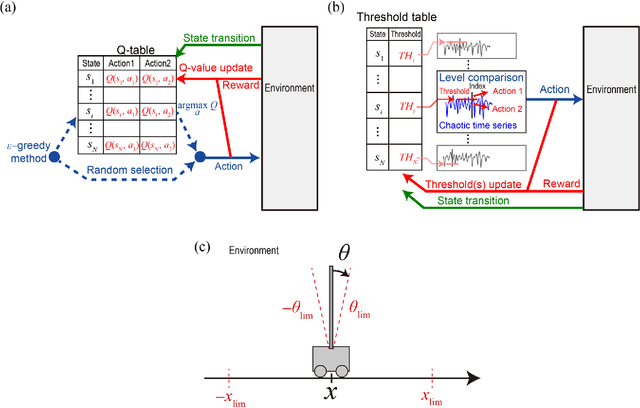

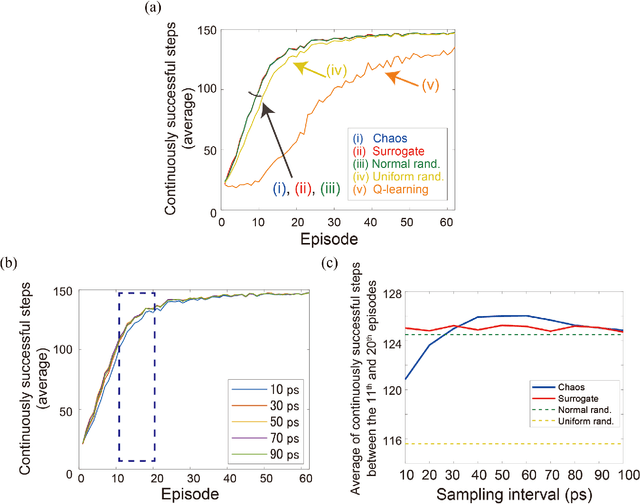

Abstract:Accelerating artificial intelligence by photonics is an active field of study aiming to exploit the unique properties of photons. Reinforcement learning is an important branch of machine learning, and photonic decision-making principles have been demonstrated with respect to multi-armed bandit problems. However, reinforcement learning could involve a massive number of states, unlike previously demonstrated bandit problems where the number of states is only one. Q-learning is a well-known approach in reinforcement learning that can deal with many states. The architecture of Q-learning, however, does not fit photonic implementations well because it separates the update rule from the action selection. In this study, we organize a new architecture for multi-state reinforcement learning as a parallel array of bandit problems in order to benefit from photonic decision-makers, which we call parallel bandit architecture for reinforcement learning, or PBRL for short. Taking a cart-pole balancing problem as an instance, we demonstrate that PBRL adapts to the environment in fewer time steps than Q-learning. Furthermore, PBRL yields faster adaptation when operated with a chaotic laser time series than with uniformly distributed pseudorandom numbers; the autocorrelation inherent in the laser chaos provides a positive effect. We also find that the variety of states that the system undergoes during the learning phase exhibits completely different properties between PBRL and Q-learning. The insights obtained through the present study are also beneficial for existing computing platforms, not just photonic realizations, in accelerating performances by the PBRL algorithms and correlated random sequences.
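
The idea of treating multi-state reinforcement learning as a parallel array of bandit problems can be sketched as one independent two-armed bandit per state. The code below is a toy illustration under several assumptions that do not come from the paper: the class `StateBandit`, the threshold-style selection rule, the toy environment (the rewarded arm is `state % 2`), and the use of Python's pseudorandom generator in place of a chaotic laser time series.

```python
import random


class StateBandit:
    """One two-armed bandit keeping running mean rewards for a single state."""

    def __init__(self):
        self.mean = [0.0, 0.0]
        self.plays = [0, 0]

    def select(self, noise):
        # Choose arm 1 when its estimated advantage plus the noise sample is positive.
        return 1 if (self.mean[1] - self.mean[0]) + noise > 0 else 0

    def update(self, arm, reward):
        # Incremental running-mean update for the arm that was played.
        self.plays[arm] += 1
        self.mean[arm] += (reward - self.mean[arm]) / self.plays[arm]


def train(num_states, steps, seed=0):
    rng = random.Random(seed)  # stand-in for a physical random sequence
    bandits = [StateBandit() for _ in range(num_states)]
    for _ in range(steps):
        state = rng.randrange(num_states)
        noise = rng.uniform(-0.5, 0.5)
        arm = bandits[state].select(noise)
        reward = 1.0 if arm == state % 2 else 0.0  # toy environment (assumption)
        bandits[state].update(arm, reward)  # selection and update in the same unit
    return bandits


bandits = train(num_states=4, steps=2000)
greedy_actions = [b.select(0.0) for b in bandits]
```

Each bandit both selects the action and updates its own estimates, so there is no separate value table consulted by a distinct action-selection step as in Q-learning. Replacing `rng.uniform` with samples from a correlated sequence would be the place to explore the effect of autocorrelation discussed in the abstract.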

Controlling chaotic itinerancy in laser dynamics for reinforcement learning

May 12, 2022

Abstract:Photonic artificial intelligence has attracted considerable interest in accelerating machine learning; however, the unique optical properties have not been fully utilized for achieving higher-order functionalities. Chaotic itinerancy, with its spontaneous transient dynamics among multiple quasi-attractors, can be employed to realize brain-like functionalities. In this paper, we propose a method for controlling the chaotic itinerancy in a multi-mode semiconductor laser to solve a machine learning task, known as the multi-armed bandit problem, which is fundamental to reinforcement learning. The proposed method utilizes ultrafast chaotic itinerant motion in mode competition dynamics controlled via optical injection. We found that the exploration mechanism is completely different from a conventional searching algorithm and is highly scalable, outperforming the conventional approaches for large-scale bandit problems. This study paves the way to utilize chaotic itinerancy for effectively solving complex machine learning tasks as photonic hardware accelerators.

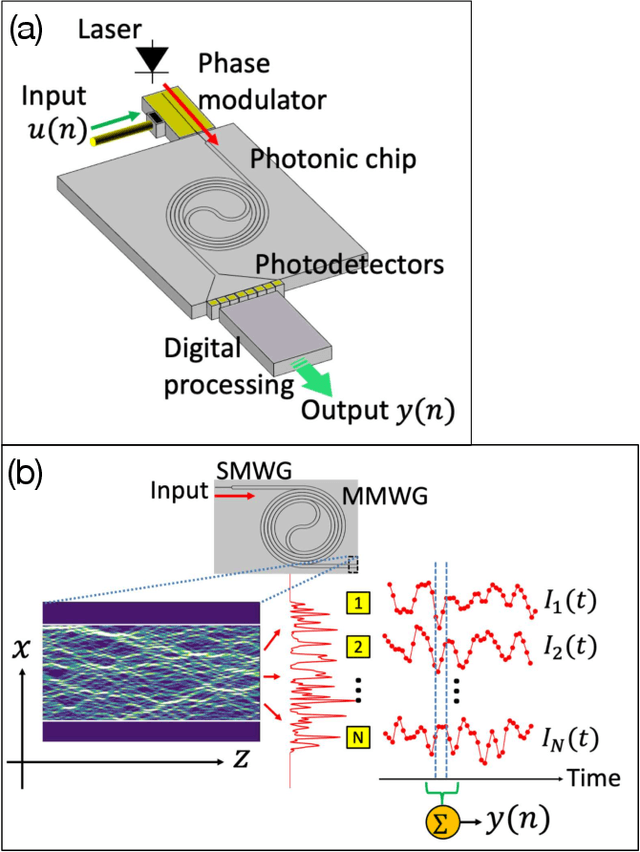

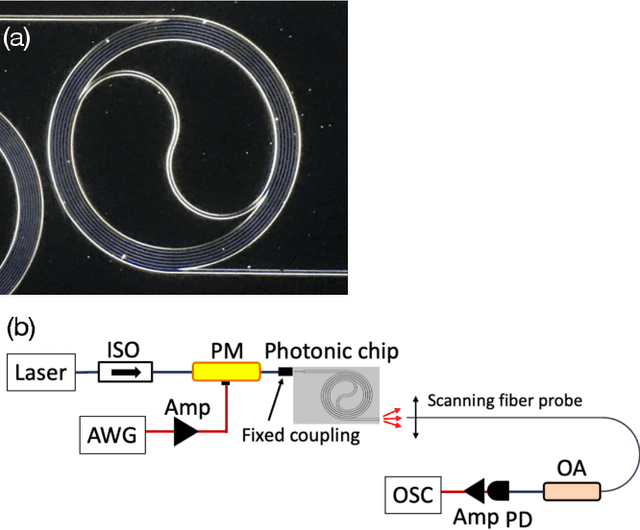

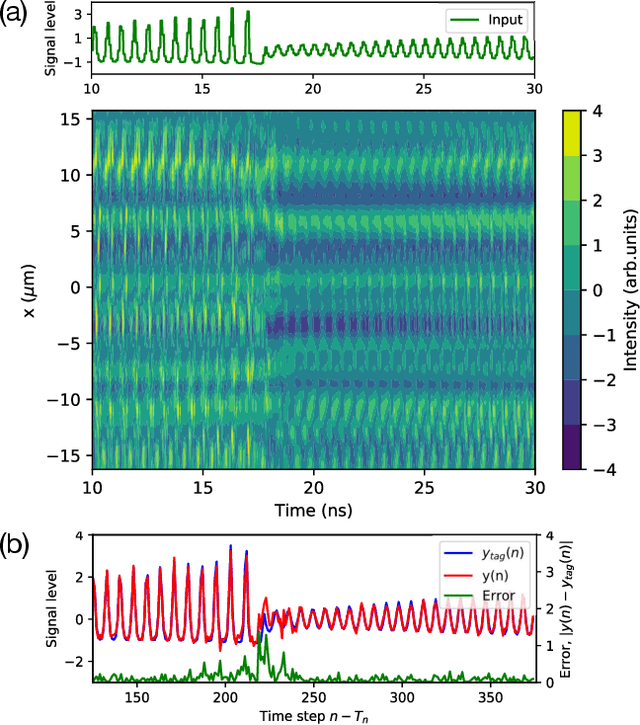

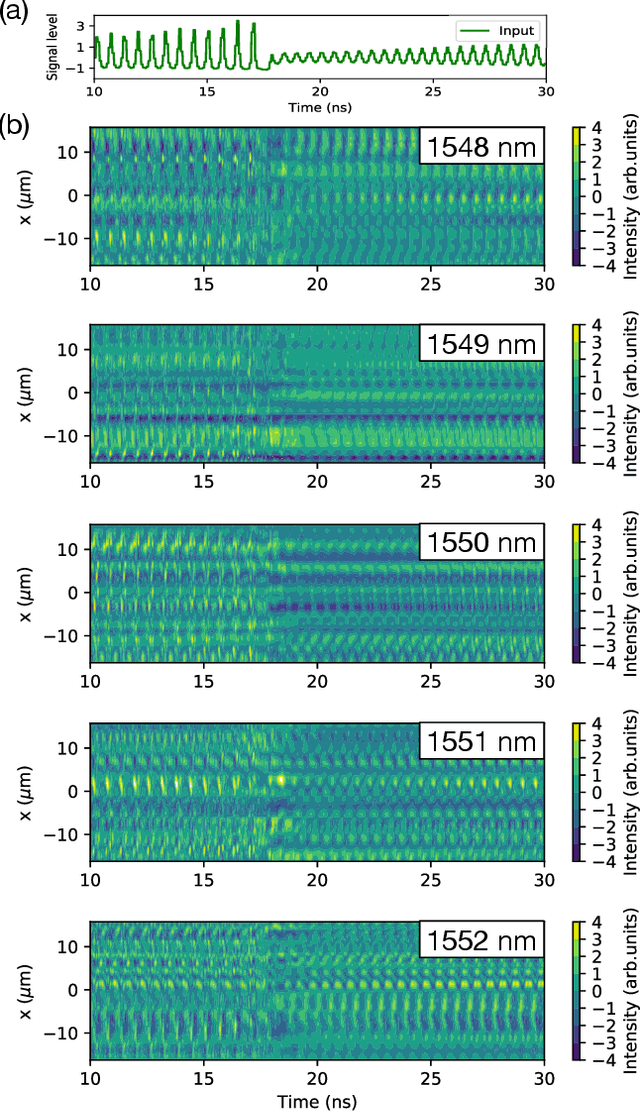

Photonic neural field on a silicon chip: large-scale, high-speed neuro-inspired computing and sensing

May 22, 2021

Abstract:Photonic neural networks have significant potential for high-speed neural processing with low latency and ultralow energy consumption. However, the on-chip implementation of a large-scale neural network is still challenging owing to its low scalability. Herein, we propose the concept of a photonic neural field and implement it experimentally on a silicon chip to realize highly scalable neuro-inspired computing. In contrast to existing photonic neural networks, the photonic neural field is a spatially continuous field that nonlinearly responds to optical inputs, and its high spatial degrees of freedom allow for large-scale and high-density neural processing on a millimeter-scale chip. In this study, we use the on-chip photonic neural field as a reservoir of information and demonstrate a high-speed chaotic time-series prediction with low errors using a training approach similar to reservoir computing. We discuss that the photonic neural field is potentially capable of executing more than one peta multiply-accumulate operations per second for a single input wavelength on a footprint as small as a few square millimeters. In addition to processing, the photonic neural field can be used for rapidly sensing the temporal variation of an optical phase, facilitated by its high sensitivity to optical inputs. The merging of optical processing with optical sensing paves the way for an end-to-end data-driven optical sensing scheme.

Adaptive model selection in photonic reservoir computing by reinforcement learning

Apr 27, 2020

Abstract:Photonic reservoir computing is an emergent technology toward beyond-Neumann computing. Although photonic reservoir computing provides superior performance in environments whose characteristics are coincident with the training datasets for the reservoir, the performance is significantly degraded if these characteristics deviate from the original knowledge used in the training phase. Here, we propose a scheme of adaptive model selection in photonic reservoir computing using reinforcement learning. In this scheme, a temporal waveform is generated by different dynamic source models that change over time. The system autonomously identifies the best source model for the task of time series prediction using photonic reservoir computing and reinforcement learning. We prepare two types of output weights for the source models, and the system adaptively selects the correct model using reinforcement learning, where the prediction errors are associated with rewards. We succeed in adaptive model selection when the source signal is temporally mixed, having originally been generated by two different dynamic system models, as well as when the signal is a mixture from the same model but with different parameter values. This study paves the way for autonomous behavior in photonic artificial intelligence and could lead to new applications in load forecasting and multi-objective control, where frequent environment changes are expected.

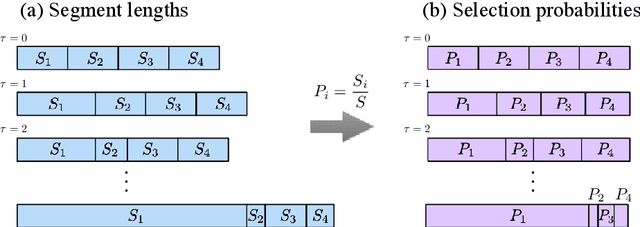

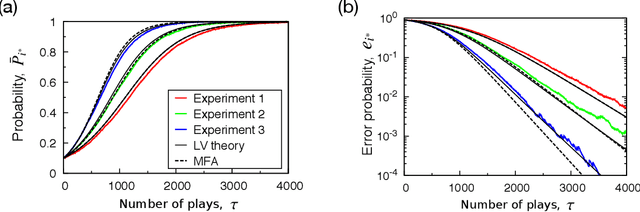

Lotka-Volterra competition mechanism embedded in a decision-making method

Jul 29, 2019

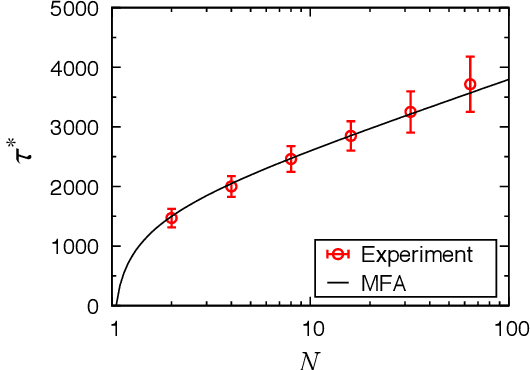

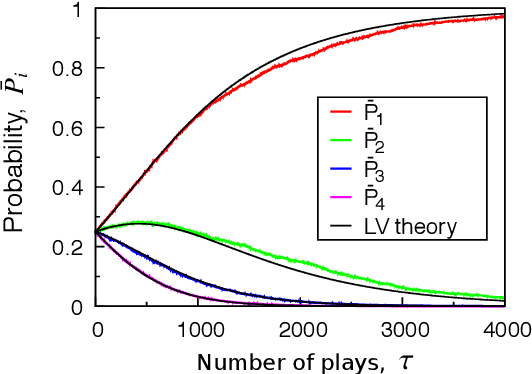

Abstract:Decision making is a fundamental capability of living organisms, and has recently been gaining increasing importance in many engineering applications. Here, we consider a simple decision-making principle to identify an optimal choice in multi-armed bandit (MAB) problems, which is fundamental in the context of reinforcement learning. We demonstrate that the identification mechanism of the method is well described by using a competitive ecosystem model, i.e., the competitive Lotka-Volterra (LV) model. Based on the "winner-take-all" mechanism in the competitive LV model, we demonstrate that non-best choices are eliminated and only the best choice survives; the failure of the non-best choices exponentially decreases while repeating the choice trials. Furthermore, we apply a mean-field approximation to the proposed decision-making method and show that the method has an excellent scalability of O(log N) with respect to the number of choices N. These results allow for a new perspective on optimal search capabilities in competitive systems.
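
The "winner-take-all" behavior of a competitive Lotka-Volterra system can be seen in a few lines of simulation. The sketch below integrates the textbook form dx_i/dt = x_i (r_i - Σ_j x_j) with the forward Euler method; the growth rates, step size, and the function name `simulate_competitive_lv` are illustrative assumptions, and the mapping from bandit rewards to growth rates used in the paper is not reproduced here.

```python
def simulate_competitive_lv(growth_rates, x0=0.1, dt=0.01, steps=20000):
    """Forward-Euler integration of dx_i/dt = x_i * (r_i - sum_j x_j)."""
    x = [x0] * len(growth_rates)
    for _ in range(steps):
        total = sum(x)  # shared competition term felt by every species
        x = [xi + dt * xi * (r - total) for xi, r in zip(x, growth_rates)]
    return x


# Hypothetical growth rates; index 0 plays the role of the best choice.
final = simulate_competitive_lv([1.0, 0.8, 0.6])
winner = max(range(len(final)), key=final.__getitem__)
```

In this model the ratio x_i / x_best obeys d/dt ln(x_i / x_best) = r_i - r_best, which is negative for every non-best choice, so the losers decay exponentially relative to the winner. This mirrors the exponential elimination of non-best choices described in the abstract.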

Generative adversarial network based on chaotic time series

May 24, 2019

Abstract:Generative adversarial networks (GANs) are gaining increased importance in artificially constructing natural images and related functionalities, wherein two networks called the generator and the discriminator evolve through adversarial mechanisms. Using deep convolutional neural networks and related techniques, high-resolution, highly realistic scenes and human faces, among others, have been generated. While a GAN in general needs a large amount of genuine training data, it is noteworthy that vast amounts of pseudorandom numbers are also required. Here we utilize chaotic time series generated experimentally by semiconductor lasers for the latent variables of a GAN, whereby the inherent nature of chaos can be reflected or transformed into the generated output data. We show that the similarity in proximity, which is a degree of robustness of the generated images with respect to a minute change in the input latent variables, is enhanced while the versatility as a whole is not severely degraded. Furthermore, we demonstrate that the surrogate chaos time series eliminates the signature of generated images that is originally observed corresponding to the negative autocorrelation inherent in the chaos sequence. We also discuss the impact of utilizing chaotic time series in retrieving images from the trained generator.
