Makoto Naruse

Asymmetric leader-laggard cluster synchronization for collective decision-making with laser network

Dec 05, 2023

Abstract:Photonic accelerators have recently attracted soaring interest, harnessing the ultimate nature of light for information processing. Collective decision-making with a laser network, employing the chaotic and synchronous dynamics of optically interconnected lasers to address the competitive multi-armed bandit (CMAB) problem, is a highly compelling approach due to its scalability and experimental feasibility. We investigated essential network structures for collective decision-making through quantitative stability analysis. Moreover, we demonstrated the asymmetric preferences of players in the CMAB problem, extending its functionality to more practical applications. Our study highlights the capability and significance of machine learning built upon chaotic lasers and photonic devices.

Conflict-free joint decision by lag and zero-lag synchronization in laser network

Jul 28, 2023

Abstract:With the end of Moore's Law and the increasing demand for computing, photonic accelerators are garnering considerable attention. This is due to the physical characteristics of light, such as high bandwidth and multiplicity, and the various synchronization phenomena that emerge in the realm of laser physics. These factors come into play as computer performance approaches its limits. In this study, we explore the application of a laser network, acting as a photonic accelerator, to the competitive multi-armed bandit problem. In this context, conflict avoidance is key to maximizing environmental rewards. We experimentally demonstrate cooperative decision-making using zero-lag and lag synchronization within a network of four semiconductor lasers. Lag synchronization of chaos realizes effective decision-making and zero-delay synchronization is responsible for the realization of the collision avoidance function. We experimentally verified a low collision rate and high reward in a fundamental 2-player, 2-slot scenario, and showed the scalability of this system. This system architecture opens up new possibilities for intelligent functionalities in laser dynamics.
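
Why conflict avoidance matters for the total reward in a 2-player, 2-slot setting can be illustrated with a small expected-value calculation. The sketch below is not the laser-network system described in the abstract. The payout probabilities, the 90% greedy choice rate, the function name `expected_team_reward`, and the rule that colliding players share a single payout are all assumptions made for illustration.

```python
from itertools import product


def expected_team_reward(reward_probs, joint_probs):
    """Expected total reward per round for two players.

    reward_probs[i]   : probability that slot i pays out (hypothetical values).
    joint_probs[a][b] : probability that player A picks slot a and player B picks slot b.
    Assumption: if both players pick the same slot, one payout is shared,
    so the team earns at most a single reward from that slot.
    """
    n = len(reward_probs)
    total = 0.0
    for a, b in product(range(n), repeat=2):
        if a == b:
            gain = reward_probs[a]  # collision: a single shared payout
        else:
            gain = reward_probs[a] + reward_probs[b]
        total += joint_probs[a][b] * gain
    return total


reward_probs = [0.8, 0.6]  # hypothetical slot payout probabilities

# Two independent players, each choosing the better slot 90% of the time.
p = 0.9
independent = [
    [p * p, p * (1 - p)],
    [(1 - p) * p, (1 - p) * (1 - p)],
]

# Conflict-free joint decision: the players never choose the same slot.
conflict_free = [
    [0.0, 0.5],
    [0.5, 0.0],
]

independent_reward = expected_team_reward(reward_probs, independent)
conflict_free_reward = expected_team_reward(reward_probs, conflict_free)
print(independent_reward, conflict_free_reward)
```

Under these assumptions the independent greedy players collide in most rounds and collect less in expectation than the conflict-free pair, even though each individual player is choosing the better slot most of the time.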

Asymmetric quantum decision-making

May 03, 2023

Abstract:Collective decision-making is crucial to information and communication systems. Decision conflicts among agents hinder the maximization of potential utilities of the entire system. Quantum processes can realize conflict-free joint decisions between two agents using the entanglement of photons or quantum interference of orbital angular momentum (OAM). However, previous studies have always presented symmetric resultant joint decisions. Although this property helps maintain and preserve equality, it cannot resolve disparities. Global challenges, such as ethics and equity, are recognized in the field of responsible artificial intelligence as a responsible research and innovation paradigm. Thus, decision-making systems must not only preserve existing equality but also tackle disparities. This study theoretically and numerically investigates asymmetric collective decision-making using quantum interference of photons carrying OAM or entangled photons. Although asymmetry is successfully realized, a photon loss is inevitable in the proposed models. The available range of asymmetry and a method for obtaining the desired degree of asymmetry are analytically formulated.

Bandit Algorithm Driven by a Classical Random Walk and a Quantum Walk

Apr 20, 2023

Abstract:Quantum walks (QWs) have a property that classical random walks (RWs) do not possess -- coexistence of linear spreading and localization -- and this property is utilized to implement various kinds of applications. This paper proposes a quantum-walk-based algorithm for multi-armed-bandit (MAB) problems by associating the two operations that make MAB problems difficult -- exploration and exploitation -- with these two behaviors of QWs. We show that this new policy based on QWs achieves higher performance than the corresponding RW-based one.

Bandit approach to conflict-free multi-agent Q-learning in view of photonic implementation

Dec 20, 2022

Abstract:Recently, extensive studies on photonic reinforcement learning to accelerate the process of calculation by exploiting the physical nature of light have been conducted. Previous studies utilized quantum interference of photons to achieve collective decision-making without choice conflicts when solving the competitive multi-armed bandit problem, a fundamental example of reinforcement learning. However, the bandit problem deals with a static environment where the agent's action does not influence the reward probabilities. This study aims to extend the conventional approach to a more general multi-agent reinforcement learning targeting the grid world problem. Unlike the conventional approach, the proposed scheme deals with a dynamic environment where the reward changes because of agents' actions. A successful photonic reinforcement learning scheme requires both a photonic system that contributes to the quality of learning and a suitable algorithm. This study proposes a novel learning algorithm, discontinuous bandit Q-learning, in view of a potential photonic implementation. Here, state-action pairs in the environment are regarded as slot machines in the context of the bandit problem and an updated amount of Q-value is regarded as the reward of the bandit problem. We perform numerical simulations to validate the effectiveness of the bandit algorithm. In addition, we propose a multi-agent architecture in which agents are indirectly connected through quantum interference of light and quantum principles ensure the conflict-free property of state-action pair selections among agents. We demonstrate that multi-agent reinforcement learning can be accelerated owing to conflict avoidance among multiple agents.

Parallel photonic accelerator for decision making using optical spatiotemporal chaos

Oct 12, 2022

Abstract:Photonic accelerators have attracted increasing attention in artificial intelligence applications. The multi-armed bandit problem is a fundamental problem of decision making using reinforcement learning. However, the scalability of photonic decision making has not yet been demonstrated in experiments, owing to technical difficulties in physical realization. We propose a parallel photonic decision-making system for solving large-scale multi-armed bandit problems using optical spatiotemporal chaos. We solve a 512-armed bandit problem online, which is two orders of magnitude larger than in previous experiments. The scaling property for correct decision making is examined as a function of the number of slot machines, evaluated as an exponent of 0.86. This exponent is smaller than that in previous work, indicating the superiority of the proposed parallel principle. This experimental demonstration facilitates photonic decision making to solve large-scale multi-armed bandit problems for future photonic accelerators.

Conflict-free joint sampling for preference satisfaction through quantum interference

Aug 05, 2022

Abstract:Collective decision-making is vital for recent information and communications technologies. In our previous research, we mathematically derived conflict-free joint decision-making that optimally satisfies players' probabilistic preference profiles. However, two problems exist regarding the optimal joint decision-making method. First, as the number of choices increases, the computational cost of calculating the optimal joint selection probability matrix explodes. Second, to derive the optimal joint selection probability matrix, all players must disclose their probabilistic preferences. Now, it is noteworthy that explicit calculation of the joint probability distribution is not necessarily needed; what is necessary for collective decisions is sampling. This study examines several sampling methods that converge to heuristic joint selection probability matrices that satisfy players' preferences. We show that they can significantly reduce the above problems of computational cost and confidentiality. We analyze the probability distribution each of the sampling methods converges to, as well as the computational cost required and the confidentiality secured. In particular, we introduce two conflict-free joint sampling methods through quantum interference of photons. The first system allows the players to hide their choices while satisfying the players' preferences almost perfectly when they have the same preferences. The second system, where the physical nature of light replaces the expensive computational cost, also conceals their choices under the assumption that they have a trusted third party.

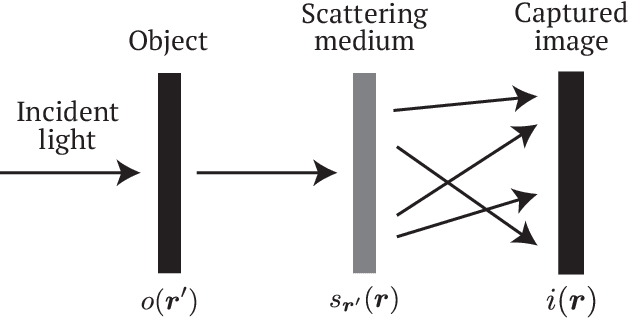

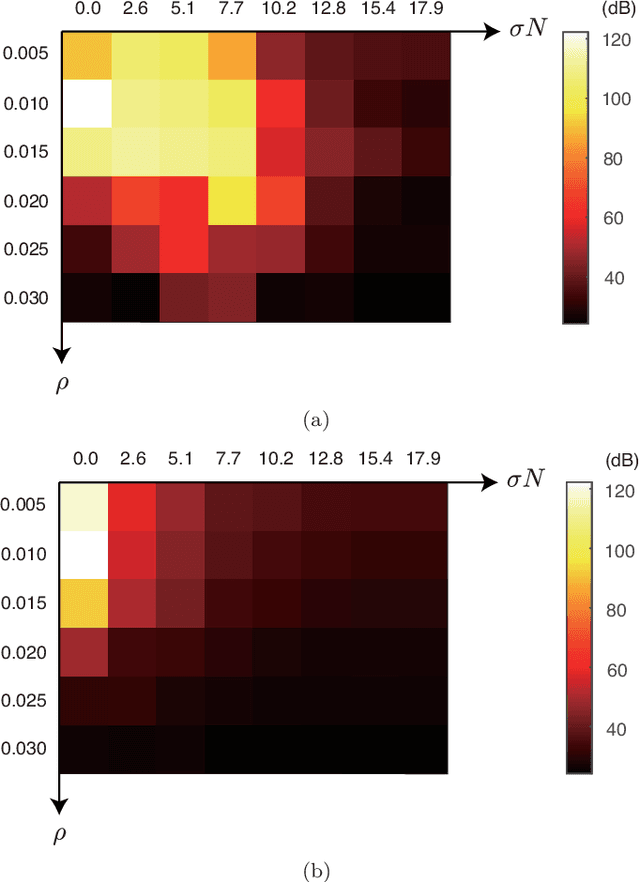

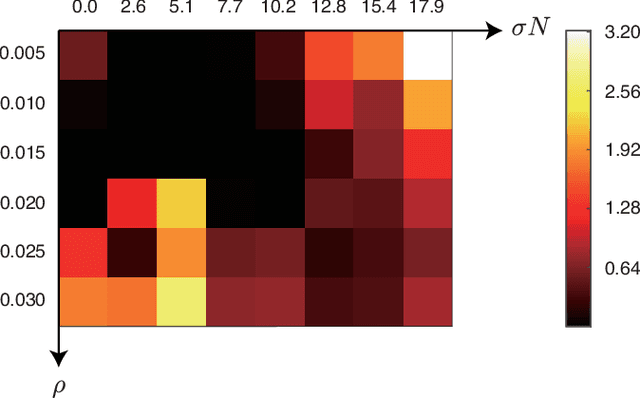

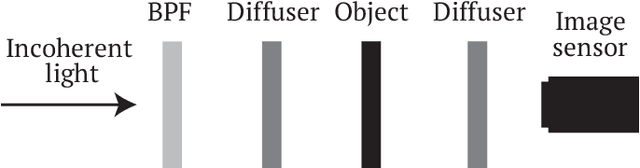

Extended field-of-view speckle-correlation imaging by estimating autocorrelation

Jun 19, 2022

Abstract:Imaging through scattering media is a longstanding issue in a wide range of applications, including biomedicine, security, and astronomy. Speckle-correlation imaging is promising for non-invasively seeing through scattering media by assuming shift-invariance of the scattering process called the memory effect. However, the memory effect is known to be severely limited when the medium is thick. Under such a scattering condition, speckle-correlation imaging is not practical because the correlation of the speckle decays, reducing the field of view. To address this problem, we present a method for expanding the field of view of single-shot speckle-correlation imaging through scattering media with a limited memory effect. We derive the imaging model under this scattering condition and its inversion for reconstructing the object. Our method simultaneously estimates both the object and the decay of the speckle correlation based on the gradient descent method. We numerically and experimentally demonstrate the proposed method by reconstructing point sources behind scattering media with a limited memory effect. In the demonstrations, our speckle-correlation imaging method with a minimal lensless optical setup realized a larger field of view compared with the conventional one. This study will make techniques for imaging through scattering media more practical in various fields.

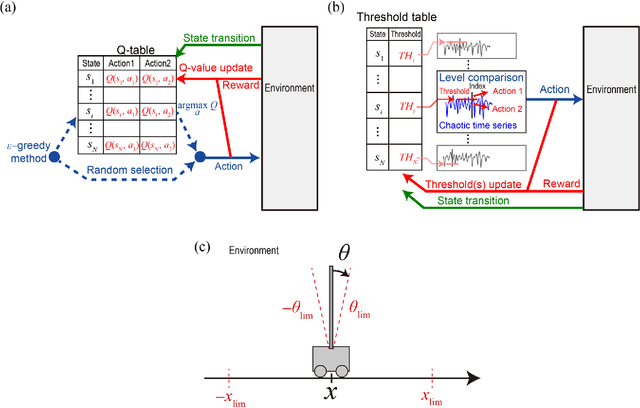

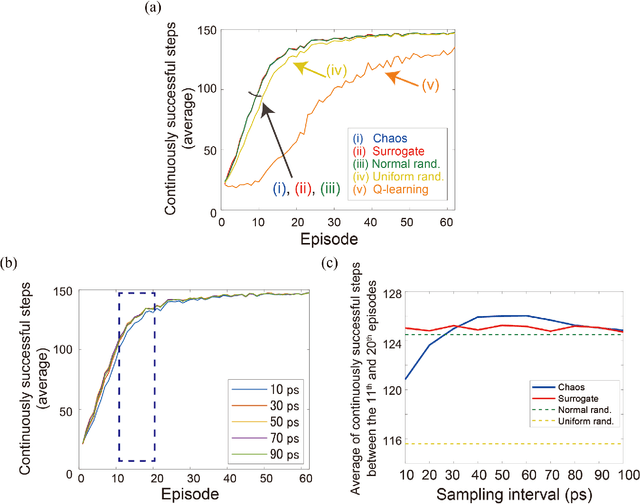

Parallel bandit architecture based on laser chaos for reinforcement learning

May 19, 2022

Abstract:Accelerating artificial intelligence by photonics is an active field of study aiming to exploit the unique properties of photons. Reinforcement learning is an important branch of machine learning, and photonic decision-making principles have been demonstrated with respect to the multi-armed bandit problems. However, reinforcement learning could involve a massive number of states, unlike previously demonstrated bandit problems where the number of states is only one. Q-learning is a well-known approach in reinforcement learning that can deal with many states. The architecture of Q-learning, however, does not fit photonic implementations well due to its separation of the update rule and the action selection. In this study, we organize a new architecture for multi-state reinforcement learning as a parallel array of bandit problems in order to benefit from photonic decision-makers, which we call parallel bandit architecture for reinforcement learning, or PBRL for short. Taking a cart-pole balancing problem as an instance, we demonstrate that PBRL adapts to the environment in fewer time steps than Q-learning. Furthermore, PBRL yields faster adaptation when operated with a chaotic laser time series than with uniformly distributed pseudorandom numbers, as the autocorrelation inherent in the laser chaos provides a positive effect. We also find that the variety of states that the system undergoes during the learning phase exhibits completely different properties between PBRL and Q-learning. The insights obtained through the present study are also beneficial for existing computing platforms, not just photonic realizations, in accelerating performances by the PBRL algorithms and correlated random sequences.
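
The idea of treating multi-state learning as a parallel array of bandit problems can be sketched in a few lines. This is not the authors' PBRL algorithm: the abstract does not give its update rule, so the sketch swaps in a plain epsilon-greedy bandit per state, driven by pseudorandom numbers rather than a chaotic laser time series. The class name `StateBandit`, the toy environment, and all parameter values are hypothetical.

```python
import random
from collections import defaultdict


class StateBandit:
    """One independent bandit per state, with a running mean reward per action."""

    def __init__(self, n_actions):
        self.counts = [0] * n_actions
        self.means = [0.0] * n_actions

    def select(self, rng, epsilon):
        # Epsilon-greedy choice (an assumption; the paper uses a photonic decision-maker).
        if rng.random() < epsilon:
            return rng.randrange(len(self.means))
        return max(range(len(self.means)), key=self.means.__getitem__)

    def update(self, action, reward):
        # Incremental update of the mean reward observed for this action.
        self.counts[action] += 1
        self.means[action] += (reward - self.means[action]) / self.counts[action]


def run(n_steps=2000, n_states=4, n_actions=2, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # The "parallel array": a separate bandit is created for each state on first visit.
    bandits = defaultdict(lambda: StateBandit(n_actions))
    for _ in range(n_steps):
        state = rng.randrange(n_states)
        action = bandits[state].select(rng, epsilon)
        # Toy environment (hypothetical): in state s, only action s % 2 is rewarded.
        reward = 1.0 if action == state % 2 else 0.0
        bandits[state].update(action, reward)
    # Report the action each state's bandit currently rates highest.
    return {
        s: max(range(n_actions), key=b.means.__getitem__)
        for s, b in bandits.items()
    }


best_actions = run()
print(best_actions)
```

Because each state owns its own bandit, action selection and value update happen in the same unit. The toy rewards here are immediate, so the sketch leaves out how delayed rewards, as in cart-pole balancing, would be credited to each state's bandit.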

Controlling chaotic itinerancy in laser dynamics for reinforcement learning

May 12, 2022

Abstract:Photonic artificial intelligence has attracted considerable interest in accelerating machine learning; however, the unique optical properties have not been fully utilized for achieving higher-order functionalities. Chaotic itinerancy, with its spontaneous transient dynamics among multiple quasi-attractors, can be employed to realize brain-like functionalities. In this paper, we propose a method for controlling the chaotic itinerancy in a multi-mode semiconductor laser to solve a machine learning task, known as the multi-armed bandit problem, which is fundamental to reinforcement learning. The proposed method utilizes ultrafast chaotic itinerant motion in mode competition dynamics controlled via optical injection. We found that the exploration mechanism is completely different from a conventional searching algorithm and is highly scalable, outperforming the conventional approaches for large-scale bandit problems. This study paves the way to utilize chaotic itinerancy for effectively solving complex machine learning tasks as photonic hardware accelerators.
