Astrid Vanneste

Safety Aware Autonomous Path Planning Using Model Predictive Reinforcement Learning for Inland Waterways

Nov 16, 2023

Abstract: In recent years, interest in autonomous shipping in urban waterways has increased significantly due to the trend of keeping cars and trucks out of city centers. Classical approaches such as Frenet-frame-based planning and potential field navigation often require tuning of many configuration parameters and sometimes even require a different configuration depending on the situation. In this paper, we propose a novel path planning approach based on reinforcement learning called Model Predictive Reinforcement Learning (MPRL). MPRL calculates a series of waypoints for the vessel to follow. The environment is represented as an occupancy grid map, allowing us to deal with any shape of waterway and any number and shape of obstacles. We demonstrate our approach on two scenarios and compare the resulting path with path planning using a Frenet frame and path planning based on a proximal policy optimization (PPO) agent. Our results show that MPRL outperforms both baselines in both test scenarios. The PPO-based approach was not able to reach the goal in either scenario, while the Frenet frame approach failed in the scenario consisting of a corner with obstacles. MPRL was able to navigate safely (collision-free) to the goal in both test scenarios.
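The occupancy grid representation and the waypoint output described in this abstract can be illustrated with a minimal sketch. Everything here is hypothetical: the grid layout, the `(row, column)` waypoint convention, and the helper names are assumptions made for illustration, not the paper's implementation. The check only tests the waypoint cells themselves, not the water between them.

```python
from typing import List, Tuple

Grid = List[List[int]]      # 1 = occupied (bank or obstacle), 0 = free water
Waypoint = Tuple[int, int]  # hypothetical (row, column) cell index


def is_free(grid: Grid, cell: Waypoint) -> bool:
    """Return True if the cell lies inside the grid and is not occupied."""
    row, col = cell
    in_bounds = 0 <= row < len(grid) and 0 <= col < len(grid[0])
    return in_bounds and grid[row][col] == 0


def path_is_collision_free(grid: Grid, waypoints: List[Waypoint]) -> bool:
    """Return True if every waypoint falls on a free cell."""
    return all(is_free(grid, wp) for wp in waypoints)


# A small waterway with a corner; the shape is arbitrary.
grid = [
    [1, 1, 1, 1, 1],
    [0, 0, 0, 1, 1],
    [1, 1, 0, 0, 0],
    [1, 1, 1, 1, 1],
]
safe_path = [(1, 0), (1, 1), (1, 2), (2, 2), (2, 3), (2, 4)]
unsafe_path = [(1, 0), (1, 1), (1, 2), (1, 3)]  # last waypoint hits the bank
```

Because the grid is just a 2D array of cells, the same check works for any waterway shape and any number of obstacles, which is the property the abstract highlights.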

Scalability of Message Encoding Techniques for Continuous Communication Learned with Multi-Agent Reinforcement Learning

Aug 09, 2023

Abstract: Many multi-agent systems require inter-agent communication to properly achieve their goal. By learning the communication protocol alongside the action protocol using multi-agent reinforcement learning techniques, the agents gain the flexibility to determine which information should be shared. However, when the number of agents increases we need to create an encoding of the information contained in these messages. In this paper, we investigate the effect of increasing the amount of information that should be contained in a message and increasing the number of agents. We evaluate these effects on two different message encoding methods, the mean message encoder and the attention message encoder. We perform our experiments on a matrix environment. Surprisingly, our results show that the mean message encoder consistently outperforms the attention message encoder. Therefore, we analyse the communication protocol used by the agents that use the mean message encoder and can conclude that the agents use a combination of an exponential and a logarithmic function in their communication policy to avoid the loss of important information after applying the mean message encoder.
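A minimal sketch of a mean message encoder, assuming each message is a fixed-length list of floats. The `exp_encode` and `log_decode` helpers are purely hypothetical: they show one way an exponential on the sender side and a logarithm on the receiver side can let a large value survive averaging, and they are not the protocol the agents learned in the paper.

```python
import math
from typing import List


def mean_message_encoder(messages: List[List[float]]) -> List[float]:
    """Average equal-length message vectors element-wise.

    The output length is independent of the number of senders.
    """
    if not messages:
        raise ValueError("need at least one message")
    length = len(messages[0])
    if any(len(m) != length for m in messages):
        raise ValueError("all messages must have the same length")
    n = len(messages)
    return [sum(m[i] for m in messages) / n for i in range(length)]


def exp_encode(value: float, sharpness: float = 10.0) -> float:
    """Hypothetical sender-side transform: exaggerate large values."""
    return math.exp(sharpness * value)


def log_decode(mean_value: float, n_senders: int, sharpness: float = 10.0) -> float:
    """Hypothetical receiver-side transform.

    Undoing the mean gives the sum of exponentials, whose logarithm
    approximates the largest value that was sent.
    """
    return math.log(mean_value * n_senders) / sharpness


values = [0.1, 0.9, 0.2]
encoded = [[exp_encode(v)] for v in values]
recovered = log_decode(mean_message_encoder(encoded)[0], len(values))
```

A plain mean of `values` would give 0.4 and hide the 0.9; in this sketch `recovered` stays close to the largest value instead.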

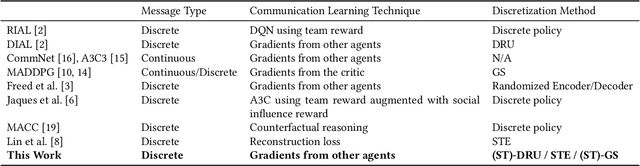

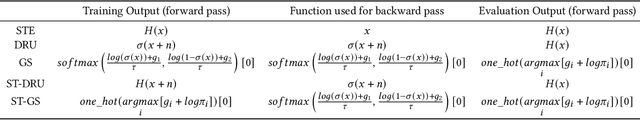

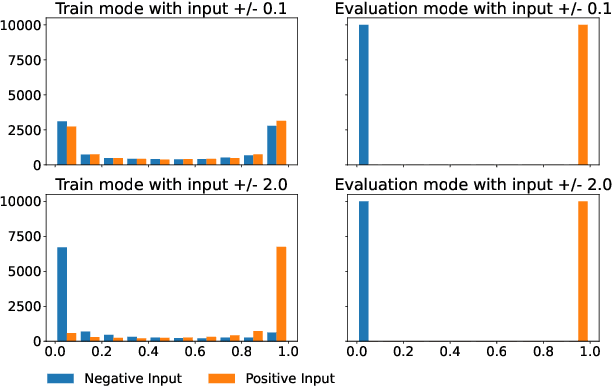

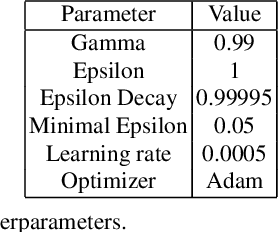

An In-Depth Analysis of Discretization Methods for Communication Learning using Backpropagation with Multi-Agent Reinforcement Learning

Aug 09, 2023

Abstract: Communication is crucial in multi-agent reinforcement learning when agents are not able to observe the full state of the environment. The most common approach to allow learned communication between agents is the use of a differentiable communication channel that allows gradients to flow between agents as a form of feedback. However, this is challenging when we want to use discrete messages to reduce the message size, since gradients cannot flow through a discrete communication channel. Previous work proposed methods to deal with this problem. However, these methods are tested in different communication learning architectures and environments, making it hard to compare them. In this paper, we compare several state-of-the-art discretization methods as well as a novel approach. We do this comparison in the context of communication learning using gradients from other agents and perform tests on several environments. In addition, we present COMA-DIAL, a communication learning approach based on DIAL and COMA extended with learning rate scaling and adapted exploration. Using COMA-DIAL allows us to perform experiments on more complex environments. Our results show that the novel ST-DRU method, proposed in this paper, achieves the best results out of all discretization methods across the different environments. It achieves the best or close to the best performance in each of the experiments and is the only method that does not fail on any of the tested environments.

An Analysis of Discretization Methods for Communication Learning with Multi-Agent Reinforcement Learning

Apr 12, 2022

Abstract: Communication is crucial in multi-agent reinforcement learning when agents are not able to observe the full state of the environment. The most common approach to allow learned communication between agents is the use of a differentiable communication channel that allows gradients to flow between agents as a form of feedback. However, this is challenging when we want to use discrete messages to reduce the message size, since gradients cannot flow through a discrete communication channel. Previous work proposed methods to deal with this problem. However, these methods are tested in different communication learning architectures and environments, making it hard to compare them. In this paper, we compare several state-of-the-art discretization methods as well as two methods that have not been used for communication learning before. We do this comparison in the context of communication learning using gradients from other agents and perform tests on several environments. Our results show that none of the methods is best in all environments. The best choice of discretization method greatly depends on the environment. However, the discretize regularize unit (DRU), straight through DRU and the straight through Gumbel softmax show the most consistent results across all the tested environments. Therefore, these methods prove to be the best choice for general use, while the straight through estimator and the Gumbel softmax may provide better results in specific environments but fail completely in others.
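The problem described in this abstract, that gradients cannot pass through a hard threshold, can be illustrated with a minimal scalar sketch. The DRU forward pass follows the commonly described form from DIAL (noisy sigmoid during training, hard threshold during execution). The straight-through part uses the sigmoid derivative as a surrogate gradient, which is an assumption; the abstract does not give the exact formulations compared in the paper, and `sigma=2.0` is likewise an assumed default.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def dru_forward(m: float, training: bool, sigma: float = 2.0,
                rng: random.Random = random.Random(0)) -> float:
    """Discretize/regularize unit on a scalar message.

    Training: add Gaussian noise, then squash, so the channel stays
    differentiable. Execution: hard-threshold to a single bit.
    """
    if training:
        return sigmoid(m + rng.gauss(0.0, sigma))
    return 1.0 if m > 0 else 0.0


def straight_through_forward(m: float) -> float:
    """Send a discrete bit even during training."""
    return 1.0 if m > 0 else 0.0


def straight_through_backward(m: float, upstream_grad: float) -> float:
    """Surrogate gradient: pretend the forward pass was a sigmoid.

    The threshold itself has zero gradient almost everywhere, so the
    sigmoid derivative is substituted (an assumed choice of surrogate).
    """
    s = sigmoid(m)
    return upstream_grad * s * (1.0 - s)
```

For example, `dru_forward(0.5, training=False)` yields `1.0`, while the training-mode call returns a value strictly between 0 and 1 that depends on the sampled noise.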

Learning to Communicate with Reinforcement Learning for an Adaptive Traffic Control System

Oct 29, 2021

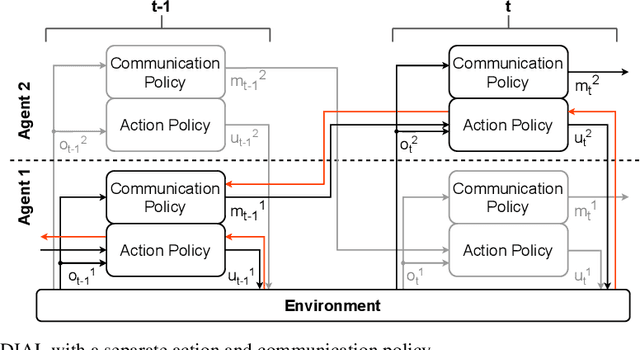

Abstract: Recent work in multi-agent reinforcement learning has investigated inter-agent communication which is learned simultaneously with the action policy in order to improve the team reward. In this paper, we investigate independent Q-learning (IQL) without communication and differentiable inter-agent learning (DIAL) with learned communication on an adaptive traffic control system (ATCS). In a real-world ATCS, it is impossible to present the full state of the environment to every agent, so in our simulation the individual agents have only a limited observation of the full state of the environment. The ATCS is simulated using the Simulation of Urban MObility (SUMO) traffic simulator, in which two connected intersections are simulated. Every intersection is controlled by an agent which has the ability to change the direction of the traffic flow. Our results show that a DIAL agent outperforms an independent Q-learner in both training time and maximum achieved reward, as it is able to share relevant information with the other agents.

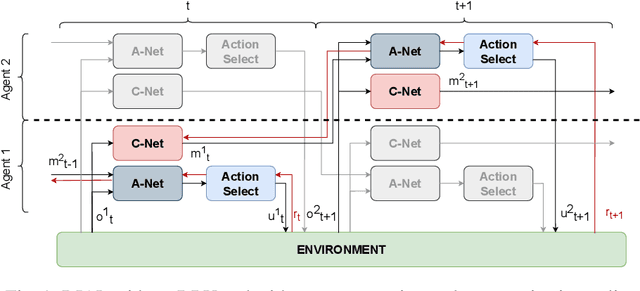

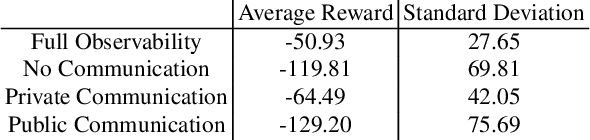

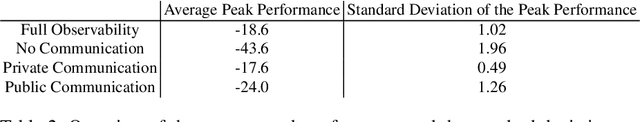

Mixed Cooperative-Competitive Communication Using Multi-Agent Reinforcement Learning

Oct 29, 2021

Abstract: By using communication between multiple agents in multi-agent environments, one can reduce the effects of partial observability by combining one agent's observation with that of others in the same dynamic environment. While a lot of successful research has been done on communication learning in cooperative settings, communication learning in mixed cooperative-competitive settings is also important and brings its own complexities, such as the opposing team overhearing the communication. In this paper, we apply differentiable inter-agent learning (DIAL), designed for cooperative settings, to a mixed cooperative-competitive setting. We look at the difference in performance between communication that is private for a team and communication that can be overheard by the other team. Our research shows that communicating agents are able to achieve similar performance to fully observable agents after a given training period in our chosen environment. Overall, we find that sharing communication across teams results in decreased performance for the communicating team in comparison to results achieved with private communication.

Learning to Communicate Using Counterfactual Reasoning

Jun 12, 2020

Abstract: This paper introduces a new approach for multi-agent communication learning called multi-agent counterfactual communication (MACC) learning. Many real-world problems are currently tackled using multi-agent techniques. However, in many of these tasks the agents do not observe the full state of the environment but only a limited observation. This absence of knowledge about the full state makes completing the objectives significantly more complex or even impossible. The key to this problem lies in sharing observation information between agents or learning how to communicate the essential data. In this paper we present a novel multi-agent communication learning approach called MACC. It addresses the partial observability problem of the agents. MACC lets the agent learn the action policy and the communication policy simultaneously. We focus on decentralized Markov Decision Processes (Dec-MDP), where the agents have joint observability. This means that the full state of the environment can be determined using the observations of all agents. MACC uses counterfactual reasoning to train both the action and the communication policy. This allows the agents to anticipate how other agents will react to certain messages and how the environment will react to certain actions, allowing them to learn more effective policies. MACC uses an actor-critic approach with a centralized critic and decentralized actors. The critic is used to calculate an advantage for both the action and communication policy. We demonstrate our method by applying it to the Simple Reference Particle environment of OpenAI and an MNIST game. Our results are compared with a communication and non-communication baseline. These experiments demonstrate that MACC is able to train agents for each of these problems with effective communication policies.
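The abstract does not state how the advantage is computed. As a rough sketch, assuming a COMA-style counterfactual baseline, the advantage of one agent's chosen action is the critic's value for that action minus the policy-weighted average over the alternatives that agent could have chosen, with the other agents' choices held fixed. The function name and inputs below are hypothetical.

```python
from typing import List


def counterfactual_advantage(q_values: List[float], policy: List[float],
                             chosen: int) -> float:
    """COMA-style advantage for a single agent (assumed form, not MACC's exact one).

    q_values[k]: critic estimate if this agent had taken option k,
                 with all other agents' choices held fixed.
    policy[k]:   probability this agent's policy assigns to option k.
    chosen:      index of the option actually taken.
    """
    if len(q_values) != len(policy):
        raise ValueError("q_values and policy must have the same length")
    baseline = sum(p * q for p, q in zip(policy, q_values))
    return q_values[chosen] - baseline


# Example: two options, uniform policy, the second one was taken.
advantage = counterfactual_advantage([1.0, 3.0], [0.5, 0.5], chosen=1)
```

Under this assumption the same function could score a message choice as well as an environment action, by treating `q_values` as the critic's estimates over the agent's possible messages.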
