Ashutosh Dhar

WinoCNN: Kernel Sharing Winograd Systolic Array for Efficient Convolutional Neural Network Acceleration on FPGAs

Jul 09, 2021

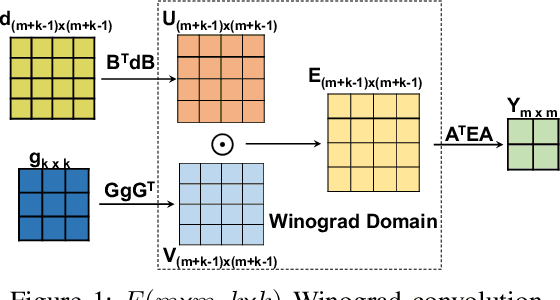

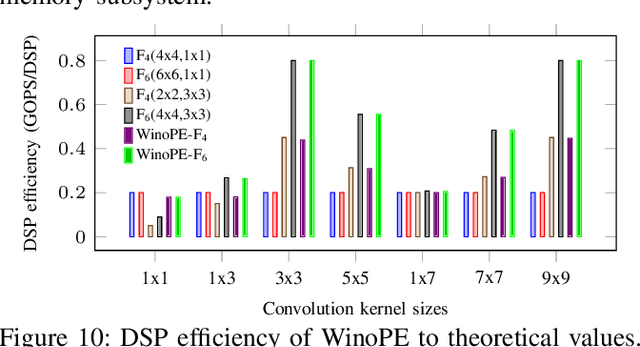

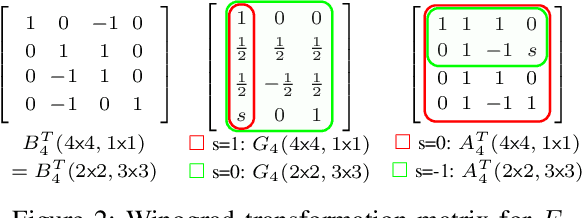

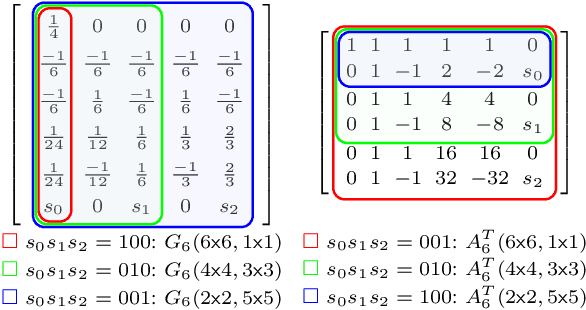

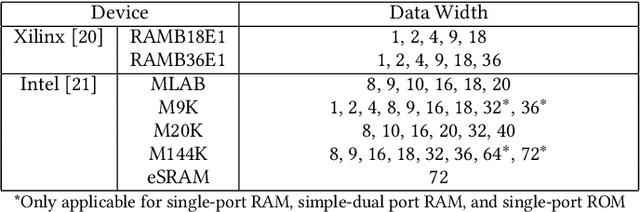

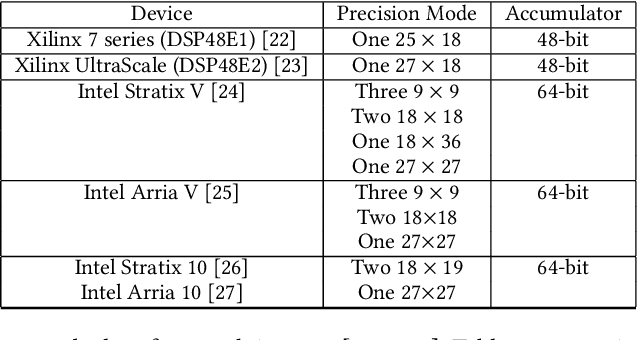

Abstract: The combination of Winograd's algorithm and systolic array architecture has demonstrated the capability of improving DSP efficiency in accelerating convolutional neural networks (CNNs) on FPGA platforms. However, handling arbitrary convolution kernel sizes in FPGA-based Winograd processing elements and supporting efficient data access remain underexplored. In this work, we are the first to propose an optimized Winograd processing element (WinoPE), which can naturally support multiple convolution kernel sizes with the same amount of computing resources and maintains high runtime DSP efficiency. Using the proposed WinoPE, we construct a highly efficient systolic array accelerator, termed WinoCNN. We also propose a dedicated memory subsystem to optimize the data access. Based on the accelerator architecture, we build accurate resource and performance modeling to explore optimal accelerator configurations under different resource constraints. We implement our proposed accelerator on multiple FPGAs, which outperforms the state-of-the-art designs in terms of both throughput and DSP efficiency. Our implementation achieves DSP efficiency up to 1.33 GOPS/DSP and throughput up to 3.1 TOPS with the Xilinx ZCU102 FPGA. These are 29.1% and 20.0% better than the best solutions reported previously, respectively.
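For readers unfamiliar with Winograd's algorithm, the following is a minimal sketch of the textbook one-dimensional minimal filtering case F(2,3), which computes two outputs of a 3-tap filter with 4 multiplications instead of 6. It is not the WinoPE design described in the abstract; the function names `direct_f23` and `winograd_f23` are hypothetical and chosen only for illustration.

```python
def direct_f23(d, g):
    """Direct 3-tap filtering: 2 outputs from 4 inputs, 6 multiplications."""
    return [sum(d[i + k] * g[k] for k in range(3)) for i in range(2)]


def winograd_f23(d, g):
    """Textbook Winograd F(2,3): the same 2 outputs with 4 multiplications.

    d: 4 input samples, g: 3 filter taps.
    """
    # Filter transform (can be precomputed once per kernel).
    u0 = g[0]
    u1 = (g[0] + g[1] + g[2]) / 2
    u2 = (g[0] - g[1] + g[2]) / 2
    u3 = g[2]

    # Input transform (additions and subtractions only).
    v0 = d[0] - d[2]
    v1 = d[1] + d[2]
    v2 = d[2] - d[1]
    v3 = d[1] - d[3]

    # Element-wise products: the only 4 multiplications.
    m0, m1, m2, m3 = u0 * v0, u1 * v1, u2 * v2, u3 * v3

    # Output transform.
    return [m0 + m1 + m2, m1 - m2 - m3]


if __name__ == "__main__":
    d = [1.5, -2.0, 0.25, 3.0]
    g = [0.5, 2.0, -1.0]
    print(direct_f23(d, g), winograd_f23(d, g))
```

The saving comes from trading multiplications for additions in the input and output transforms, which is why the approach is attractive when multipliers (DSP blocks on an FPGA) are the scarce resource. Two-dimensional convolution tiles are typically obtained by nesting the one-dimensional transforms.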

NAIS: Neural Architecture and Implementation Search and its Applications in Autonomous Driving

Nov 18, 2019

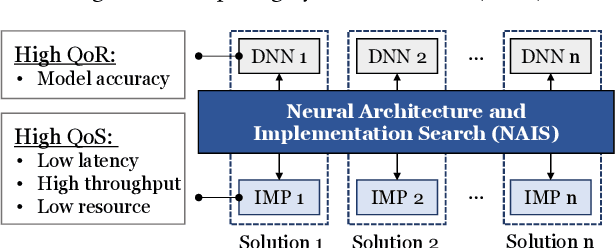

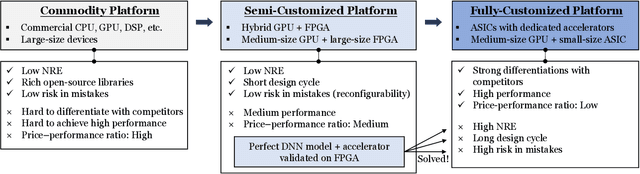

Abstract: The rapidly growing demands for powerful AI algorithms in many application domains have motivated massive investment in both high-quality deep neural network (DNN) models and high-efficiency implementations. In this position paper, we argue that a simultaneous DNN/implementation co-design methodology, named Neural Architecture and Implementation Search (NAIS), deserves more research attention to boost the development productivity and efficiency of both DNN models and implementation optimization. We propose a stylized design methodology that can drastically cut down the search cost while preserving the quality of the end solution. As an illustration, we discuss this DNN/implementation methodology in the context of both FPGAs and GPUs. We take autonomous driving as a key use case as it is one of the most demanding areas for high quality AI algorithms and accelerators. We discuss how such a co-design methodology can impact the autonomous driving industry significantly. We identify several research opportunities in this exciting domain.
