Ashis G. Banerjee

Mapping-Guided Task Discovery and Allocation for Robotic Inspection of Underwater Structures

Feb 02, 2026

Abstract:Task generation for underwater multi-robot inspections without prior knowledge of existing geometry can be achieved and optimized through examination of simultaneous localization and mapping (SLAM) data. By considering hardware parameters and environmental conditions, a set of tasks is generated from SLAM meshes and optimized through expected keypoint scores and distance-based pruning. In-water tests are used to demonstrate the effectiveness of the algorithm and determine the appropriate parameters. These results are compared to simulated Voronoi partitions and boustrophedon patterns for inspection coverage on a model of the test environment. The key benefits of the presented task discovery method include adaptability to unexpected geometry and distributions that maintain coverage while focusing on areas more likely to present defects or damage.
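For readers unfamiliar with the boustrophedon baseline mentioned in the abstract above, the following is a minimal sketch of a back-and-forth (lawnmower) sweep over an axis-aligned rectangle. It is not the authors' simulation: the function name `boustrophedon_waypoints`, the rectangular region, and the fixed row `spacing` are all illustrative assumptions.

```python
import math


def boustrophedon_waypoints(width, height, spacing):
    """Return (x, y) waypoints of a back-and-forth sweep over a rectangle.

    Hypothetical helper: assumes an obstacle-free region [0, width] x [0, height]
    and sweep rows separated by `spacing` (e.g. the sensor footprint width).
    """
    if width <= 0 or height <= 0 or spacing <= 0:
        raise ValueError("width, height, and spacing must be positive")

    # Number of sweep rows that fit inside the rectangle, including row y = 0.
    n_rows = int(math.floor(height / spacing + 1e-9)) + 1

    waypoints = []
    for i in range(n_rows):
        y = i * spacing
        if i % 2 == 0:
            # Even rows run left to right.
            waypoints.append((0.0, y))
            waypoints.append((float(width), y))
        else:
            # Odd rows run right to left, so consecutive rows join end to end.
            waypoints.append((float(width), y))
            waypoints.append((0.0, y))
    return waypoints


if __name__ == "__main__":
    for point in boustrophedon_waypoints(4.0, 2.0, 1.0):
        print(point)
```

A uniform sweep like this covers the whole region at the same density, which is the property the abstract contrasts with task distributions that concentrate on areas more likely to present defects.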

Rapidly Converging Time-Discounted Ergodicity on Graphs for Active Inspection of Confined Spaces

Mar 13, 2025

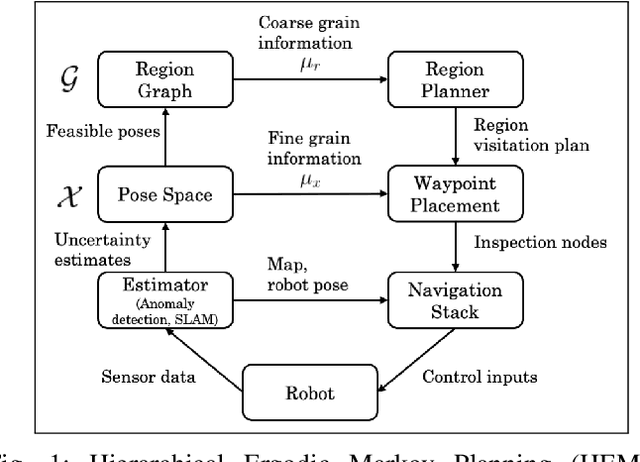

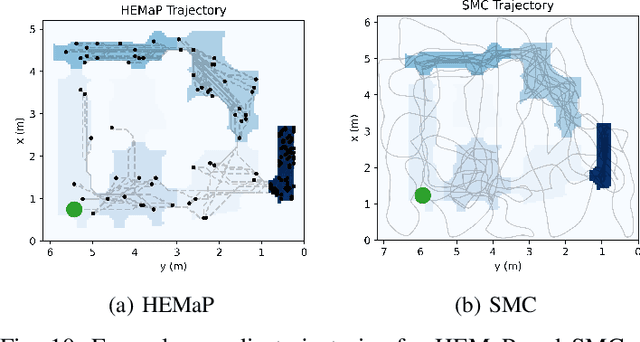

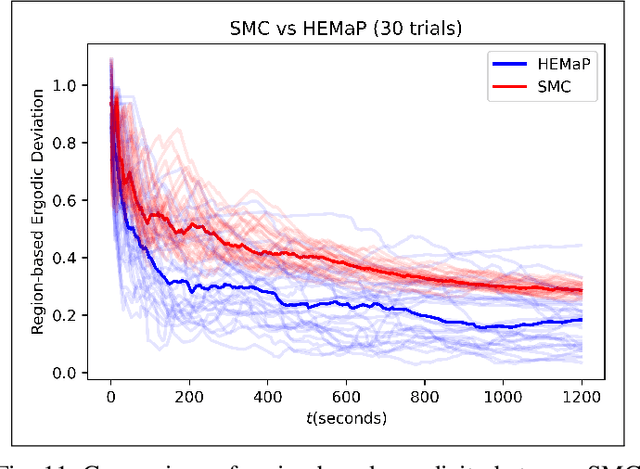

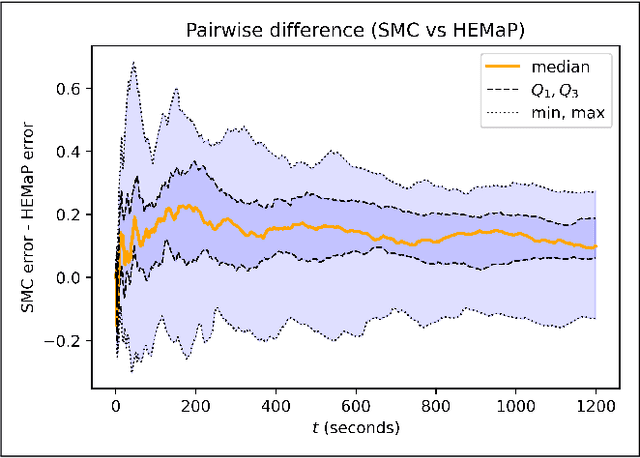

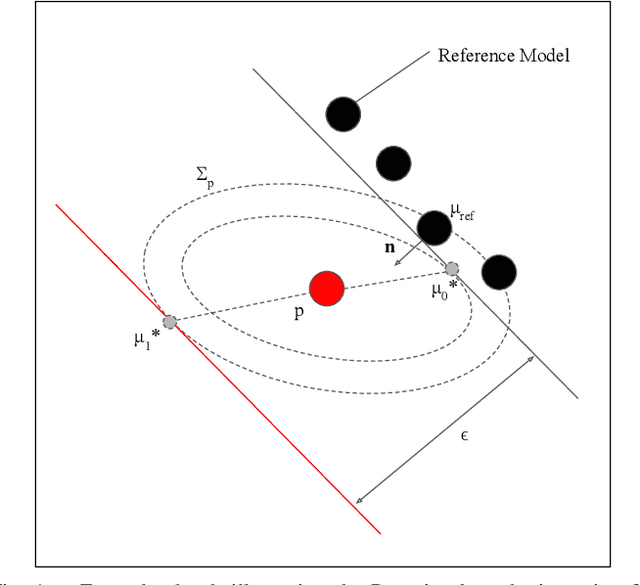

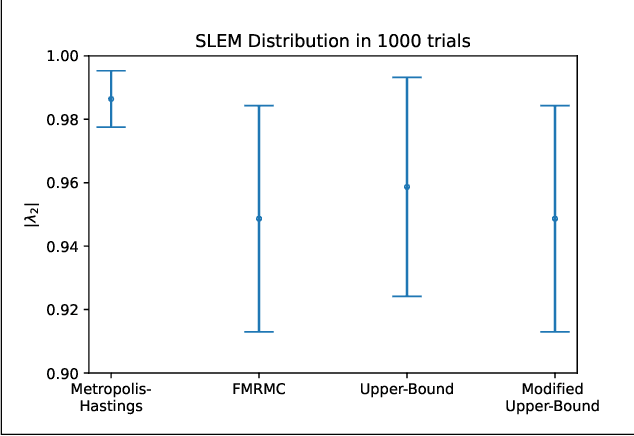

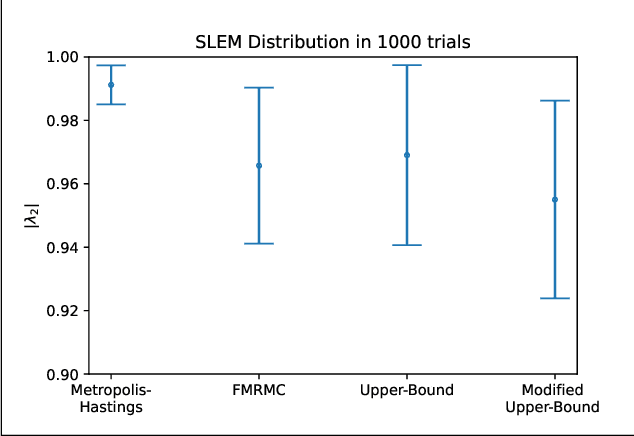

Abstract:Ergodic exploration has spawned a lot of interest in mobile robotics due to its ability to design time trajectories that match desired spatial coverage statistics. However, current ergodic approaches are for continuous spaces, which require detailed sensory information at each point and can lead to fractal-like trajectories that cannot be tracked easily. This paper presents a new ergodic approach for graph-based discretization of continuous spaces. It also introduces a new time-discounted ergodicity metric, wherein early visitations of information-rich nodes are weighted more than late visitations. A Markov chain synthesized using a convex program is shown to converge more rapidly to time-discounted ergodicity than the traditional fastest mixing Markov chain. The resultant ergodic traversal method is used within a hierarchical framework for active inspection of confined spaces with the goal of detecting anomalies robustly using SLAM-driven Bayesian hypothesis testing. Both simulation and physical experiments on a ground robot show the advantages of this framework over greedy and random exploration methods for left-behind foreign object debris detection in a ballast tank.

THOR2: Leveraging Topological Soft Clustering of Color Space for Human-Inspired Object Recognition in Unseen Environments

Aug 02, 2024

Abstract:Visual object recognition in unseen and cluttered indoor environments is a challenging problem for mobile robots. This study presents a 3D shape and color-based descriptor, TOPS2, for point clouds generated from RGB-D images and an accompanying recognition framework, THOR2. The TOPS2 descriptor embodies object unity, a human cognition mechanism, by retaining the slicing-based topological representation of 3D shape from the TOPS descriptor while capturing object color information through slicing-based color embeddings computed using a network of coarse color regions. These color regions, analogous to the MacAdam ellipses identified in human color perception, are obtained using the Mapper algorithm, a topological soft-clustering technique. THOR2, trained using synthetic data, demonstrates markedly improved recognition accuracy compared to THOR, its 3D shape-based predecessor, on two benchmark real-world datasets: the OCID dataset capturing cluttered scenes from different viewpoints and the UW-IS Occluded dataset reflecting different environmental conditions and degrees of object occlusion recorded using commodity hardware. THOR2 also outperforms baseline deep learning networks and a widely used ViT adapted for RGB-D inputs on both datasets. Therefore, THOR2 is a promising step toward achieving robust recognition in low-cost robots.

Sparse Color-Code Net: Real-Time RGB-Based 6D Object Pose Estimation on Edge Devices

Jun 05, 2024

Abstract:As robotics and augmented reality applications increasingly rely on precise and efficient 6D object pose estimation, real-time performance on edge devices is required for more interactive and responsive systems. Our proposed Sparse Color-Code Net (SCCN) embodies a clear and concise pipeline design to effectively address this requirement. SCCN performs pixel-level predictions on the target object in the RGB image, utilizing the sparsity of essential object geometry features to speed up the Perspective-n-Point (PnP) computation process. Additionally, it introduces a novel pixel-level geometry-based object symmetry representation that seamlessly integrates with the initial pose predictions, effectively addressing symmetric object ambiguities. SCCN notably achieves an estimation rate of 19 frames per second (FPS) and 6 FPS on the benchmark LINEMOD dataset and the Occlusion LINEMOD dataset, respectively, for an NVIDIA Jetson AGX Xavier, while consistently maintaining high estimation accuracy at these rates.

Toward Automated Formation of Composite Micro-Structures Using Holographic Optical Tweezers

Apr 25, 2024

Abstract:Holographic Optical Tweezers (HOT) are powerful tools that can manipulate micro and nano-scale objects with high accuracy and precision. They are most commonly used for biological applications, such as cellular studies, and more recently, micro-structure assemblies. Automation has been of significant interest in the HOT field, since human-run experiments are time-consuming and require skilled operator(s). Automated HOTs, however, commonly use point traps, which focus high intensity laser light at specific spots in fluid media to attract and move micro-objects. In this paper, we develop a novel automated system of tweezing multiple micro-objects more efficiently using multiplexed optical traps. Multiplexed traps enable the simultaneous trapping of multiple beads in various alternate multiplexing formations, such as annular rings and line patterns. Our automated system is realized by augmenting the capabilities of a commercially available HOT with real-time bead detection and tracking, and wavefront-based path planning. We demonstrate the usefulness of the system by assembling two different composite micro-structures, comprising 5 $\mu m$ polystyrene beads, using both annular and line shaped traps in obstacle-rich environments.

Data-Driven Ergonomic Risk Assessment of Complex Hand-intensive Manufacturing Processes

Mar 05, 2024

Abstract:Hand-intensive manufacturing processes, such as composite layup and textile draping, require significant human dexterity to accommodate task complexity. These strenuous hand motions often lead to musculoskeletal disorders and rehabilitation surgeries. We develop a data-driven ergonomic risk assessment system with a special focus on hand and finger activity to better identify and address ergonomic issues related to hand-intensive manufacturing processes. The system comprises a multi-modal sensor testbed to collect and synchronize operator upper body pose, hand pose and applied forces; a Biometric Assessment of Complete Hand (BACH) formulation to measure high-fidelity hand and finger risks; and industry-standard risk scores associated with upper body posture, RULA, and hand activity, HAL. Our findings demonstrate that BACH captures injurious activity with a higher granularity in comparison to the existing metrics. Machine learning models are also used to automate RULA and HAL scoring, and generalize well to unseen participants. Our assessment system, therefore, provides ergonomic interpretability of the manufacturing processes studied, and could be used to mitigate risks through minor workplace optimization and posture corrections.

Active Anomaly Detection in Confined Spaces Using Ergodic Traversal of Directed Region Graphs

Oct 01, 2023

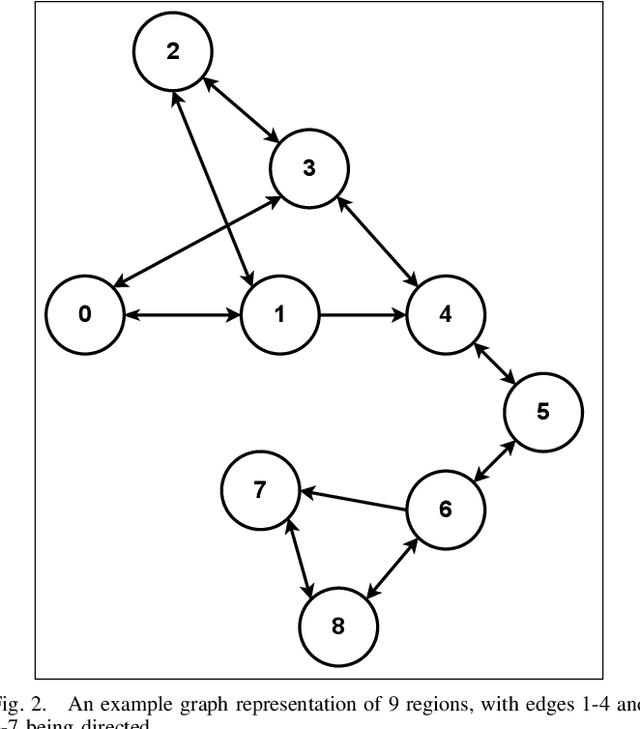

Abstract:We provide the first step toward developing a hierarchical control-estimation framework to actively plan robot trajectories for anomaly detection in confined spaces. The space is represented globally using a directed region graph, where a region is a landmark that needs to be visited (inspected). We devise a fast mixing Markov chain to find an ergodic route that traverses this graph so that the region visitation frequency is proportional to its anomaly detection uncertainty, while satisfying the edge directionality (region transition) constraint(s). Preliminary simulation results show fast convergence to the ergodic solution and confident estimation of the presence of anomalies in the inspected regions.
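The idea of a Markov chain whose long-run region visitation frequency is proportional to a target weight can be illustrated with a small sketch. The code below is not the paper's method: it swaps in a standard Metropolis-Hastings construction on an undirected graph (the abstract concerns directed region graphs and a fast mixing chain), and the function name `metropolis_chain` and the example weights are assumptions made for illustration.

```python
import numpy as np


def metropolis_chain(adjacency, target):
    """Build a transition matrix whose stationary distribution matches `target`.

    Assumptions (not from the source): `adjacency` is a symmetric 0/1 matrix
    of a connected undirected graph without self-loops, and `target` holds
    strictly positive weights, e.g. per-region detection uncertainty.
    """
    adjacency = np.asarray(adjacency, dtype=bool)
    pi = np.asarray(target, dtype=float)
    pi = pi / pi.sum()  # normalize weights into a probability distribution
    n = len(pi)
    degree = adjacency.sum(axis=1)

    P = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            if i != j and adjacency[i, j]:
                # Propose a uniformly random neighbour, then accept with the
                # Metropolis-Hastings ratio so that detailed balance holds.
                accept = min(1.0, (pi[j] * degree[i]) / (pi[i] * degree[j]))
                P[i, j] = accept / degree[i]
        # Rejected proposals keep the walker in place.
        P[i, i] = 1.0 - P[i].sum()
    return P, pi


if __name__ == "__main__":
    # Four regions in a line; later regions are assumed more uncertain.
    path = np.array([[0, 1, 0, 0],
                     [1, 0, 1, 0],
                     [0, 1, 0, 1],
                     [0, 0, 1, 0]])
    P, pi = metropolis_chain(path, [0.1, 0.2, 0.3, 0.4])
    print(np.round(P, 3))
    print(np.round(pi @ P, 3))  # equals pi when pi is stationary
```

A Metropolis-Hastings chain guarantees the desired visitation frequencies but is generally not the fastest to converge, which is the gap that optimized constructions such as fastest mixing chains are meant to address.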

Human-Inspired Topological Representations for Visual Object Recognition in Unseen Environments

Sep 15, 2023

Abstract:Visual object recognition in unseen and cluttered indoor environments is a challenging problem for mobile robots. Toward this goal, we extend our previous work to propose the TOPS2 descriptor, and an accompanying recognition framework, THOR2, inspired by a human reasoning mechanism known as object unity. We interleave color embeddings obtained using the Mapper algorithm for topological soft clustering with the shape-based TOPS descriptor to obtain the TOPS2 descriptor. THOR2, trained using synthetic data, achieves substantially higher recognition accuracy than the shape-based THOR framework and outperforms RGB-D ViT on two real-world datasets: the benchmark OCID dataset and the UW-IS Occluded dataset. Therefore, THOR2 is a promising step toward achieving robust recognition in low-cost robots.

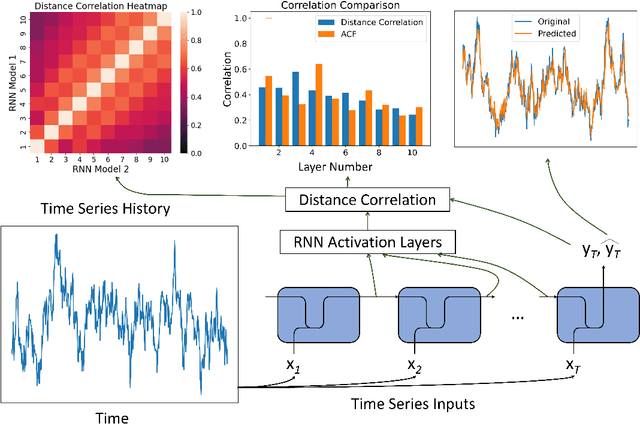

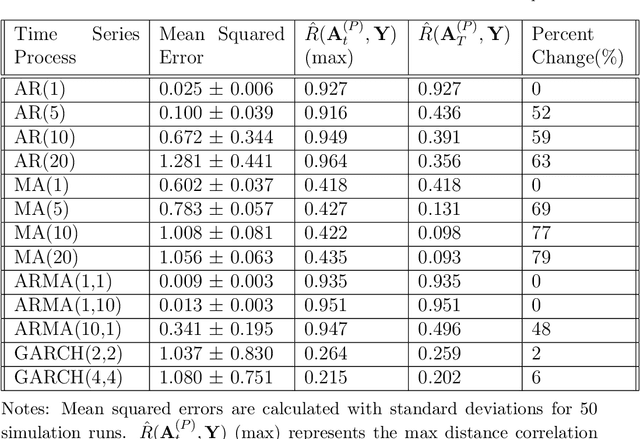

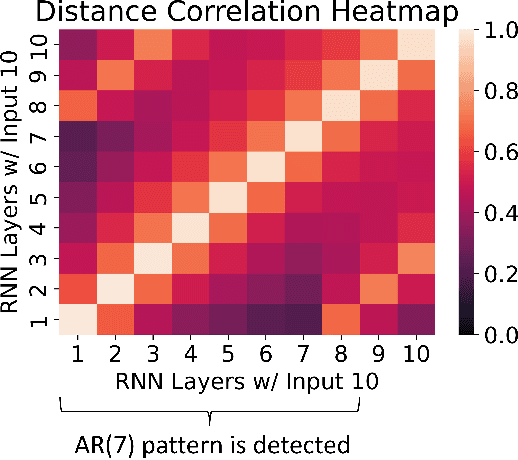

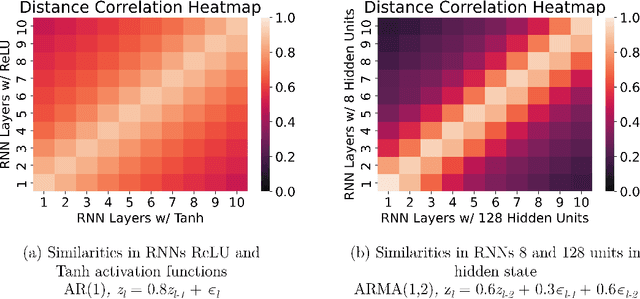

A Distance Correlation-Based Approach to Characterize the Effectiveness of Recurrent Neural Networks for Time Series Forecasting

Jul 28, 2023

Abstract:Time series forecasting has received a lot of attention with recurrent neural networks (RNNs) being one of the widely used models due to their ability to handle sequential data. Prior studies of RNNs for time series forecasting yield inconsistent results with limited insights as to why the performance varies for different datasets. In this paper, we provide an approach to link the characteristics of time series with the components of RNNs via the versatile metric of distance correlation. This metric allows us to examine the information flow through the RNN activation layers to be able to interpret and explain their performance. We empirically show that the RNN activation layers learn the lag structures of time series well. However, they gradually lose this information over a span of a few consecutive layers, thereby worsening the forecast quality for series with large lag structures. We also show that the activation layers cannot adequately model moving average and heteroskedastic time series processes. Last, we generate heatmaps for visual comparisons of the activation layers for different choices of the network hyperparameters to identify which of them affect the forecast performance. Our findings can, therefore, aid practitioners in assessing the effectiveness of RNNs for given time series data without actually training and evaluating the networks.
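Distance correlation, the metric the abstract above relies on, can be computed from pairwise distance matrices. The following is a minimal sketch of the standard sample distance correlation, not the authors' code; the function name `distance_correlation` and the toy inputs are illustrative assumptions.

```python
import numpy as np


def distance_correlation(x, y):
    """Sample distance correlation between two paired samples.

    Inputs are arrays with one observation per row (1-D arrays are treated as
    n observations of a scalar). Returns a value in [0, 1].
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.ndim == 1:
        x = x[:, None]
    if y.ndim == 1:
        y = y[:, None]
    if x.shape[0] != y.shape[0]:
        raise ValueError("x and y must have the same number of observations")

    # Pairwise Euclidean distance matrices within each sample.
    a = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=-1)
    b = np.linalg.norm(y[:, None, :] - y[None, :, :], axis=-1)

    # Double centering: subtract row and column means, add back the grand mean.
    A = a - a.mean(axis=0, keepdims=True) - a.mean(axis=1, keepdims=True) + a.mean()
    B = b - b.mean(axis=0, keepdims=True) - b.mean(axis=1, keepdims=True) + b.mean()

    dcov2 = (A * B).mean()   # squared distance covariance
    dvar_x = (A * A).mean()  # squared distance variance of x
    dvar_y = (B * B).mean()  # squared distance variance of y

    denom = np.sqrt(dvar_x * dvar_y)
    if denom == 0.0:
        return 0.0  # a constant sample carries no dependence information
    return float(np.sqrt(max(dcov2, 0.0) / denom))


if __name__ == "__main__":
    t = np.linspace(-1.0, 1.0, 51)
    print(distance_correlation(t, 2.0 * t + 1.0))  # linear dependence
    print(distance_correlation(t, t ** 2))         # nonlinear dependence
```

Unlike Pearson correlation, this quantity is sensitive to nonlinear dependence and accepts inputs of different dimensions, which makes it suitable for comparing a time series with higher-dimensional activation outputs.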

Persistent Homology Meets Object Unity: Object Recognition in Clutter

May 05, 2023

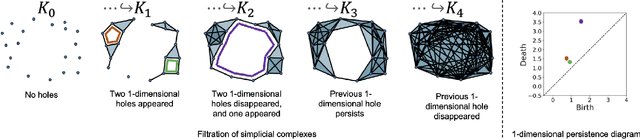

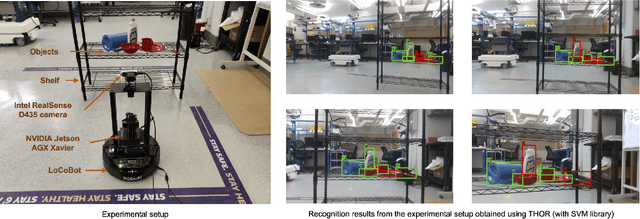

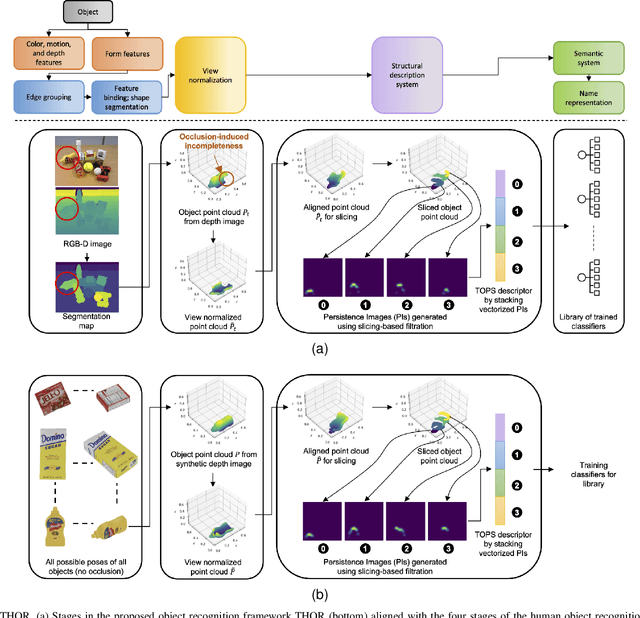

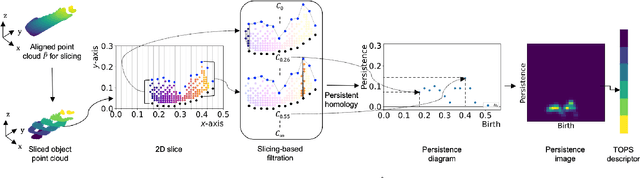

Abstract:Recognition of occluded objects in unseen and unstructured indoor environments is a challenging problem for mobile robots. To address this challenge, we propose a new descriptor, TOPS, for point clouds generated from depth images and an accompanying recognition framework, THOR, inspired by human reasoning. The descriptor employs a novel slicing-based approach to compute topological features from filtrations of simplicial complexes using persistent homology, and facilitates reasoning-based recognition using object unity. Apart from a benchmark dataset, we report performance on a new dataset, the UW Indoor Scenes (UW-IS) Occluded dataset, curated using commodity hardware to reflect real-world scenarios with different environmental conditions and degrees of object occlusion. THOR outperforms state-of-the-art methods on both datasets and achieves substantially higher recognition accuracy for all the scenarios of the UW-IS Occluded dataset. Therefore, THOR is a promising step toward robust recognition in low-cost robots meant for everyday use in indoor settings.
