Aritz D. Martinez

Evolutionary Multitask Optimization: a Methodological Overview, Challenges and Future Research Directions

Feb 04, 2021

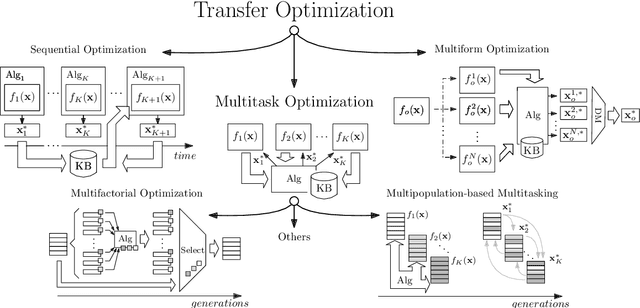

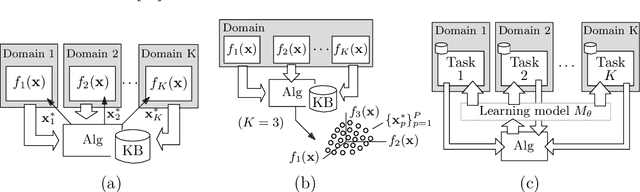

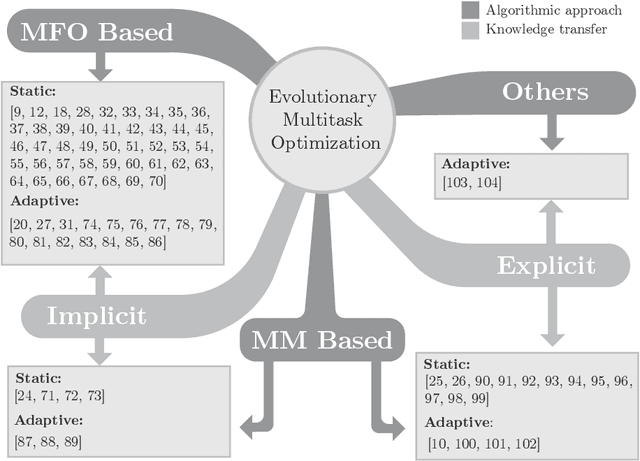

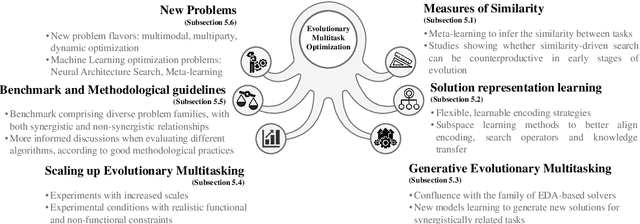

Abstract: In this work we consider multitasking in the context of solving multiple optimization problems simultaneously by conducting a single search process. The principal goal when dealing with this scenario is to dynamically exploit the existing complementarities among the problems (tasks) being optimized, so that they help each other through the exchange of valuable knowledge. Additionally, the emerging paradigm of Evolutionary Multitasking tackles multitask optimization scenarios by drawing inspiration from concepts in Evolutionary Computation. The main purpose of this survey is to collect, organize and critically examine the abundant literature published so far in Evolutionary Multitasking, with an emphasis on the methodological patterns followed when designing new algorithmic proposals in this area (namely, multifactorial optimization and multipopulation-based multitasking). We complement our critical analysis with an identification of challenges that remain open to date, along with promising research directions that can stimulate future efforts in this topic. The discussions held throughout this manuscript are offered to the audience as a reference of the general trajectory followed by the community working in this field in recent times, as well as a self-contained entry point for newcomers and researchers interested in joining this exciting research avenue.

AT-MFCGA: An Adaptive Transfer-guided Multifactorial Cellular Genetic Algorithm for Evolutionary Multitasking

Oct 08, 2020

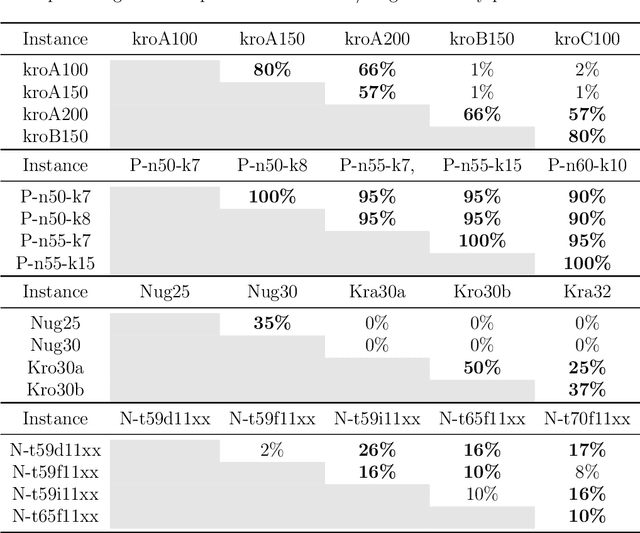

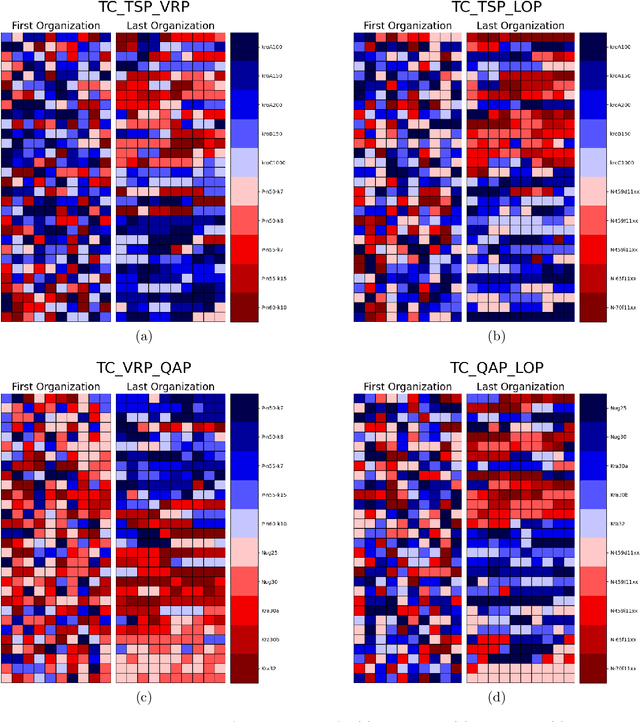

Abstract: Transfer Optimization is an incipient research area dedicated to the simultaneous solving of multiple optimization tasks. Among the different approaches that can address this problem effectively, Evolutionary Multitasking resorts to concepts from Evolutionary Computation to solve multiple problems within a single search process. In this paper we introduce a novel adaptive metaheuristic algorithm for dealing with Evolutionary Multitasking environments, coined the Adaptive Transfer-guided Multifactorial Cellular Genetic Algorithm (AT-MFCGA). AT-MFCGA relies on cellular automata to implement mechanisms for exchanging knowledge among the optimization problems under consideration. Furthermore, our approach is able to explain by itself the synergies among tasks that were encountered and exploited during the search, which helps understand interactions between related optimization tasks. A comprehensive experimental setup is designed for assessing and comparing the performance of AT-MFCGA to that of other renowned evolutionary multitasking alternatives (MFEA and MFEA-II). Experiments comprise 11 multitasking scenarios composed of 20 instances of 4 combinatorial optimization problems, yielding the largest discrete multitasking environment solved to date. Results are conclusive with regard to the superior quality of solutions provided by AT-MFCGA with respect to the rest of the methods, and are complemented by a quantitative examination of the genetic transferability among tasks along the search process.

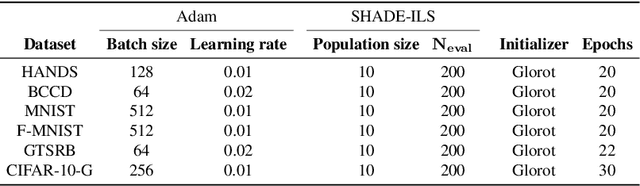

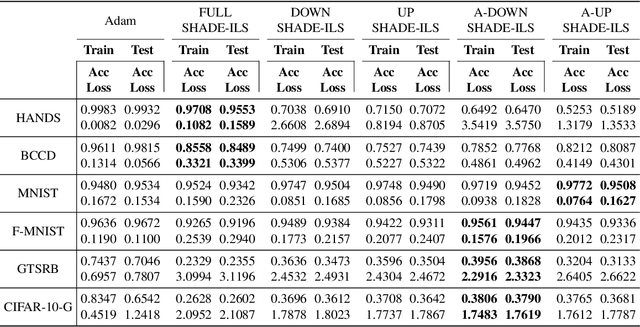

Lights and Shadows in Evolutionary Deep Learning: Taxonomy, Critical Methodological Analysis, Cases of Study, Learned Lessons, Recommendations and Challenges

Aug 09, 2020

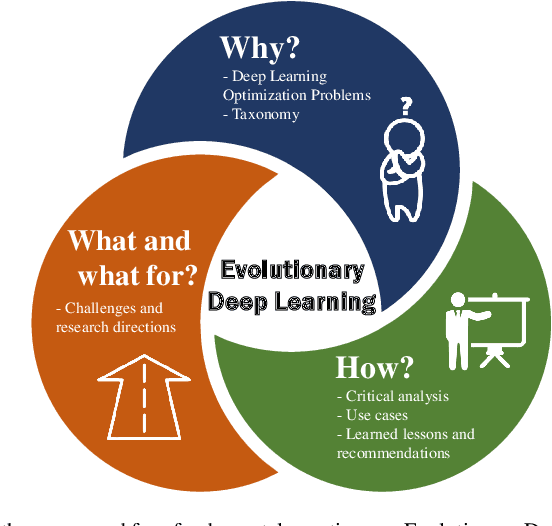

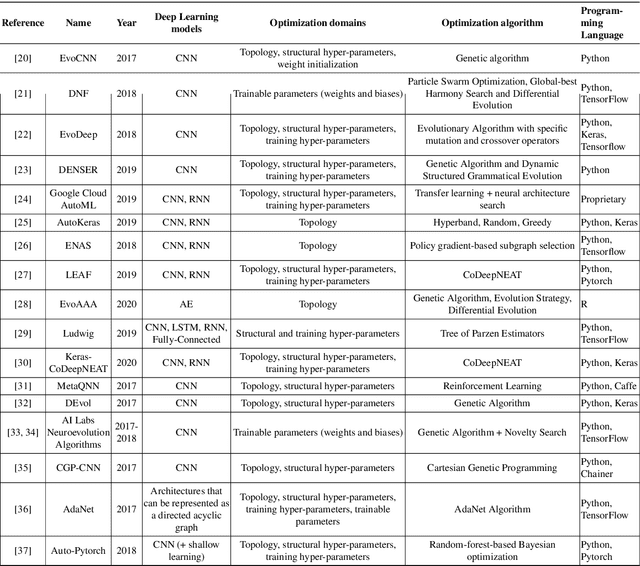

Abstract: Much has been said about the fusion of bio-inspired optimization algorithms and Deep Learning models for several purposes: from the discovery of network topologies and hyper-parametric configurations with improved performance for a given task, to the optimization of the model's parameters as a replacement for gradient-based solvers. Indeed, the literature is rich in proposals showcasing the application of assorted nature-inspired approaches for these tasks. In this work we comprehensively review and critically examine contributions made so far based on three axes, each addressing a fundamental question in this research avenue: a) optimization and taxonomy (Why?), including a historical perspective, definitions of optimization problems in Deep Learning, and a taxonomy associated with an in-depth analysis of the literature, b) critical methodological analysis (How?), which, together with two case studies, allows us to address learned lessons and recommendations for good practices following the analysis of the literature, and c) challenges and new directions of research (What can be done, and what for?). In summary, these three axes (optimization and taxonomy, critical analysis, and challenges) outline a complete vision of the merger of two technologies, drawing up an exciting future for this area of fusion research.

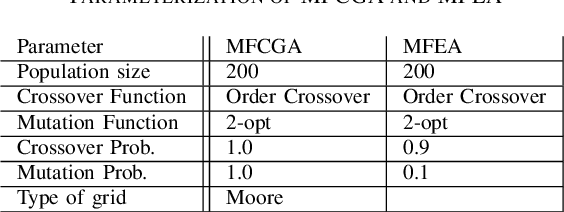

On the Transferability of Knowledge among Vehicle Routing Problems by using Cellular Evolutionary Multitasking

May 17, 2020

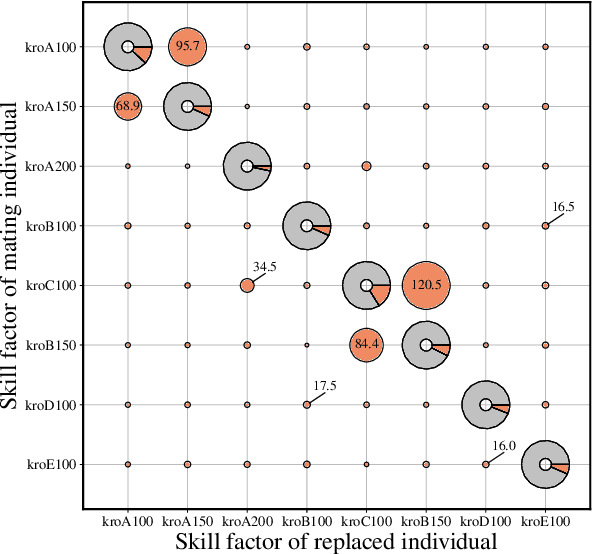

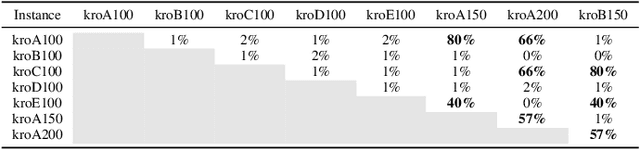

Abstract: Multitasking optimization is a recently introduced paradigm, focused on the simultaneous solving of multiple optimization problem instances (tasks). The goal of multitasking environments is to dynamically exploit existing complementarities and synergies among tasks, so that they help each other through the transfer of genetic material. More concretely, Evolutionary Multitasking (EM) refers to the resolution of multitasking scenarios using concepts inherited from Evolutionary Computation. EM approaches such as the well-known Multifactorial Evolutionary Algorithm (MFEA) have lately been gaining notable research momentum when facing multiple optimization problems. This work is focused on the application of the recently proposed Multifactorial Cellular Genetic Algorithm (MFCGA) to the well-known Capacitated Vehicle Routing Problem (CVRP). Overall, 11 different multitasking setups have been built using 12 datasets. The contribution of this research is twofold. On the one hand, it is the first application of the MFCGA to the Vehicle Routing Problem family of problems. On the other hand, equally interesting is the second contribution, which is focused on the quantitative analysis of the positive genetic transferability among the problem instances. To this end, we provide an empirical demonstration of the synergies arising between the different optimization tasks.

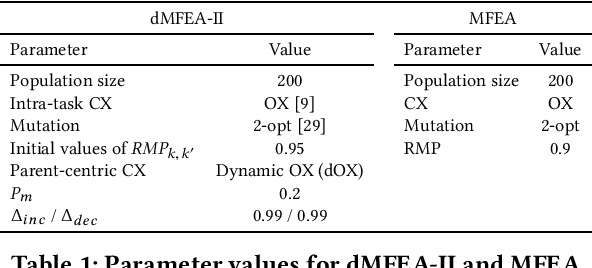

dMFEA-II: An Adaptive Multifactorial Evolutionary Algorithm for Permutation-based Discrete Optimization Problems

May 13, 2020

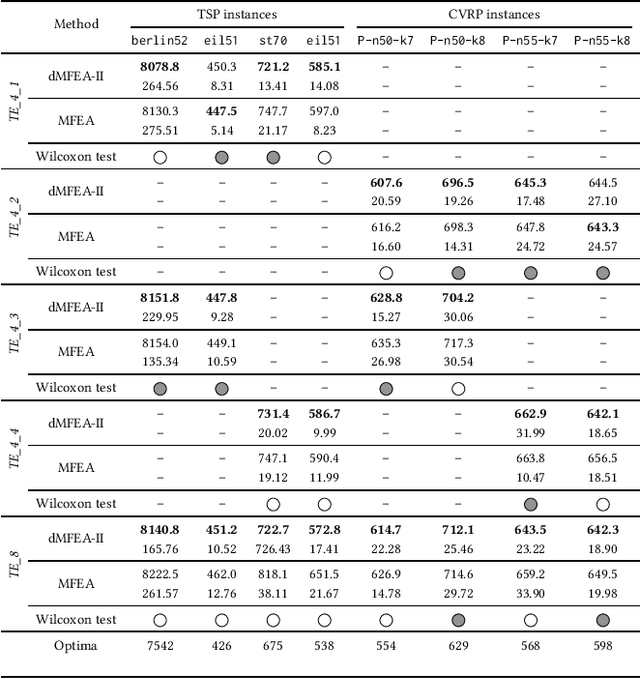

Abstract: The emerging research paradigm coined as multitasking optimization aims to solve multiple optimization tasks concurrently by means of a single search process. For this purpose, the exploitation of complementarities among the tasks to be solved is crucial, which is often achieved via the transfer of genetic material, thereby forging the Transfer Optimization field. In this context, Evolutionary Multitasking addresses this paradigm by resorting to concepts from Evolutionary Computation. Within this specific branch, approaches such as the Multifactorial Evolutionary Algorithm (MFEA) have lately gained notable momentum when tackling multiple optimization tasks. This work contributes to this trend by proposing the first adaptation of the recently introduced Multifactorial Evolutionary Algorithm II (MFEA-II) to permutation-based discrete optimization environments. For modeling this adaptation, some concepts cannot be directly applied to discrete search spaces, such as parent-centric interactions. In this paper we entirely reformulate such concepts, making them suited to deal with permutation-based search spaces without losing the inherent benefits of MFEA-II. The performance of the proposed solver has been assessed over 5 different multitasking setups, composed of 8 datasets of the well-known Traveling Salesman (TSP) and Capacitated Vehicle Routing Problems (CVRP). The obtained results and their comparison to those of the discrete version of the MFEA confirm the good performance of the developed dMFEA-II, and concur with the insights drawn in previous studies for continuous optimization.

Multifactorial Cellular Genetic Algorithm (MFCGA): Algorithmic Design, Performance Comparison and Genetic Transferability Analysis

Mar 24, 2020

Abstract: Multitasking optimization is an incipient research area which is lately gaining notable research momentum. Unlike the traditional optimization paradigm, which focuses on solving a single task at a time, multitasking addresses how multiple optimization problems can be tackled simultaneously by performing a single search process. The main objective to achieve this goal efficiently is to exploit synergies between the problems (tasks) to be optimized, so that they help each other via knowledge transfer (thereby being referred to as Transfer Optimization). Furthermore, the equally recent concept of Evolutionary Multitasking (EM) refers to multitasking environments adopting concepts from Evolutionary Computation as their inspiration for the simultaneous solving of the problems under consideration. As such, EM approaches such as the Multifactorial Evolutionary Algorithm (MFEA) have shown remarkable success when dealing with multiple discrete, continuous, single-, and/or multi-objective optimization problems. In this work we propose a novel algorithmic scheme for Multifactorial Optimization scenarios - the Multifactorial Cellular Genetic Algorithm (MFCGA) - that hinges on concepts from Cellular Automata to implement mechanisms for exchanging knowledge among problems. We conduct an extensive performance analysis of the proposed MFCGA and compare it to the canonical MFEA under the same algorithmic conditions and over 15 different multitasking setups (encompassing different reference instances of the discrete Traveling Salesman Problem). A further contribution of this analysis beyond performance benchmarking is a quantitative examination of the genetic transferability among the problem instances, eliciting an empirical demonstration of the synergies that emerged between the different optimization tasks along the MFCGA search process.

Simultaneously Evolving Deep Reinforcement Learning Models using Multifactorial Optimization

Mar 23, 2020

Abstract: In recent years, Multifactorial Optimization (MFO) has gained notable momentum in the research community. MFO is known for its inherent capability to efficiently address multiple optimization tasks at the same time, while transferring information among such tasks to improve their convergence speed. On the other hand, the quantum leap made by Deep Q Learning (DQL) in the Machine Learning field has made it possible to face Reinforcement Learning (RL) problems of unprecedented complexity. Unfortunately, complex DQL models usually find it difficult to converge to optimal policies due to the lack of exploration or sparse rewards. In order to overcome these drawbacks, pre-trained models are widely harnessed via Transfer Learning, extrapolating knowledge acquired in a source task to the target task. Besides, meta-heuristic optimization has been shown to reduce the lack of exploration of DQL models. This work proposes an MFO framework capable of simultaneously evolving several DQL models towards solving interrelated RL tasks. Specifically, our proposed framework blends together the benefits of meta-heuristic optimization, Transfer Learning and DQL to automate the process of knowledge transfer and policy learning of distributed RL agents. Thorough experimentation is presented and discussed so as to assess the performance of the framework, its comparison to the traditional methodology for Transfer Learning in terms of convergence, speed and policy quality, and the intertask relationships found and exploited over the search process.
