Ariel Schwartzman

SLAC National Accelerator Laboratory, Menlo Park, CA, USA

Novel Light Field Imaging Device with Enhanced Light Collection for Cold Atom Clouds

May 23, 2022

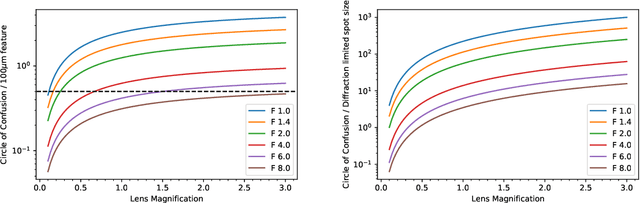

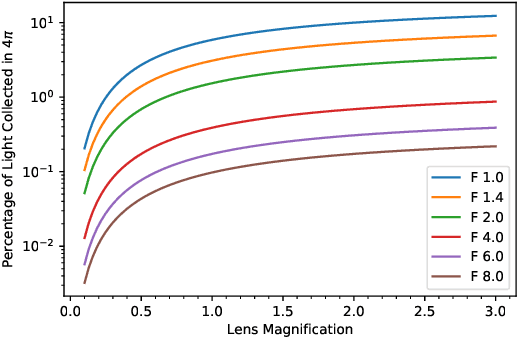

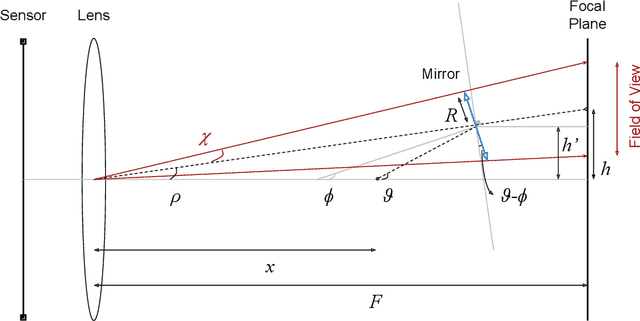

Abstract: We present a light field imaging system that captures multiple views of an object with a single shot. The system is designed to maximize the total light collection by accepting a larger solid angle of light than a conventional lens with equivalent depth of field. This is achieved by populating a plane of virtual objects using mirrors and fully utilizing the available field of view and depth of field. Simulation results demonstrate that this design is capable of single-shot tomography of objects of size $\mathcal{O}$(1 mm$^3$), reconstructing the 3-dimensional (3D) distribution and features not accessible from any single view angle in isolation. In particular, for atom clouds used in atom interferometry experiments, the system can reconstruct 3D fringe patterns with size $\mathcal{O}$(100 $\mu$m). We also demonstrate this system with a 3D-printed prototype. The prototype is used to take images of $\mathcal{O}$(1 mm$^{3}$) sized objects, and 3D reconstruction algorithms running on a single-shot image successfully reconstruct $\mathcal{O}$(100 $\mu$m) internal features. The prototype also shows that the system can be built with 3D printing technology and hence can be deployed quickly and cost-effectively in experiments with needs for enhanced light collection or 3D reconstruction. Imaging of cold atom clouds in atom interferometry is a key application of this new type of imaging device where enhanced light collection, high depth of field, and 3D tomographic reconstruction can provide new handles to characterize the atom clouds.

Machine Learning in High Energy Physics Community White Paper

Jul 08, 2018

Abstract: Machine learning is an important research area in particle physics, beginning with applications to high-level physics analysis in the 1990s and 2000s, followed by an explosion of applications in particle and event identification and reconstruction in the 2010s. In this document we discuss promising future research and development areas in machine learning in particle physics with a roadmap for their implementation, software and hardware resource requirements, collaborative initiatives with the data science community, academia and industry, and training the particle physics community in data science. The main objective of the document is to connect and motivate these areas of research and development with the physics drivers of the High-Luminosity Large Hadron Collider and future neutrino experiments and identify the resource needs for their implementation. Additionally we identify areas where collaboration with external communities will be of great benefit.

Weakly Supervised Classification in High Energy Physics

Jul 03, 2017

Abstract: As machine learning algorithms become increasingly sophisticated to exploit subtle features of the data, they often become more dependent on simulations. This paper presents a new approach called weakly supervised classification in which class proportions are the only input into the machine learning algorithm. Using one of the most challenging binary classification tasks in high energy physics - quark versus gluon tagging - we show that weakly supervised classification can match the performance of fully supervised algorithms. Furthermore, by design, the new algorithm is insensitive to any mis-modeling of discriminating features in the data by the simulation. Weakly supervised classification is a general procedure that can be applied to a wide variety of learning problems to boost performance and robustness when detailed simulations are not reliable or not available.

* 8 pages, 4 figures
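The idea of training from class proportions alone can be illustrated with a minimal sketch. This is not the paper's implementation: the toy 1D Gaussian features, the logistic model, the squared-difference loss, and every function name below are assumptions chosen for illustration. The classifier never sees per-event labels during training, only the signal fraction of each batch.

```python
import math
import random


def sigmoid(z):
    # Numerically safe logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def make_batch(n, signal_fraction, rng):
    """Toy 1D sample (assumption): signal ~ N(+1, 1), background ~ N(-1, 1)."""
    n_sig = round(n * signal_fraction)
    labels = [1] * n_sig + [0] * (n - n_sig)
    xs = [rng.gauss(1.0 if y else -1.0, 1.0) for y in labels]
    return xs, labels


def train_from_proportions(batches, fractions, steps=300, lr=1.0):
    """Fit p(x) = sigmoid(w*x + b) so that the mean prediction in each
    batch matches that batch's known signal fraction.

    Loss: sum over batches of (mean prediction - fraction)^2,
    minimised by plain gradient descent.
    """
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w = 0.0
        grad_b = 0.0
        for xs, frac in zip(batches, fractions):
            n = len(xs)
            ps = [sigmoid(w * x + b) for x in xs]
            residual = sum(ps) / n - frac
            grad_w += 2.0 * residual * sum(p * (1.0 - p) * x for p, x in zip(ps, xs)) / n
            grad_b += 2.0 * residual * sum(p * (1.0 - p) for p in ps) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def accuracy(w, b, xs, labels):
    # Per-event labels are used here only to evaluate, never to train.
    hits = sum((sigmoid(w * x + b) > 0.5) == bool(y) for x, y in zip(xs, labels))
    return hits / len(xs)
```

A typical use would build two batches with different known fractions (for example 0.2 and 0.8), pass only the features and the two fractions to `train_from_proportions`, and then check the result on a separately labeled sample with `accuracy`. The batches must differ in composition; with identical fractions the loss carries no information about which direction in feature space separates the classes.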

Jet-Images -- Deep Learning Edition

Jan 22, 2017

Abstract: Building on the notion of a particle physics detector as a camera and the collimated streams of high energy particles, or jets, it measures as an image, we investigate the potential of machine learning techniques based on deep learning architectures to identify highly boosted W bosons. Modern deep learning algorithms trained on jet images can out-perform standard physically-motivated feature driven approaches to jet tagging. We develop techniques for visualizing how these features are learned by the network and what additional information is used to improve performance. This interplay between physically-motivated feature driven tools and supervised learning algorithms is general and can be used to significantly increase the sensitivity to discover new particles and new forces, and gain a deeper understanding of the physics within jets.

* 32 pages, 24 figures. Version that is published in JHEP

Fuzzy Jets

Sep 07, 2015

Abstract: Collimated streams of particles produced in high energy physics experiments are organized using clustering algorithms to form jets. To construct jets, the experimental collaborations based at the Large Hadron Collider (LHC) primarily use agglomerative hierarchical clustering schemes known as sequential recombination. We propose a new class of algorithms for clustering jets that use infrared and collinear safe mixture models. These new algorithms, known as fuzzy jets, are clustered using maximum likelihood techniques and can dynamically determine various properties of jets like their size. We show that the fuzzy jet size adds additional information to conventional jet tagging variables. Furthermore, we study the impact of pileup and show that with some slight modifications to the algorithm, fuzzy jets can be stable up to high pileup interaction multiplicities.
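As a rough illustration of mixture-model jet clustering fitted by maximum likelihood, the sketch below runs expectation-maximization on a mixture of isotropic 2D Gaussians in the (eta, phi) plane. It is a sketch under stated assumptions, not the algorithm from the paper: the linear pT weighting of each particle in the update step, the isotropic Gaussian shape, the neglect of phi wrap-around, and all function names are choices made here for illustration. The fitted width `sigma` plays the role of a learned jet size.

```python
import math
import random


def toy_jet(center, sigma, n, rng):
    """Hypothetical toy jet: n particles (eta, phi, pt) scattered around center."""
    return [
        (rng.gauss(center[0], sigma), rng.gauss(center[1], sigma), rng.expovariate(1.0) + 0.1)
        for _ in range(n)
    ]


def fit_fuzzy_jets(particles, centers, sigma0=0.5, iters=50):
    """pT-weighted EM for a mixture of isotropic 2D Gaussians.

    particles: list of (eta, phi, pt); centers: initial (eta, phi) seeds.
    Returns one (eta, phi, sigma, weight) tuple per jet.
    Phi wrap-around is ignored in this sketch.
    """
    k = len(centers)
    mus = [tuple(c) for c in centers]
    sigmas = [sigma0] * k
    pis = [1.0 / k] * k
    total_pt = sum(p[2] for p in particles)
    for _ in range(iters):
        # E-step: soft membership of every particle in every jet,
        # computed in log space to avoid underflow far from all jets.
        resp = []
        for eta, phi, _pt in particles:
            logs = []
            for (m_eta, m_phi), s, pi in zip(mus, sigmas, pis):
                r2 = (eta - m_eta) ** 2 + (phi - m_phi) ** 2
                logs.append(math.log(pi) - math.log(2.0 * math.pi * s * s) - r2 / (2.0 * s * s))
            top = max(logs)
            ws = [math.exp(v - top) for v in logs]
            norm = sum(ws)
            resp.append([v / norm for v in ws])
        # M-step: update each jet using membership times pT as the weight.
        for j in range(k):
            wj = [q[j] * p[2] for q, p in zip(resp, particles)]
            wsum = sum(wj)
            if wsum <= 0.0:
                continue  # jet lost all support; keep its previous parameters
            m_eta = sum(w * p[0] for w, p in zip(wj, particles)) / wsum
            m_phi = sum(w * p[1] for w, p in zip(wj, particles)) / wsum
            var = sum(
                w * ((p[0] - m_eta) ** 2 + (p[1] - m_phi) ** 2) for w, p in zip(wj, particles)
            ) / (2.0 * wsum)
            mus[j] = (m_eta, m_phi)
            sigmas[j] = max(math.sqrt(var), 1e-3)  # floor keeps the density finite
            pis[j] = max(wsum / total_pt, 1e-12)   # floor keeps log(pi) defined
    return [(mus[j][0], mus[j][1], sigmas[j], pis[j]) for j in range(k)]
```

For example, fitting two seeds to a narrow toy jet and a wide toy jet generated with `toy_jet` should return a smaller `sigma` for the narrow one, which is the sense in which the jet size is determined dynamically by the fit. Unlike sequential recombination, every particle contributes to every jet with a fractional membership.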
