Maxime Vandegar

SLAC National Accelerator Laboratory, Menlo Park, CA, USA

Dynamic NeRFs for Soccer Scenes

Sep 13, 2023

Abstract: The long-standing problem of novel view synthesis has many applications, notably in sports broadcasting. Photorealistic novel view synthesis of soccer actions, in particular, is of enormous interest to the broadcast industry. Yet only a few industrial solutions have been proposed, and even fewer that achieve near-broadcast quality of the synthetic replays. Except for their setup of multiple static cameras around the playfield, the best proprietary systems disclose close to no information about their inner workings. Leveraging multiple static cameras for such a task indeed presents a challenge rarely tackled in the literature, for lack of public datasets: the reconstruction of a large-scale, mostly static environment, with small, fast-moving elements. Recently, the emergence of neural radiance fields has induced stunning progress in many novel view synthesis applications, leveraging deep learning principles to produce photorealistic results in the most challenging settings. In this work, we investigate the feasibility of basing a solution to the task on dynamic NeRFs, i.e., neural models purposed to reconstruct general dynamic content. We compose synthetic soccer environments and conduct multiple experiments using them, identifying key components that help reconstruct soccer scenes with dynamic NeRFs. We show that, although this approach cannot fully meet the quality requirements for the target application, it suggests promising avenues toward a cost-efficient, automatic solution. We also make our dataset and code publicly available, with the goal of encouraging further efforts from the research community on the task of novel view synthesis for dynamic soccer scenes. For code, data, and video results, please see https://soccernerfs.isach.be.

Novel Light Field Imaging Device with Enhanced Light Collection for Cold Atom Clouds

May 23, 2022

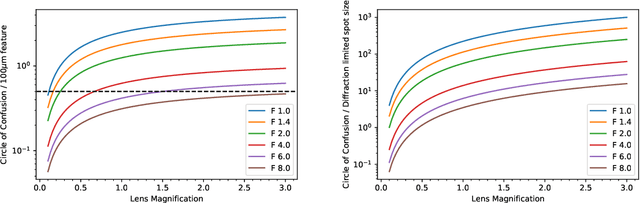

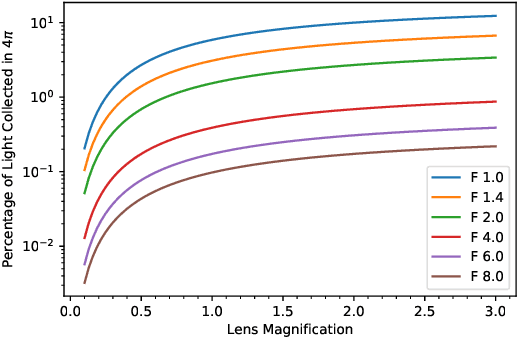

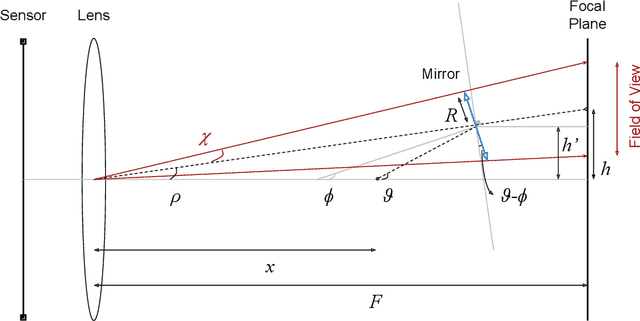

Abstract: We present a light field imaging system that captures multiple views of an object with a single shot. The system is designed to maximize the total light collection by accepting a larger solid angle of light than a conventional lens with equivalent depth of field. This is achieved by populating a plane of virtual objects using mirrors and fully utilizing the available field of view and depth of field. Simulation results demonstrate that this design is capable of single-shot tomography of objects of size $\mathcal{O}$(1 mm$^3$), reconstructing the 3-dimensional (3D) distribution and features not accessible from any single view angle in isolation. In particular, for atom clouds used in atom interferometry experiments, the system can reconstruct 3D fringe patterns with size $\mathcal{O}$(100 $\mu$m). We also demonstrate this system with a 3D-printed prototype. The prototype is used to take images of $\mathcal{O}$(1 mm$^{3}$) sized objects, and 3D reconstruction algorithms running on a single-shot image successfully reconstruct $\mathcal{O}$(100 $\mu$m) internal features. The prototype also shows that the system can be built with 3D printing technology and hence can be deployed quickly and cost-effectively in experiments that need enhanced light collection or 3D reconstruction. Imaging of cold atom clouds in atom interferometry is a key application of this new type of imaging device, where enhanced light collection, high depth of field, and 3D tomographic reconstruction can provide new handles to characterize the atom clouds.

Neural Empirical Bayes: Source Distribution Estimation and its Applications to Simulation-Based Inference

Nov 11, 2020

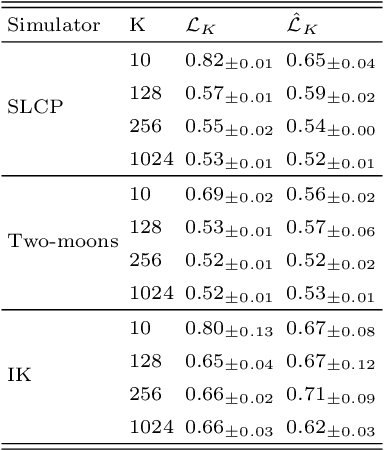

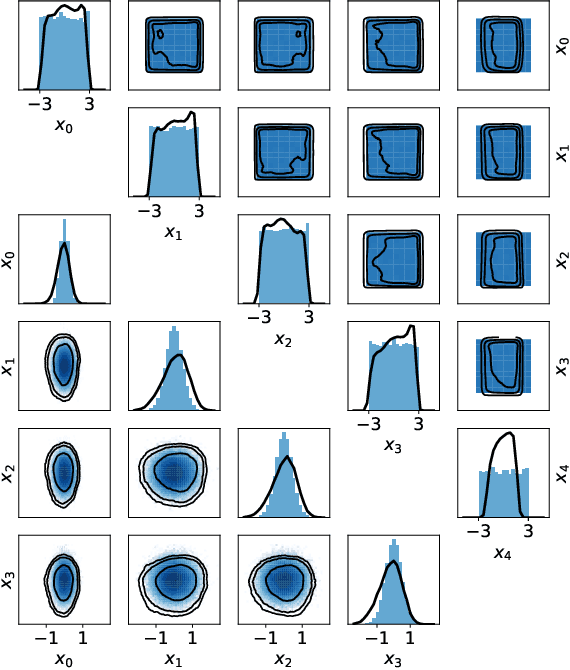

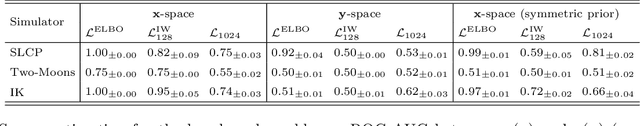

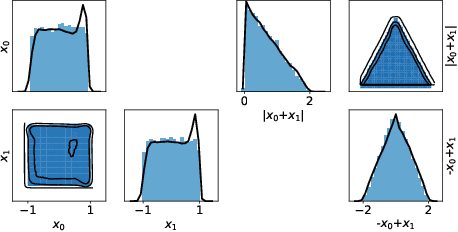

Abstract: We revisit empirical Bayes in the absence of a tractable likelihood function, as is typical in scientific domains relying on computer simulations. We investigate how the empirical Bayesian can use neural density estimators, first to estimate a prior or source distribution over uncorrupted samples from all noise-corrupted observations, and then to perform single-observation posterior inference using the fitted source distribution. We propose an approach based on the direct maximization of the log-marginal likelihood of the observations, examining both biased and de-biased estimators, and comparing to variational approaches. We find that, up to symmetries, a neural empirical Bayes approach recovers ground truth source distributions. With the learned source distribution in hand, we show the applicability to likelihood-free inference and examine the quality of the resulting posterior estimates. Finally, we demonstrate the applicability of Neural Empirical Bayes on an inverse problem from collider physics.