Ariana Strandburg-Peshkin

animal2vec and MeerKAT: A self-supervised transformer for rare-event raw audio input and a large-scale reference dataset for bioacoustics

Jun 03, 2024

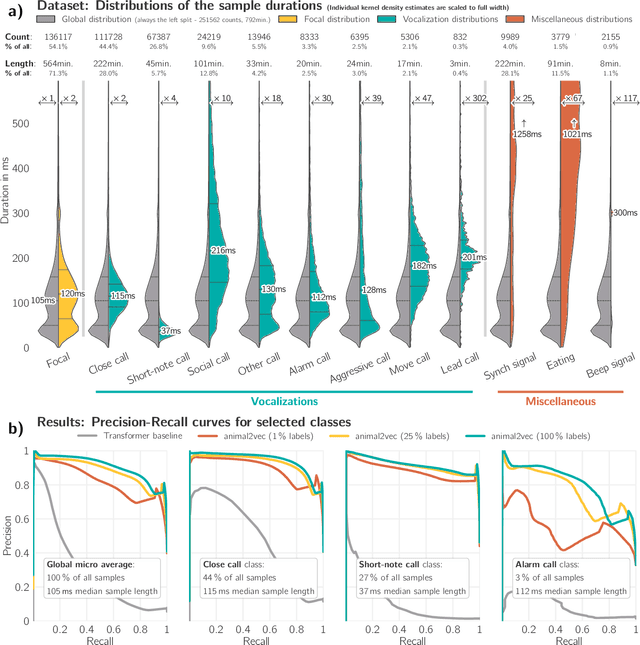

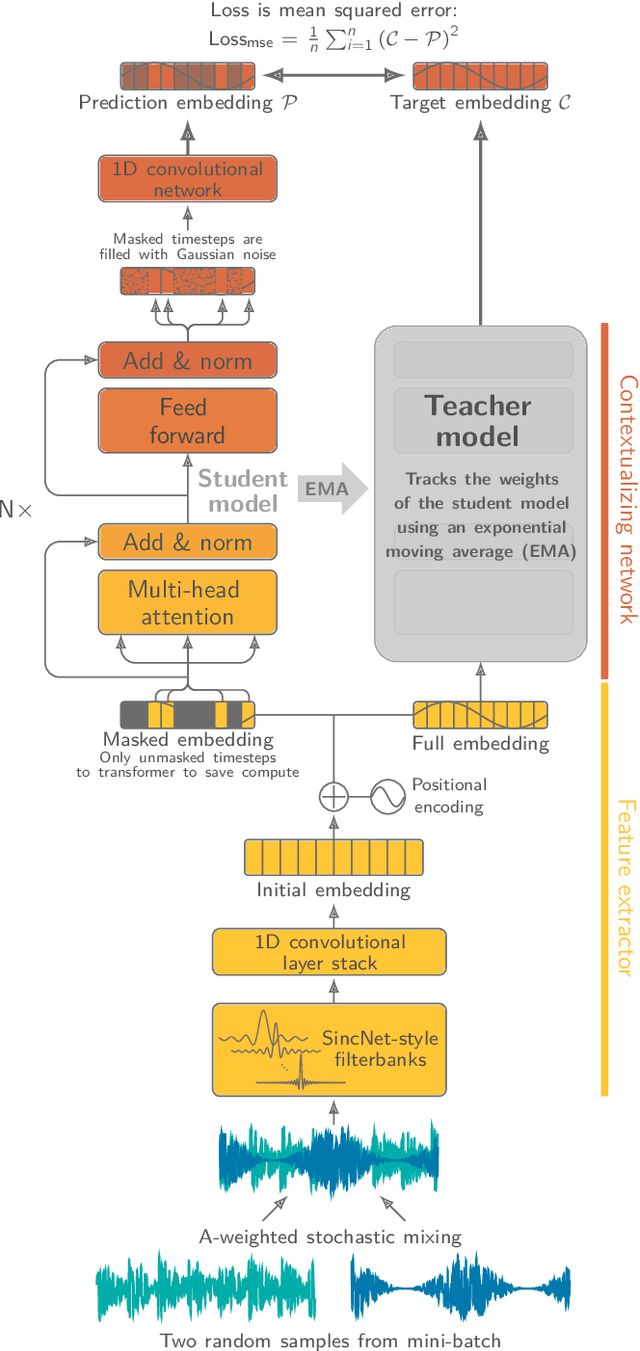

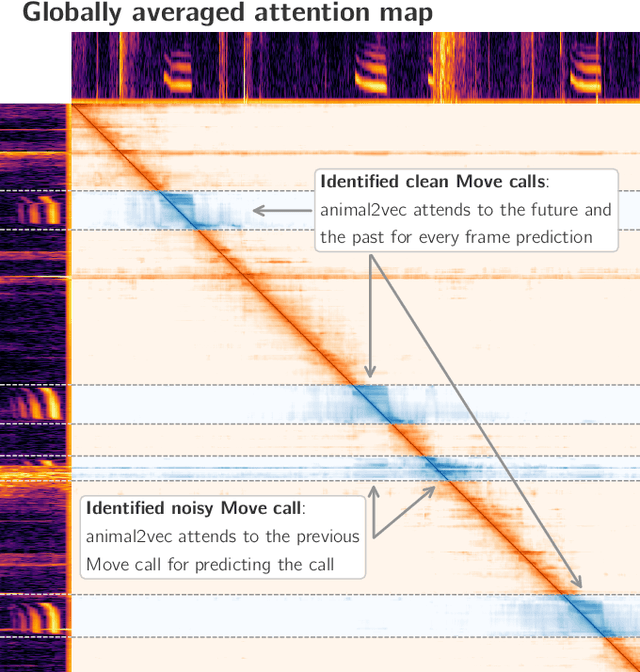

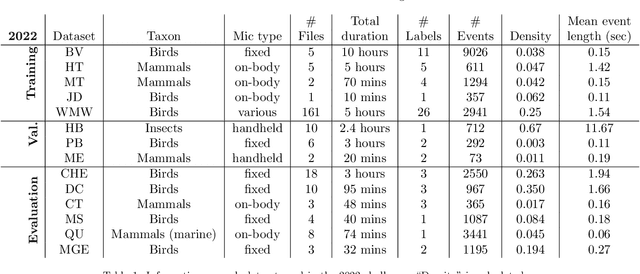

Abstract:Bioacoustic research provides invaluable insights into the behavior, ecology, and conservation of animals. Most bioacoustic datasets consist of long recordings where events of interest, such as vocalizations, are exceedingly rare. Analyzing these datasets poses a monumental challenge to researchers, where deep learning techniques have emerged as a standard method. Their adaptation remains challenging, focusing on models conceived for computer vision, where the audio waveforms are engineered into spectrographic representations for training and inference. We improve the current state of deep learning in bioacoustics in two ways: First, we present the animal2vec framework: a fully interpretable transformer model and self-supervised training scheme tailored for sparse and unbalanced bioacoustic data. Second, we openly publish MeerKAT: Meerkat Kalahari Audio Transcripts, a large-scale dataset containing audio collected via biologgers deployed on free-ranging meerkats with a length of over 1068h, of which 184h have twelve time-resolved vocalization-type classes, each with ms-resolution, making it the largest publicly-available labeled dataset on terrestrial mammals. Further, we benchmark animal2vec against the NIPS4Bplus birdsong dataset. We report new state-of-the-art results on both datasets and evaluate the few-shot capabilities of animal2vec of labeled training data. Finally, we perform ablation studies to highlight the differences between our architecture and a vanilla transformer baseline for human-produced sounds. animal2vec allows researchers to classify massive amounts of sparse bioacoustic data even with little ground truth information available. In addition, the MeerKAT dataset is the first large-scale, millisecond-resolution corpus for benchmarking bioacoustic models in the pretrain/finetune paradigm. We believe this sets the stage for a new reference point for bioacoustics.

Few-shot bioacoustic event detection at the DCASE 2023 challenge

Jun 15, 2023

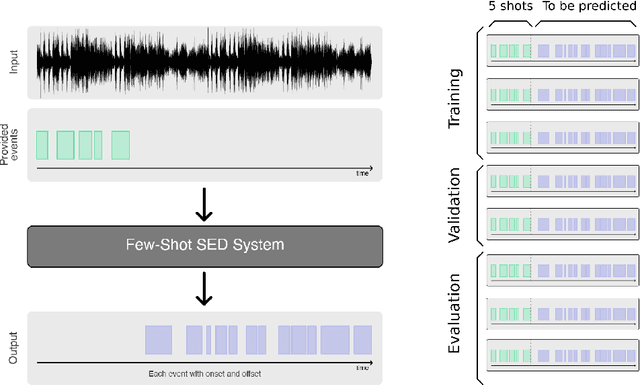

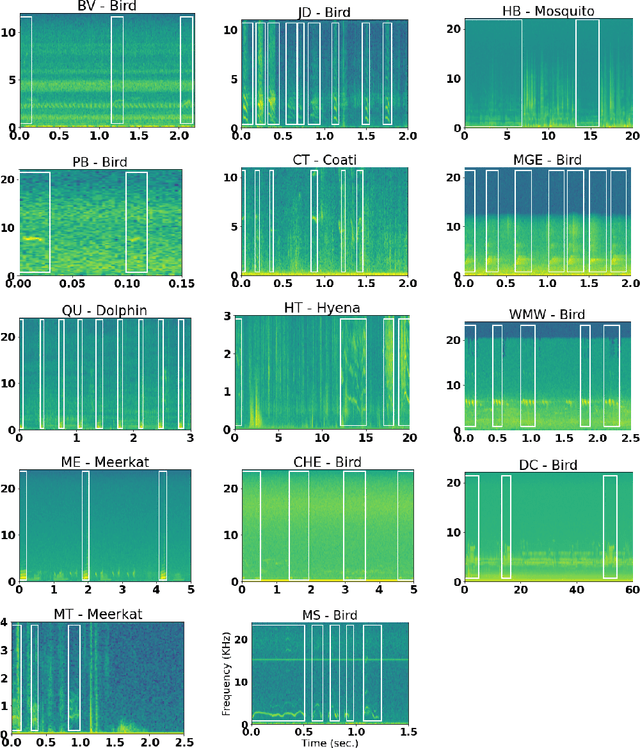

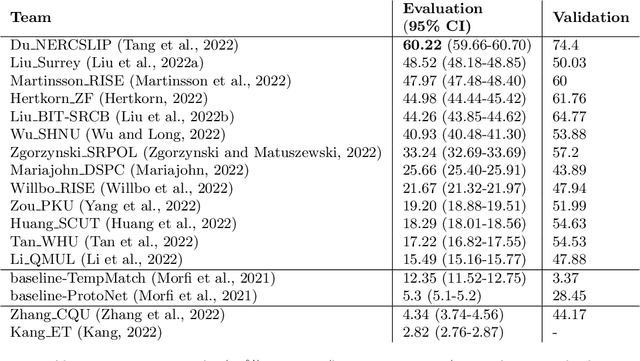

Abstract:Few-shot bioacoustic event detection consists in detecting sound events of specified types, in varying soundscapes, while having access to only a few examples of the class of interest. This task ran as part of the DCASE challenge for the third time this year with an evaluation set expanded to include new animal species, and a new rule: ensemble models were no longer allowed. The 2023 few shot task received submissions from 6 different teams with F-scores reaching as high as 63% on the evaluation set. Here we describe the task, focusing on describing the elements that differed from previous years. We also take a look back at past editions to describe how the task has evolved. Not only have the F-score results steadily improved (40% to 60% to 63%), but the type of systems proposed have also become more complex. Sound event detection systems are no longer simple variations of the baselines provided: multiple few-shot learning methodologies are still strong contenders for the task.

Learning to detect an animal sound from five examples

May 22, 2023

Abstract:Automatic detection and classification of animal sounds has many applications in biodiversity monitoring and animal behaviour. In the past twenty years, the volume of digitised wildlife sound available has massively increased, and automatic classification through deep learning now shows strong results. However, bioacoustics is not a single task but a vast range of small-scale tasks (such as individual ID, call type, emotional indication) with wide variety in data characteristics, and most bioacoustic tasks do not come with strongly-labelled training data. The standard paradigm of supervised learning, focussed on a single large-scale dataset and/or a generic pre-trained algorithm, is insufficient. In this work we recast bioacoustic sound event detection within the AI framework of few-shot learning. We adapt this framework to sound event detection, such that a system can be given the annotated start/end times of as few as 5 events, and can then detect events in long-duration audio -- even when the sound category was not known at the time of algorithm training. We introduce a collection of open datasets designed to strongly test a system's ability to perform few-shot sound event detections, and we present the results of a public contest to address the task. We show that prototypical networks are a strong-performing method, when enhanced with adaptations for general characteristics of animal sounds. We demonstrate that widely-varying sound event durations are an important factor in performance, as well as non-stationarity, i.e. gradual changes in conditions throughout the duration of a recording. For fine-grained bioacoustic recognition tasks without massive annotated training data, our results demonstrate that few-shot sound event detection is a powerful new method, strongly outperforming traditional signal-processing detection methods in the fully automated scenario.

FLICA: A Framework for Leader Identification in Coordinated Activity

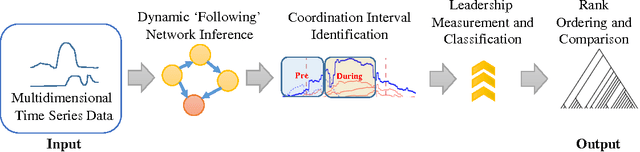

Mar 04, 2016

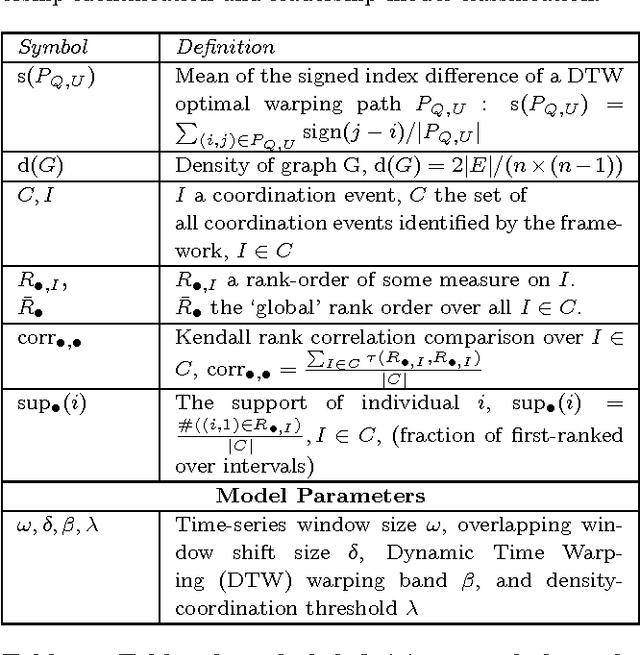

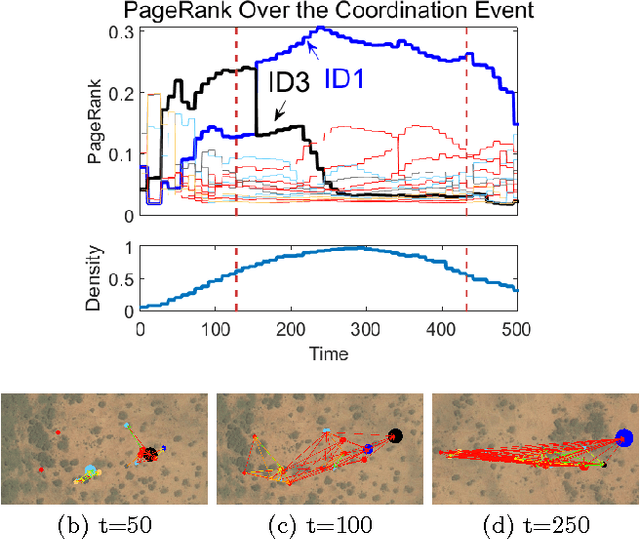

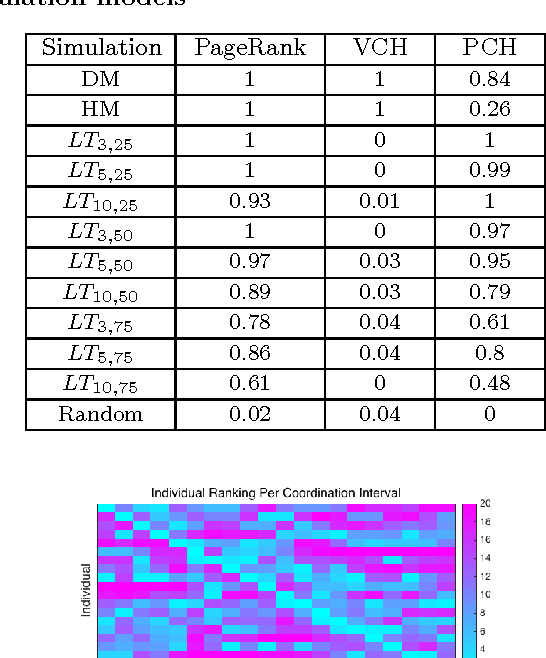

Abstract:Leadership is an important aspect of social organization that affects the processes of group formation, coordination, and decision-making in human societies, as well as in the social system of many other animal species. The ability to identify leaders based on their behavior and the subsequent reactions of others opens opportunities to explore how group decisions are made. Understanding who exerts influence provides key insights into the structure of social organizations. In this paper, we propose a simple yet powerful leadership inference framework extracting group coordination periods and determining leadership based on the activity of individuals within a group. We are able to not only identify a leader or leaders but also classify the type of leadership model that is consistent with observed patterns of group decision-making. The framework performs well in differentiating a variety of leadership models (e.g. dictatorship, linear hierarchy, or local influence). We propose five simple features that can be used to categorize characteristics of each leadership model, and thus make model classification possible. The proposed approach automatically (1) identifies periods of coordinated group activity, (2) determines the identities of leaders, and (3) classifies the likely mechanism by which the group coordination occurred. We demonstrate our framework on both simulated and real-world data: GPS tracks of a baboon troop and video-tracking of fish schools, as well as stock market closing price data of the NASDAQ index. The results of our leadership model are consistent with ground-truthed biological data and the framework finds many known events in financial data which are not otherwise reflected in the aggregate NASDAQ index. Our approach is easily generalizable to any coordinated activity data from interacting entities.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge