Ester Vidaña-Vila

Few-shot bioacoustic event detection at the DCASE 2023 challenge

Jun 15, 2023

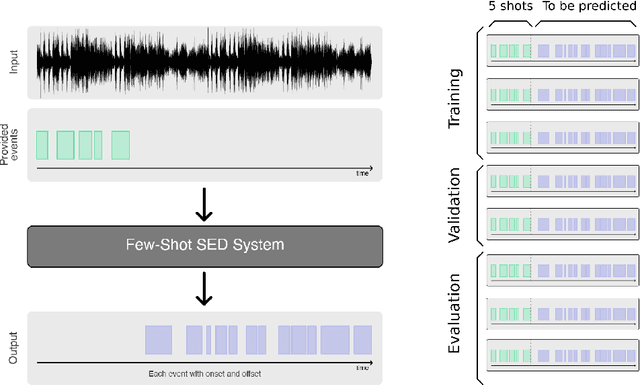

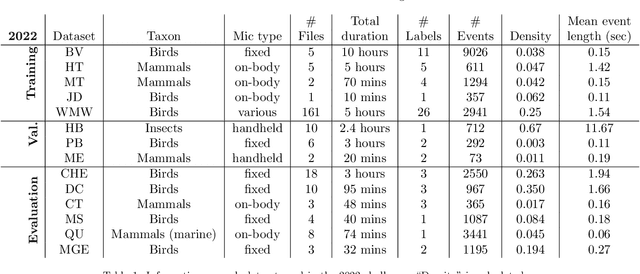

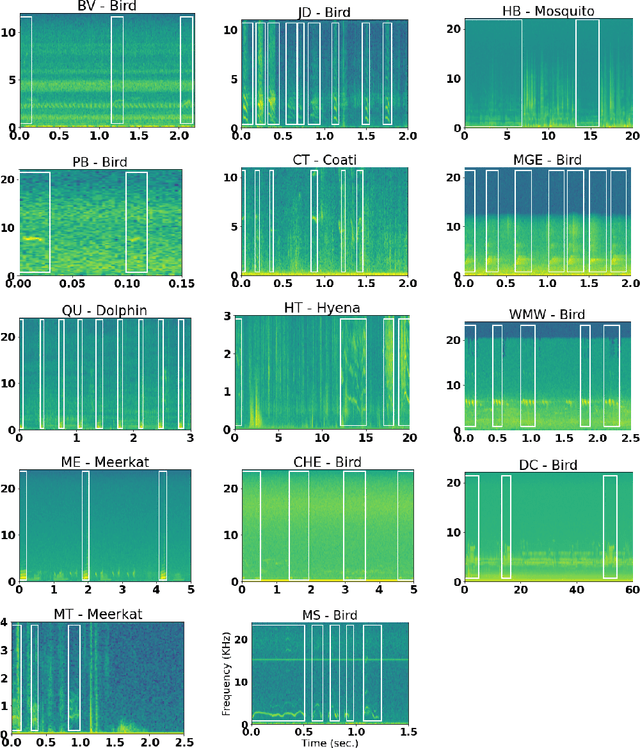

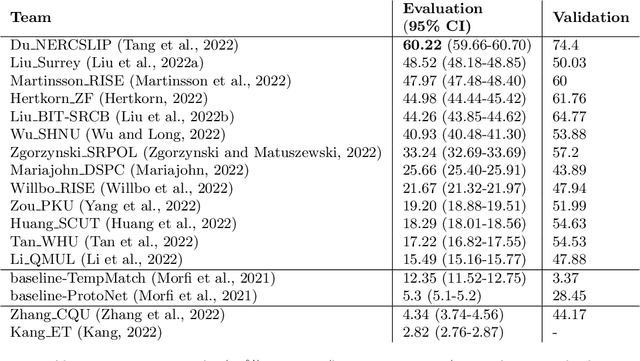

Abstract: Few-shot bioacoustic event detection consists of detecting sound events of specified types, in varying soundscapes, while having access to only a few examples of the class of interest. This task ran as part of the DCASE challenge for the third time this year with an evaluation set expanded to include new animal species, and a new rule: ensemble models were no longer allowed. The 2023 few-shot task received submissions from 6 different teams with F-scores reaching as high as 63% on the evaluation set. Here we describe the task, focusing on describing the elements that differed from previous years. We also take a look back at past editions to describe how the task has evolved. Not only have the F-score results steadily improved (40% to 60% to 63%), but the types of systems proposed have also become more complex. Sound event detection systems are no longer simple variations of the baselines provided: multiple few-shot learning methodologies are still strong contenders for the task.

Learning to detect an animal sound from five examples

May 22, 2023

Abstract: Automatic detection and classification of animal sounds has many applications in biodiversity monitoring and animal behaviour. In the past twenty years, the volume of digitised wildlife sound available has massively increased, and automatic classification through deep learning now shows strong results. However, bioacoustics is not a single task but a vast range of small-scale tasks (such as individual ID, call type, emotional indication) with wide variety in data characteristics, and most bioacoustic tasks do not come with strongly-labelled training data. The standard paradigm of supervised learning, focussed on a single large-scale dataset and/or a generic pre-trained algorithm, is insufficient. In this work we recast bioacoustic sound event detection within the AI framework of few-shot learning. We adapt this framework to sound event detection, such that a system can be given the annotated start/end times of as few as 5 events, and can then detect events in long-duration audio -- even when the sound category was not known at the time of algorithm training. We introduce a collection of open datasets designed to strongly test a system's ability to perform few-shot sound event detections, and we present the results of a public contest to address the task. We show that prototypical networks are a strong-performing method, when enhanced with adaptations for general characteristics of animal sounds. We demonstrate that widely-varying sound event durations are an important factor in performance, as well as non-stationarity, i.e. gradual changes in conditions throughout the duration of a recording. For fine-grained bioacoustic recognition tasks without massive annotated training data, our results demonstrate that few-shot sound event detection is a powerful new method, strongly outperforming traditional signal-processing detection methods in the fully automated scenario.
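As a rough illustration of the prototypical-network idea mentioned in the abstract above, the sketch below labels query frames by their distance to class prototypes built from five annotated examples. It is not the authors' system: the 2-D embeddings are toy values standing in for the output of a learned network, and the function names (`prototype`, `sq_dist`, `detect`) and the use of a negative prototype built from non-event frames are assumptions made for this example.

```python
from math import fsum


def prototype(embeddings):
    """Return the element-wise mean of equal-length embedding vectors."""
    n = len(embeddings)
    return [fsum(column) / n for column in zip(*embeddings)]


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return fsum((x - y) ** 2 for x, y in zip(a, b))


def detect(query_frames, positive_support, negative_support):
    """Mark each query frame True if it lies closer to the positive prototype."""
    pos = prototype(positive_support)
    neg = prototype(negative_support)
    return [sq_dist(q, pos) < sq_dist(q, neg) for q in query_frames]


# Toy embeddings (hypothetical): five annotated events and three background frames.
positive_support = [[1.0, 1.0], [1.2, 0.9], [0.8, 1.1], [1.1, 1.0], [0.9, 1.0]]
negative_support = [[0.0, 0.0], [0.1, -0.1], [-0.1, 0.1]]
query_frames = [[0.9, 1.0], [0.05, 0.0], [1.0, 0.8]]

labels = detect(query_frames, positive_support, negative_support)
print(labels)
```

In a real detector the frame-level decisions would still need to be merged into events with start and end times, a step this sketch leaves out.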

Album cover art image generation with Generative Adversarial Networks

Dec 09, 2022

Abstract: Generative Adversarial Networks (GANs) were introduced by Goodfellow in 2014, and since then have become popular for constructing generative artificial intelligence models. However, such networks have numerous drawbacks, including long training times, sensitivity to hyperparameter tuning, the many types of loss and optimization functions to choose from, and other difficulties such as mode collapse. Current applications of GANs include generating photo-realistic human faces, animals and objects. However, I wanted to explore the artistic ability of GANs in more detail, by using existing models and learning from them. This dissertation covers the basics of neural networks and works its way up to the particular aspects of GANs, together with experimentation and modification of existing available models, from least complex to most. The intention is to see if state-of-the-art GANs (specifically StyleGAN2) can generate album cover art and if it is possible to tailor the output by genre. This was attempted by first familiarizing myself with three existing GAN architectures, including the state-of-the-art StyleGAN2. The StyleGAN2 code was used to train a model on a dataset containing 80K album cover images, and the model was then used to style images by picking curated images and mixing their styles.

Western Mediterranean wetlands bird species classification: evaluating small-footprint deep learning approaches on a new annotated dataset

Jul 12, 2022

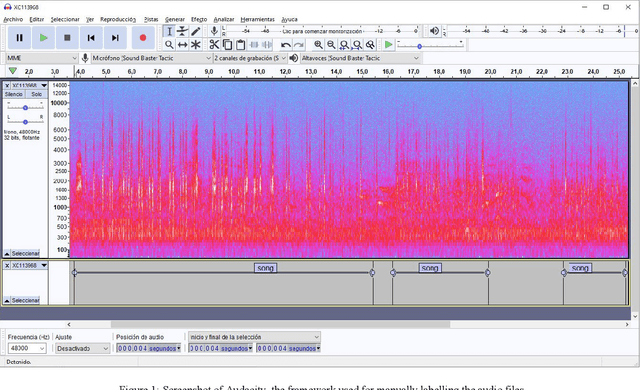

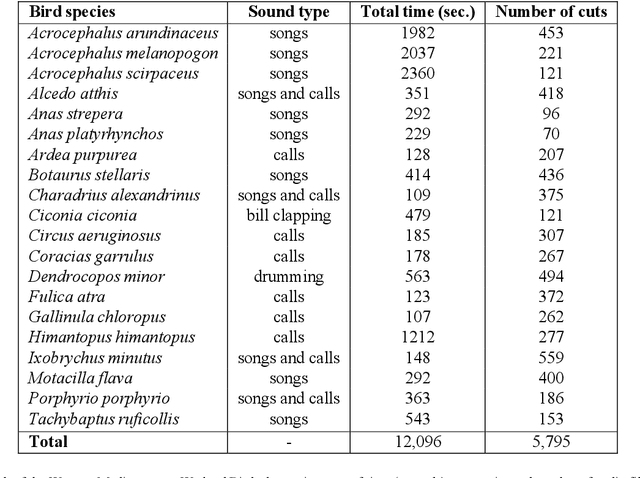

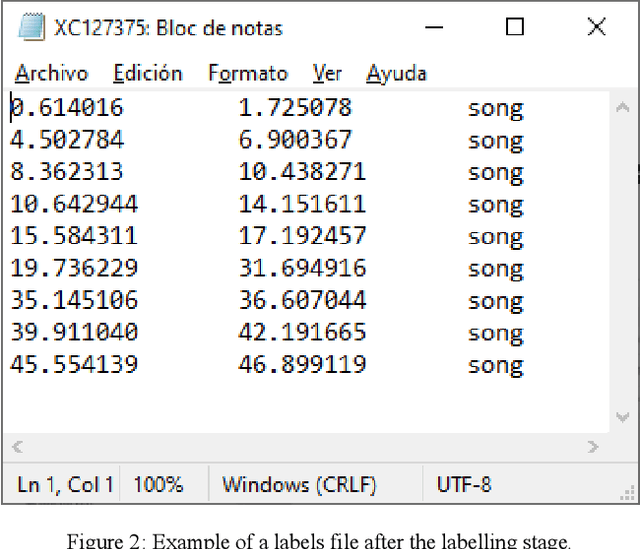

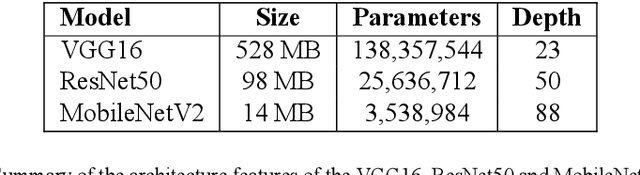

Abstract: The deployment of an expert system running over a wireless acoustic sensor network made up of bioacoustic monitoring devices that recognise bird species from their sounds would enable the automation of many tasks of ecological value, including the analysis of bird population composition or the detection of endangered species in areas of environmental interest. Endowing these devices with accurate audio classification capabilities is possible thanks to the latest advances in artificial intelligence, among which deep learning techniques excel. However, a key issue in making bioacoustic devices affordable is the use of small-footprint deep neural networks that can be embedded in resource- and battery-constrained hardware platforms. For this reason, this work presents a critical comparative analysis between two heavy, large-footprint deep neural networks (VGG16 and ResNet50) and a lightweight alternative, MobileNetV2. Our experimental results reveal that MobileNetV2 achieves an average F1-score less than 5% lower than ResNet50 (0.789 vs. 0.834), performing better than VGG16 with a footprint size nearly 40 times smaller. Moreover, to compare the models, we have created and made public the Western Mediterranean Wetland Birds dataset, consisting of 201.6 minutes and 5,795 audio excerpts of 20 endemic bird species of the Aiguamolls de l'Empordà Natural Park.
