Anzhe Chen

Seeing to Act, Prompting to Specify: A Bayesian Factorization of Vision Language Action Policy

Dec 12, 2025

Abstract: The pursuit of out-of-distribution generalization in Vision-Language-Action (VLA) models is often hindered by catastrophic forgetting of the Vision-Language Model (VLM) backbone during fine-tuning. While co-training with external reasoning data helps, it requires experienced tuning and incurs data-related overhead. Beyond such external dependencies, we identify an intrinsic cause within VLA datasets: modality imbalance, where language diversity is much lower than visual and action diversity. This imbalance biases the model toward visual shortcuts and language forgetting. To address this, we introduce BayesVLA, a Bayesian factorization that decomposes the policy into a visual-action prior, supporting seeing-to-act, and a language-conditioned likelihood, enabling prompt-to-specify. This inherently preserves generalization and promotes instruction following. We further incorporate pre- and post-contact phases to better leverage pre-trained foundation models. Information-theoretic analysis formally validates the method's effectiveness in mitigating shortcut learning. Extensive experiments show superior generalization to unseen instructions, objects, and environments compared to existing methods. Project page is available at: https://xukechun.github.io/papers/BayesVLA.
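As an illustrative reading of the factorization described in this abstract, Bayes' rule gives the form below. This is only a sketch: the symbols $a$ (action), $v$ (visual observation), and $\ell$ (language instruction) are assumed notation, and the paper's own formulation may differ.

```latex
\pi(a \mid v, \ell)
  \;=\; \frac{p(a \mid v)\, p(\ell \mid v, a)}{p(\ell \mid v)}
  \;\propto\;
  \underbrace{p(a \mid v)}_{\text{visual-action prior}}
  \;\cdot\;
  \underbrace{p(\ell \mid v, a)}_{\text{language-conditioned likelihood}}
```

Under this reading, the prior term depends only on vision and action, so it can be learned without language input, while the likelihood term is the only place where the instruction enters.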

Toward Embodiment Equivariant Vision-Language-Action Policy

Sep 18, 2025

Abstract: Vision-language-action policies learn manipulation skills across tasks, environments and embodiments through large-scale pre-training. However, their ability to generalize to novel robot configurations remains limited. Most approaches emphasize model size, dataset scale and diversity while paying less attention to the design of action spaces. This leads to the configuration generalization problem, which requires costly adaptation. We address this challenge by formulating cross-embodiment pre-training as designing policies equivariant to embodiment configuration transformations. Building on this principle, we propose a framework that (i) establishes an embodiment equivariance theory for action space and policy design, (ii) introduces an action decoder that enforces configuration equivariance, and (iii) incorporates a geometry-aware network architecture to enhance embodiment-agnostic spatial reasoning. Extensive experiments in both simulation and real-world settings demonstrate that our approach improves pre-training effectiveness and enables efficient fine-tuning on novel robot embodiments. Our code is available at https://github.com/hhcaz/e2vla

CNS: Correspondence Encoded Neural Image Servo Policy

Sep 16, 2023

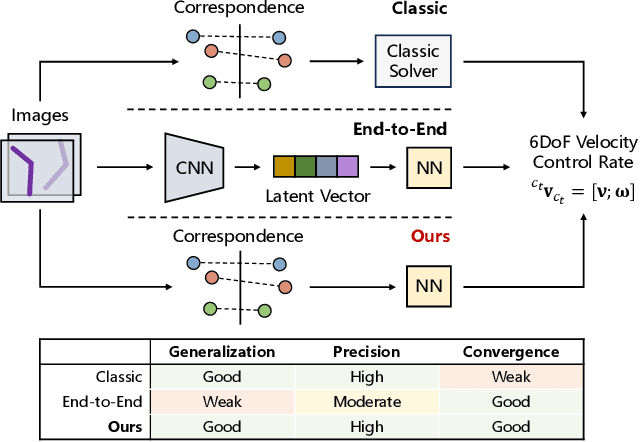

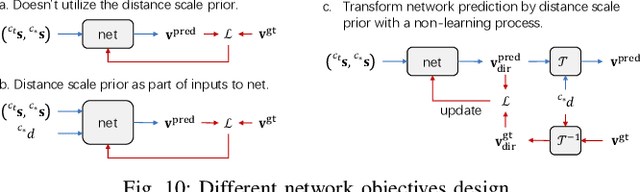

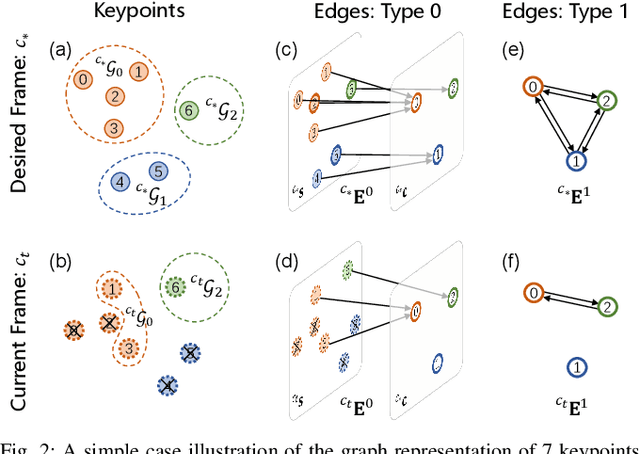

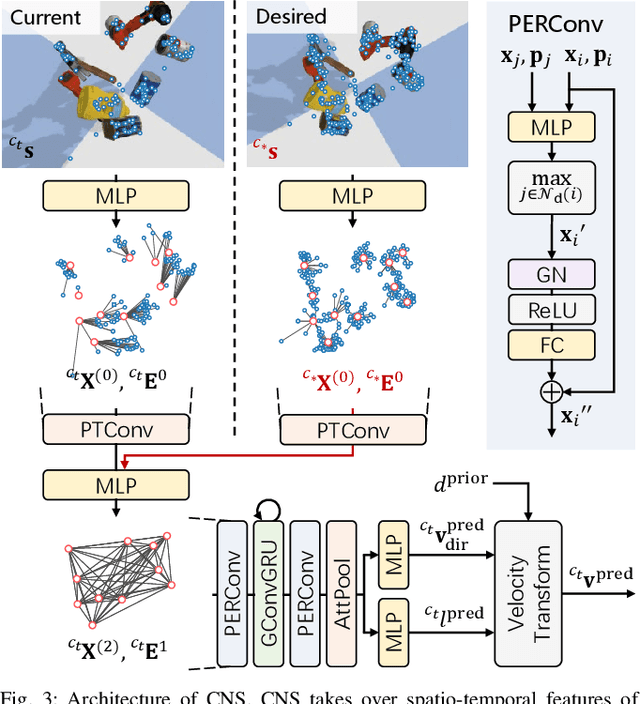

Abstract: Image servo is an indispensable technique in robotic applications that helps to achieve high-precision positioning. The intermediate representation of an image servo policy is important for sensor input abstraction and policy output guidance. Classical approaches achieve high precision but require clean keypoint correspondence, and suffer from a limited convergence basin or weak robustness to feature errors. Recent learning-based methods achieve moderate precision and a large convergence basin on specific scenes but face issues when generalizing to novel environments. In this paper, we encode keypoints and correspondence into a graph and use a graph neural network as the controller architecture. This design combines both advantages: a generalizable intermediate representation from keypoint correspondence and strong modeling ability from the neural network. Other techniques, including realistic data generation, feature clustering and distance decoupling, are proposed to further improve efficiency, precision and generalization. Experiments in simulation and the real world verify the effectiveness of our method in speed (up to 40 fps along with the observer), precision (<0.3° and sub-millimeter accuracy) and generalization (sim-to-real without fine-tuning). Project homepage (full paper with supplementary text, video and code): https://hhcaz.github.io/CNS-home

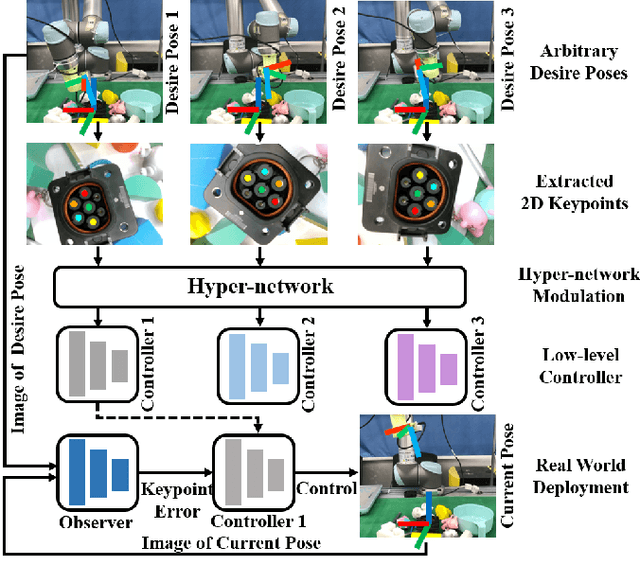

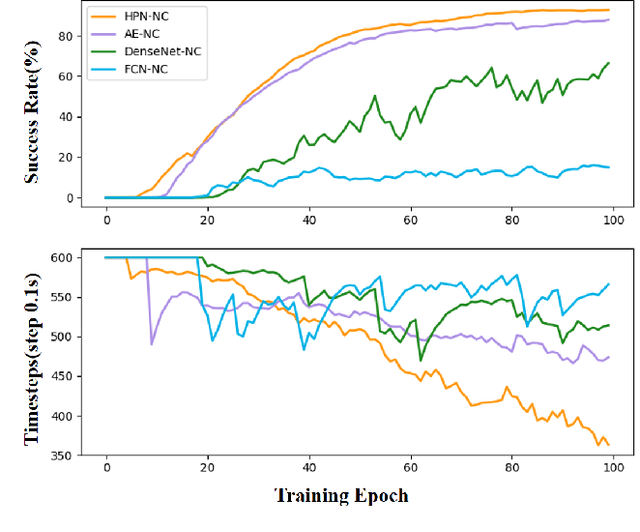

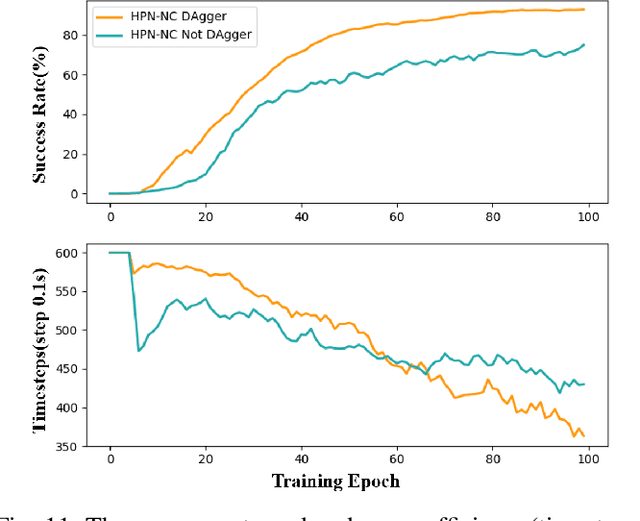

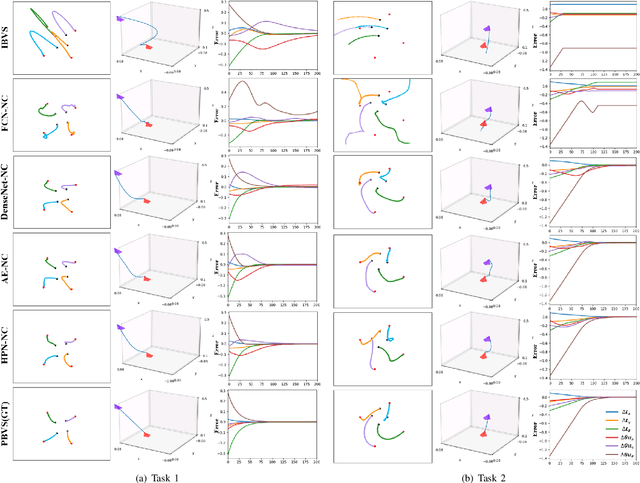

A Hyper-network Based End-to-end Visual Servoing with Arbitrary Desired Poses

Apr 18, 2023

Abstract: Recently, several works have achieved end-to-end visual servoing (VS) for robotic manipulation by replacing the traditional controller with differentiable neural networks, but they lose the ability to servo to arbitrary desired poses. This letter proposes a differentiable architecture for arbitrary pose servoing: a hyper-network based neural controller (HPN-NC). To achieve this, HPN-NC consists of a hyper net and a low-level controller, where the hyper net learns to generate the parameters of the low-level controller and the controller uses the 2D keypoint error for control, like traditional image-based visual servoing (IBVS). HPN-NC can complete 6-degree-of-freedom visual servoing with a large initial offset. Taking advantage of the fully differentiable nature of HPN-NC, we provide a three-stage training procedure to servo real-world objects. With self-supervised end-to-end training, the performance of the integrated model can be further improved in unseen scenes and the amount of manual annotation can be significantly reduced.
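For reference, the classical IBVS law that this abstract compares against is commonly written as in the first line below, where $s$ and $s^{*}$ are current and desired image features, $\widehat{L}_e^{+}$ is the pseudo-inverse of an estimated interaction matrix, and $\lambda > 0$ is a gain. The second line is only an assumed schematic of the hyper-network structure: the abstract does not state what the hyper net takes as input, so $c$ is a placeholder, and $h_{\phi}$, $f_{\theta}$ are hypothetical names.

```latex
\begin{aligned}
e &= s - s^{*}, & v_c &= -\lambda\, \widehat{L}_e^{+}\, e
  && \text{(classical IBVS)} \\
\theta &= h_{\phi}(c), & v_c &= f_{\theta}(e)
  && \text{(hyper net generates controller parameters; schematic)}
\end{aligned}
```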

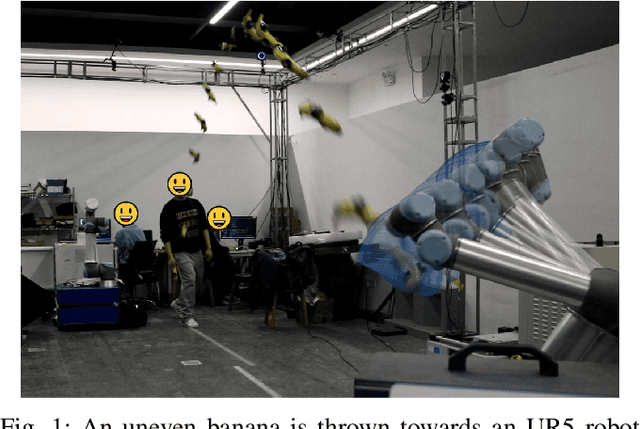

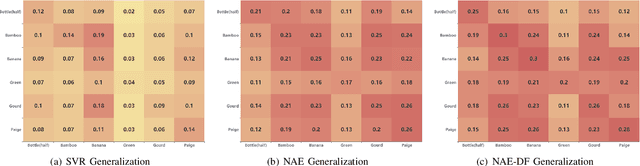

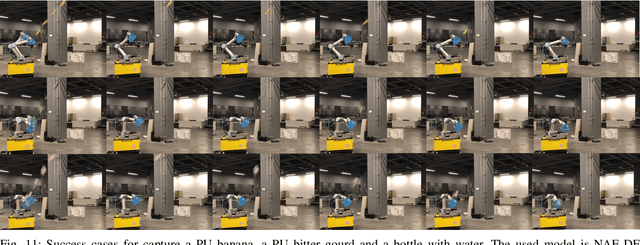

Neural Motion Prediction for In-flight Uneven Object Catching

Mar 15, 2021

Abstract: In-flight object capture is extremely challenging. The robot is required to complete trajectory prediction, interception position calculation and motion planning in sequence within tens of milliseconds. As in-flight uneven objects are affected by various kinds of forces, motion prediction is difficult due to time-varying acceleration. In order to compensate for the system's non-linearity, we introduce the Neural Acceleration Estimator (NAE), which estimates the varying acceleration by observing a small fragment of the previous deflected trajectory. Moreover, end-to-end training with a Differentiable Filter (NAE-DF) provides supervision for measurement uncertainty and further improves the prediction accuracy. Experimental results show that motion prediction with NAE and NAE-DF is superior to other methods and generalizes well to unseen objects. We test our methods on a robot performing velocity control in the real world and achieve success rates of 83.3% and 86.7% on a polyurethane banana and a gourd, respectively. We also release an in-flight object dataset containing 1,500 trajectories for uneven objects.
