Anurag Sarkar

Generative Modeling of Individual Behavior at Scale

Feb 20, 2025

Abstract:There has been a growing interest in using AI to model human behavior, particularly in domains where humans interact with this technology. While most existing work models human behavior at an aggregate level, our goal is to model behavior at the individual level. Recent approaches to behavioral stylometry -- or the task of identifying a person from their actions alone -- have shown promise in domains like chess, but these approaches are either not scalable (e.g., fine-tune a separate model for each person) or not generative, in that they cannot generate actions. We address these limitations by framing behavioral stylometry as a multi-task learning problem -- where each task represents a distinct person -- and use parameter-efficient fine-tuning (PEFT) methods to learn an explicit style vector for each person. Style vectors are generative: they selectively activate shared "skill" parameters to generate actions in the style of each person. They also induce a latent space that we can interpret and manipulate algorithmically. In particular, we develop a general technique for style steering that allows us to steer a player's style vector towards a desired property. We apply our approach to two very different games, at unprecedented scales: chess (47,864 players) and Rocket League (2,000 players). We also show generality beyond gaming by applying our method to image generation, where we learn style vectors for 10,177 celebrities and use these vectors to steer their images.

Procedural Content Generation via Knowledge Transformation (PCG-KT)

May 01, 2023

Abstract:We introduce the concept of Procedural Content Generation via Knowledge Transformation (PCG-KT), a new lens and framework for characterizing PCG methods and approaches in which content generation is enabled by the process of knowledge transformation -- transforming knowledge derived from one domain in order to apply it in another. Our work is motivated by a substantial number of recent PCG works that focus on generating novel content via repurposing derived knowledge. Such works have involved, for example, performing transfer learning on models trained on one game's content to adapt to another game's content, as well as recombining different generative distributions to blend the content of two or more games. Such approaches arose in part due to limitations in PCG via Machine Learning (PCGML) such as producing generative models for games lacking training data and generating content for entirely new games. In this paper, we categorize such approaches under this new lens of PCG-KT by offering a definition and framework for describing such methods and surveying existing works using this framework. Finally, we conclude by highlighting open problems and directions for future research in this area.

* 15 pages, 14 figures

tile2tile: Learning Game Filters for Platformer Style Transfer

Aug 15, 2022

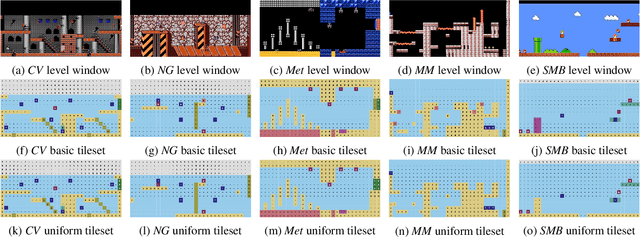

Abstract:We present tile2tile, an approach for style transfer between levels of tile-based platformer games. Our method involves training models that translate levels from a lower-resolution sketch representation based on tile affordances to the original tile representation for a given game. This enables these models, which we refer to as filters, to translate level sketches into the style of a specific game. Moreover, by converting a level of one game into sketch form and then translating the resulting sketch into the tiles of another game, we obtain a method of style transfer between two games. We use Markov random fields and autoencoders for learning the game filters and apply them to demonstrate style transfer between levels of Super Mario Bros, Kid Icarus, Mega Man and Metroid.
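
The sketch-then-translate pipeline described above can be illustrated with a minimal Python sketch. This is not the authors' implementation: the tile characters, the affordance classes, and the lookup-table "filter" are all hypothetical stand-ins, whereas the paper learns its filters with Markov random fields and autoencoders.

```python
# Hypothetical tile vocabularies; real games use richer tile sets.
GAME_A_TO_SKETCH = {"X": "#", "B": "#", "E": "!", "-": "."}  # solid, solid, hazard, empty
SKETCH_TO_GAME_B = {"#": "T", "!": "H", ".": " "}            # placeholder for a learned filter


def to_sketch(level, tile_to_affordance):
    """Collapse each tile to its affordance class (the lower-resolution sketch)."""
    return ["".join(tile_to_affordance[t] for t in row) for row in level]


def apply_filter(sketch, affordance_to_tile):
    """Translate a sketch into one game's tiles.

    A learned filter would choose tiles from surrounding context;
    this one-to-one lookup is only a placeholder.
    """
    return ["".join(affordance_to_tile[a] for a in row) for row in sketch]


def style_transfer(level, tile_to_affordance, affordance_to_tile):
    """Game A level -> sketch -> game B tiles."""
    return apply_filter(to_sketch(level, tile_to_affordance), affordance_to_tile)


level_a = ["----", "-E--", "XXBX"]
for row in style_transfer(level_a, GAME_A_TO_SKETCH, SKETCH_TO_GAME_B):
    print(repr(row))
```

Note that the sketch step is lossy by design (two distinct solid tiles collapse to one class), which is why the reverse direction needs a learned model rather than a fixed table.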

Latent Combinational Game Design

Jun 28, 2022

Abstract:We present an approach for generating playable games that blend a given set of games in a desired combination using deep generative latent variable models. We refer to this approach as latent combinational game design -- latent since we use learned latent representations to perform blending, combinational since game blending is a combinational creativity process and game design since the approach generates novel, playable games. We use Gaussian Mixture Variational Autoencoders (GMVAEs), which use a mixture of Gaussians to model the VAE latent space. Through supervised training, each component learns to encode levels from one game and lets us define new, blended games as linear combinations of these learned components. This enables generating new games that blend the input games as well as control the relative proportions of each game in the blend. We also extend prior work using conditional VAEs to perform blending and compare against the GMVAE. Our results show that both models can generate playable blended games that blend the input games in the desired proportions.
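
One way to read "linear combinations of these learned components" is sketched below in Python. It is a simplified illustration under stated assumptions: the component means are made-up 2-D values rather than learned ones, only the means are blended, and a shared isotropic standard deviation is assumed. A decoder (not shown) would turn the sampled latent vector into a level.

```python
import random

# Hypothetical 2-D component means, one per game; a trained GMVAE would learn these.
COMPONENT_MEANS = {"game_a": [2.0, 0.0], "game_b": [0.0, 2.0]}


def blend_mean(weights, means):
    """Weighted combination of component means; weights are normalized to sum to 1."""
    total = sum(weights.values())
    dim = len(next(iter(means.values())))
    return [sum(weights[g] / total * means[g][i] for g in weights) for i in range(dim)]


def sample_latent(mean, std=1.0, rng=random):
    """Draw a latent vector around the blended mean (assumes a shared isotropic std)."""
    return [rng.gauss(m, std) for m in mean]


# 75% game_a, 25% game_b
mean = blend_mean({"game_a": 0.75, "game_b": 0.25}, COMPONENT_MEANS)
z = sample_latent(mean, std=0.1, rng=random.Random(0))
print(mean, z)
```

Changing the weights moves the blended mean along the line between the two components, which corresponds to controlling the relative proportion of each game in the blend.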

Procedural Content Generation using Behavior Trees (PCGBT)

Jun 24, 2021

Abstract:Behavior trees (BTs) are a popular method of modeling the behavior of NPCs and enemy AI and have found widespread use in a large number of commercial games. In this paper, rather than use BTs to model game-playing agents, we demonstrate their use for modeling game design agents, defining behaviors as executing content generation tasks rather than in-game actions. Similar to how traditional BTs enable modeling behaviors in a modular and dynamic manner, BTs for PCG enable simple subtrees for generating parts of levels to be combined modularly to form more complex trees for generating whole levels as well as generators that can dynamically vary the generated content. We demonstrate this approach by using BTs to model generators for Super Mario Bros., Mega Man and Metroid levels as well as dungeon layouts and discuss several ways in which this PCGBT paradigm could be applied and extended in the future.
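
The idea of composing small generator subtrees into a whole-level generator can be sketched as follows. This is a minimal illustration, not the paper's system: the node classes follow the usual sequence/selector semantics of behavior trees, while the segment names and the `chance` condition are hypothetical.

```python
import random


class Action:
    """Leaf node: runs a content generation task and reports success or failure."""
    def __init__(self, fn):
        self.fn = fn

    def run(self, level, rng):
        return self.fn(level, rng)


class Sequence:
    """Runs children in order; fails as soon as one child fails."""
    def __init__(self, *children):
        self.children = children

    def run(self, level, rng):
        return all(child.run(level, rng) for child in self.children)


class Selector:
    """Tries children in order; succeeds on the first child that succeeds."""
    def __init__(self, *children):
        self.children = children

    def run(self, level, rng):
        return any(child.run(level, rng) for child in self.children)


def add(segment):
    """Task that appends a named level segment (hypothetical segment names)."""
    def task(level, rng):
        level.append(segment)
        return True
    return Action(task)


def chance(p):
    """Condition that succeeds with probability p, giving dynamic variation."""
    return Action(lambda level, rng: rng.random() < p)


# Subtree: place a gap half the time, otherwise fall back to an enemy.
gap_or_enemy = Selector(Sequence(chance(0.5), add("gap")), add("enemy"))
# Whole-level generator assembled from subtrees.
generator = Sequence(add("start"), gap_or_enemy, add("stairs"), add("goal"))

level = []
generator.run(level, random.Random(1))
print(level)
```

Because `gap_or_enemy` is an ordinary node, it can be reused inside larger trees, which is the modularity the abstract refers to.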

Dungeon and Platformer Level Blending and Generation using Conditional VAEs

Jun 17, 2021

Abstract:Variational autoencoders (VAEs) have been used in prior works for generating and blending levels from different games. To add controllability to these models, conditional VAEs (CVAEs) were recently shown capable of generating output that can be modified using labels specifying desired content, albeit working with segments of levels and platformers exclusively. We expand these works by using CVAEs for generating whole platformer and dungeon levels, and blending levels across these genres. We show that CVAEs can reliably control door placement in dungeons and progression direction in platformer levels. Thus, by using appropriate labels, our approach can generate whole dungeons and platformer levels of interconnected rooms and segments respectively as well as levels that blend dungeons and platformers. We demonstrate our approach using The Legend of Zelda, Metroid, Mega Man and Lode Runner.

Generating and Blending Game Levels via Quality-Diversity in the Latent Space of a Variational Autoencoder

Feb 24, 2021

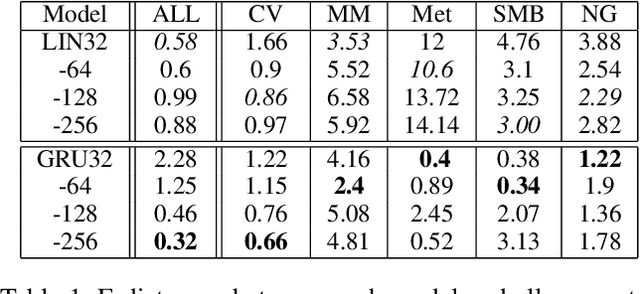

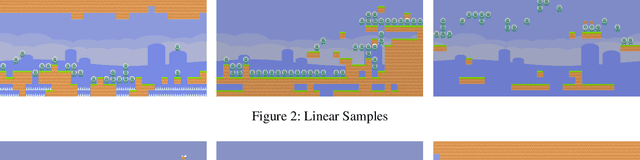

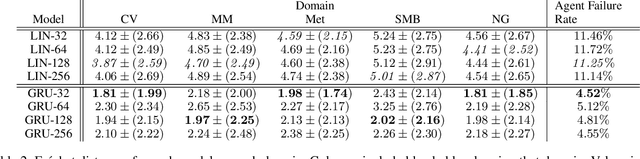

Abstract:Several recent works have demonstrated the use of variational autoencoders (VAEs) both for generating levels in the style of existing games and for blending levels across different games. Quality-diversity (QD) algorithms have also become popular for generating varied game content by using evolution to explore a search space while focusing on both variety and quality. To reap the benefits of both approaches, we present a level generation and game blending approach that combines VAEs and QD algorithms. Specifically, we train VAEs on game levels and then run the MAP-Elites QD algorithm using the learned latent space of the VAE as the search space. The latent space captures the properties of the games whose levels we want to generate and blend, while MAP-Elites searches this latent space to find a diverse set of levels optimizing a given objective such as playability. We test our method using models for 5 different platformer games as well as a blended domain spanning 3 of these games. Our results show that using MAP-Elites in conjunction with VAEs enables the generation of a diverse set of playable levels not just for each individual game but also for the blended domain, while illuminating game-specific regions of the blended latent space.
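
A minimal sketch of running MAP-Elites over a latent space is given below. Everything game-specific here is an assumption made for illustration: `decode` is a toy stand-in for a trained VAE decoder, `fitness` is a crude playability proxy, and `behavior` is a hypothetical descriptor used to index the archive. Only the search loop itself follows the standard MAP-Elites scheme.

```python
import random


def decode(z):
    """Hypothetical stand-in for a trained VAE decoder: maps a latent
    vector to a row of tiles ('#' solid ground, '.' gap)."""
    return "".join("#" if v > 0 else "." for v in z)


def fitness(level):
    """Toy playability proxy: penalize the longest run of gaps."""
    return -max(len(run) for run in level.split("#"))


def behavior(level):
    """Behavior descriptor that picks the archive cell: number of solid tiles."""
    return level.count("#")


def map_elites(dim=8, iterations=2000, sigma=0.5, seed=0):
    rng = random.Random(seed)
    archive = {}  # behavior cell -> (fitness, latent vector)
    for _ in range(iterations):
        if archive and rng.random() < 0.9:
            # Mutate a randomly chosen elite with Gaussian noise.
            _, parent = rng.choice(list(archive.values()))
            z = [v + rng.gauss(0, sigma) for v in parent]
        else:
            # Otherwise sample a fresh latent vector from the prior.
            z = [rng.gauss(0, 1) for _ in range(dim)]
        level = decode(z)
        cell, fit = behavior(level), fitness(level)
        # Keep the candidate only if its cell is empty or it beats the current elite.
        if cell not in archive or fit > archive[cell][0]:
            archive[cell] = (fit, z)
    return archive


archive = map_elites()
for cell in sorted(archive):
    fit, z = archive[cell]
    print(cell, fit, decode(z))
```

The archive ends up holding at most one elite per behavior cell, so the result is a set of levels that differ in the chosen descriptor while each being the best found for its cell.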

Conditional Level Generation and Game Blending

Oct 13, 2020

Abstract:Prior research has shown variational autoencoders (VAEs) to be useful for generating and blending game levels by learning latent representations of existing level data. We build on such models by exploring the level design affordances and applications enabled by conditional VAEs (CVAEs). CVAEs augment VAEs by allowing them to be trained using labeled data, thus enabling outputs to be generated conditioned on some input. We studied how increased control in the level generation process and the ability to produce desired outputs via training on labeled game level data could build on prior PCGML methods. Through our results of training CVAEs on levels from Super Mario Bros., Kid Icarus and Mega Man, we show that such models can assist in level design by generating levels with desired level elements and patterns as well as producing blended levels with desired combinations of games.

Exploring Level Blending across Platformers via Paths and Affordances

Aug 22, 2020

Abstract:Techniques for procedural content generation via machine learning (PCGML) have been shown to be useful for generating novel game content. While used primarily for producing new content in the style of the game domain used for training, recent works have increasingly started to explore methods for discovering and generating content in novel domains via techniques such as level blending and domain transfer. In this paper, we build on these works and introduce a new PCGML approach for producing novel game content spanning multiple domains. We use a new affordance and path vocabulary to encode data from six different platformer games and train variational autoencoders on this data, enabling us to capture the latent level space spanning all the domains and generate new content with varying proportions of the different domains.

Game Level Clustering and Generation using Gaussian Mixture VAEs

Aug 22, 2020

Abstract:Variational autoencoders (VAEs) have been shown to be able to generate game levels but require manual exploration of the learned latent space to generate outputs with desired attributes. While conditional VAEs address this by allowing generation to be conditioned on labels, such labels have to be provided during training and thus require prior knowledge which may not always be available. In this paper, we apply Gaussian Mixture VAEs (GMVAEs), a variant of the VAE which imposes a mixture of Gaussians (GM) on the latent space, unlike regular VAEs which impose a unimodal Gaussian. This allows GMVAEs to cluster levels in an unsupervised manner using the components of the GM and then generate new levels using the learned components. We demonstrate our approach with levels from Super Mario Bros., Kid Icarus and Mega Man. Our results show that the learned components discover and cluster level structures and patterns and can be used to generate levels with desired characteristics.
