Anthony E. Samir

Foundation Model of Electronic Medical Records for Adaptive Risk Estimation

Feb 10, 2025

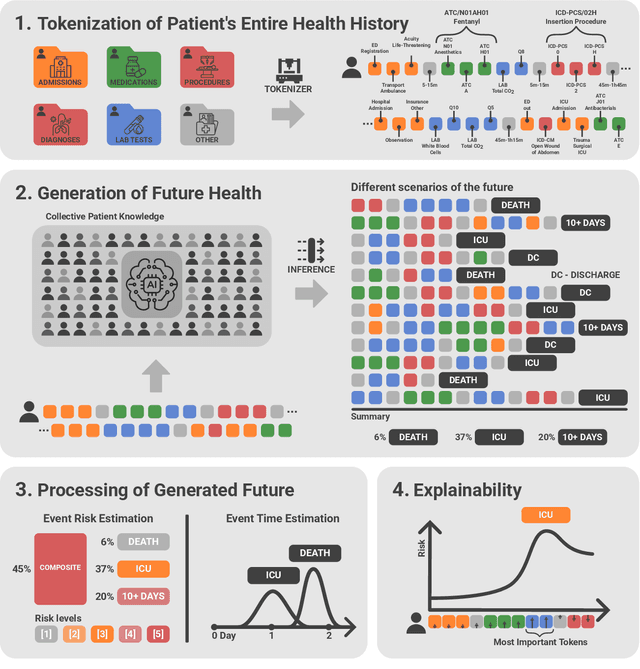

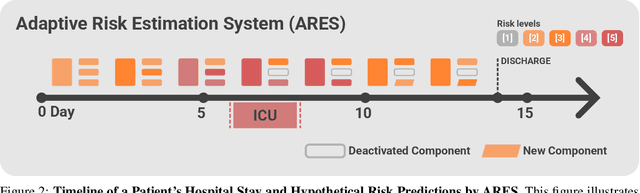

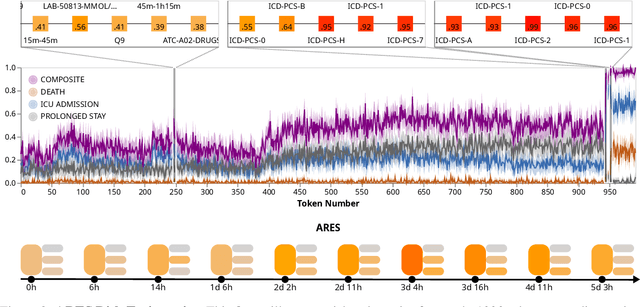

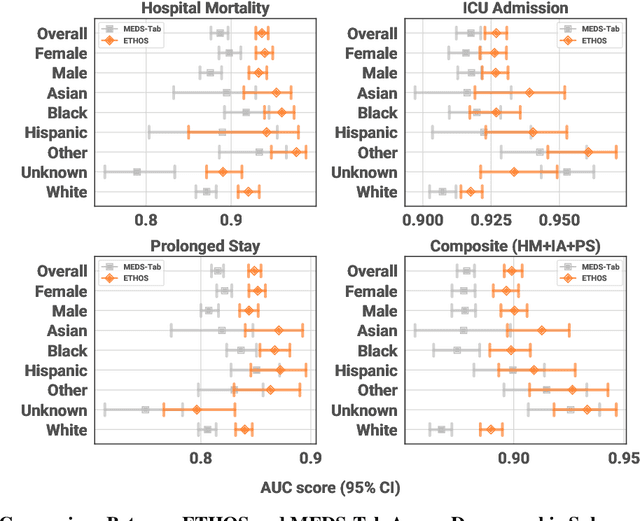

Abstract: We developed the Enhanced Transformer for Health Outcome Simulation (ETHOS), an AI model that tokenizes patient health timelines (PHTs) from EHRs. ETHOS predicts future PHTs using transformer-based architectures. The Adaptive Risk Estimation System (ARES) employs ETHOS to compute dynamic and personalized risk probabilities for clinician-defined critical events. ARES incorporates a personalized explainability module that identifies key clinical factors influencing risk estimates for individual patients. ARES was evaluated on the MIMIC-IV v2.2 dataset in emergency department (ED) settings, benchmarking its performance against traditional early warning systems and machine learning models. We processed 299,721 unique patients from MIMIC-IV into 285,622 PHTs, with 60% including hospital admissions. The dataset contained over 357 million tokens. ETHOS outperformed benchmark models in predicting hospital admissions, ICU admissions, and prolonged hospital stays, achieving superior AUC scores. ETHOS-based risk estimates demonstrated robustness across demographic subgroups with strong model reliability, confirmed via calibration curves. The personalized explainability module provides insights into patient-specific factors contributing to risk. ARES, powered by ETHOS, advances predictive healthcare AI by providing dynamic, real-time, and personalized risk estimation with patient-specific explainability to enhance clinician trust. Its adaptability and superior accuracy position it as a transformative tool for clinical decision-making, potentially improving patient outcomes and resource allocation in emergency and inpatient settings. We release the full code at github.com/ipolharvard/ethos-ares to facilitate future research.
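One way to picture how a generative timeline model can yield a risk probability is Monte Carlo sampling: draw many simulated future timelines and count how often the event of interest appears. The sketch below illustrates that idea only. It is an assumption about the general approach, not the released ETHOS or ARES code; `estimate_risk`, `toy_sampler`, and the token names are hypothetical, and the toy sampler stands in for a trained model.

```python
import random


def estimate_risk(sample_trajectory, event_token, n_samples=1000, seed=0):
    """Return the fraction of sampled future timelines containing event_token.

    sample_trajectory is any callable that takes a random.Random instance
    and returns a list of tokens (a hypothetical stand-in for a model).
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_samples):
        trajectory = sample_trajectory(rng)
        if event_token in trajectory:
            hits += 1
    return hits / n_samples


def toy_sampler(rng):
    # Hypothetical generator: every timeline has vitals and a lab result,
    # and about 30% of timelines include an ICU admission before discharge.
    tokens = ["VITALS", "LAB"]
    if rng.random() < 0.3:
        tokens.append("ICU_ADMISSION")
    tokens.append("DISCHARGE")
    return tokens


risk = estimate_risk(toy_sampler, "ICU_ADMISSION", n_samples=5000, seed=42)
print(f"estimated ICU admission risk: {risk:.3f}")
```

Because the estimate is a sample proportion, its precision improves with the number of simulated timelines, at the cost of more model calls per patient.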

Zero Shot Health Trajectory Prediction Using Transformer

Jul 30, 2024

Abstract: Integrating modern machine learning and clinical decision-making has great promise for mitigating healthcare's increasing cost and complexity. We introduce the Enhanced Transformer for Health Outcome Simulation (ETHOS), a novel application of the transformer deep-learning architecture for analyzing high-dimensional, heterogeneous, and episodic health data. ETHOS is trained using Patient Health Timelines (PHTs), which are detailed, tokenized records of health events, to predict future health trajectories, leveraging a zero-shot learning approach. ETHOS represents a significant advancement in foundation model development for healthcare analytics, eliminating the need for labeled data and model fine-tuning. Its ability to simulate various treatment pathways and consider patient-specific factors positions ETHOS as a tool for care optimization and addressing biases in healthcare delivery. Future developments will expand ETHOS' capabilities to incorporate a wider range of data types and data sources. Our work demonstrates a pathway toward accelerated AI development and deployment in healthcare.

Efficient Aberration Correction via Optimal Bulk Speed of Sound Compensation

Mar 03, 2023

Abstract: Diagnostic ultrasound is a versatile and practical tool in the abdomen, and is particularly vital for the detection and mitigation of early-stage non-alcoholic fatty liver disease (NAFLD). However, its performance in those with obesity, who are at increased risk for NAFLD, is degraded due to distortions of the ultrasound as it traverses thicker, acoustically heterogeneous body walls (aberration). Here, we assess the capability of a bulk speed of sound correction in receive beamforming to correct aberration and improve the resulting images. We find that a bulk correction may approximate the aberration profile for layers of relevant thicknesses (1 to 3 cm) and speeds of sound (1400 to 1500 m/s). Additionally, through in vitro experiments, we show significant improvement in resolution (average point target width reduced by 60%) with bulk speed of sound correction determined automatically from the beamformed images. Together, these results demonstrate the utility of simple, efficient bulk speed of sound correction to improve the quality of diagnostic liver images.
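To see why the assumed speed of sound matters in receive beamforming, recall that delay-and-sum focusing delays each element's signal by its path length to the focal point divided by the assumed speed. The sketch below computes those delays for two bulk speeds. It is illustrative only and not the authors' method: the array geometry (64 elements, 0.3 mm pitch), the 5 cm focal depth, and the function name `receive_delays` are assumptions, and 1540 m/s is the conventional soft-tissue default.

```python
import math


def receive_delays(element_x, focus_x, focus_z, speed_of_sound):
    """Return per-element receive delays in seconds for one focal point.

    element_x holds element positions in metres along the array (z = 0);
    speed_of_sound is the single bulk value assumed for the whole medium.
    """
    return [
        math.hypot(focus_x - x, focus_z) / speed_of_sound
        for x in element_x
    ]


# Hypothetical linear array: 64 elements, 0.3 mm pitch, centred on x = 0.
elements = [(i - 31.5) * 0.3e-3 for i in range(64)]

nominal = receive_delays(elements, 0.0, 0.05, 1540.0)    # conventional default
corrected = receive_delays(elements, 0.0, 0.05, 1480.0)  # slower bulk estimate

# A lower assumed speed lengthens every delay; the change is largest
# for the outermost elements, which have the longest paths.
max_shift = max(c - n for c, n in zip(corrected, nominal))
print(f"largest delay change: {max_shift * 1e9:.1f} ns")
```

An automatic correction of the kind the abstract describes would need a rule for choosing the bulk speed from the beamformed images; that selection step is not shown here.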

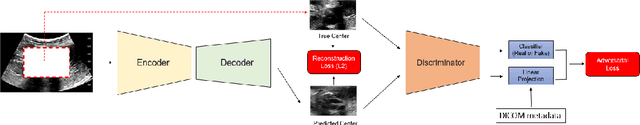

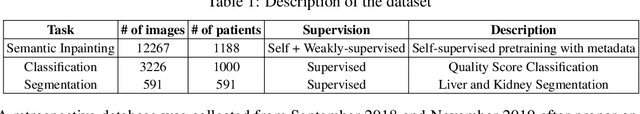

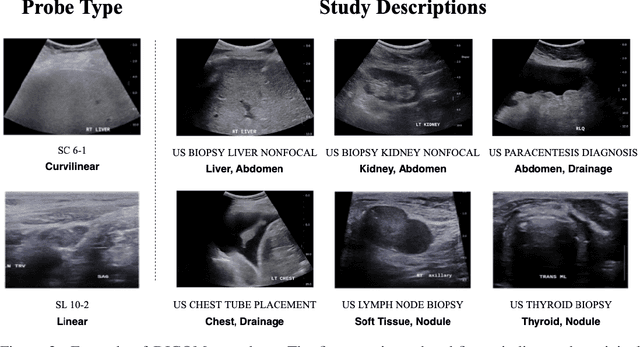

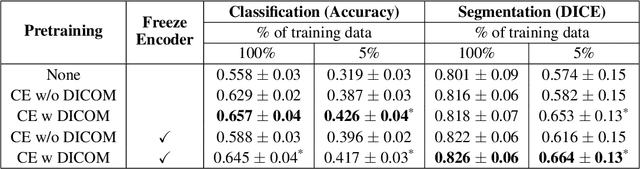

Weakly Supervised Context Encoder using DICOM metadata in Ultrasound Imaging

Mar 20, 2020

Abstract: Modern deep learning algorithms geared towards clinical adoption rely on a significant amount of high-fidelity labeled data. In low-resource settings, acquiring high-fidelity data is challenging and becomes the bottleneck for developing artificial intelligence applications. Ultrasound images, stored in Digital Imaging and Communication in Medicine (DICOM) format, carry additional metadata corresponding to ultrasound image parameters and medical exams. In this work, we leverage DICOM metadata from ultrasound images to help learn representations of the ultrasound image. We demonstrate that the proposed method outperforms non-metadata-based approaches across different downstream tasks.
