Anne Collin

The Reasonable Crowd: Towards evidence-based and interpretable models of driving behavior

Jul 28, 2021

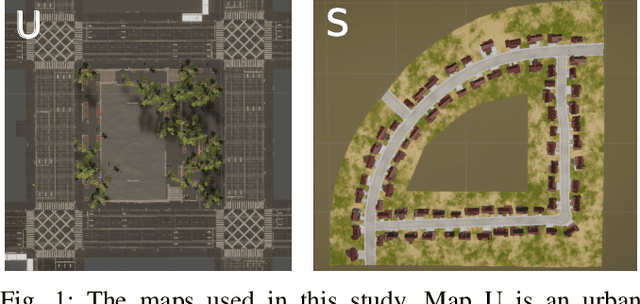

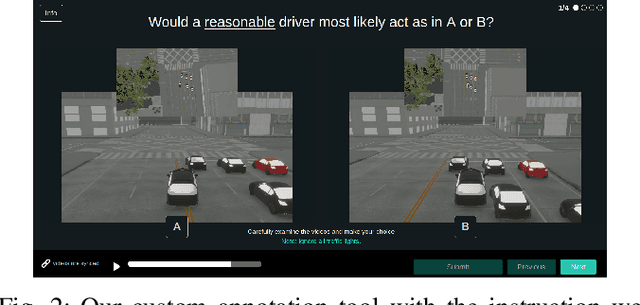

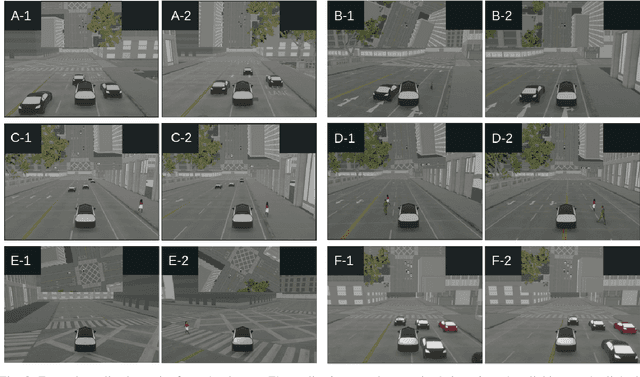

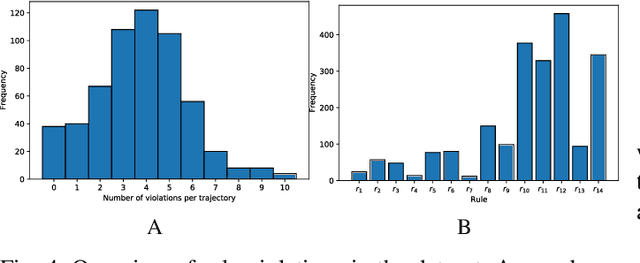

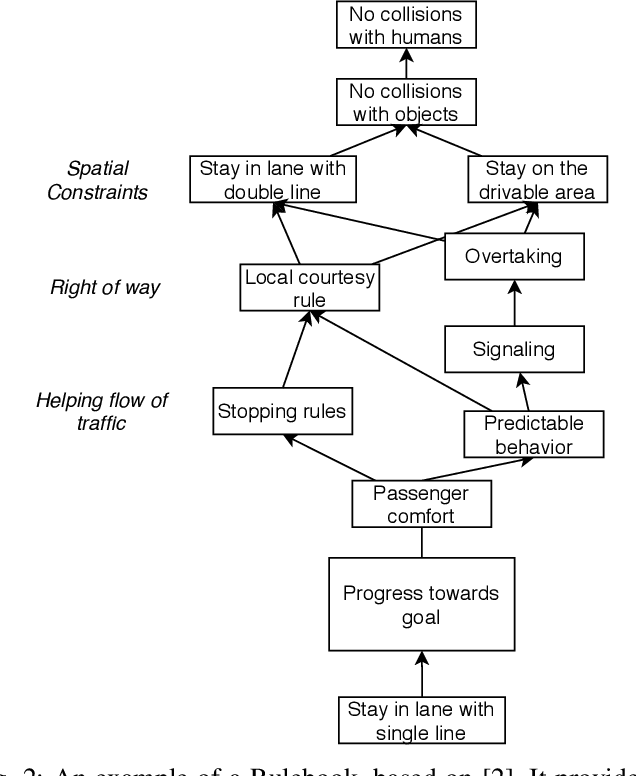

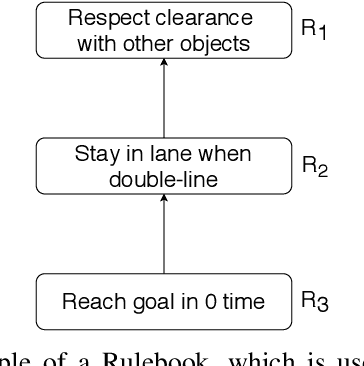

Abstract: Autonomous vehicles must balance a complex set of objectives. There is no consensus on how they should do so, nor on a model for specifying a desired driving behavior. We created a dataset to help address some of these questions in a limited operating domain. The data consists of 92 traffic scenarios, with multiple ways of traversing each scenario. Multiple annotators expressed their preference between pairs of scenario traversals. We used the data to compare an instance of a rulebook, carefully hand-crafted independently of the dataset, with several interpretable machine learning models such as Bayesian networks, decision trees, and logistic regression trained on the dataset. To compare driving behavior, these models use scores indicating by how much different scenario traversals violate each of 14 driving rules. The rules are interpretable and designed by subject-matter experts. First, we found that these rules were enough for these models to achieve a high classification accuracy on the dataset. Second, we found that the rulebook provides high interpretability without excessively sacrificing performance. Third, the data pointed to possible improvements in the rulebook and the rules, and to potential new rules. Fourth, we explored the interpretability vs. performance trade-off by also training non-interpretable models such as a random forest. Finally, we make the dataset publicly available to encourage a discussion from the wider community on behavior specification for AVs. Please find it at github.com/bassam-motional/Reasonable-Crowd.
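To make the pairwise setup in this abstract concrete, the following is a minimal sketch of how a logistic model could turn per-rule violation scores into a preference probability between two traversals. It is not the paper's trained model: the function name `preference_probability`, the three-rule example, and all weights and scores are hypothetical and chosen only for illustration.

```python
import math


def preference_probability(violations_a, violations_b, weights):
    """P(traversal A is preferred over traversal B) under a logistic model.

    Each argument holds one entry per rule. A larger violation score
    means the traversal breaks that rule more severely. The weights are
    assumed here; in practice they would be fit to annotator preferences.
    """
    if not (len(violations_a) == len(violations_b) == len(weights)):
        raise ValueError("all inputs need one entry per rule")
    # Positive when B violates the weighted rules more than A does.
    margin = sum(
        w * (vb - va)
        for w, va, vb in zip(weights, violations_a, violations_b)
    )
    return 1.0 / (1.0 + math.exp(-margin))


# Hypothetical three-rule example (the abstract describes 14 rules).
weights = [5.0, 1.0, 0.2]
traversal_a = [0.0, 0.3, 1.0]
traversal_b = [0.4, 0.0, 0.0]
p_a_over_b = preference_probability(traversal_a, traversal_b, weights)
```

In this sketch the first rule carries the largest weight, so traversal A is favored even though it violates the two lower-weight rules more than B does.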

Rule-based Evaluation and Optimal Control for Autonomous Driving

Jul 15, 2021

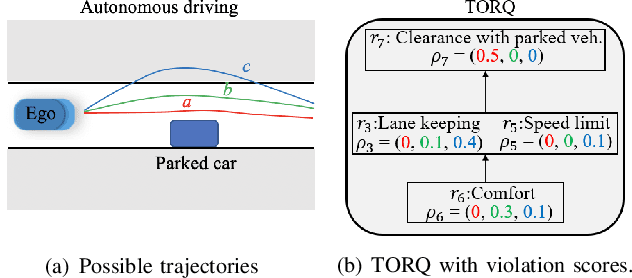

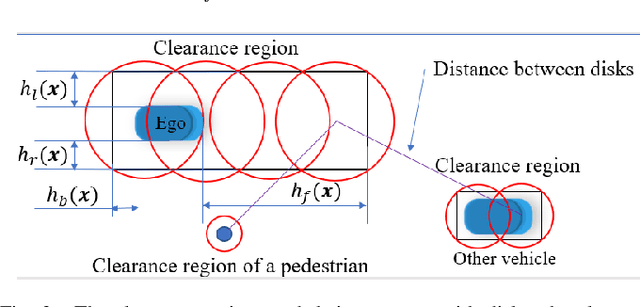

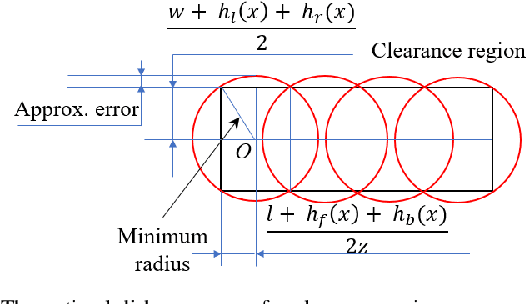

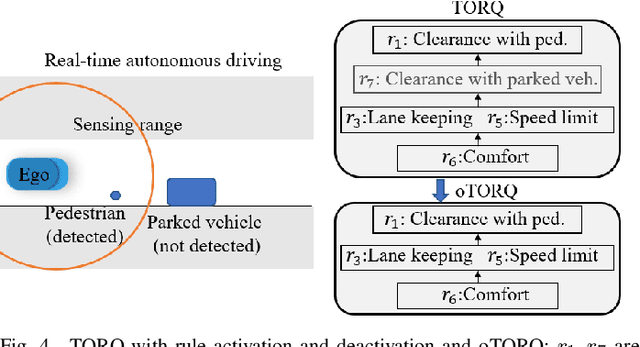

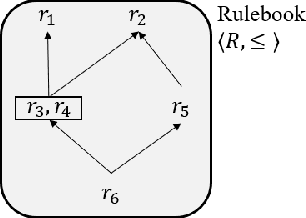

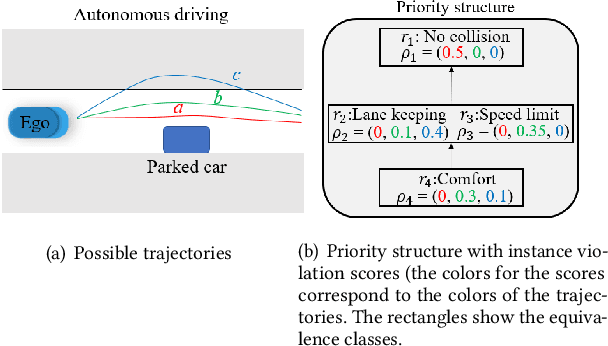

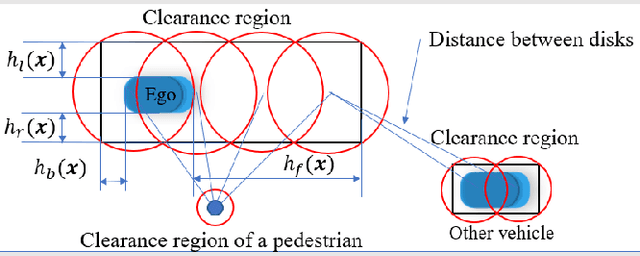

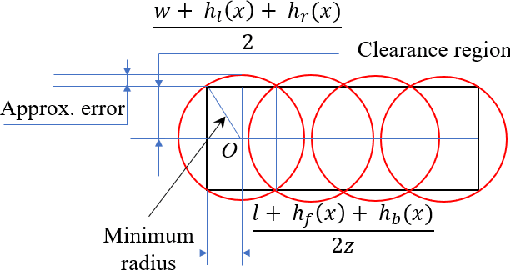

Abstract: We develop optimal control strategies for autonomous vehicles (AVs) that are required to meet complex specifications imposed as rules of the road (ROTR) and locally specific cultural expectations of reasonable driving behavior. We formulate these specifications as rules, and specify their priorities by constructing a priority structure, called Total ORder over eQuivalence classes (TORQ). We propose a recursive framework, in which the satisfaction of the rules in the priority structure is iteratively relaxed in reverse order of priority. Central to this framework is an optimal control problem, where convergence to desired states is achieved using Control Lyapunov Functions (CLFs) and clearance with other road users is enforced through Control Barrier Functions (CBFs). We present offline and online approaches to this problem. In the latter, the AV has limited sensing range that affects the activation of the rules, and the control is generated using a receding horizon (Model Predictive Control, MPC) approach. We also show how the offline method can be used for after-the-fact (offline) pass/fail evaluation of trajectories: a given trajectory is rejected if we can find a controller producing a trajectory that leads to less violation of the rule priority structure. We present case studies with multiple driving scenarios to demonstrate the effectiveness of the algorithms, and to compare the offline and online versions of our proposed framework.
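The pass/fail idea described in this abstract can be sketched as a lexicographic comparison over priority classes. The code below is an illustration under stated assumptions, not the paper's method: rule names are hypothetical, violations of rules within one equivalence class are aggregated by a plain sum (the paper may aggregate differently), and the "alternatives" are given directly rather than produced by an optimal controller.

```python
def class_violations(rule_scores, priority_classes):
    """Aggregate per-rule violation scores into one score per class.

    priority_classes is ordered from highest to lowest priority. Rules
    in the same class are treated as equally important and summed
    (an assumption made for this sketch).
    """
    return tuple(
        sum(rule_scores[rule] for rule in cls) for cls in priority_classes
    )


def violates_less(scores_a, scores_b, priority_classes):
    """True if A violates the priority structure strictly less than B."""
    # Python compares tuples lexicographically, so the highest-priority
    # class decides first and lower classes only break ties.
    return (class_violations(scores_a, priority_classes)
            < class_violations(scores_b, priority_classes))


def passes(candidate, alternatives, priority_classes):
    """Reject the candidate if any alternative violates less."""
    return not any(
        violates_less(alt, candidate, priority_classes)
        for alt in alternatives
    )


# Hypothetical rules, grouped into three priority classes.
classes = [["no_collision"], ["stay_in_lane", "speed_limit"], ["comfort"]]
candidate = {"no_collision": 0.0, "stay_in_lane": 0.5,
             "speed_limit": 0.0, "comfort": 0.25}
alternative = {"no_collision": 0.0, "stay_in_lane": 0.0,
               "speed_limit": 0.25, "comfort": 2.0}
candidate_passes = passes(candidate, [alternative], classes)
```

Here the alternative is much less comfortable, but it violates the second-priority class less, so the candidate is rejected under this ordering.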

Plane and Sample: Maximizing Information about Autonomous Vehicle Performance using Submodular Optimization

Jun 15, 2021

Abstract: As autonomous vehicles (AVs) take on growing Operational Design Domains (ODDs), they need to go through a systematic, transparent, and scalable evaluation process to demonstrate their benefits to society. Current scenario sampling techniques for AV performance evaluation usually focus on a specific functionality, such as lane changing, and do not accommodate a transfer of information about an AV system from one ODD to the next. In this paper, we reformulate the scenario sampling problem across ODDs and functionalities as a submodular optimization problem. To do so, we abstract AV performance as a Bayesian Hierarchical Model, which we use to infer information gained by revealing performance in new scenarios. We propose the information gain as a measure of scenario relevance and evaluation progress. Furthermore, we leverage the submodularity, or diminishing returns, property of the information gain not only to find a near-optimal scenario set, but also to propose a stopping criterion for an AV performance evaluation campaign. We find that we only need to explore about 7.5% of the scenario space to meet this criterion, a 23% improvement over Latin Hypercube Sampling.
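As a rough illustration of how diminishing returns can drive both selection and stopping, here is a minimal greedy sketch. It does not reproduce the paper's Bayesian Hierarchical Model or its stopping criterion: a simple set-coverage function stands in for information gain (coverage is also monotone and submodular), the scenario names and covered elements are invented, and the threshold `min_marginal_gain` is an assumed stopping rule.

```python
def greedy_select(candidates, gain, min_marginal_gain):
    """Greedily pick items until the best marginal gain is too small.

    gain(list_of_items) must return a number and is assumed to be
    monotone and submodular, so marginal gains shrink as items are added.
    """
    selected = []
    remaining = list(candidates)
    current = gain(selected)
    while remaining:
        best, best_value = None, current
        for item in remaining:
            value = gain(selected + [item])
            if value > best_value:
                best, best_value = item, value
        # Stop when nothing helps, or the best improvement is below
        # the (assumed) threshold.
        if best is None or best_value - current < min_marginal_gain:
            break
        selected.append(best)
        remaining.remove(best)
        current = best_value
    return selected


# Stand-in objective: how many distinct elements the chosen scenarios cover.
scenario_covers = {"s1": {1, 2, 3}, "s2": {3, 4}, "s3": {4}, "s4": {5}}


def coverage(chosen):
    return len(set().union(*(scenario_covers[s] for s in chosen)))


full_campaign = greedy_select(list(scenario_covers), coverage, 1)
short_campaign = greedy_select(list(scenario_covers), coverage, 2)
```

For monotone submodular objectives under a cardinality constraint, this greedy rule is known to achieve at least a (1 - 1/e) fraction of the optimal value, which is the usual sense of "near-optimal" in this setting.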

Safety of the Intended Driving Behavior Using Rulebooks

May 10, 2021

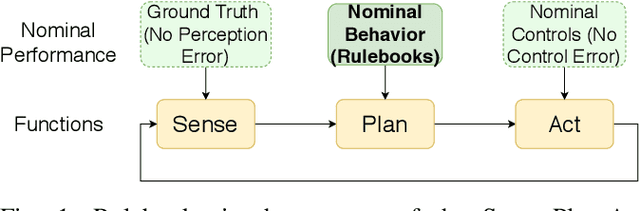

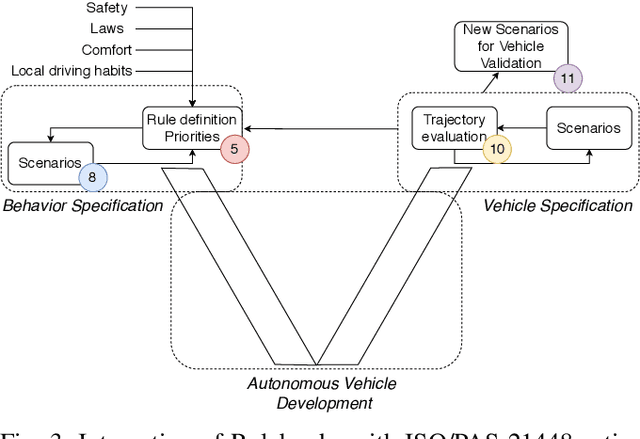

Abstract: Autonomous Vehicles (AVs) are complex systems that drive in uncertain environments and potentially navigate unforeseeable situations. Safety of these systems requires not only an absence of malfunctions but also high performance of functions in many different scenarios. The ISO/PAS 21448 [1] guidance recommends a process to ensure the Safety of the Intended Functionality (SOTIF) for road vehicles. This process starts with a functional specification that fully describes the intended functionality and further includes the verification and validation that the AV meets this specification. For the path planning function, defining the correct sequence of control actions for each vehicle in all potential driving situations is intractable. In this paper, the authors provide a link between the Rulebooks framework, presented by [2], and the SOTIF process. We establish that Rulebooks provide a functional description of the path planning task in an AV and discuss the potential usage of the method for verification and validation.

Rule-based Optimal Control for Autonomous Driving

Jan 14, 2021

Abstract: We develop optimal control strategies for Autonomous Vehicles (AVs) that are required to meet complex specifications imposed by traffic laws and cultural expectations of reasonable driving behavior. We formulate these specifications as rules, and specify their priorities by constructing a priority structure. We propose a recursive framework, in which the satisfaction of the rules in the priority structure is iteratively relaxed based on their priorities. Central to this framework is an optimal control problem, where convergence to desired states is achieved using Control Lyapunov Functions (CLFs), and safety is enforced through Control Barrier Functions (CBFs). We also show how the proposed framework can be used for after-the-fact, pass/fail evaluation of trajectories: a given trajectory is rejected if we can find a controller producing a trajectory that leads to less violation of the rule priority structure. We present case studies with multiple driving scenarios to demonstrate the effectiveness of the proposed framework.

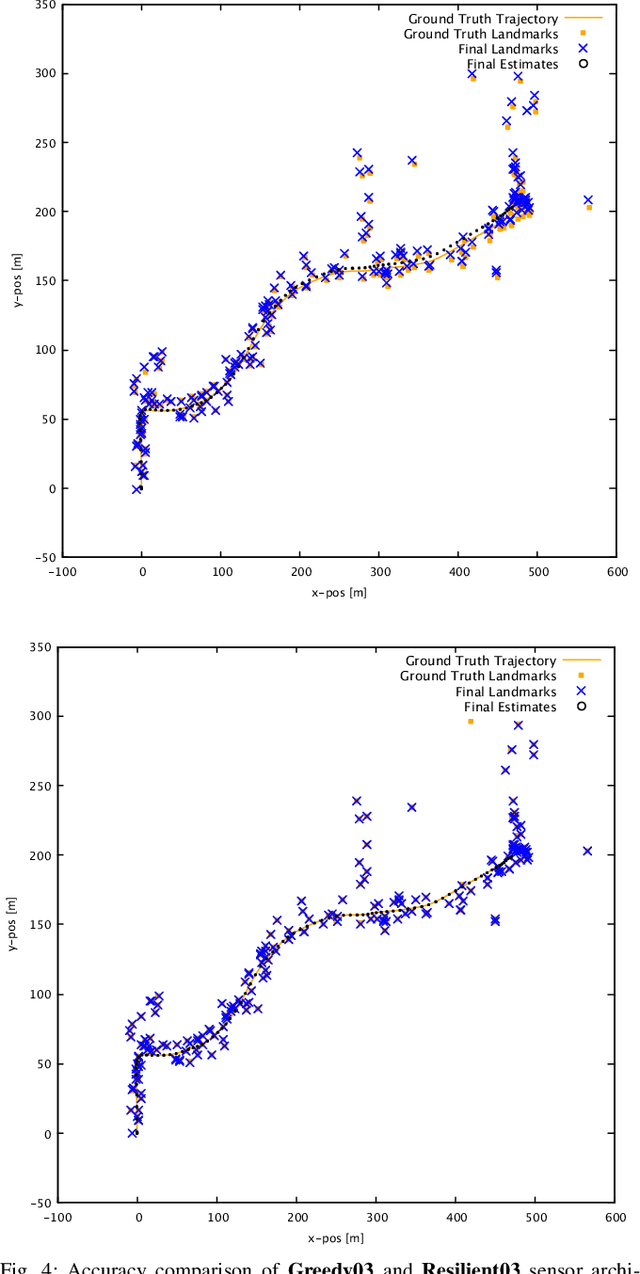

Resilient Sensor Architecture Design and Tradespace Analysis for Autonomous Vehicle Localization and Mapping

Jul 19, 2019

Abstract: As autonomous cars are rolled out into new environments, their ability to solve the simultaneous localization and mapping (SLAM) problem becomes critical. In order to tackle this problem, autonomous vehicles rely on sensor suites that provide them with information about their operating environment. When large-scale production is taken into consideration, a trade-off between an acceptable sensor suite cost and its resulting performance characteristics arises. Furthermore, guaranteeing the system's performance requires a resilient sensor network design. This work seeks to address such trade-offs by introducing a method that takes into account the performance, cost, and resiliency of distinct sensor selections. As a result, this method is able to offer sensor combination recommendations based on the vehicle's operating environment. It is found that the structure of the environment influences sensor placement, and that the design of a resilient sensor network involves careful consideration of both environmental attributes, such as landmark density and location, and the available types of complementary sensors. The proposed approach is demonstrated by evaluating it on sequences from the KITTI Benchmark Suite.
