Anna Wróblewska

On the Effectiveness of LLM-Specific Fine-Tuning for Detecting AI-Generated Text

Jan 27, 2026

Abstract: The rapid progress of large language models has enabled the generation of text that closely resembles human writing, creating challenges for authenticity verification in education, publishing, and digital security. Detecting AI-generated text has therefore become a crucial technical and ethical issue. This paper presents a comprehensive study of AI-generated text detection based on large-scale corpora and novel training strategies. We introduce a 1-billion-token corpus of human-authored texts spanning multiple genres and a 1.9-billion-token corpus of AI-generated texts produced by prompting a variety of LLMs across diverse domains. Using these resources, we develop and evaluate numerous detection models and propose two novel training paradigms: Per LLM and Per LLM family fine-tuning. Across a 100-million-token benchmark covering 21 large language models, our best fine-tuned detector achieves up to $99.6\%$ token-level accuracy, substantially outperforming existing open-source baselines.

Evaluating LLM-Generated Q&A Test: a Student-Centered Study

May 10, 2025

Abstract: This research prepares an automatic pipeline for generating reliable question-answer (Q&A) tests using AI chatbots. We automatically generated a GPT-4o-mini-based Q&A test for a Natural Language Processing course and evaluated its psychometric and perceived-quality metrics with students and experts. A mixed-format IRT analysis showed that the generated items exhibit strong discrimination and appropriate difficulty, while student and expert star ratings reflect high overall quality. A uniform DIF check identified two items for review. These findings demonstrate that LLM-generated assessments can match human-authored tests in psychometric performance and user satisfaction, illustrating a scalable approach to AI-assisted assessment development.

Applying Text Mining to Analyze Human Question Asking in Creativity Research

Jan 03, 2025

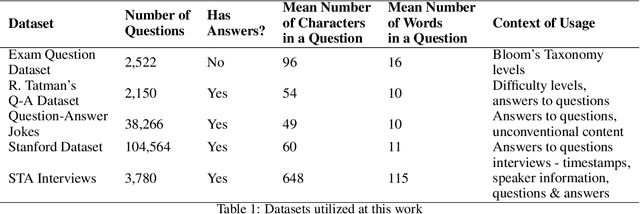

Abstract: Creativity relates to the ability to generate novel and effective ideas in the areas of interest. How are such creative ideas generated? One possible mechanism that supports creative ideation and is gaining increased empirical attention is by asking questions. Question asking is a likely cognitive mechanism that allows defining problems, facilitating creative problem solving. However, much is unknown about the exact role of questions in creativity. This work presents an attempt to apply text mining methods to measure the cognitive potential of questions, taking into account, among others, (a) question type, (b) question complexity, and (c) the content of the answer. This contribution summarizes the history of question mining as a part of creativity research, along with the natural language processing methods deemed useful or helpful in the study. In addition, a novel approach is proposed, implemented, and applied to five datasets. The experimental results obtained are comprehensively analyzed, suggesting that natural language processing has a role to play in creative research.

Consumer Transactions Simulation through Generative Adversarial Networks

Aug 07, 2024

Abstract: In the rapidly evolving domain of large-scale retail data systems, envisioning and simulating future consumer transactions has become a crucial area of interest. It offers significant potential to fortify demand forecasting and fine-tune inventory management. This paper presents an innovative application of Generative Adversarial Networks (GANs) to generate synthetic retail transaction data, specifically focusing on a novel system architecture that combines consumer behavior modeling with stock-keeping unit (SKU) availability constraints to address real-world assortment optimization challenges. We diverge from conventional methodologies by integrating SKU data into our GAN architecture and using more sophisticated embedding methods (e.g., hyper-graphs). This design choice enables our system not only to generate simulated consumer purchase behaviors but also to reflect the dynamic interplay between consumer behavior and SKU availability, an aspect often overlooked in legacy retail simulation models, partly because of data scarcity. Our GAN model generates transactions under stock constraints, pioneering a resourceful experimental system with practical implications for real-world retail operation and strategy. Preliminary results demonstrate enhanced realism in simulated transactions, measured by comparing generated items with real ones using methods employed earlier in related studies. This underscores the potential for more accurate predictive modeling.

Fake News Detection: It's All in the Data!

Jul 02, 2024

Abstract: This comprehensive survey serves as an indispensable resource for researchers embarking on the journey of fake news detection. By highlighting the pivotal role of dataset quality and diversity, it underscores the significance of these elements in the effectiveness and robustness of detection models. The survey meticulously outlines the key features of datasets, various labeling systems employed, and prevalent biases that can impact model performance. Additionally, it addresses critical ethical issues and best practices, offering a thorough overview of the current state of available datasets. Our contribution to this field is further enriched by the provision of a GitHub repository, which consolidates publicly accessible datasets into a single, user-friendly portal. This repository is designed to facilitate and stimulate further research and development efforts aimed at combating the pervasive issue of fake news.

Intelligent Interface: Enhancing Lecture Engagement with Didactic Activity Summaries

Jun 20, 2024

Abstract: Recently, multiple applications of machine learning have been introduced. They include various possibilities arising when image analysis methods are applied to, broadly understood, video streams. In this context, a novel tool has been developed for academic educators to enhance the teaching process by automating, summarizing, and offering prompt feedback on conducting lectures. The implemented prototype utilizes machine learning-based techniques to recognise selected didactic and behavioural features of teachers within lecture video recordings. Specifically, users (teachers) can upload their lecture videos, which are preprocessed and analysed using machine learning models. Next, users can view summaries of recognized didactic features through interactive charts and tables. Additionally, stored ML-based prediction results support comparisons between lectures based on their didactic content. The developed application applies text-based models trained on lecture transcriptions, with transcription quality enhanced by adopting an automatic speech recognition solution. Furthermore, the system offers flexibility for (future) integration of new/additional machine-learning models and software modules for image and video analysis.

Mining United Nations General Assembly Debates

Jun 19, 2024

Abstract: This project explores the application of Natural Language Processing (NLP) techniques to analyse United Nations General Assembly (UNGA) speeches. Using NLP allows for the efficient processing and analysis of large volumes of textual data, enabling the extraction of semantic patterns, sentiment analysis, and topic modelling. Our goal is to deliver a comprehensive dataset and a tool (interface with descriptive statistics and automatically extracted topics) from which political scientists can derive insights into international relations and gain a nuanced understanding of global diplomatic discourse.

Improving Object Detection Quality in Football Through Super-Resolution Techniques

Jan 31, 2024

Abstract: This study explores the potential of super-resolution techniques in enhancing object detection accuracy in football. Given the sport's fast-paced nature and the critical importance of precise object (e.g. ball, player) tracking for both analysis and broadcasting, super-resolution could offer significant improvements. We investigate how advanced image processing through super-resolution impacts the accuracy and reliability of object detection algorithms in processing football match footage. Our methodology involved applying state-of-the-art super-resolution techniques to a diverse set of football match videos from SoccerNet, followed by object detection using Faster R-CNN. The performance of these algorithms, both with and without super-resolution enhancement, was rigorously evaluated in terms of detection accuracy. The results indicate a marked improvement in object detection accuracy when super-resolution preprocessing is applied. The improvement of object detection through the integration of super-resolution techniques yields significant benefits, especially for low-resolution scenarios, with a notable 12% increase in mean Average Precision (mAP) at an IoU (Intersection over Union) range of 0.50:0.95 for 320x240 images when increasing the resolution fourfold using RLFN. As the dimensions increase, the magnitude of improvement becomes more subdued; however, a discernible improvement in the quality of detection is consistently evident. Additionally, we discuss the implications of these findings for real-time sports analytics, player tracking, and the overall viewing experience. The study contributes to the growing field of sports technology by demonstrating the practical benefits and limitations of integrating super-resolution techniques in football analytics and broadcasting.

Survey of Action Recognition, Spotting and Spatio-Temporal Localization in Soccer -- Current Trends and Research Perspectives

Sep 21, 2023

Abstract: Action scene understanding in soccer is a challenging task due to the complex and dynamic nature of the game, as well as the interactions between players. This article provides a comprehensive overview of this task divided into action recognition, spotting, and spatio-temporal action localization, with a particular emphasis on the modalities used and multimodal methods. We explore the publicly available data sources and metrics used to evaluate models' performance. The article reviews recent state-of-the-art methods that leverage deep learning techniques and traditional methods. We focus on multimodal methods, which integrate information from multiple sources, such as video and audio data, and also those that represent one source in various ways. The advantages and limitations of methods are discussed, along with their potential for improving the accuracy and robustness of models. Finally, the article highlights some of the open research questions and future directions in the field of soccer action recognition, including the potential for multimodal methods to advance this field. Overall, this survey provides a valuable resource for researchers interested in the field of action scene understanding in soccer.

Automating the Analysis of Institutional Design in International Agreements

May 26, 2023

Abstract: This paper explores the automatic knowledge extraction of formal institutional design - norms, rules, and actors - from international agreements. The focus was to analyze the relationship between the visibility and centrality of actors in the formal institutional design in regulating critical aspects of cultural heritage relations. The developed tool utilizes techniques such as collecting legal documents, annotating them with Institutional Grammar, and using graph analysis to explore the formal institutional design. The system was tested against the 2003 UNESCO Convention for the Safeguarding of the Intangible Cultural Heritage.
