Anna Rudenko

SparseGrad: A Selective Method for Efficient Fine-tuning of MLP Layers

Oct 09, 2024

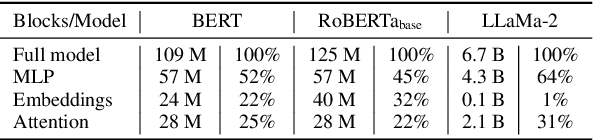

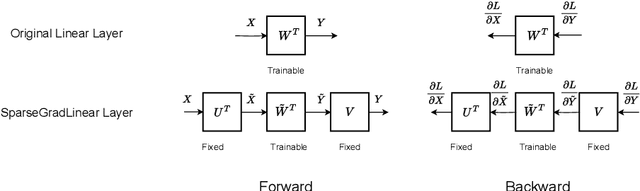

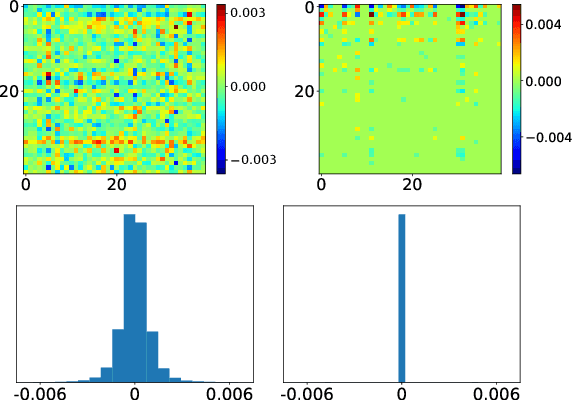

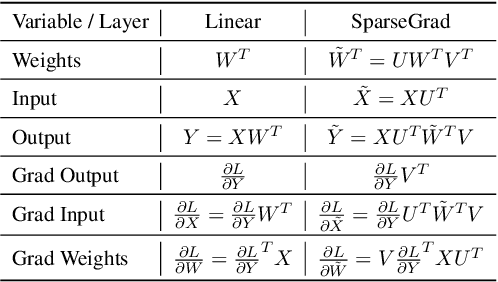

Abstract: The performance of Transformer models has been enhanced by increasing the number of parameters and the length of the processed text. Consequently, fine-tuning the entire model becomes a memory-intensive process. High-performance methods for parameter-efficient fine-tuning (PEFT) typically work with Attention blocks and often overlook MLP blocks, which contain about half of the model parameters. We propose a new selective PEFT method, namely SparseGrad, that performs well on MLP blocks. We transfer layer gradients to a space where only about 1% of the layer's elements remain significant. By converting gradients into a sparse structure, we reduce the number of updated parameters. We apply SparseGrad to fine-tune BERT and RoBERTa for the NLU task and LLaMa-2 for the Question-Answering task. In these experiments, with identical memory requirements, our method outperforms LoRA and MeProp, two popular state-of-the-art PEFT approaches.
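The idea of updating only a small fraction of gradient entries can be illustrated with a minimal sketch. This is not the SparseGrad procedure itself: the abstract does not specify the space into which gradients are transferred, so the example below simply assumes plain magnitude-based selection on a raw gradient matrix. The helper name `sparsify_top_fraction`, the matrix size, and the `keep_fraction` parameter are all hypothetical.

```python
import random


def sparsify_top_fraction(grad, keep_fraction=0.01):
    """Keep the largest-magnitude entries of a 2-D gradient and zero the rest.

    Illustrative stand-in only: plain top-k selection by absolute value,
    not the gradient transformation used by SparseGrad.
    """
    rows, cols = len(grad), len(grad[0])
    k = max(1, round(keep_fraction * rows * cols))
    # Rank every (row, col) position by the absolute value stored there.
    positions = [(r, c) for r in range(rows) for c in range(cols)]
    positions.sort(key=lambda rc: abs(grad[rc[0]][rc[1]]), reverse=True)
    keep = set(positions[:k])
    return [
        [grad[r][c] if (r, c) in keep else 0.0 for c in range(cols)]
        for r in range(rows)
    ]


# Hypothetical 20 x 100 gradient filled with Gaussian noise.
random.seed(0)
grad = [[random.gauss(0.0, 1.0) for _ in range(100)] for _ in range(20)]

sparse_grad = sparsify_top_fraction(grad, keep_fraction=0.01)
kept = sum(1 for row in sparse_grad for value in row if value != 0.0)
print(kept, "of", 20 * 100, "entries kept")
```

With about 1% of the entries kept, an optimizer step would only need to touch the corresponding parameters, which is the source of the memory savings the abstract describes.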

Interpretable Deep Learning for Pattern Recognition in Brain Differences Between Men and Women

Jun 20, 2020

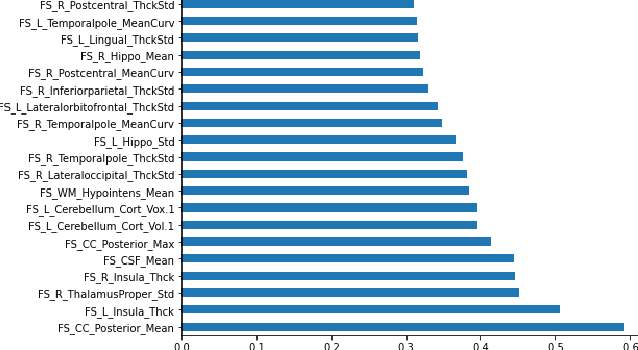

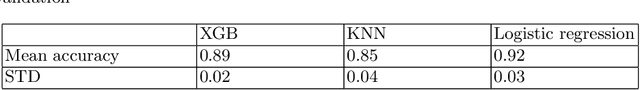

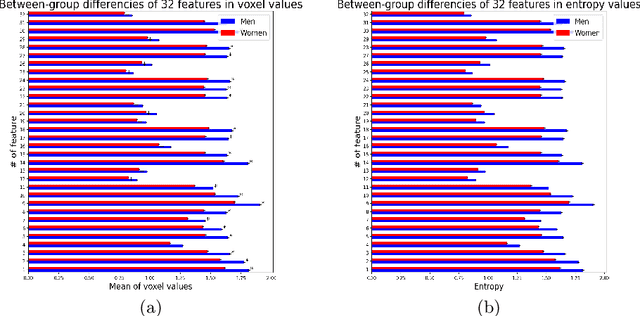

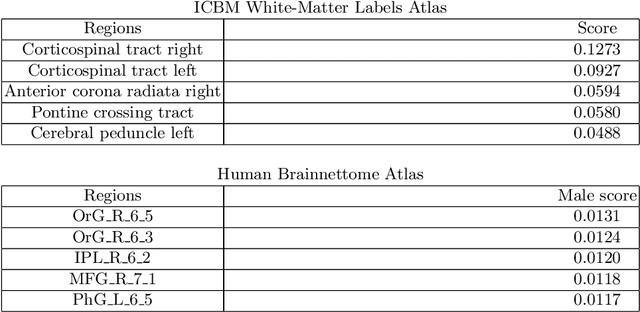

Abstract: Deep learning shows high potential for many medical image analysis tasks. Neural networks can work with full-size data without extensive preprocessing and feature generation and, thus, without the associated information loss. Recent work has shown that morphological differences between specific brain regions can be found on MRI with deep learning techniques. We consider a pattern recognition task based on a large open-access dataset of healthy subjects: an exploration of brain differences between men and women. However, interpretation of the recently proposed models is based on a region of interest and cannot be extended to pixel- or voxel-wise image interpretation, which is considered to be more informative. In this paper, we confirm the previous findings in sex differences from diffusion-tensor imaging on T1-weighted brain MRI scans. We compare the results of three voxel-based 3D CNN interpretation methods (Meaningful Perturbations, Grad-CAM, and Guided Backpropagation) and provide the open-source code.
