Andrew Wallace

Radar SLAM: A Robust SLAM System for All Weather Conditions

Apr 12, 2021

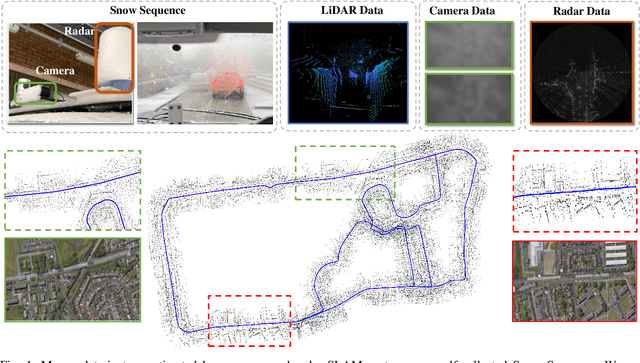

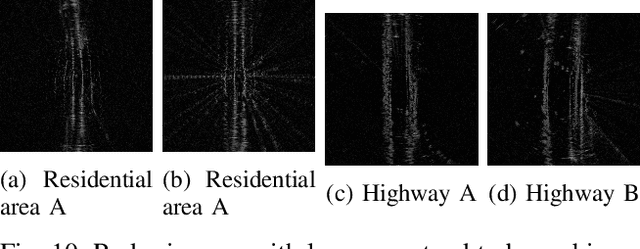

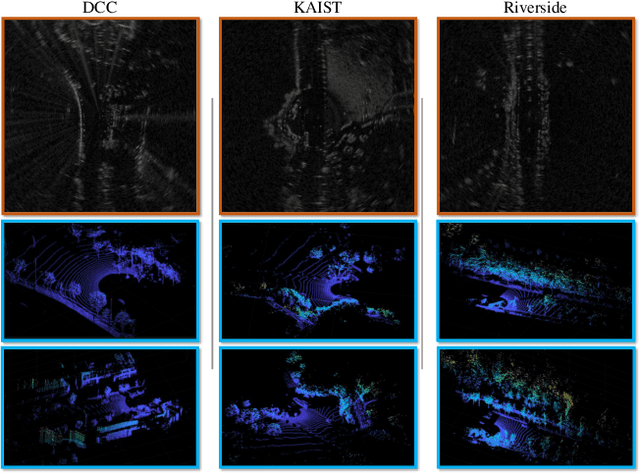

Abstract: A Simultaneous Localization and Mapping (SLAM) system must be robust to support long-term mobile vehicle and robot applications. However, camera and LiDAR based SLAM systems can be fragile when facing challenging illumination or weather conditions which degrade their imagery and point cloud data. Radar, whose operating electromagnetic spectrum is less affected by environmental changes, is promising although its distinct sensing geometry and noise characteristics bring open challenges when being exploited for SLAM. This paper studies the use of a Frequency Modulated Continuous Wave radar for SLAM in large-scale outdoor environments. We propose a full radar SLAM system, including a novel radar motion tracking algorithm that leverages radar geometry for reliable feature tracking. It also optimally compensates motion distortion and estimates pose by joint optimization. Its loop closure component is designed to be simple yet efficient for radar imagery by capturing and exploiting structural information of the surrounding environment. Extensive experiments on three public radar datasets, ranging from city streets and residential areas to countryside and highways, show competitive accuracy and reliability performance of the proposed radar SLAM system compared to the state-of-the-art LiDAR, vision and radar methods. The results show that our system is technically viable in achieving reliable SLAM in extreme weather conditions, e.g. heavy snow and dense fog, demonstrating the promising potential of using radar for all-weather localization and mapping.

RADIATE: A Radar Dataset for Automotive Perception

Oct 18, 2020

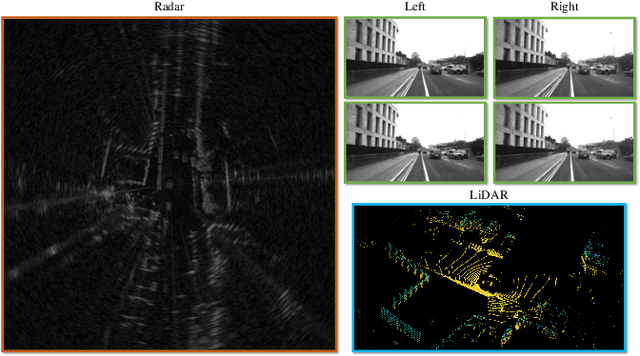

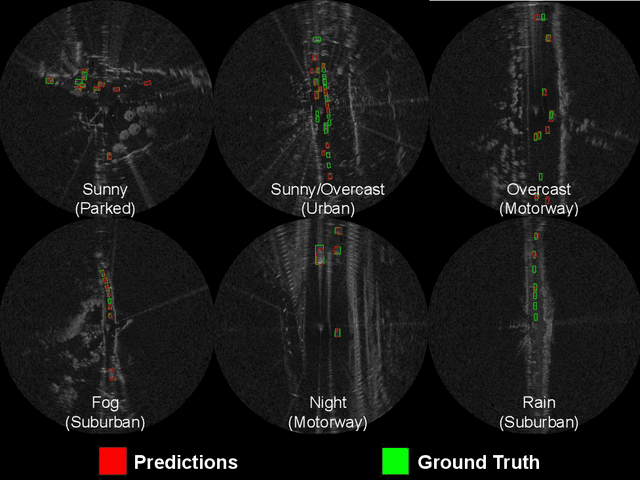

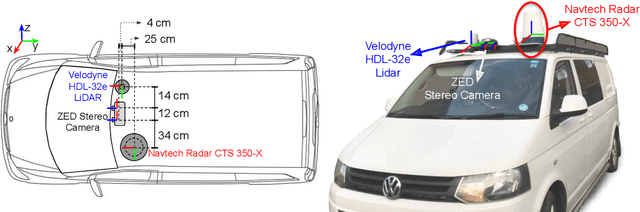

Abstract: Datasets for autonomous cars are essential for the development and benchmarking of perception systems. However, most existing datasets are captured with camera and LiDAR sensors in good weather conditions. In this paper, we present the RAdar Dataset In Adverse weaThEr (RADIATE), aiming to facilitate research on object detection, tracking and scene understanding using radar sensing for safe autonomous driving. RADIATE includes 3 hours of annotated radar images with more than 200K labelled road actors in total, on average about 4.6 instances per radar image. It covers 8 different categories of actors in a variety of weather conditions (e.g., sun, night, rain, fog and snow) and driving scenarios (e.g., parked, urban, motorway and suburban), representing different levels of challenge. To the best of our knowledge, this is the first public radar dataset which provides high-resolution radar images on public roads with a large number of road actors labelled. The data collected in adverse weather, e.g., fog and snowfall, is unique. Some baseline results of radar-based object detection and recognition are given to show that the use of radar data is promising for automotive applications in bad weather, where vision and LiDAR can fail. RADIATE also has stereo images, 32-channel LiDAR and GPS data, directed at other applications such as sensor fusion, localisation and mapping. The public dataset can be accessed at http://pro.hw.ac.uk/radiate/.

300 GHz Radar Object Recognition based on Deep Neural Networks and Transfer Learning

Dec 06, 2019

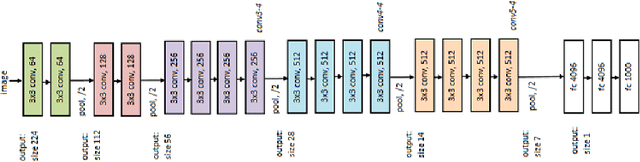

Abstract: For high-resolution scene mapping and object recognition, optical technologies such as cameras and LiDAR are the sensors of choice. However, for robust future vehicle autonomy and driver assistance in adverse weather conditions, improvements in automotive radar technology, and the development of algorithms and machine learning for robust mapping and recognition, are essential. In this paper, we describe a methodology based on deep neural networks to recognise objects in 300 GHz radar images, investigating robustness to changes in range, orientation and different receivers in a laboratory environment. As the training data is limited, we have also investigated the effects of transfer learning. As a necessary first step before road trials, we have also considered detection and classification in multiple-object scenes.

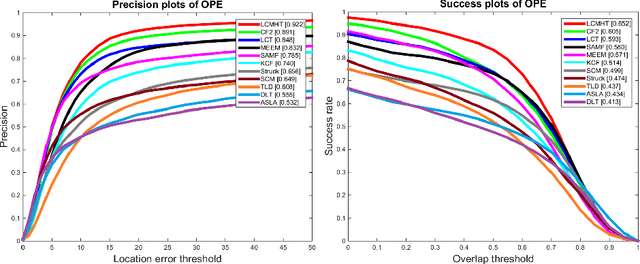

Long-term Correlation Tracking using Multi-layer Hybrid Features in Sparse and Dense Environments

Sep 06, 2018

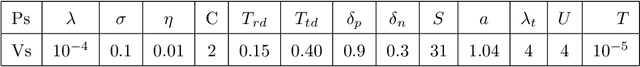

Abstract: Tracking a target of interest in both sparse and crowded environments is a challenging problem, not yet successfully addressed in the literature. In this paper, we propose a new long-term visual tracking algorithm, learning discriminative correlation filters and using an online classifier, to track a target of interest in both sparse and crowded video sequences. First, we learn a translation correlation filter using a multi-layer hybrid of convolutional neural networks (CNN) and traditional hand-crafted features. We combine advantages of both the lower convolutional layer which retains more spatial details for precise localization and the higher convolutional layer which encodes semantic information for handling appearance variations, and then integrate these with histogram of oriented gradients (HOG) and color-naming traditional features. Second, we include a re-detection module for overcoming tracking failures due to long-term occlusions by training an incremental (online) SVM on the most confident frames using hand-engineered features. This re-detection module is activated only when the correlation response of the object is below some pre-defined threshold. The module generates high-score detection proposals which are temporally filtered using a Gaussian mixture probability hypothesis density (GM-PHD) filter to find the detection proposal with the maximum weight as the target state estimate, removing the other detection proposals as clutter. Finally, we learn a scale correlation filter for estimating the scale of a target by constructing a target pyramid around the estimated or re-detected position using the HOG features. We carry out extensive experiments on both sparse and dense data sets which show that our method significantly outperforms state-of-the-art methods.
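
The gating step described in the abstract (re-detect only when the correlation response falls below a threshold, then keep the maximum-weight proposal) can be illustrated with a minimal sketch. Everything named here is hypothetical: the function `select_target_state`, the threshold value, and the fallback to the filter estimate when no proposals exist are assumptions for illustration, and the proposal weights are assumed to have been produced already (for example by a GM-PHD filter), which the sketch does not implement.

```python
def select_target_state(peak_response, filter_estimate, proposals, threshold=0.25):
    """Pick the target state for one frame.

    peak_response   : maximum of the translation filter's response map
    filter_estimate : (x, y) position proposed by the correlation filter
    proposals       : list of (weight, (x, y)) re-detection proposals,
                      assumed to be weighted already (e.g. by a GM-PHD filter)
    threshold       : hypothetical re-detection threshold (not from the paper)
    """
    # Confident response: trust the correlation filter, skip re-detection.
    # Assumption: with no proposals available we also fall back to the filter.
    if peak_response >= threshold or not proposals:
        return filter_estimate
    # Low response: keep the maximum-weight proposal, discard the rest as clutter.
    _, state = max(proposals, key=lambda wp: wp[0])
    return state


if __name__ == "__main__":
    props = [(0.2, (5, 5)), (0.7, (50, 50))]
    print(select_target_state(0.8, (10, 10), props))  # filter estimate is kept
    print(select_target_state(0.1, (10, 10), props))  # re-detected position is used
```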

Development of a N-type GM-PHD Filter for Multiple Target, Multiple Type Visual Tracking

Sep 06, 2018

Abstract: We propose a new framework that extends the standard Probability Hypothesis Density (PHD) filter for multiple targets having $N$ different types where $N \geq 2$ based on Random Finite Set (RFS) theory, taking into account not only background false positives (clutter), but also confusions among detections of different target types, which are in general different in character from background clutter. Under the assumptions of Gaussianity and linearity, our framework extends the existing Gaussian mixture (GM) implementation of the standard PHD filter to create an N-type GM-PHD filter. The methodology is applied to real video sequences by integrating object detectors' information into this filter for two scenarios. In the first scenario, a tri-GM-PHD filter ($N=3$) is applied to real video sequences containing three types of multiple targets in the same scene, two football teams and a referee, using separate but confused detections. In the second scenario, we use a dual GM-PHD filter ($N=2$) for tracking pedestrians and vehicles in the same scene, handling their detectors' confusions. For both cases, Munkres's variant of the Hungarian assignment algorithm is used to associate tracked target identities between frames. This approach is evaluated and compared to both raw detection and independent GM-PHD filters using the Optimal Sub-pattern Assignment (OSPA) metric and the discrimination rate. The evaluation shows the improved performance of our strategy on real video sequences.
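
For readers unfamiliar with the OSPA metric mentioned in the abstract, the following is a minimal sketch of its standard definition for two finite point sets. The cut-off `c` and order `p` defaults are illustrative assumptions, not values taken from the paper, and the optimal assignment is found by brute force over permutations rather than with the Munkres/Hungarian algorithm, so it is only practical for small sets.

```python
from itertools import permutations
from math import dist


def ospa(X, Y, c=100.0, p=2):
    """OSPA distance between point sets X and Y (cut-off c, order p).

    c and p are illustrative defaults, not settings from the paper.
    """
    # By convention X is the smaller set (m <= n).
    if len(X) > len(Y):
        X, Y = Y, X
    m, n = len(X), len(Y)
    if n == 0:
        return 0.0  # both sets empty
    # Brute-force search for the cheapest assignment of X's points to
    # distinct points of Y, with each distance capped at the cut-off c.
    best = min(
        sum(min(dist(x, Y[j]), c) ** p for x, j in zip(X, perm))
        for perm in permutations(range(n), m)
    )
    # Each of the (n - m) unmatched points is penalised with c.
    return ((best + (c ** p) * (n - m)) / n) ** (1.0 / p)


if __name__ == "__main__":
    truth = [(0.0, 0.0), (10.0, 10.0)]
    estimate = [(0.0, 1.0), (10.0, 10.0), (40.0, 40.0)]
    print(ospa(truth, estimate, c=20.0, p=1))
```

The single value combines a localisation error (matched points) and a cardinality error (missed or spurious targets), which is why it suits comparisons between multi-target filters and raw detections.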

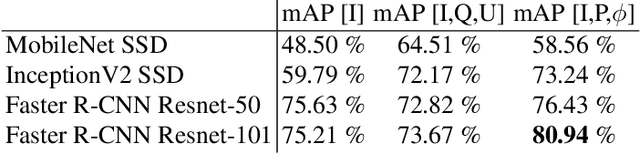

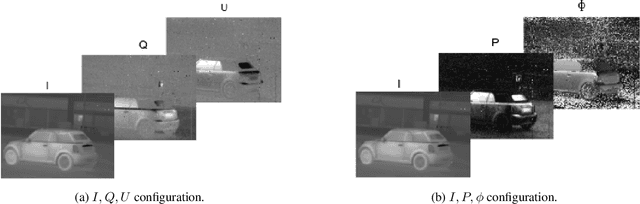

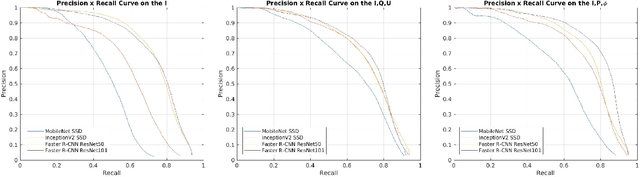

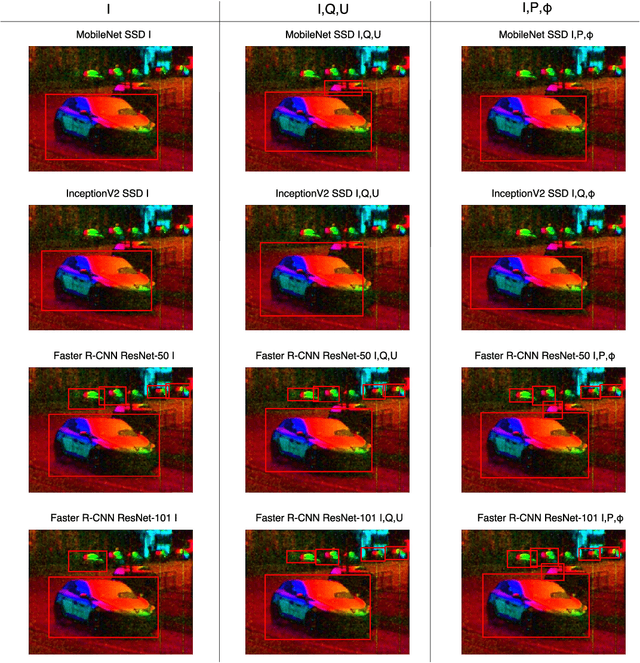

POL-LWIR Vehicle Detection: Convolutional Neural Networks Meet Polarised Infrared Sensors

Apr 07, 2018

Abstract: For vehicle autonomy, driver assistance and situational awareness, it is necessary to operate at day and night, and in all weather conditions. In particular, long wave infrared (LWIR) sensors that receive predominantly emitted radiation have the capability to operate at night as well as during the day. In this work, we employ a polarised LWIR (POL-LWIR) camera to acquire data from a mobile vehicle, to compare and contrast four different convolutional neural network (CNN) configurations to detect other vehicles in video sequences. We evaluate two distinct and promising approaches, two-stage detection (Faster-RCNN) and one-stage detection (SSD), in four different configurations. We also employ two different image decompositions: the first based on the polarisation ellipse and the second on the Stokes parameters themselves. To evaluate our approach, the experimental trials were quantified by mean average precision (mAP) and processing time, showing a clear trade-off between the two factors. For example, the best mAP result of 80.94% was achieved using Faster-RCNN, but at a frame rate of 6.4 fps. In contrast, MobileNet SSD achieved only 64.51% mAP, but at 53.4 fps.
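
The accuracy/speed trade-off quoted in the abstract can be made concrete by converting the reported frame rates to per-frame processing time. The sketch below uses only the four figures given above; the helper name `latency_ms` and the dictionary layout are illustrative assumptions.

```python
# (mAP in %, frames per second) as reported in the abstract.
results = {
    "Faster-RCNN": (80.94, 6.4),
    "MobileNet SSD": (64.51, 53.4),
}


def latency_ms(fps):
    """Per-frame processing time in milliseconds for a given frame rate."""
    return 1000.0 / fps


if __name__ == "__main__":
    for name, (map_pct, fps) in results.items():
        print(f"{name}: {map_pct:.2f}% mAP, {latency_ms(fps):.1f} ms per frame")

    # Gap between the two configurations.
    map_gap = results["Faster-RCNN"][0] - results["MobileNet SSD"][0]
    speedup = results["MobileNet SSD"][1] / results["Faster-RCNN"][1]
    print(f"mAP gap: {map_gap:.2f} points, speed-up: {speedup:.1f}x")
```

On these figures, Faster-RCNN needs roughly 156 ms per frame against roughly 19 ms for MobileNet SSD, so the 16.43-point mAP gain costs about an eightfold slowdown.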
