Alireza Ahrabian

RADIATE: A Radar Dataset for Automotive Perception

Oct 18, 2020

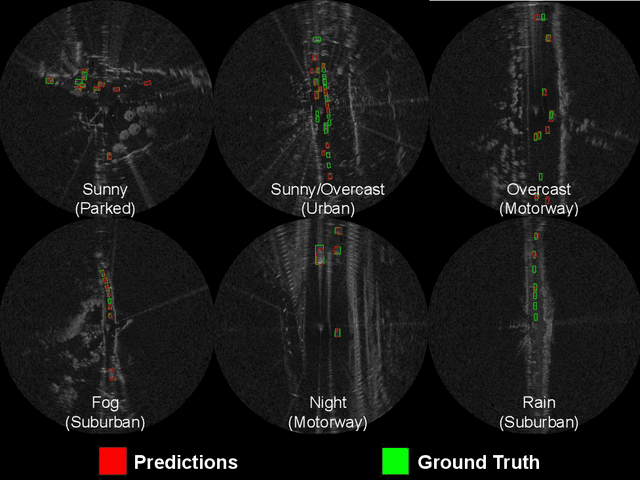

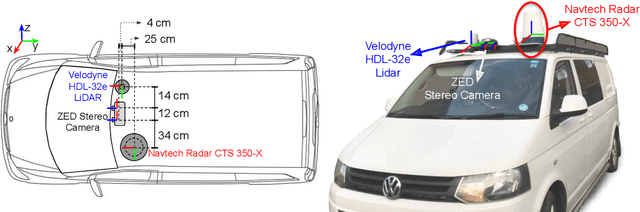

Abstract: Datasets for autonomous cars are essential for the development and benchmarking of perception systems. However, most existing datasets are captured with camera and LiDAR sensors in good weather conditions. In this paper, we present the RAdar Dataset In Adverse weaThEr (RADIATE), aiming to facilitate research on object detection, tracking and scene understanding using radar sensing for safe autonomous driving. RADIATE includes 3 hours of annotated radar images with more than 200K labelled road actors in total, an average of about 4.6 instances per radar image. It covers 8 different categories of actors in a variety of weather conditions (e.g., sun, night, rain, fog and snow) and driving scenarios (e.g., parked, urban, motorway and suburban), representing different levels of challenge. To the best of our knowledge, this is the first public radar dataset which provides high-resolution radar images on public roads with a large number of labelled road actors. The data collected in adverse weather, e.g., fog and snowfall, is unique. Some baseline results of radar-based object detection and recognition are given to show that the use of radar data is promising for automotive applications in bad weather, where vision and LiDAR can fail. RADIATE also has stereo images, 32-channel LiDAR and GPS data, directed at other applications such as sensor fusion, localisation and mapping. The public dataset can be accessed at http://pro.hw.ac.uk/radiate/.

Image-Guided Depth Upsampling via Hessian and TV Priors

Oct 31, 2019

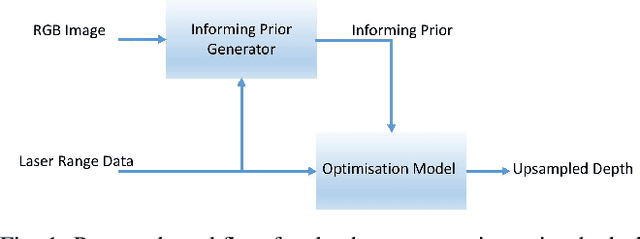

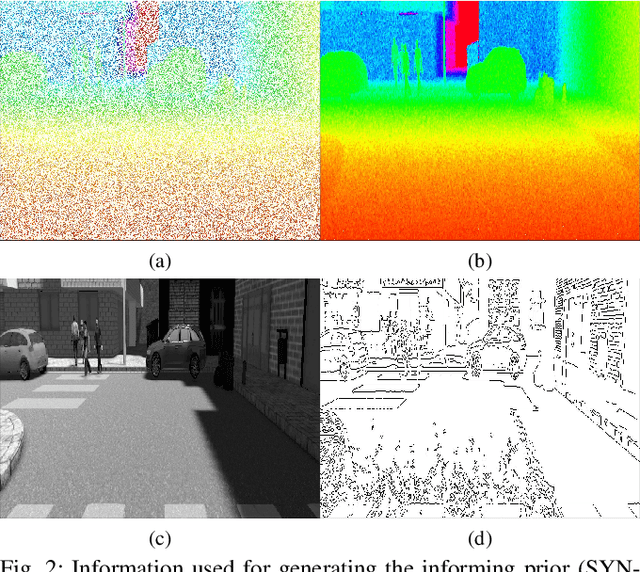

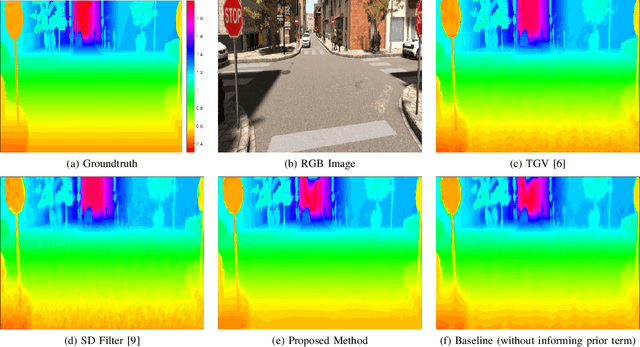

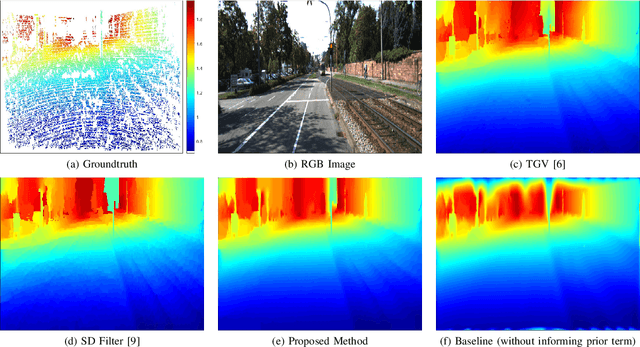

Abstract: We propose a method that combines sparse depth (LiDAR) measurements with an intensity image to produce a dense high-resolution depth image. As there are few, but accurate, depth measurements from the scene, our method infers the remaining depth values by incorporating information from the intensity image, namely the magnitudes and directions of the identified edges, and by assuming that the scene is composed mostly of flat surfaces. Such inference is achieved by solving a convex optimisation problem with properly weighted regularisers that are based on the ℓ1-norm (specifically, on total variation). We solve the resulting problem with a computationally efficient ADMM-based algorithm. Using the SYNTHIA and KITTI datasets, our experiments show that the proposed method achieves a depth reconstruction performance comparable to or better than other model-based methods.
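The general idea of edge-weighted total variation solved with ADMM can be illustrated with a minimal 1D sketch. This is not the paper's algorithm: it assumes a single scanline, uses only a first-order weighted TV term (no Hessian prior), and the function name `upsample_depth_1d`, the exponential edge weighting, and the parameters `lam`, `rho` and `beta` are hypothetical choices made for illustration.

```python
import numpy as np


def soft_threshold(v, t):
    """Element-wise soft-thresholding, the proximal operator of t * |.|."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def upsample_depth_1d(sparse_depth, mask, intensity,
                      lam=0.1, rho=1.0, beta=10.0, n_iter=500):
    """Fill in a 1D depth signal from sparse samples with weighted TV.

    Solves  min_x 0.5 * ||M (x - y)||^2 + lam * ||W D x||_1  by ADMM,
    where M selects the observed samples, D is the forward-difference
    operator, and W down-weights the penalty across intensity edges.
    """
    n = sparse_depth.size
    D = np.diff(np.eye(n), axis=0)               # (n-1, n): (D x)_i = x[i+1] - x[i]
    w = np.exp(-beta * np.abs(D @ intensity))    # assumed weighting: small at strong edges
    M = np.diag(mask.astype(float))

    A = M + rho * D.T @ D                        # system matrix of the x-update
    b0 = mask * sparse_depth
    z = np.zeros(n - 1)                          # auxiliary variable, z = D x
    u = np.zeros(n - 1)                          # scaled dual variable
    x = np.zeros(n)

    for _ in range(n_iter):
        x = np.linalg.solve(A, b0 + rho * D.T @ (z - u))   # quadratic subproblem
        z = soft_threshold(D @ x + u, lam * w / rho)       # weighted l1 subproblem
        u = u + D @ x - z                                  # dual ascent step
    return x


if __name__ == "__main__":
    n = 20
    idx = np.arange(n)
    true_depth = np.where(idx < 10, 1.0, 3.0)    # two flat surfaces
    intensity = np.where(idx < 10, 0.0, 1.0)     # intensity edge at the depth jump
    mask = np.zeros(n)
    mask[[2, 7, 12, 17]] = 1.0                   # four depth samples
    estimate = upsample_depth_1d(mask * true_depth, mask, intensity)
    print(np.round(estimate, 2))
```

Because the penalty is almost switched off at the intensity edge, the reconstruction can place the depth discontinuity there while staying flat elsewhere, which mirrors the flat-surface assumption described in the abstract. A 2D version would typically replace the dense solve with a sparse or FFT-based one.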

Segment Parameter Labelling in MCMC Mean-Shift Change Detection

Oct 26, 2017

Abstract: This work addresses the problem of segmentation in time series data with respect to a statistical parameter of interest in Bayesian models. It is common to assume that the parameters are distinct within each segment. As such, many Bayesian change point detection models do not exploit the segment parameter patterns, which can improve performance. This work proposes a Bayesian mean-shift change point detection algorithm that makes use of repetition in segment parameters, by introducing segment class labels that utilise a Dirichlet process prior. The performance of the proposed approach was assessed on both synthetic and real-world data, highlighting the enhanced performance when using parameter labelling.
