Mehryar Emambakhsh

Filtering Point Targets via Online Learning of Motion Models

Feb 20, 2019

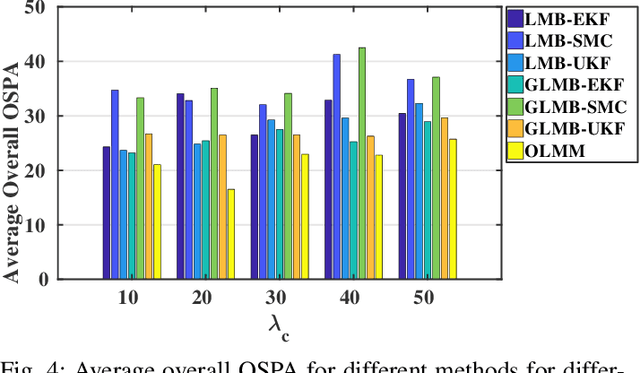

Abstract: Filtering point targets in highly cluttered and noisy data frames can be very challenging, especially for complex target motions. Fixed motion models can fail to provide accurate predictions, while learning-based algorithms can be difficult to design (due to the variable number of targets), slow to train and dependent on separate train/test steps. To address these issues, this paper proposes a multi-target filtering algorithm which learns the motion models on the fly, using a recurrent neural network with a long short-term memory architecture as a regression block. The target state predictions are then corrected using a novel data association algorithm with low computational complexity. The proposed algorithm is evaluated over synthetic and real point target filtering scenarios, demonstrating strong performance on highly cluttered data sequences.
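The abstract does not describe the data association step in detail, so the following is only a generic sketch of the predict-then-correct idea, not the paper's algorithm. It pairs each predicted target position with at most one measurement using a greedy, gated nearest-neighbour rule, then pulls matched predictions towards their measurements. The function names `associate` and `correct`, the gate radius and the fixed correction gain are all assumptions made for illustration.

```python
import math


def associate(predictions, measurements, gate=2.0):
    """Greedy gated nearest-neighbour association (a generic stand-in).

    Returns a dict {prediction index: measurement index} and the list of
    measurement indices left unmatched (clutter or newly born targets).
    """
    # Collect every prediction/measurement pair that falls inside the gate.
    candidates = []
    for i, p in enumerate(predictions):
        for j, m in enumerate(measurements):
            d = math.dist(p, m)
            if d <= gate:
                candidates.append((d, i, j))

    # Accept the closest pairs first; each index is used at most once.
    candidates.sort()
    matches, used = {}, set()
    for _, i, j in candidates:
        if i not in matches and j not in used:
            matches[i] = j
            used.add(j)

    unmatched = [j for j in range(len(measurements)) if j not in used]
    return matches, unmatched


def correct(predictions, measurements, matches, gain=0.5):
    """Move matched predictions towards their measurement by a fixed gain.

    Unmatched predictions (for example occluded targets) are kept as-is.
    """
    corrected = []
    for i, p in enumerate(predictions):
        if i in matches:
            m = measurements[matches[i]]
            corrected.append(tuple(pk + gain * (mk - pk) for pk, mk in zip(p, m)))
        else:
            corrected.append(tuple(p))
    return corrected


if __name__ == "__main__":
    preds = [(0.0, 0.0), (10.0, 10.0)]
    meas = [(0.5, 0.0), (50.0, 50.0), (10.0, 11.0)]  # middle one is clutter
    matches, unmatched = associate(preds, meas)
    print(matches, unmatched)
    print(correct(preds, meas, matches))
```

In a filter of the kind described above, the predictions would come from the learned motion model rather than being hard-coded, and unmatched measurements would be candidates for target birth or clutter rejection.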

Nasal Patches and Curves for Expression-robust 3D Face Recognition

Jan 01, 2019

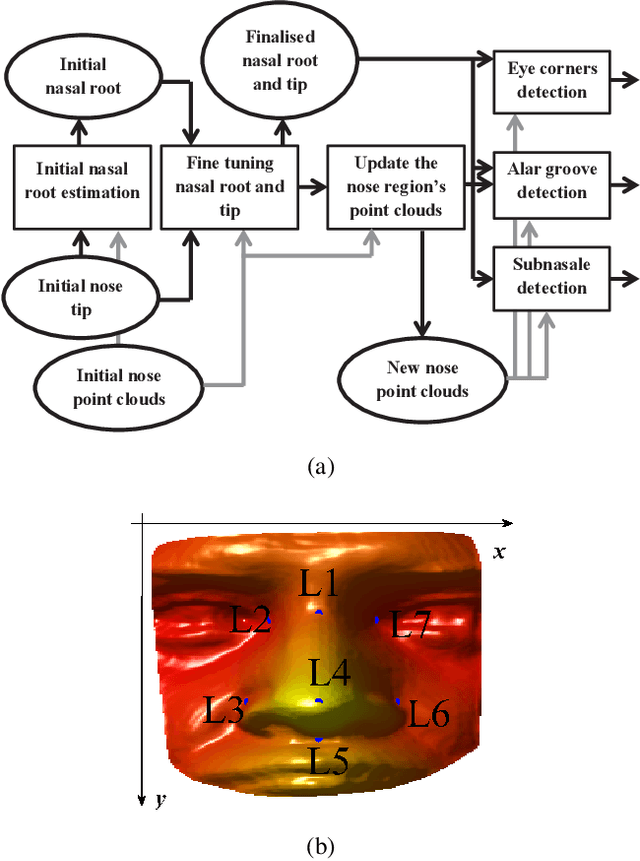

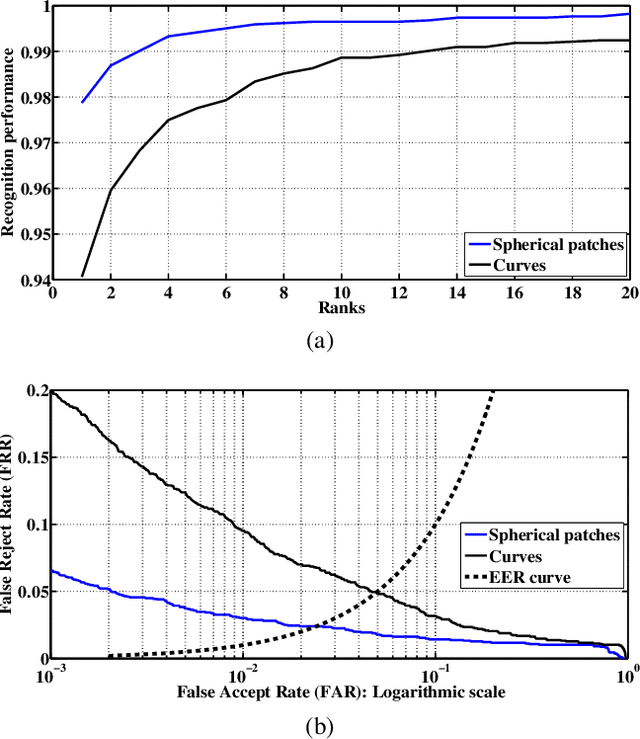

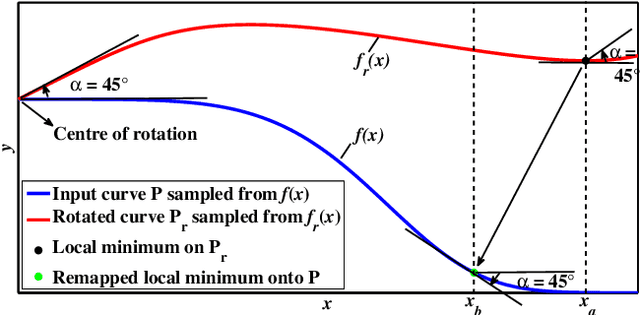

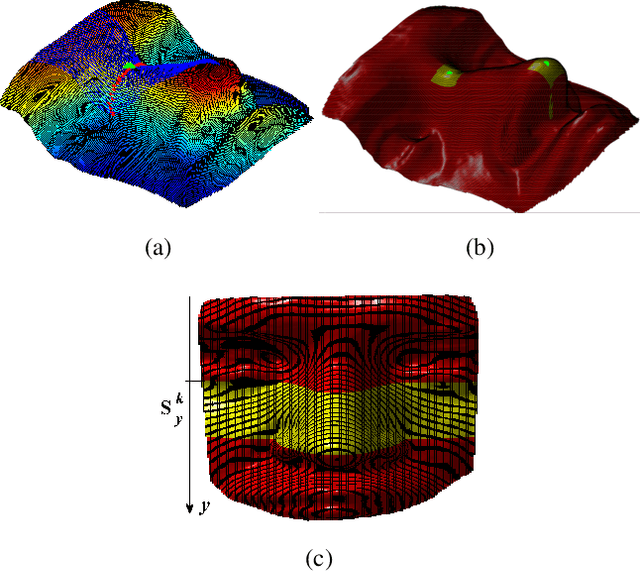

Abstract: The potential of the nasal region for expression-robust 3D face recognition is thoroughly investigated by a novel five-step algorithm. First, the nose tip location is coarsely detected, the face is segmented and aligned, and the nasal region is cropped. Then, a very accurate and consistent nasal landmarking algorithm detects seven keypoints on the nasal region. In the third step, a feature extraction algorithm based on the surface normals of Gabor-wavelet filtered depth maps is utilised and, then, a set of spherical patches and curves is localised over the nasal region to provide the feature descriptors. The last step applies a genetic algorithm-based feature selector to detect the most stable patches and curves over different facial expressions. The algorithm provides the highest reported nasal region-based recognition ranks on the FRGC, Bosphorus and BU-3DFE datasets. The results are comparable with, and in many cases better than, many state-of-the-art 3D face recognition algorithms that use the whole facial domain. The proposed method does not rely on sophisticated alignment or denoising steps, is very robust when only one sample per subject is used in the gallery, and does not require a training step for the landmarking algorithm. https://github.com/mehryaragha/NoseBiometrics

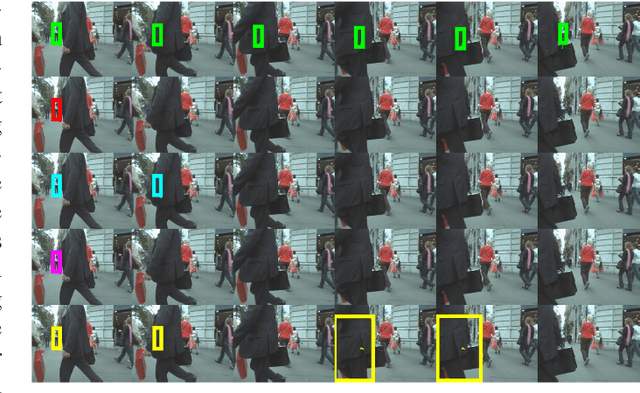

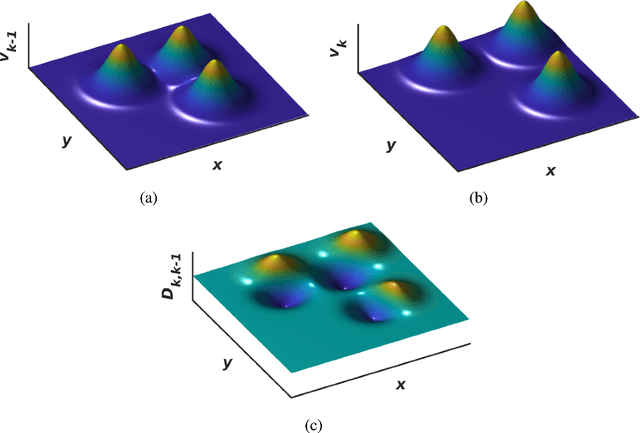

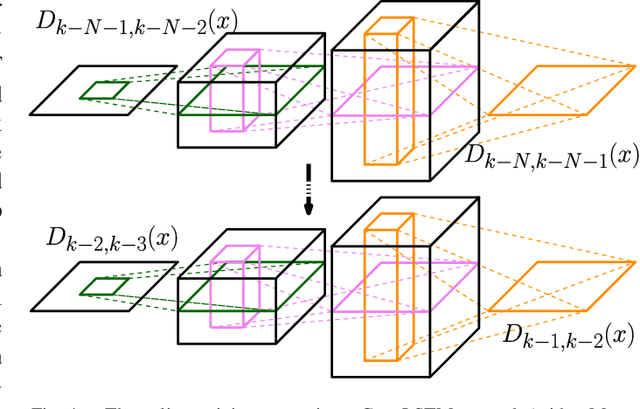

Convolutional Recurrent Predictor: Implicit Representation for Multi-target Filtering and Tracking

Nov 01, 2018

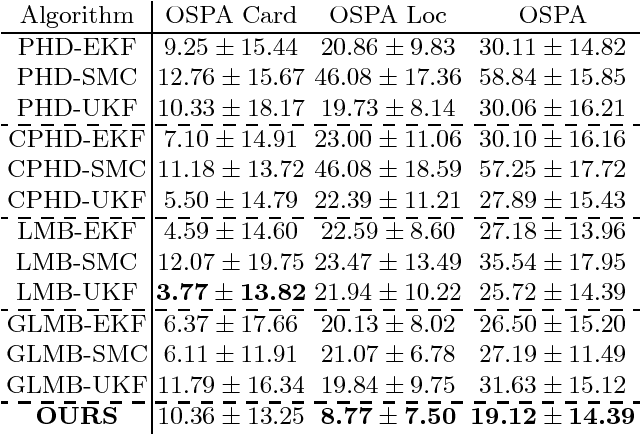

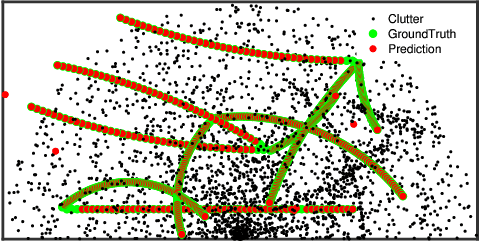

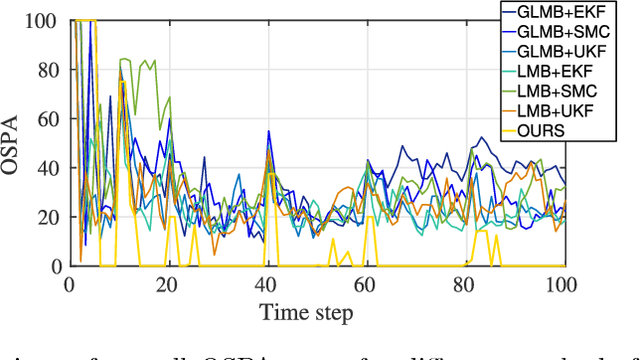

Abstract: Defining a multi-target motion model, which is an important step of tracking algorithms, can be very challenging. Using fixed models (as in several generative Bayesian algorithms, such as Kalman filters) can fail to accurately predict sophisticated target motions. On the other hand, sequential learning of the motion model (for example, using recurrent neural networks) can be computationally complex and difficult due to the variable and unknown number of targets. In this paper, we propose a multi-target filtering and tracking (MTFT) algorithm which learns the motion model, simultaneously for all targets, from an implicitly represented state map and performs spatio-temporal data prediction. To this end, the multi-target state is modelled over a continuous hypothetical target space, using random finite sets and Gaussian mixture probability hypothesis density formulations. The prediction step is recursively performed using a deep convolutional recurrent neural network with a long short-term memory architecture, which is trained as a regression block, on the fly, over "probability density difference" maps. Our approach is evaluated over widely used pedestrian tracking benchmarks, remarkably outperforming state-of-the-art multi-target filtering algorithms, while giving competitive results when compared with other tracking approaches.
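To make the implicit state map more concrete, here is a minimal sketch of how a Gaussian mixture probability hypothesis density can be rasterised onto a grid and how a difference map between two time steps can be formed. It is an illustration under stated assumptions, not the paper's implementation: the isotropic 2D Gaussians, the unit grid spacing, the plain frame-to-frame subtraction and the names `gm_phd_map`, `map_difference` and `expected_target_count` are all hypothetical. The one property taken from standard PHD theory is that the intensity integrates to the expected number of targets.

```python
import math


def gm_phd_map(components, width, height):
    """Rasterise a Gaussian mixture intensity onto a width x height grid.

    components: iterable of (weight, (mean_x, mean_y), sigma), with each
    Gaussian assumed isotropic. Grid cells have unit area.
    """
    grid = [[0.0] * width for _ in range(height)]
    for weight, (mx, my), sigma in components:
        norm = weight / (2.0 * math.pi * sigma * sigma)
        for y in range(height):
            for x in range(width):
                r2 = (x - mx) ** 2 + (y - my) ** 2
                grid[y][x] += norm * math.exp(-r2 / (2.0 * sigma * sigma))
    return grid


def map_difference(current, previous):
    """Cell-wise difference between two maps of the same size."""
    return [[c - p for c, p in zip(row_c, row_p)]
            for row_c, row_p in zip(current, previous)]


def expected_target_count(grid):
    """Approximate the integral of the intensity (unit cell area)."""
    return sum(sum(row) for row in grid)


if __name__ == "__main__":
    # Two targets; the first moves two cells to the right between frames.
    prev = gm_phd_map([(1.0, (10, 10), 1.5), (1.0, (30, 30), 1.5)], 40, 40)
    curr = gm_phd_map([(1.0, (12, 10), 1.5), (1.0, (30, 30), 1.5)], 40, 40)
    diff = map_difference(curr, prev)
    print(round(expected_target_count(curr), 3))
    print(diff[10][12] > 0, diff[10][10] < 0)
```

Because the map has a fixed size regardless of how many targets are present, a convolutional recurrent network can regress over a sequence of such maps without needing to know the target count in advance.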

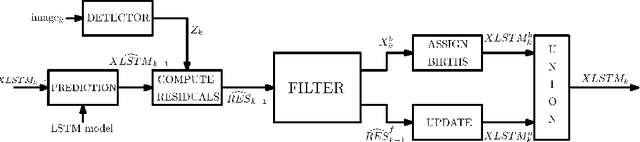

Deep Recurrent Neural Network for Multi-target Filtering

Oct 08, 2018

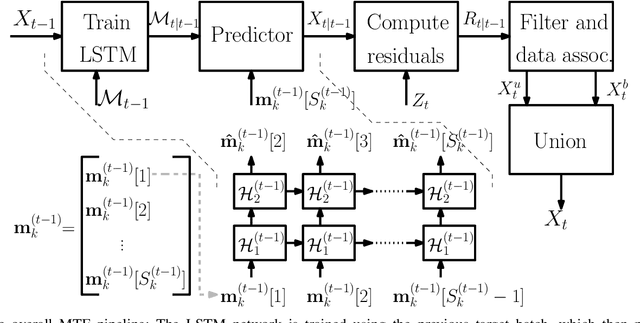

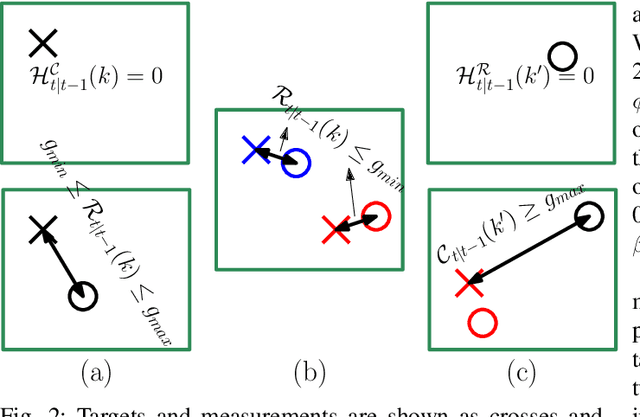

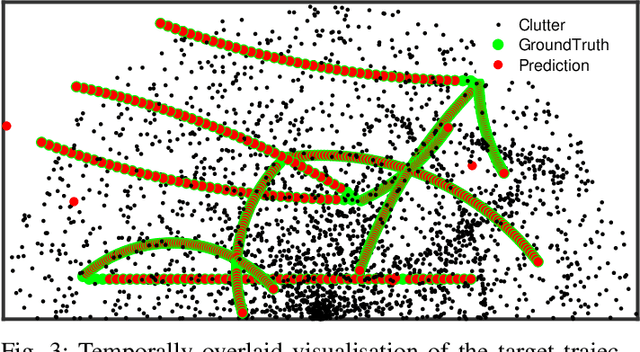

Abstract: This paper addresses the problem of fixed motion and measurement models for multi-target filtering using an adaptive learning framework. This is performed by defining target tuples with random finite set terminology and utilising recurrent neural networks with a long short-term memory architecture. A novel data association algorithm compatible with the predicted tracklet tuples is proposed, enabling the update of occluded targets, in addition to assigning birth, survival and death of targets. The algorithm is evaluated over a commonly used filtering simulation scenario, with highly promising results.

POL-LWIR Vehicle Detection: Convolutional Neural Networks Meet Polarised Infrared Sensors

Apr 07, 2018

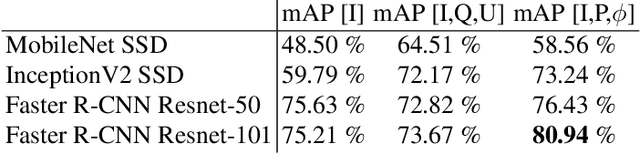

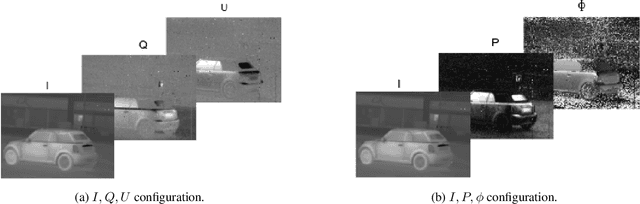

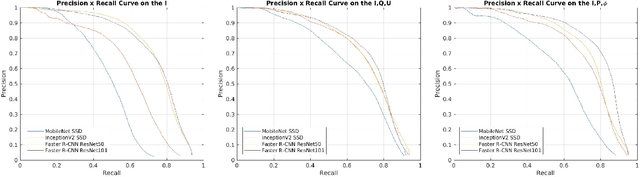

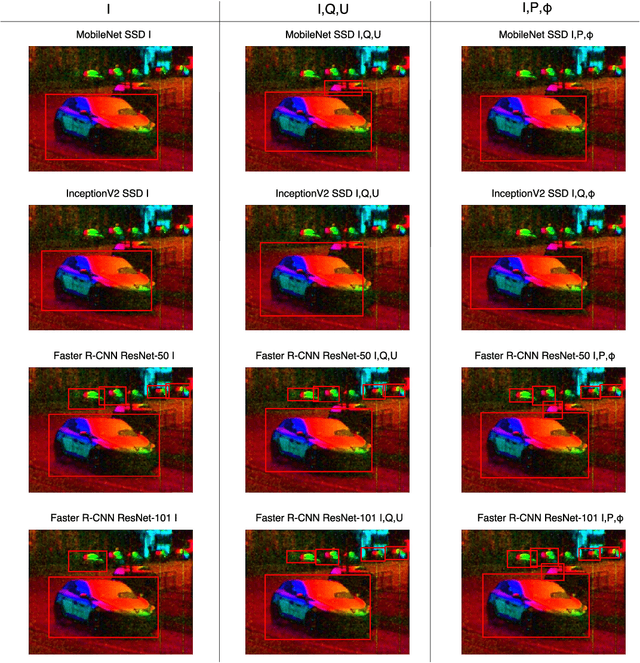

Abstract: For vehicle autonomy, driver assistance and situational awareness, it is necessary to operate by day and by night, and in all weather conditions. In particular, long wave infrared (LWIR) sensors that receive predominantly emitted radiation have the capability to operate at night as well as during the day. In this work, we employ a polarised LWIR (POL-LWIR) camera to acquire data from a mobile vehicle, and compare four different convolutional neural network (CNN) configurations for detecting other vehicles in video sequences. We evaluate two distinct and promising approaches, two-stage detection (Faster-RCNN) and one-stage detection (SSD), in four different configurations. We also employ two different image decompositions: the first based on the polarisation ellipse and the second on the Stokes parameters themselves. To evaluate our approach, the experimental trials were quantified by mean average precision (mAP) and processing time, showing a clear trade-off between the two factors. For example, the best mAP result of 80.94% was achieved using Faster-RCNN, but at a frame rate of 6.4 fps. In contrast, MobileNet SSD achieved only 64.51% mAP, but at 53.4 fps.
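The two image decompositions mentioned above are related by standard polarimetry formulas, which the following sketch illustrates for a single pixel. It assumes intensities measured behind linear polarisers at 0, 45, 90 and 135 degrees, which is a common sensor layout but is not confirmed by the abstract. The function names are hypothetical, and the paper's exact channel encoding for the CNN inputs may differ.

```python
import math


def stokes_from_intensities(i0, i45, i90, i135):
    """Linear Stokes parameters from four polariser orientations.

    Assumes ideal linear polarisers at 0, 45, 90 and 135 degrees.
    """
    s0 = i0 + i90     # total intensity
    s1 = i0 - i90     # horizontal versus vertical component
    s2 = i45 - i135   # +45 versus -45 degree component
    return s0, s1, s2


def polarisation_ellipse(s0, s1, s2):
    """Degree of linear polarisation and angle of polarisation (radians)."""
    if s0 <= 0:
        return 0.0, 0.0  # no signal: define both quantities as zero
    dolp = math.hypot(s1, s2) / s0
    aop = 0.5 * math.atan2(s2, s1)
    return dolp, aop


if __name__ == "__main__":
    # Fully polarised light at 45 degrees with unit intensity.
    s = stokes_from_intensities(0.5, 1.0, 0.5, 0.0)
    dolp, aop = polarisation_ellipse(*s)
    print(s, dolp, math.degrees(aop))
```

Applied per pixel, the first function gives a Stokes-based image and the second gives an ellipse-based image (intensity, degree of polarisation and angle), so either can be stacked into a multi-channel detector input.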
