Andreas Weinmann

Generalization of Self-Supervised Vision Transformers for Protein Localization Across Microscopy Domains

Feb 05, 2026

Abstract:Task-specific microscopy datasets are often too small to train deep learning models that learn robust feature representations. Self-supervised learning (SSL) can mitigate this by pretraining on large unlabeled datasets, but it remains unclear how well such representations transfer across microscopy domains with different staining protocols and channel configurations. We investigate the cross-domain transferability of DINO-pretrained Vision Transformers for protein localization on the OpenCell dataset. We generate image embeddings using three DINO backbones pretrained on ImageNet-1k, the Human Protein Atlas (HPA), and OpenCell, and evaluate them by training a supervised classification head on OpenCell labels. All pretrained models transfer well, with the microscopy-specific HPA-pretrained model achieving the best performance (mean macro $F_1$-score = $0.8221 \pm 0.0062$), slightly outperforming a DINO model trained directly on OpenCell ($0.8057 \pm 0.0090$). These results highlight the value of large-scale pretraining and indicate that domain-relevant SSL representations can generalize effectively to related but distinct microscopy datasets, enabling strong downstream performance even when task-specific labeled data are limited.
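For readers unfamiliar with the reported metric, the following is a minimal sketch of how a macro $F_1$-score can be computed from predicted and true labels. It is not taken from the paper: the function name `macro_f1` and the example class labels are assumptions chosen for illustration, and the paper's evaluation pipeline may differ in detail (for example, in how the mean and standard deviation across runs are obtained).

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (hypothetical helper)."""
    # Consider every class that appears in either the labels or the predictions.
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); define it as 0 when the class never occurs.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Illustrative labels only; these are not OpenCell annotations.
y_true = ["nucleus", "nucleus", "cytoplasm", "membrane"]
y_pred = ["nucleus", "cytoplasm", "cytoplasm", "membrane"]
print(macro_f1(y_true, y_pred))
```

Because every class contributes equally to the average, macro $F_1$ penalizes poor performance on rare localization classes more strongly than plain accuracy does.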

Vision-Language Model for Accurate Crater Detection

Jan 12, 2026

Abstract:The European Space Agency (ESA), driven by its ambitions for planned lunar missions with the Argonaut lander, has a profound interest in reliable crater detection, since craters pose a risk to safe lunar landings. This task is usually addressed with automated crater detection algorithms (CDA) based on deep learning techniques. It is non-trivial due to the vast number of craters of various sizes and shapes, as well as challenging conditions such as varying illumination and rugged terrain. Therefore, we propose a deep-learning CDA based on the OWLv2 model, which is built on a Vision Transformer architecture that has proven highly effective in various computer vision tasks. For fine-tuning, we utilize a manually labeled dataset from the IMPACT project, which provides crater annotations on high-resolution Lunar Reconnaissance Orbiter Camera Calibrated Data Record images. We insert trainable parameters using a parameter-efficient fine-tuning strategy with Low-Rank Adaptation, and optimize a combined loss function consisting of Complete Intersection over Union (CIoU) for localization and a contrastive loss for classification. We achieve satisfactory visual results, along with a maximum recall of 94.0% and a maximum precision of 73.1% on a test dataset from IMPACT. Our method achieves reliable crater detection across challenging lunar imaging conditions, paving the way for robust crater analysis in future lunar exploration.
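The CIoU localization term mentioned above can be illustrated with a short sketch of the standard Complete IoU loss for a single pair of axis-aligned boxes. This is an assumption-laden illustration, not the authors' implementation: the `(x1, y1, x2, y2)` box format, the function name `ciou_loss`, and the `eps` stabilizer are choices made here, and the abstract does not state how the term is weighted against the contrastive loss.

```python
import math


def ciou_loss(box, gt, eps=1e-9):
    """Complete IoU loss for two boxes given as (x1, y1, x2, y2) (assumed format)."""
    x1, y1, x2, y2 = box
    gx1, gy1, gx2, gy2 = gt
    w, h = x2 - x1, y2 - y1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Plain IoU from the intersection and union areas.
    iw = max(0.0, min(x2, gx2) - max(x1, gx1))
    ih = max(0.0, min(y2, gy2) - max(y1, gy1))
    inter = iw * ih
    union = w * h + gw * gh - inter
    iou = inter / (union + eps)

    # Squared distance between box centers.
    rho2 = ((x1 + x2) / 2 - (gx1 + gx2) / 2) ** 2 + ((y1 + y2) / 2 - (gy1 + gy2) / 2) ** 2
    # Squared diagonal of the smallest box enclosing both boxes.
    cw = max(x2, gx2) - min(x1, gx1)
    ch = max(y2, gy2) - min(y1, gy1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency term and its trade-off weight.
    v = (4 / math.pi ** 2) * (math.atan(gw / (gh + eps)) - math.atan(w / (h + eps))) ** 2
    alpha = v / ((1 - iou) + v + eps)

    return 1 - iou + rho2 / c2 + alpha * v


print(ciou_loss((0, 0, 10, 10), (0, 0, 10, 10)))  # close to 0 for identical boxes
print(ciou_loss((0, 0, 1, 1), (2, 2, 3, 3)))      # above 1 for disjoint boxes
```

Unlike plain IoU, the center-distance term still gives a useful signal when the predicted and annotated boxes do not overlap, which matters for small objects.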

Detector-Augmented SAMURAI for Long-Duration Drone Tracking

Jan 08, 2026

Abstract:Robust long-term tracking of drones is a critical requirement for modern surveillance systems, given their increasing threat potential. While detector-based approaches typically achieve strong frame-level accuracy, they often suffer from temporal inconsistencies caused by frequent detection dropouts. Despite its practical relevance, research on RGB-based drone tracking is still limited and largely reliant on conventional motion models. Meanwhile, foundation models like SAMURAI have established their effectiveness across other domains, exhibiting strong category-agnostic tracking performance. However, their applicability in drone-specific scenarios has not been investigated yet. Motivated by this gap, we present the first systematic evaluation of SAMURAI's potential for robust drone tracking in urban surveillance settings. Furthermore, we introduce a detector-augmented extension of SAMURAI to mitigate sensitivity to bounding-box initialization and sequence length. Our findings demonstrate that the proposed extension significantly improves robustness in complex urban environments, with pronounced benefits in long-duration sequences, especially under drone exit-re-entry events. The incorporation of detector cues yields consistent gains over SAMURAI's zero-shot performance across datasets and metrics, with success rate improvements of up to +0.393 and FNR reductions of up to -0.475.

Fast and Exact Least Absolute Deviations Line Fitting via Piecewise Affine Lower-Bounding

Dec 22, 2025

Abstract:Least-absolute-deviations (LAD) line fitting is robust to outliers but computationally more involved than least squares regression. Although the literature includes linear and near-linear time algorithms for the LAD line fitting problem, these methods are difficult to implement and, to our knowledge, lack maintained public implementations. As a result, practitioners often resort to linear programming (LP) based methods, such as the simplex-based Barrodale-Roberts method and interior-point methods, or to iteratively reweighted least squares (IRLS) approximation, which does not guarantee exact solutions. To close this gap, we propose the Piecewise Affine Lower-Bounding (PALB) method, an exact algorithm for LAD line fitting. PALB uses supporting lines derived from subgradients to build piecewise-affine lower bounds, and employs a subdivision scheme involving minima of these lower bounds. We prove correctness and provide bounds on the number of iterations. On synthetic datasets with varied signal types and noise, including heavy-tailed outliers, as well as a real dataset from NOAA's Integrated Surface Database, PALB exhibits empirical log-linear scaling. It is consistently faster than publicly available implementations of LP-based and IRLS-based solvers. We provide a reference implementation written in Rust with a Python API.
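To make the LAD line fitting problem concrete, the sketch below solves it exactly by brute force. It is explicitly not the PALB method and does not use the paper's Rust or Python API: it relies only on the classical fact that, when the data contain at least two distinct x values, some optimal LAD line passes through at least two data points, so trying every such pair is exact but costs O(n^3) time. The function names `lad_cost` and `lad_line_bruteforce` are hypothetical.

```python
from itertools import combinations


def lad_cost(slope, intercept, xs, ys):
    """Sum of absolute residuals of the line y = slope * x + intercept."""
    return sum(abs(y - (slope * x + intercept)) for x, y in zip(xs, ys))


def lad_line_bruteforce(xs, ys):
    """Exact LAD line by enumerating lines through pairs of data points.

    Reference sketch only: O(n^3), suitable for small inputs and for
    checking faster solvers, not a substitute for them.
    """
    best = None
    for (x1, y1), (x2, y2) in combinations(zip(xs, ys), 2):
        if x1 == x2:
            continue  # a vertical line cannot be written as y = a * x + b
        slope = (y2 - y1) / (x2 - x1)
        intercept = y1 - slope * x1
        cost = lad_cost(slope, intercept, xs, ys)
        if best is None or cost < best[0]:
            best = (cost, slope, intercept)
    if best is None:
        raise ValueError("need at least two points with distinct x values")
    return best


# Four points on y = 2x + 1 plus one gross outlier.
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 100]
cost, slope, intercept = lad_line_bruteforce(xs, ys)
print(cost, slope, intercept)
```

In this example the fitted line ignores the outlier and recovers slope 2 and intercept 1, which illustrates the robustness that motivates LAD over least squares.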

Performance Optimization of YOLO-FEDER FusionNet for Robust Drone Detection in Visually Complex Environments

Sep 17, 2025

Abstract:Drone detection in visually complex environments remains challenging due to background clutter, small object scale, and camouflage effects. While generic object detectors like YOLO exhibit strong performance in low-texture scenes, their effectiveness degrades in cluttered environments with low object-background separability. To address these limitations, this work presents an enhanced iteration of YOLO-FEDER FusionNet -- a detection framework that integrates generic object detection with camouflage object detection techniques. Building upon the original architecture, the proposed iteration introduces systematic advancements in training data composition, feature fusion strategies, and backbone design. Specifically, the training process leverages large-scale, photo-realistic synthetic data, complemented by a small set of real-world samples, to enhance robustness under visually complex conditions. The contribution of intermediate multi-scale FEDER features is systematically evaluated, and detection performance is comprehensively benchmarked across multiple YOLO-based backbone configurations. Empirical results indicate that integrating intermediate FEDER features, in combination with backbone upgrades, contributes to notable performance improvements. In the most promising configuration -- YOLO-FEDER FusionNet with a YOLOv8l backbone and FEDER features derived from the DWD module -- these enhancements lead to an FNR reduction of up to 39.1 percentage points and an mAP increase of up to 62.8 percentage points at an IoU threshold of 0.5, compared to the initial baseline.

Fast Trajectory-Independent Model-Based Reconstruction Algorithm for Multi-Dimensional Magnetic Particle Imaging

May 28, 2025

Abstract:Magnetic Particle Imaging (MPI) is a promising tomographic technique for visualizing the spatio-temporal distribution of superparamagnetic nanoparticles, with applications ranging from cancer detection to real-time cardiovascular monitoring. Traditional MPI reconstruction relies on either time-consuming calibration (measured system matrix) or model-based simulation of the forward operator. Recent developments have shown the applicability of Chebyshev polynomials to multi-dimensional Lissajous Field-Free Point (FFP) scans. This method is bound to the particular choice of sinusoidal scanning trajectories. In this paper, we present the first reconstruction on real 2D MPI data with a trajectory-independent model-based MPI reconstruction algorithm. We further develop the zero-shot Plug-and-Play (PnP) algorithm of the authors -- with automatic noise level estimation -- to address the present deconvolution problem, leveraging a state-of-the-art denoiser trained on natural images without retraining on MPI-specific data. We evaluate our method on the publicly available 2D FFP MPI dataset "MPIdata: Equilibrium Model with Anisotropy", featuring scans of six phantoms acquired using a Bruker preclinical scanner. Moreover, we show reconstruction performed on custom data on a 2D scanner with additional high-frequency excitation field and partial data. Our results demonstrate strong reconstruction capabilities across different scanning scenarios -- setting a precedent for general-purpose, flexible model-based MPI reconstruction.

Remote Sensing Imagery for Flood Detection: Exploration of Augmentation Strategies

Apr 28, 2025

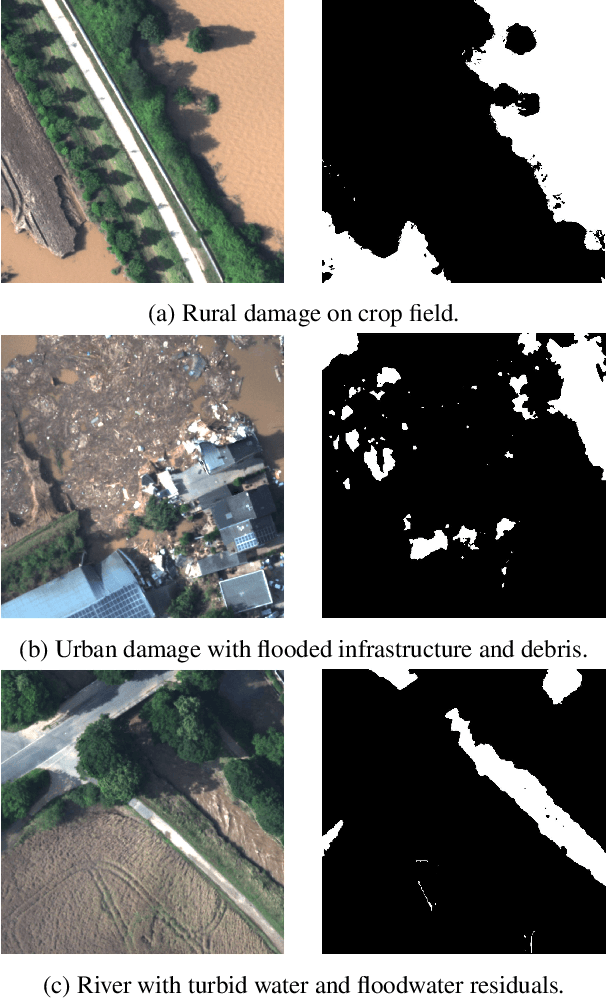

Abstract:Floods cause serious problems around the world. Responding quickly and effectively requires accurate and timely information about the affected areas. The effective use of remote sensing images for accurate flood detection requires specific detection methods. Typically, Deep Neural Networks are employed, which are trained on specific datasets. For the purpose of river flood detection in RGB imagery, we use the BlessemFlood21 dataset. Here, we explore the use of different augmentation strategies, ranging from basic approaches to more complex techniques, including optical distortion. By identifying effective strategies, we aim to refine the training process of state-of-the-art Deep Learning segmentation networks.

SynDroneVision: A Synthetic Dataset for Image-Based Drone Detection

Nov 08, 2024

Abstract:Developing robust drone detection systems is often constrained by the limited availability of large-scale annotated training data and the high costs associated with real-world data collection. However, leveraging synthetic data generated via game engine-based simulations provides a promising and cost-effective solution to overcome this issue. Therefore, we present SynDroneVision, a synthetic dataset specifically designed for RGB-based drone detection in surveillance applications. Featuring diverse backgrounds, lighting conditions, and drone models, SynDroneVision offers a comprehensive training foundation for deep learning algorithms. To evaluate the dataset's effectiveness, we perform a comparative analysis across a selection of recent YOLO detection models. Our findings demonstrate that SynDroneVision is a valuable resource for real-world data enrichment, achieving notable enhancements in model performance and robustness, while significantly reducing the time and costs of real-world data acquisition. SynDroneVision will be publicly released upon paper acceptance.

BlessemFlood21: Advancing Flood Analysis with a High-Resolution Georeferenced Dataset for Humanitarian Aid Support

Jul 06, 2024

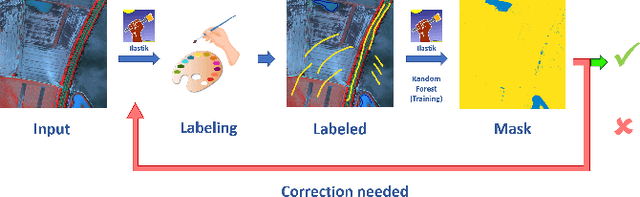

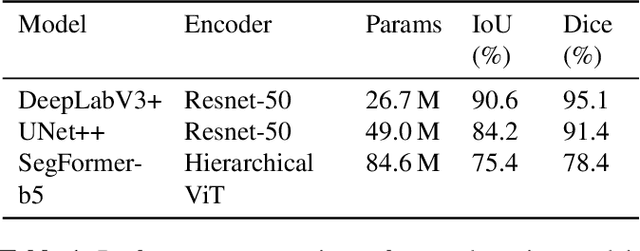

Abstract:Floods are an increasingly common global threat, causing emergencies and severe damage to infrastructure. During crises, organisations such as the World Food Programme use remotely sensed imagery, typically obtained through drones, for rapid situational analysis to plan life-saving actions. Computer Vision tools are needed to support task force experts on-site in the evaluation of the imagery to improve their efficiency and to allocate resources strategically. We introduce the BlessemFlood21 dataset to stimulate research on efficient flood detection tools. The imagery was acquired during the 2021 Erftstadt-Blessem flooding event and consists of high-resolution and georeferenced RGB-NIR images. In the resulting RGB dataset, the images are supplemented with detailed water masks, obtained via a semi-supervised human-in-the-loop technique, where in particular the NIR information is leveraged to classify pixels as either water or non-water. We evaluate our dataset by training and testing established Deep Learning models for semantic segmentation. With BlessemFlood21 we provide labeled high-resolution RGB data and a baseline for further development of algorithmic solutions tailored to flood detection in RGB imagery.

CNN Based Flank Predictor for Quadruped Animal Species

Jun 19, 2024

Abstract:The bilateral asymmetry of the flanks of animals with visual body marks that uniquely identify an individual complicates tasks like population estimation. Automatically generated additional information on the visible side of the animal would improve the accuracy of individual identification. In this study, we used transfer learning on popular CNN image classification architectures to train a flank predictor that predicts the visible flank of quadruped mammalian species in images. We automatically derived the data labels from existing datasets originally labeled for animal pose estimation. We trained the models in two phases with different degrees of retraining. The developed models were evaluated in different scenarios of different unknown quadruped species in known and unknown environments. As a real-world scenario, we used a dataset of manually labeled Eurasian lynx (Lynx lynx) from camera traps in the Bavarian Forest National Park to evaluate the model. The best model, trained on an EfficientNetV2 backbone, achieved an accuracy of 88.70% for the unknown species lynx in a complex habitat.
